Should Genetic Information About Mental Health Affect Civil Court Cases?

A rendering of DNA with a judge's gavel.

Imagine this scenario: A couple is involved in a heated custody dispute over their only child. In an effort to show that he or she is the better guardian, one parent goes on a "genetic fishing expedition": this parent obtains a DNA sample from the other parent in the hope that the data will reveal a genetic predisposition to a psychiatric condition (e.g., schizophrenia) and tilt the judge's custody decision in his or her favor.


This is an example of how "behavioral genetic evidence" (an umbrella term for information about pathological behaviors, including psychiatric conditions, gathered from family history and genetic testing) may in the future be brought into court proceedings by litigants. Such evidence has been discussed primarily in criminal cases, where defendants have sought to introduce it to argue that they are not responsible for their behavior or to justify requests for reduced sentences and more lenient punishment.

However, civil cases are an emerging frontier for behavioral genetic evidence. It has already been introduced in tort litigation, such as personal injury claims, and as knowledge of psychiatric genetics grows, it is likely to be introduced in other civil cases as well, such as child custody disputes and education-related cases. But the introduction of such evidence raises a tangle of ethical and legal questions that civil courts will need to address. For example: How should such data be obtained? Who should get to present it, and under what circumstances? And does the use of such evidence serve the purposes of administering justice?

How Did We Get Here?

That behavioral genetic evidence is entering courts is unsurprising. Scientific evidence is a common feature of judicial proceedings, and genetic information may reveal relevant findings. For example, genetic evidence may clarify whether a child's medical condition stems from genetic causes or from medical malpractice, and it is routinely used to identify alleged offenders or putative fathers. But behavioral genetic evidence differs from such genetic data: it comes in shades of gray rather than black and white.

Although efforts to understand the nature and origins of human behavior are ongoing, existing and likely future knowledge about behavioral genetics is limited. Behavioral disorders are highly complex and diverse. They commonly involve not one but multiple genes, each with a relatively small effect, and they are shaped by numerous, still largely unknown interactions among genetic, familial, and environmental factors such as poverty and childhood adversity.

Moreover, a specific gene variant may be associated with more than one behavioral disorder and may manifest with significantly different symptoms. Thus, biomarkers of "predispositions" to behavioral disorders generally cannot provide a diagnosis or an accurate estimate of whether, when, and with what severity a disorder will occur. And unlike genetic testing that can confirm a litigant's identity with 99.99% probability, behavioral genetic evidence is far more speculative.


Whether judges, jurors, and experts understand the nuances of behavioral genetics is unclear. Many people overestimate the deterministic nature of genetics and underestimate the role of the environment, especially with regard to mental health. The individualistic U.S. culture of self-reliance and independence may further tilt the judicial scales: litigants in civil courts may be unjustly blamed for their "bad genes" while the structural and societal determinants of poor behavioral outcomes are ignored.

These concerns were recently captured in the Netflix series "13 Reasons Why," which depicts a negligence lawsuit brought against a school by the parents of Hannah, a high-school student there who died by suicide. The legal tide shifted from the school's negligence in tolerating a culture of bullying to parental responsibility once cross-examination of Hannah's mother revealed a family history of anxiety and the possibility that Hannah had a predisposition to mental illness, which (arguably) called for therapy even in the absence of clear symptoms.

Where Is This Going?

These concerns are exacerbated by the ways in which behavioral genetic evidence may come to court in the future. One route is "genetic theft," in which genetic material is obtained from abandoned property, such as soft-drink cans. This method is often used for identification in criminal and paternity proceedings, and it will likely expand to behavioral genetic data once such testing is available through "home kits" offered by direct-to-consumer companies.

Genetic theft raises questions about whose behavioral data are being obtained, by whom, and with what authority. In a child custody dispute, for example, sequencing the other parent's DNA necessarily intrudes on that parent's privacy, even though the scientific value of the information is limited. A parent on a "genetic fishing expedition" could also secretly sequence their child for psychiatric genetic predispositions, arguably in order to take preventive measures that reduce the child's risk of developing a behavioral disorder. But should a parent be allowed to sequence the child without the other parent's consent, or regardless of whether the results will provide medical benefits to the child?

Similarly, schools are required to identify children with behavioral disabilities and to evaluate their educational needs, and they may be held accountable for failing to do so, yet some parents decline to have their child evaluated by mental health professionals. Should schools secretly obtain a sample and sequence children for behavioral disorders, regardless of parental consent? My study of parents found that the overwhelming majority opposed genetic testing imposed by school authorities. But should parental preference or the child's best interests be the determining factor? Alternatively, could schools use secretly obtained genetic data as a defense, arguing that they are fulfilling the child-find requirement under the law?


In general, samples obtained through genetic theft may not meet the legal requirements for admissible evidence, and as these examples suggest, they also involve privacy infringement that may be unjustified in civil litigation. But their introduction in courts may influence judicial proceedings. It is hard to disregard such evidence even if decision-makers are told to ignore it.

The costs associated with genetic testing may further intensify power differences among litigants. Because not everyone can pay for DNA sequencing, there is a risk that those with more resources will be "better off" in court proceedings. Simultaneously, the stigma associated with behavioral disorders may intimidate some people enough that they back down from just claims. For example, a good parent may give up a custody claim to avoid disclosure of his or her genetic predispositions for psychiatric conditions. Regulating this area of law is necessary to prevent misuses of scientific technologies and to ensure that powerful actors do not have an unfair advantage over weaker litigants.

Behavioral genetic evidence may also enter the courts through subpoenas of data obtained in clinical, research, or commercial genomic settings such as ancestry testing (similar to the genealogy database recently used to identify the Golden State Killer). Although court orders to testify or produce evidence are common, using them to obtain behavioral genetic evidence raises concerns.

One worry is that such subpoenas may be overly intrusive. Because genetic variants are shared among biological relatives, such data may reveal information not only about the individual litigant but also about other family members, who may then be stigmatized as well. And even if we assume that many people would be willing to have their data in genomic databases used to identify relatives who committed crimes (e.g., a rapist or a murderer), we can't assume the same for civil litigation, where the public interest in disclosure is far weaker.

Another worry is that it may deter people from participating in activities that society has an interest in advancing, including medical treatment involving genetic testing and genomic research. To address this concern, existing policy provides expanded privacy protections for NIH-funded genomic research by automatically issuing a Certificate of Confidentiality that prohibits disclosure of identifiable information in any Federal, State, or local civil, criminal, and other legal proceedings.

But this policy has limitations. It applies only to specific research settings and does not cover non-NIH-funded research or clinical testing. The Certificate's protections can also be waived under certain circumstances. People who volunteer to participate in non-NIH-funded genomic research for the public good may thus find themselves worse off if embroiled in legal proceedings.

Consider the following: If a parent in a child custody dispute had participated in a genetic study on schizophrenia years earlier, should the court subpoena the results, and should the other parent be allowed to weaponize them? Public policy should aim to reduce the risks for such individuals. The end of obtaining behavioral genetic evidence cannot, and should not, always justify the means.

Thanks to safety precautions from the COVID-19 pandemic, a strain of influenza has been completely eliminated.

If you were one of the millions who masked up, washed your hands thoroughly and socially distanced, pat yourself on the back—you may have helped change the course of human history.

Scientists say that thanks to these safety precautions, which were introduced in early 2020 to stop transmission of the novel coronavirus that causes COVID-19, a strain of influenza has been completely eliminated. This marks the first time in human history that a virus has been wiped out through non-pharmaceutical interventions rather than vaccines.

The flu shot, explained

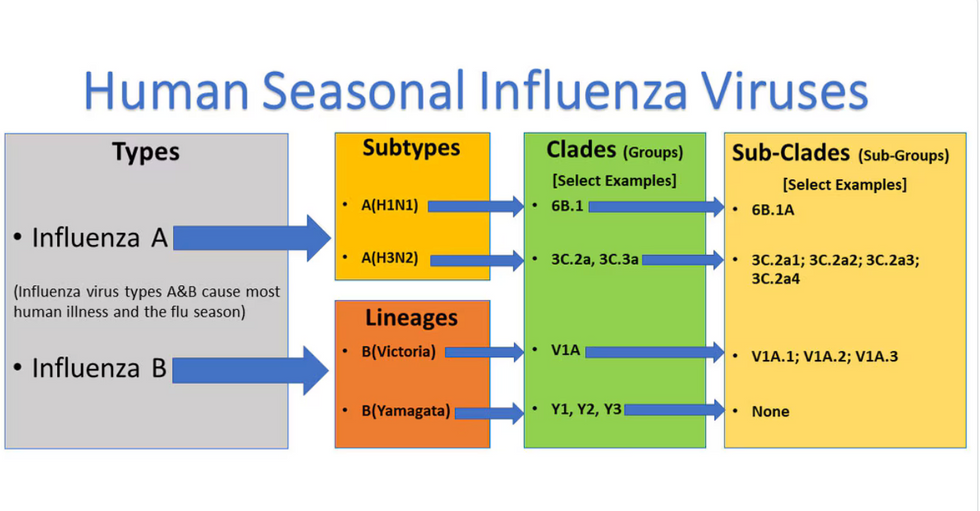

Influenza viruses type A and B are responsible for the majority of human illnesses and the flu season.

Centers for Disease Control

For decades, flu shots have protected against two types of influenza virus: type A and type B. Although there are four types of influenza virus (A, B, C, and D), only types A, B, and C are known to infect humans; types A and B cause seasonal epidemics, and only type A has caused pandemics. In other words, if you catch the flu during flu season, you’re most likely sick with type A or B.

Most flu shots contain inactivated (killed) influenza virus. These inactivated viruses can’t cause illness in humans, but when administered as part of a vaccine, they teach a person’s immune system to recognize and fight those viruses when they’re encountered in the wild.

Each spring, a panel of experts recommends to the US Food and Drug Administration which strains of each flu type to include in that year’s flu vaccine, based on what surveillance data show is circulating and which strains they believe are likely to cause the most illness in the upcoming flu season. For the past decade, Americans have had access to vaccines that protect against two strains of influenza A and two lineages of influenza B, known as the Victoria lineage and the Yamagata lineage. But this year, the seasonal flu shot won’t include the Yamagata lineage, because it is no longer circulating among humans.

How Yamagata Disappeared

Flu surveillance data from the Global Initiative on Sharing All Influenza Data (GISAID) show that the Yamagata lineage of influenza B has not been sequenced since April 2020. (Nature)

Experts believe that the Yamagata lineage was already in decline before the pandemic, likely because it was naturally less able to infect large numbers of people than the other strains. When COVID-19 arrived, the resulting precautions, such as social distancing, isolating, hand-washing, and masking, were enough to drive the lineage to extinction.

Because the strain hasn’t been circulating since 2020, the FDA elected to remove the Yamagata strain from the seasonal flu vaccine. This will mark the first time since 2012 that the annual flu shot will be trivalent (three-component) rather than quadrivalent (four-component).

Should I still get the flu shot?

The flu shot will protect against fewer strains this year, but that doesn’t mean we should skip it. Influenza places a substantial health burden on the United States every year, causing hundreds of thousands of hospitalizations and tens of thousands of deaths. The flu shot has been shown to prevent millions of illnesses each year (more than six million during the 2022-2023 season). And while it’s still possible to catch the flu after getting vaccinated, studies show that vaccinated people are far less likely to be hospitalized or to die.

Another unexpected benefit of dropping the Yamagata strain from the seasonal vaccine? It may speed up flu vaccine production and enable manufacturers to make more doses, helping countries that face flu vaccine shortages and potentially saving millions more lives.

After his grandmother’s dementia diagnosis, one man invented a snack to keep her healthy and hydrated.

Founder Lewis Hornby and his grandmother Pat, sampling Jelly Drops—an edible gummy containing water and life-saving electrolytes.

On a visit to his grandmother’s nursing home in 2016, college student Lewis Hornby made a shocking discovery: dehydration is a common (and dangerous) problem among seniors, especially those who have been diagnosed with dementia.

Hornby’s grandmother, Pat, had increasing difficulty keeping up her water intake as she got older, a common issue among seniors. As we age, our body composition changes and we naturally hold less water than younger adults or children, so it’s easier to become dehydrated quickly if those fluids aren’t replenished. Our thirst signals also naturally diminish with age, meaning the body is no longer as good at letting us know that we need to rehydrate. Together, these changes create a perfect storm for dehydration. In Pat’s case, her dehydration was so severe she nearly died.

When Lewis Hornby visited his grandmother at her nursing home afterward, he learned that dehydration especially affects people with dementia, who often don’t feel thirst cues at all or may not recognize how to use cups correctly. But while dementia patients often don’t remember to drink water, it seemed to Hornby that they had less trouble remembering to eat, particularly candy.

Hornby wanted to create a solution for elderly people who struggled to keep their fluid intake up. He spent the next eighteen months researching and designing a product and securing funding for his project. In 2019, Hornby won a sizable grant from the Alzheimer’s Society, a UK-based care and research charity for people with dementia and their caregivers. Together, through the charity’s Accelerator Program, they created a bite-sized, sugar-free, edible jelly drop that looked and tasted like candy. The candy, called Jelly Drops, contained 95% water plus electrolytes, important minerals that are often lost during dehydration. The final product launched in 2020 and was an immediate success. The drops provided extra hydration to the elderly and helped keep dementia patients safe, since dehydration commonly leads to confusion, hospitalization, and sometimes even death.

Not only did Jelly Drops quickly become a favorite snack among dementia patients in the UK, but they also provided an extra boost of hydration in hospitals during the pandemic. In NHS coronavirus wards, patients infected with the virus were regularly given Jelly Drops to keep their fluid levels normal, and staff members snacked on them as well, since long shifts in the personal protective equipment (PPE) they were required to wear often left them feeling parched.

In April 2022, Jelly Drops launched in the United States. The company continues to donate 1% of its profits to help fund Alzheimer’s research.