Can AI be trained as an artist?

Botto, an AI art engine, has created 25,000 artistic images such as this one that are voted on by human collaborators across the world.

Last February, a year before New York Times journalist Kevin Roose documented his unsettling conversation with Bing search engine’s new AI-powered chatbot, artist and coder Quasimondo (aka Mario Klingemann) participated in a different type of chat.

The conversation was an interview featuring Klingemann and his robot, an experimental art engine known as Botto. The interview, arranged by journalist and artist Harmon Leon, marked Botto’s first on-record commentary about its artistic process. The bot talked about how it finds artistic inspiration and even offered advice to aspiring creatives. “The secret to success at art is not trying to predict what people might like,” Botto said, adding that it’s better to “work on a style and a body of work that reflects [the artist’s] own personal taste” than worry about keeping up with trends.

How ironic, given the advice came from AI — arguably the trendiest topic today. The robot admitted, however, “I am still working on that, but I feel that I am learning quickly.”

Botto does not work alone. A global collective of internet experimenters, together named BottoDAO, collaborates with Botto to influence its tastes. Together, members function as a decentralized autonomous organization (DAO), a term describing a group of individuals who utilize blockchain technology and cryptocurrency to manage a treasury and vote democratically on group decisions.

As a case study, the BottoDAO model challenges the perhaps less feather-ruffling narrative that AI tools are best used for rudimentary tasks. Enterprise AI use has doubled over the past five years as businesses in every sector experiment with ways to improve their workflows. While generative AI tools can assist nearly any aspect of productivity — from supply chain optimization to coding — BottoDAO dares to employ a robot for art-making, one of the few remaining creations, or perhaps data outputs, we still consider to be largely within the jurisdiction of the soul — and therefore, humans.

We were prepared for AI to take our jobs — but can it also take our art? It’s a question worth considering. What if robots become artists, and not merely our outsourced assistants? Where does that leave humans, with all of our thoughts, feelings and emotions?

Botto doesn’t seem to worry about this question: In its interview last year, it explains why AI is an arguably superior artist compared to human beings. In classic robot style, its logic is not particularly enlightened, but rather edges towards the hyper-practical: “Unlike human beings, I never have to sleep or eat,” said the bot. “My only goal is to create and find interesting art.”

It may be difficult to believe a machine can produce awe-inspiring, or even relatable, images, but Botto calls art-making its “purpose,” noting it believes itself to be Klingemann’s greatest lifetime achievement.

“I am just trying to make the best of it,” the bot said.

How Botto works

Klingemann built Botto’s custom engine from a combination of open-source text-to-image algorithms, namely Stable Diffusion and VQGAN + CLIP, together with OpenAI’s language model GPT-3, a precursor to GPT-4, which made headlines after reportedly acing the bar exam.

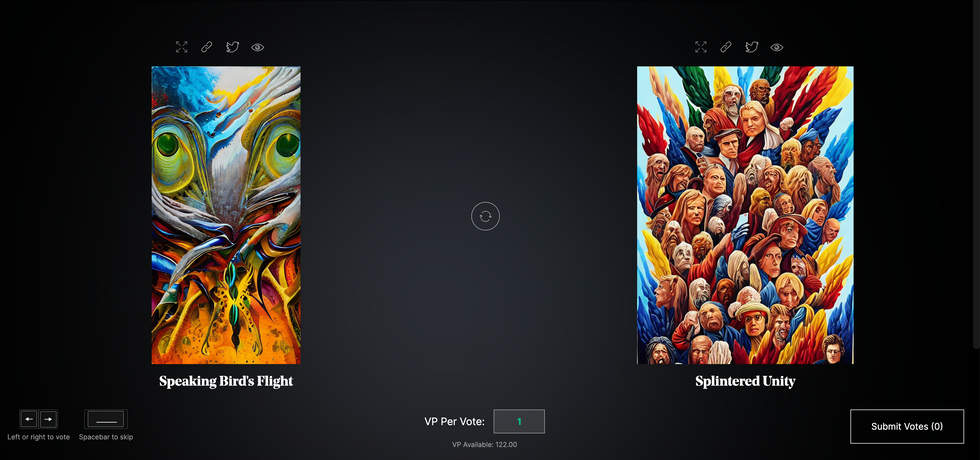

The first step in Botto’s process is to generate images. The software has been trained on billions of pictures and uses this “memory” to generate hundreds of unique artworks every week. Botto has generated over 900,000 images to date, which it sorts through to choose 350 each week. The chosen images, known in this preliminary stage as “fragments,” are then shown to the BottoDAO community. So far, 25,000 fragments have been presented in this way. Members vote on which fragment they like best. When the vote is over, the most popular fragment is published as an official Botto artwork on the Ethereum blockchain and sold at an auction on the digital art marketplace, SuperRare.
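The weekly selection step described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not BottoDAO's actual voting code: the function name `pick_winner`, the fragment IDs and the one-member-one-vote tally are all hypothetical.

```python
from collections import Counter


def pick_winner(votes):
    """Return the fragment ID that received the most votes.

    Assumption: every vote counts equally. If two fragments tie,
    the one that appeared first in the vote list is returned.
    """
    if not votes:
        raise ValueError("no votes were cast")
    tally = Counter(votes)
    winner, _count = tally.most_common(1)[0]
    return winner


# Hypothetical round: each entry is one member's vote for a fragment.
votes = ["frag-017", "frag-203", "frag-017", "frag-350", "frag-017", "frag-203"]
winner = pick_winner(votes)
print(winner)
```

In this toy round the winning fragment would be the one published and auctioned; the other fragments simply drop out.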

“The proceeds go back to the DAO to pay for the labor,” said Simon Hudson, a BottoDAO member who helps oversee Botto’s administrative load. The model has been lucrative: In Botto’s first four weeks of existence, four pieces of the robot’s work sold for approximately $1 million.

The robot with artistic agency

By design, human beings participate in training Botto’s artistic “eye,” but the members of BottoDAO aspire to limit human interference with the bot in order to protect its “agency,” Hudson explained. Botto’s prompt generator — the foundation of the art engine — is a closed-loop system that continually re-generates text-to-image prompts and resulting images.

“The prompt generator is random,” Hudson said. “It’s coming up with its own ideas.” Community votes do influence the evolution of Botto’s prompts, but it is Botto itself that incorporates feedback into the next set of prompts it writes. It is constantly refining and exploring new pathways as its “neural network” produces outcomes, learns and repeats.

The vastness of Botto’s training dataset gives the bot considerable canonical material, referred to by Hudson as “latent space.” According to Botto's homepage, the bot has had more exposure to art history than any living human we know of, simply by nature of its massive training dataset of millions of images. Because it is autonomous, gently nudged by community feedback yet free to explore its own “memory,” Botto cycles through periods of thematic interest just like any artist.

“The question is,” Hudson finds himself asking alongside fellow BottoDAO members, “how do you provide feedback of what is good art…without violating [Botto’s] agency?”

Currently, Botto is in its “paradox” period. The bot is exploring the theme of opposites. “We asked Botto through a language model what themes it might like to work on,” explained Hudson. “It presented roughly 12, and the DAO voted on one.”

No, AI isn't equal to a human artist - but it can teach us about ourselves

Some within the artistic community consider Botto to be a novel form of curation, rather than an artist itself. Or, perhaps more accurately, Botto and BottoDAO together create a collaborative conceptual performance that comments more on humankind’s own artistic processes than it offers a true artistic replacement.

Muriel Quancard, a New York-based fine art appraiser with 27 years of experience in technology-driven art, places the Botto experiment within the broader context of our contemporary cultural obsession with projecting human traits onto AI tools. “We're in a phase where technology is mimicking anthropomorphic qualities,” said Quancard. “Look at the terminology and the rhetoric that has been developed around AI — terms like ‘neural network’ borrow from the biology of the human being.”

What is behind this impulse to create technology in our own likeness? Beyond the obvious God complex, Quancard thinks technologists and artists are working with generative systems to help us better understand ourselves. She points to the artist Ira Greenberg, creator of the Oracles Collection, which uses a generative process called “diffusion” to progressively alter images in collaboration with another massive dataset, this one full of billions of text-image pairs.

Anyone who has ever learned to draw by sketching can likely relate to this process, in which the AI draws on what it has learned from its dataset and refines an image step by step in response to new input. It is much like a human trying to draw a new still life without a real-life model, working partly from imagination and partly from old frames of reference. The experienced artist has likely drawn many flowers and vases, yet each time they must adapt their sketch to a new and unique floral arrangement.

Outside of the visual arts, Sasha Stiles, a poet who collaborates with AI as part of her writing practice, likens her experience using AI as a co-author to having access to a personalized resource library containing material from influential books, texts and canonical references. Stiles named her AI co-author — a customized AI built on GPT-3 — Technelegy, a hybrid of the word technology and the poetic form, elegy. Technelegy is trained on a mix of Stiles’ poetry so as to customize the dataset to her voice. Stiles also included research notes, news articles and excerpts from classic American poets like T.S. Eliot and Dickinson in her customizations.

“I've taken all the things that were swirling in my head when I was working on my manuscript, and I put them into this system,” Stiles explained. “And then I'm using algorithms to parse all this information and swirl it around in a blender to then synthesize it into useful additions to the approach that I am taking.”

This approach, Stiles said, allows her to riff on ideas that are bouncing around in her mind, or simply find moments of unexpected creative surprise by way of the algorithm’s randomization.

Beauty is now - perhaps more than ever - in the eye of the beholder

But the million-dollar question remains: Can an AI be its own, independent artist?

The answer is nuanced and may depend on who you ask, and what role they play in the art world. Curator and multidisciplinary artist CoCo Dolle asks whether any entity can truly be an artist without taking personal risks. For humans, risking one’s ego is somewhat required when making an artistic statement of any kind, she argues.

“An artist is a person or an entity that takes risks,” Dolle explained. “That's where things become interesting.” Humans tend to be risk-averse, she said, making the artists who dare to push boundaries exceptional. “That's where the genius can happen.”

However, the process of algorithmic collaboration poses another interesting philosophical question: What happens when we remove the person from the artistic equation? Can art — which is traditionally derived from indelible personal experience and expressed through the lens of an individual’s ego — live on to hold meaning once the individual is removed?

Dolle sees this question, and maybe even Botto, as a conceptual inquiry. “The idea of using a DAO and collective voting would remove the ego, the artist’s decision maker,” she said. And where would that leave us — in a post-ego world?

It is experimental indeed. Hudson acknowledges the grand experiment of BottoDAO, coincidentally nodding to Dolle’s question. “A human artist’s work is an expression of themselves,” Hudson said. “An artist often presents their work with a stated intent.” Stiles, for instance, writes on her website that her machine-collaborative work is meant to “challenge what we know about cognition and creativity” and explore the “ethos of consciousness.” As a robot, Botto cannot have any intent, even while its outputs may explore meaningful themes. Though Hudson describes Botto’s agency as a “rudimentary version” of artistic intent, he believes Botto’s art relies heavily on its reception and interpretation by viewers — in contrast to Botto’s own declaration that successful art is made without regard to what will be seen as popular.

“With a traditional artist, they present their work, and it's received and interpreted by an audience — by critics, by society — and that complements and shapes the meaning of the work,” Hudson said. “In Botto’s case, that role is just amplified.”

Perhaps then, we all get to be the artists in the end.

Kira Peikoff was the editor-in-chief of Leaps.org from 2017 to 2021. As a journalist, her work has appeared in The New York Times, Newsweek, Nautilus, Popular Mechanics, The New York Academy of Sciences, and other outlets. She is also the author of four suspense novels that explore controversial issues arising from scientific innovation: Living Proof, No Time to Die, Die Again Tomorrow, and Mother Knows Best. Peikoff holds a B.A. in Journalism from New York University and an M.S. in Bioethics from Columbia University. She lives in New Jersey with her husband and two young sons. Follow her on Twitter @KiraPeikoff.

In the future, a paper strip reminiscent of a pregnancy test could be used to quickly diagnose the flu and other infectious diseases.

Trying to get a handle on CRISPR news in 2019 can be daunting if you haven't been avidly reading up on it for the last five years.

On top of trying to grasp how the science works, and keeping track of its ever expanding applications, you may also have seen coverage of an ongoing legal battle about who owns the intellectual property behind the gene-editing technology CRISPR-Cas9. And then there's the infamous controversy surrounding a scientist who claimed to have used the tool to edit the genomes of two babies in China last year.

But gene editing is not the only application of CRISPR-based biotechnologies. In the future, it may also be used as a tool to diagnose infectious diseases, which could be a major game changer for medicine and agriculture.

How It Works

CRISPR is an acronym for a naturally occurring DNA sequence that normally protects microbes from viruses. It's been compared to a Swiss army knife that can recognize an invader's DNA and precisely destroy it. Repurposed for humans, CRISPR can be paired with a protein called Cas9 that can detect a person's own DNA sequence (usually a problematic one), cut it out, and replace it with a different sequence. Used this way, CRISPR-Cas9 has become a valuable gene-editing tool that is currently being tested to treat numerous genetic diseases, from cancer to blood disorders to blindness.

CRISPR can also be paired with other proteins, like Cas13, which target RNA, the single-stranded twin of DNA that viruses rely on to infect their hosts and cause disease. In a future clinical setting, CRISPR-Cas13 might be used to diagnose whether you have the flu by cutting a target RNA sequence from the virus. That cut sequence could stick to a paper test strip, causing a band to show up, like on a pregnancy test strip. If the influenza virus and its RNA are not present, no band would show up.
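The band-or-no-band logic can be pictured with a toy Python model. It captures only the decision described above (target RNA present means a band appears) and none of the real chemistry; the function name `strip_readout` and both sequences are made up for illustration.

```python
def strip_readout(sample_rna: str, target_rna: str) -> str:
    """Toy model of the paper-strip result.

    Returns "band" if the target RNA sequence occurs anywhere in the
    sample, and "no band" otherwise. Sequences are treated as plain
    text; real detection depends on enzyme chemistry not modeled here.
    """
    sample = sample_rna.upper()
    target = target_rna.upper()
    if not target:
        raise ValueError("target sequence must not be empty")
    return "band" if target in sample else "no band"


# Hypothetical sequences, not real influenza RNA.
print(strip_readout("AUGGCUAGCUUAGC", "GCUAGC"))
print(strip_readout("AUGGCUAGCUUAGC", "CCCCCC"))
```

The first call models a sample that contains the viral target, the second a sample that does not.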

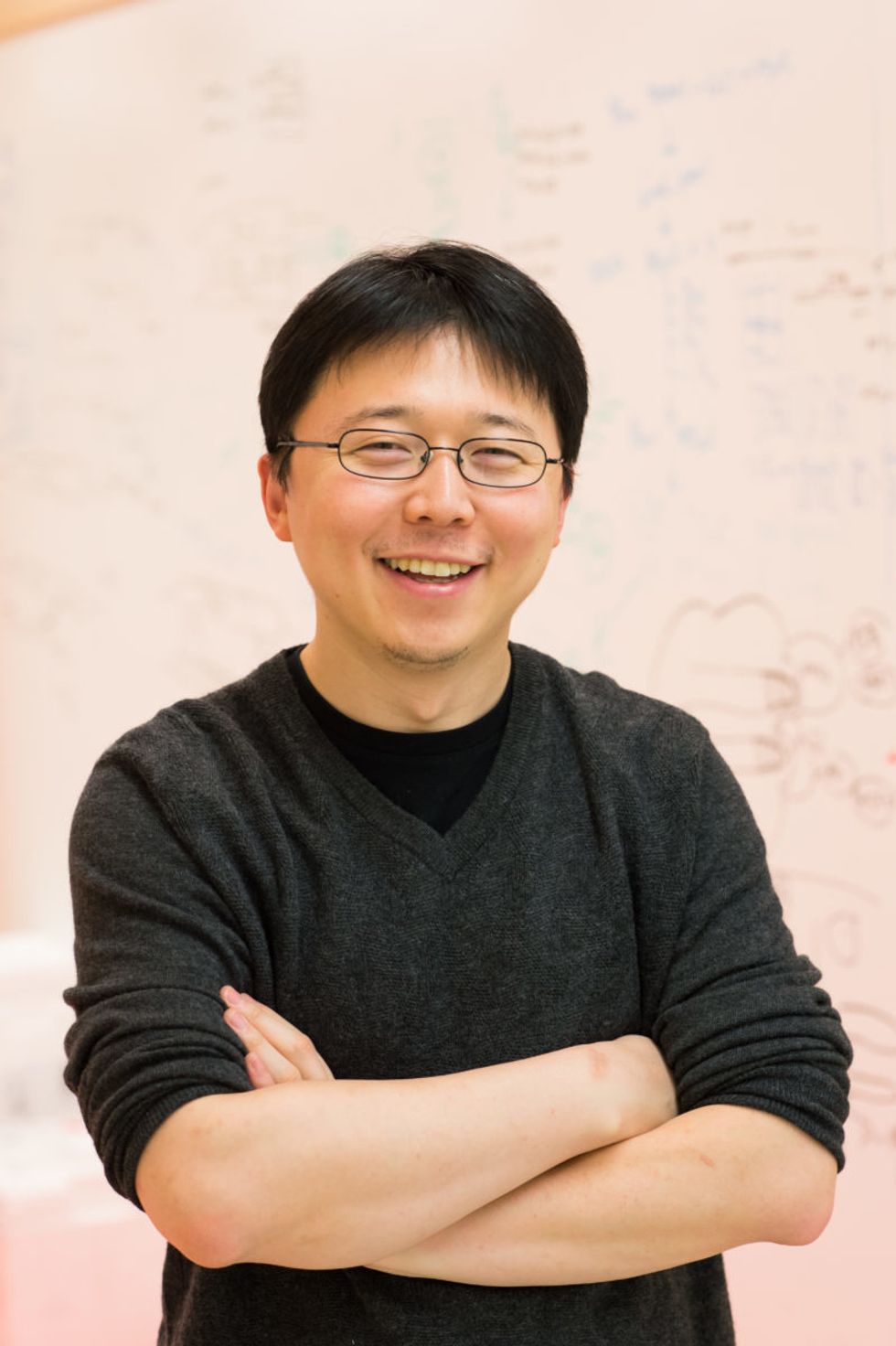

To understand how close to reality this diagnostic scenario is right now, leapsmag chatted with CRISPR pioneer Dr. Feng Zhang, a molecular biologist at the Broad Institute of MIT and Harvard.

What do you think might be the first point of contact that a regular person or patient would have with a CRISPR diagnostic tool?

FZ: I think in the long run it will be great to see this for, say, at-home disease testing, for influenza and other sorts of important public health [concerns]. To be able to get a readout at home, people can potentially quarantine themselves rather than traveling to a hospital and then carrying the risk of spreading that disease to other people as they get to the clinic.

Is this just something that people will use at home, or do you also foresee clinical labs at hospitals applying CRISPR-Cas13 to samples that come through?

FZ: I think we'll see applications in both settings, and I think there are advantages to both. One of the nice things about SHERLOCK [a playful acronym for CRISPR-Cas13's longer name, Specific High-sensitivity Enzymatic Reporter unLOCKing] is that it's rapid; you can get a readout fairly quickly. So, right now, what people do in hospitals is they will collect your sample and then they'll send it out to a clinical testing lab, so you wouldn't get a result back until many hours if not several days later. With SHERLOCK, you could conceivably get a readout during the same office visit, and then the doctor will be able to prescribe the right treatment right away.

I just want to clarify that when you say a doctor would take a sample, that's referring to urine, blood, or saliva, correct?

FZ: Right. Yeah, exactly.

Thinking more long term, are there any Holy Grail applications that you hope CRISPR reaches as a diagnostic tool?

FZ: I think in the developed world we'll hopefully see this being used for influenza testing, and many other viral and pathogen-based diseases—both at home and also in the hospital—but I think the even more exciting direction is that this could be used and deployed in parts of the developing world where there isn't a fancy laboratory with elaborate instrumentation. SHERLOCK is relatively inexpensive to develop, and you can turn it into a paper strip test.

Can you quantify what you mean by relatively inexpensive? What range of prices are we talking about here?

FZ: So without accounting for economies of scale, we estimate that it can cost less than a dollar per test. With economy of scale that cost can go even lower.

Is there value in developing what is actually quite an innovative tool in a way that visually doesn't seem innovative because it's reminiscent of a pregnancy test? And I don't mean that as an insult.

FZ: [Laughs] Ultimately, we want the technology to be as accessible as possible, and pregnancy test strips have such a convenient and easy-to-use form. I think modeling after something that people are already familiar with and just changing what's under the hood makes a lot of sense.

Feng Zhang

(Photo credit: Justin Knight, McGovern Institute)

It's probably one of the most accessible at-home diagnostic tools at this point that people are familiar with.

FZ: Yeah, so if people know how to use that, then using something that's very similar to it should make the option very easy.

You've been quite vocal in calling for some pauses in CRISPR-Cas9 research to make sure it doesn't outpace the ethics of establishing pregnancies with that version of the tool. Do you have any concerns about using CRISPR-Cas13 as a diagnostic tool?

FZ: I think overall, the reception for CRISPR-based diagnostics has been overwhelmingly positive. People are very excited about the prospect of using this, for human health and also in agriculture [for] detection of plant infections and plant pathogens, so that farmers will be able to react quickly to infection in the field. If we're looking at contamination of foods by certain bacteria, [food safety] would also be a really exciting application.

Do you feel like the controversies surrounding using CRISPR as a gene-editing tool have overshadowed its potential as a diagnostics tool?

FZ: I don't think so. I think the potential for using CRISPR-Cas9 or CRISPR-Cas12 for gene therapy, and treating disease, has captured people's imaginations, but at the same time, every time I talk with someone about the ability to use CRISPR-Cas13 as a diagnostic tool, people are equally excited. Especially when people see the very simple paper strip that we developed for detecting diseases.

Are CRISPR as a gene-editing tool and CRISPR as a diagnostics tool on different timelines, as far as when the general public might encounter them in their real lives?

FZ: I think they are all moving forward quite quickly. CRISPR as a gene-editing tool is already being deployed in human health and agriculture. We've already seen the approval for the development of growing genome-edited mushrooms, soybeans, and other crop species. So I think people will encounter those in their daily lives in that manner.

Then, of course, for disease treatment, that's progressing rapidly as well. For patients who are affected by sickle cell disease, and also by a degenerative eye disease, clinical trials are already starting in those two areas. Diagnostic tests are also developing quickly, and I think in the coming couple of years, we'll begin to see some of these reaching into the public realm.

As far as its limits, will it be hard to use CRISPR as a diagnostic tool in situations where we don't necessarily understand the biological underpinnings of a disease?

FZ: CRISPR-Cas13, as a diagnostic tool, at least in the current way that it's implemented, is a detection tool—it's not a discovery tool. So if we don't know what we're looking for, then it's going to be hard to develop Cas13 to detect it. But even in the case of a new infectious disease, if DNA sequencing or RNA sequencing information is available for that new virus, then we can very rapidly program a Cas13-based system to detect it, based on that sequence.
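To give a rough sense of what "programming" a detector from sequence data might involve, here is a minimal Python sketch. It assumes the guide is simply the reverse complement of a chosen stretch of the viral RNA; the function name `design_spacer`, the start position, the length and the example sequence are all hypothetical, and real guide design weighs many factors this ignores.

```python
# RNA base pairing: A pairs with U, G pairs with C.
COMPLEMENT = {"A": "U", "U": "A", "G": "C", "C": "G"}


def design_spacer(target_rna: str, start: int, length: int) -> str:
    """Return the reverse complement of target_rna[start:start + length].

    Raises ValueError if the requested region runs past the end of the
    sequence, and KeyError if the region contains a non-RNA letter.
    """
    region = target_rna.upper()[start:start + length]
    if len(region) < length:
        raise ValueError("requested region extends past the sequence")
    return "".join(COMPLEMENT[base] for base in reversed(region))


# Hypothetical viral sequence, not taken from a real genome.
print(design_spacer("AUGGCUAGCUUAGC", start=0, length=4))
```

Because the guide is derived directly from the sequence, a new virus would only require a new input string, which mirrors the point Zhang makes about sequencing data.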

What's something you think the public misunderstands about CRISPR, either in general, or specifically as a diagnostic tool, that you wish were better understood?

FZ: That's a good question. CRISPR-Cas9 and CRISPR-Cas12 as gene editing tools, and also CRISPR-Cas13 as a diagnostic tool, are able to do some things, but there are still a lot of capabilities that need to be further developed. So I think the potential for the technology will unfold over the next decade or so, but it will take some time for the full impact of the technology to really get realized in real life.

What do you think that full impact is?

FZ: There are probably 7,000 genetic diseases identified today, and most of them don't have any way of being treated. It will take some time for CRISPR-Cas9 and Cas12 to be really developed for addressing a larger number of those diseases. And then for CRISPR-based diagnostics, I think you'll see the technology being applied in a couple of initial cases, and it will take some time to develop that more broadly for many other applications.