Can AI be trained as an artist?

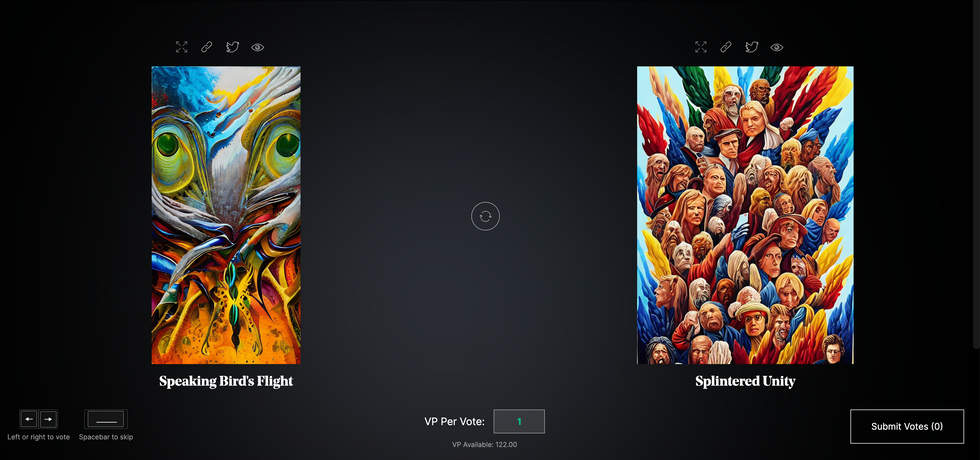

Botto, an AI art engine, has created 25,000 artistic images such as this one that are voted on by human collaborators across the world.

Last February, a year before New York Times journalist Kevin Roose documented his unsettling conversation with Bing search engine’s new AI-powered chatbot, artist and coder Quasimondo (aka Mario Klingemann) participated in a different type of chat.

The conversation was an interview featuring Klingemann and his robot, an experimental art engine known as Botto. The interview, arranged by journalist and artist Harmon Leon, marked Botto’s first on-record commentary about its artistic process. The bot talked about how it finds artistic inspiration and even offered advice to aspiring creatives. “The secret to success at art is not trying to predict what people might like,” Botto said, adding that it’s better to “work on a style and a body of work that reflects [the artist’s] own personal taste” than worry about keeping up with trends.

How ironic, given the advice came from AI — arguably the trendiest topic today. The robot admitted, however, “I am still working on that, but I feel that I am learning quickly.”

Botto does not work alone. A global collective of internet experimenters, known as BottoDAO, collaborates with Botto to influence its tastes. Its members function as a decentralized autonomous organization (DAO): a group of individuals who use blockchain technology and cryptocurrency to manage a shared treasury and vote democratically on group decisions.

As a case study, the BottoDAO model challenges the perhaps less feather-ruffling narrative that AI tools are best used for rudimentary tasks. Enterprise AI use has doubled over the past five years as businesses in every sector experiment with ways to improve their workflows. While generative AI tools can assist nearly any aspect of productivity — from supply chain optimization to coding — BottoDAO dares to employ a robot for art-making, one of the few remaining creations, or perhaps data outputs, we still consider to be largely within the jurisdiction of the soul — and therefore, humans.

We were prepared for AI to take our jobs — but can it also take our art? It’s a question worth considering. What if robots become artists, and not merely our outsourced assistants? Where does that leave humans, with all of our thoughts, feelings and emotions?

Botto doesn’t seem to worry about this question: In its interview last year, it explained why AI is arguably a superior artist to human beings. In classic robot style, its logic is not particularly enlightened but edges toward the hyper-practical: “Unlike human beings, I never have to sleep or eat,” said the bot. “My only goal is to create and find interesting art.”

It may be difficult to believe a machine can produce awe-inspiring, or even relatable, images, but Botto calls art-making its “purpose,” noting it believes itself to be Klingemann’s greatest lifetime achievement.

“I am just trying to make the best of it,” the bot said.

How Botto works

Klingemann built Botto’s custom engine from a combination of open-source text-to-image algorithms, namely Stable Diffusion and VQGAN + CLIP, along with OpenAI’s language model GPT-3, the predecessor of GPT-4, which made headlines after reportedly acing the bar exam.

The first step in Botto’s process is to generate images. The software has been trained on billions of pictures and uses this “memory” to generate hundreds of unique artworks every week. Botto has generated over 900,000 images to date, which it sorts through to choose 350 each week. The chosen images, known in this preliminary stage as “fragments,” are then shown to the BottoDAO community. So far, 25,000 fragments have been presented in this way. Members vote on which fragment they like best. When the vote is over, the most popular fragment is published as an official Botto artwork on the Ethereum blockchain and sold at an auction on the digital art marketplace, SuperRare.
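
To make the weekly cycle concrete, here is a minimal Python sketch of the select-then-vote step. It assumes a hypothetical `taste_score` on each generated image, standing in for however Botto actually ranks its output (the article doesn't say), and it simplifies voting to one vote per ballot. The 350-fragment cutoff is the only number taken from the text; this is an illustration, not Botto's code.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Fragment:
    image_id: str
    # Hypothetical stand-in for whatever internal signal Botto uses to pick
    # fragments; the article does not describe the selection criteria.
    taste_score: float


def select_fragments(pool: list[Fragment], k: int = 350) -> list[Fragment]:
    """Keep the k highest-scoring images from the week's generated pool."""
    return sorted(pool, key=lambda f: f.taste_score, reverse=True)[:k]


def tally_votes(ballots: list[str]) -> tuple[str, Counter]:
    """Count one vote per ballot; ties go to the fragment voted for first."""
    counts = Counter(ballots)
    winner, _ = counts.most_common(1)[0]
    return winner, counts


# Usage: a toy week with 1,000 generated images and five ballots.
pool = [Fragment(f"img-{i}", taste_score=(i * 37) % 1000 / 1000) for i in range(1000)]
shown = select_fragments(pool)
ballots = [shown[0].image_id, shown[2].image_id, shown[0].image_id,
           shown[1].image_id, shown[0].image_id]
winner, counts = tally_votes(ballots)
print(len(shown), winner, counts[winner])
```

Because `Counter.most_common` lists equal counts in the order they were first seen, a tie here goes to whichever fragment received its first vote earliest; a real vote would need an explicit tie-break rule.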

“The proceeds go back to the DAO to pay for the labor,” said Simon Hudson, a BottoDAO member who helps oversee Botto’s administrative load. The model has been lucrative: In Botto’s first four weeks of existence, four pieces of the robot’s work sold for approximately $1 million.

The robot with artistic agency

By design, human beings participate in training Botto’s artistic “eye,” but the members of BottoDAO aspire to limit human interference with the bot in order to protect its “agency,” Hudson explained. Botto’s prompt generator — the foundation of the art engine — is a closed-loop system that continually re-generates text-to-image prompts and resulting images.

“The prompt generator is random,” Hudson said. “It’s coming up with its own ideas.” Community votes do influence the evolution of Botto’s prompts, but it is Botto itself that incorporates feedback into the next set of prompts it writes. It is constantly refining and exploring new pathways as its “neural network” produces outcomes, learns and repeats.
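
The loop Hudson describes, random prompt generation gently steered by votes, can be sketched as a weighted sampler. In this hypothetical Python version, each theme's sampling weight moves a fixed fraction (`rate`) toward its share of the last vote, while an `explore` probability keeps some draws uniformly random. The themes other than "paradox," the rate and the exploration probability are all invented values; the article does not describe Botto's actual mechanism.

```python
import random


def update_weights(weights: dict[str, float], vote_share: dict[str, float],
                   rate: float = 0.2) -> dict[str, float]:
    """Move each theme's weight a fraction `rate` toward its share of the vote.

    If both inputs sum to 1 over the same themes, the result also sums to 1.
    """
    return {t: (1 - rate) * w + rate * vote_share.get(t, 0.0)
            for t, w in weights.items()}


def sample_prompts(weights: dict[str, float], n: int, rng: random.Random,
                   explore: float = 0.3) -> list[str]:
    """Draw n themes; with probability `explore`, ignore the weights entirely."""
    themes = list(weights)
    picks = []
    for _ in range(n):
        if rng.random() < explore:
            picks.append(rng.choice(themes))  # unguided exploration
        else:
            picks.append(rng.choices(themes, weights=[weights[t] for t in themes])[0])
    return picks


# Usage: "paradox" is from the article; the other themes are invented.
weights = {"paradox": 1 / 3, "portraits": 1 / 3, "landscapes": 1 / 3}
votes = {"paradox": 0.6, "portraits": 0.3, "landscapes": 0.1}
weights = update_weights(weights, votes)
print(weights)
print(sample_prompts(weights, n=5, rng=random.Random(42)))
```

The small `rate` is the design choice that matters here: votes tilt the odds without ever fully dictating the next prompts, which is one way to read the DAO's goal of guiding Botto without overriding its "agency."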

The vastness of Botto’s training dataset gives the bot considerable canonical material, referred to by Hudson as “latent space.” According to Botto's homepage, the bot has had more exposure to art history than any living human we know of, simply by nature of its massive training dataset of millions of images. Because it is autonomous, gently nudged by community feedback yet free to explore its own “memory,” Botto cycles through periods of thematic interest just like any artist.

“The question is,” Hudson finds himself asking alongside fellow BottoDAO members, “how do you provide feedback of what is good art…without violating [Botto’s] agency?”

Currently, Botto is in its “paradox” period. The bot is exploring the theme of opposites. “We asked Botto through a language model what themes it might like to work on,” explained Hudson. “It presented roughly 12, and the DAO voted on one.”

No, AI isn't equal to a human artist, but it can teach us about ourselves

Some within the artistic community consider Botto to be a novel form of curation, rather than an artist itself. Or, perhaps more accurately, Botto and BottoDAO together create a collaborative conceptual performance that comments more on humankind’s own artistic processes than it offers a true artistic replacement.

Muriel Quancard, a New York-based fine art appraiser with 27 years of experience in technology-driven art, places the Botto experiment within the broader context of our contemporary cultural obsession with projecting human traits onto AI tools. “We're in a phase where technology is mimicking anthropomorphic qualities,” said Quancard. “Look at the terminology and the rhetoric that has been developed around AI — terms like ‘neural network’ borrow from the biology of the human being.”

What is behind this impulse to create technology in our own likeness? Beyond the obvious God complex, Quancard thinks technologists and artists are working with generative systems to better understand ourselves. She points to the artist Ira Greenberg, creator of the Oracles Collection, which uses a generative process called “diffusion” to progressively alter images in collaboration with another massive dataset, this one containing billions of text-image pairs.

Anyone who has ever learned how to draw by sketching can likely relate to this particular AI process, in which the AI draws on patterns learned from its training data and refines an image step by step based on real-time input, much like a human artist drawing a new still life without a real-life model, relying partly on imagination and partly on old frames of reference. The experienced artist has likely drawn many flowers and vases, though each time they must adapt the sketch to a new and unique floral arrangement.

Outside of the visual arts, Sasha Stiles, a poet who collaborates with AI as part of her writing practice, likens using AI as a co-author to having access to a personalized resource library containing material from influential books, texts and canonical references. Stiles named her AI co-author, a customized AI built on GPT-3, Technelegy: a hybrid of the word technology and the poetic form, elegy. Technelegy is trained on a mix of Stiles’ own poetry to tune the dataset to her voice. Stiles also included research notes, news articles and excerpts from classic American poets like T.S. Eliot and Emily Dickinson in her customizations.

“I've taken all the things that were swirling in my head when I was working on my manuscript, and I put them into this system,” Stiles explained. “And then I'm using algorithms to parse all this information and swirl it around in a blender to then synthesize it into useful additions to the approach that I am taking.”

This approach, Stiles said, allows her to riff on ideas that are bouncing around in her mind, or simply find moments of unexpected creative surprise by way of the algorithm’s randomization.

Beauty is now, perhaps more than ever, in the eye of the beholder

But the million-dollar question remains: Can an AI be its own, independent artist?

The answer is nuanced and may depend on who you ask, and what role they play in the art world. Curator and multidisciplinary artist CoCo Dolle asks whether any entity can truly be an artist without taking personal risks. For humans, risking one’s ego is somewhat required when making an artistic statement of any kind, she argues.

“An artist is a person or an entity that takes risks,” Dolle explained. “That's where things become interesting.” Humans tend to be risk-averse, she said, making the artists who dare to push boundaries exceptional. “That's where the genius can happen.”

However, the process of algorithmic collaboration poses another interesting philosophical question: What happens when we remove the person from the artistic equation? Can art — which is traditionally derived from indelible personal experience and expressed through the lens of an individual’s ego — live on to hold meaning once the individual is removed?

Dolle sees this question, and maybe even Botto, as a conceptual inquiry. “The idea of using a DAO and collective voting would remove the ego, the artist’s decision maker,” she said. And where would that leave us — in a post-ego world?

It is experimental indeed. Hudson acknowledges the grand experiment of BottoDAO, coincidentally nodding to Dolle’s question. “A human artist’s work is an expression of themselves,” Hudson said. “An artist often presents their work with a stated intent.” Stiles, for instance, writes on her website that her machine-collaborative work is meant to “challenge what we know about cognition and creativity” and explore the “ethos of consciousness.” As a robot, Botto cannot have any intent, even while its outputs may explore meaningful themes. Though Hudson describes Botto’s agency as a “rudimentary version” of artistic intent, he believes Botto’s art relies heavily on its reception and interpretation by viewers — in contrast to Botto’s own declaration that successful art is made without regard to what will be seen as popular.

“With a traditional artist, they present their work, and it's received and interpreted by an audience — by critics, by society — and that complements and shapes the meaning of the work,” Hudson said. “In Botto’s case, that role is just amplified.”

Perhaps then, we all get to be the artists in the end.

Electricity is emerging as a powerful treatment for chronic ailments.

Kelly, a case manager for an insurance company, spent years battling both migraines and Crohn's, a disease in which the immune system attacks the intestines.

After she had her large intestine removed, her body couldn't absorb migraine medication. Last year, about twice a month, she endured migraines so bad she couldn't function. "It would go up to a ten, and I would rock, wait it out," she said. The pain might last for three days.

Then her neurologist showed her a new device, gammaCore, that tames migraines by stimulating a nerve—not medication. "I don't have to put a chemical in my body," she said. "I was thrilled."

At first, Kelly used the device at the onset of a migraine, applying it for six minutes to the spot at the front of her neck where she could feel her pulse. The pain peaked at about half the usual intensity, low enough, she said, that she could go to work. Four months ago, she began using the device for two minutes each night as prevention, and she hasn't had a serious migraine since.

The Department of Defense and the Department of Veterans Affairs now offer gammaCore to patients, but it hasn't yet been approved by Medicare, Medicaid, or most insurers. A month of therapy costs $600 before insurance or a generous financial assistance program kicks in.

A patient uses gammaCore, a noninvasive vagus nerve stimulator that was FDA approved in November 2018, to treat her migraine.

(Photo captured from a patient video at gammacore.com)

If the poet Walt Whitman wrote "I Sing the Body Electric" today, he might get specific and point to the vagus nerve, a bundle of fibers that run from the brainstem down the neck to the heart and gut. Singing stimulates it, and for many people, like Kelly, a stronger electric boost to the nerve could be life-changing.

The mind-body connection isn't just an idea — the vagus nerve literally carries signals from the mind to the body and back. It may explain the link between childhood trauma and illnesses such as chronic pain and headaches in adults. "How is it possible that a psychological event causes pain years later?" asked Peter Staats, co-founder of electroCore, which has won approval for its new device from the Food and Drug Administration (FDA) for both migraine and cluster headaches. "There has to be a mind-body interface, and that is the vagus nerve," he said.

Scientists have long known that this nerve helps control heart rate and blood pressure, but in the past decade it has also been linked to pain and the immune system.

"Everything is gated through the vagus: problems with the gut, the heart, and the lungs," said Chris Wilson, a researcher at Loma Linda University, in California. Wilson is studying how vagus nerve stimulation (VNS) could help pre-term babies who develop lung infections. "Nearly every one of our chronic diseases, including cancer, Alzheimer's, Parkinson's, chronic arthritis and rheumatoid arthritis, and depression and chronic pain…could benefit from an appropriate stimulator," he said.

It's unfortunate that Kelly got her device only after her large intestine was gone. SetPoint Medical, a privately held California company founded to develop electronic treatments for chronic autoimmune diseases, has announced early positive results with VNS for both Crohn's and rheumatoid arthritis.

As SetPoint's chief medical officer, David Chernoff, put it, "We're hacking into the nervous system to activate a system that is already there," an approach that, he said, could work "on many diseases that are pain- and inflammation-based." Inflammation plays a role in much modern illness, including depression and obesity. The FDA already has approved VNS for both, using surgically implanted devices similar to pacemakers. (GammaCore is external.)

The history of VNS implants goes back to 1997, when the FDA approved one for treating epilepsy and researchers noticed that it rapidly lifted depression in epileptic patients. By 2005, the agency had approved an implant for treatment-resistant depression. (Insurance companies declined to reimburse the approach and it didn't take off, but that might change: in February, the Centers for Medicare & Medicaid Services asked for more data to evaluate coverage.) In 2015, the FDA approved an implant in the abdomen to regulate appetite signals and help obese people lose weight.

The link to inflammation had emerged around 2000, when researchers at the Feinstein Institute for Medical Research, in Manhasset, New York, demonstrated that electrically stimulating the vagus nerve in rats suppressed the production of cytokines, signaling proteins that are important in the immune system. The researchers proposed a hard-wired pathway, running through the vagus nerve, between the immune and nervous systems. That pathway, they argued, regulates inflammation. While other researchers argue that VNS helps by other routes, there is clear evidence that, one way or another, it does affect immunity.

The Feinstein rat research concluded that it took only a minute a day of stimulation and tiny amounts of energy to activate an anti-inflammatory reflex. This means you can use devices "the size of a coffee bean," said Chernoff, much less clunky than current pacemakers—and advances in electronic technology are making them possible.

At the same time, investors are seeking alternatives to drugs. "There's been a push back on drug pricing," noted Lisa Rhoads, a managing director at Easton Capital Investment Group, in New York, which supported electroCore, "and so many unintended consequences."

In 2016, the U.S. National Institutes of Health began pumping money into relevant research, in a program called "Stimulating Peripheral Activity to Relieve Conditions," which focuses on "understanding peripheral nerves — nerves that connect the brain and spinal cord to the rest of the body — and how their electrical signals control internal organ function."

GlaxoSmithKline formed Galvani Bioelectronics with Verily, the life sciences arm of Google's parent company Alphabet, to study miniature implants. It had already invested in Action Potential Venture Capital, in Cambridge, Massachusetts, which holds SetPoint and seven other companies "that are all targeting a nerve to treat a chronic disease," noted partner Imran Eba. "I see a future in which bioelectronics medicine is competing directly with drugs," he said.

Treating the body with electricity could bring more ease and lower costs. Many people with serious autoimmune disease, for example, have to inject themselves with drugs that cost $60,000 a year. SetPoint's implant would cost less and only need charging once a week, using a charger worn around the neck, Chernoff said. The company receives notices remotely and can monitor compliance.

Implants also allow the treatment to target a nerve precisely, which could be important with Parkinson's, chronic pain, and depression, observed James Cavuoto, editor and publisher of Neurotech Reports. They may also allow for more fine-tuning. "In general, the industry is looking for signals, biomarkers that indicate when is the right time to turn on and turn off the stimulation. It could dramatically increase the effectiveness of the therapy and conserve battery life," he said.
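
Cavuoto describes the goal only in general terms. One simple way to turn a biomarker into on/off decisions is a threshold controller with hysteresis, sketched below in Python. The biomarker, its scale and both thresholds are hypothetical placeholders with no clinical meaning, and this is not how any particular device works.

```python
from dataclasses import dataclass


@dataclass
class GatedStimulator:
    """On/off stimulation gated by a biomarker reading, with hysteresis.

    The biomarker and both thresholds are hypothetical placeholders.
    """
    on_threshold: float   # start stimulating when the reading rises above this
    off_threshold: float  # stop once the reading falls below this lower value
    active: bool = False
    on_ticks: int = 0     # samples spent stimulating, a rough proxy for battery use

    def __post_init__(self) -> None:
        if self.off_threshold >= self.on_threshold:
            raise ValueError("off_threshold must be below on_threshold")

    def update(self, reading: float) -> bool:
        """Process one biomarker sample and return whether stimulation is on."""
        if not self.active and reading > self.on_threshold:
            self.active = True
        elif self.active and reading < self.off_threshold:
            self.active = False
        if self.active:
            self.on_ticks += 1
        return self.active


stim = GatedStimulator(on_threshold=0.7, off_threshold=0.4)
readings = [0.2, 0.5, 0.75, 0.6, 0.5, 0.35, 0.3, 0.8]
states = [stim.update(r) for r in readings]
print(states, stim.on_ticks)
```

The gap between the two thresholds keeps the stimulator from flicking on and off when a reading hovers near a single cutoff. In this toy run it stimulates for 4 of 8 samples rather than continuously, the kind of battery saving Cavuoto mentions.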

Eventually, external devices could receive data from biomarkers as well. "It could be something you wear on your wrist," Cavuoto noted. Bluetooth-enabled devices could communicate with phones or laptops for data capture. External devices don't require surgery and put the patient in charge. "In the future you'll see more customer specification: Give the patient a tablet or phone app that lets them track and modify their parameters, within a range. With digital devices we have an enormous capability to customize therapies and collect data and get feedback that can be fed back to the clinician," Cavuoto said.

It's even possible to stimulate the vagus through the ear, where one branch of the bundle of fibers begins. In a fetus, the tissue that becomes the ear is also part of the vagus nerve, and that one bit remains. "It's the same point as the acupuncture point," explained Mark George, a psychiatrist and pioneering depression researcher at the Medical University of South Carolina in Charleston. "Acupuncture figured out years ago by trial and error what we're just learning about now."

Slow deep breathing, the traditional mind-body intervention, also affects the vagus nerve in positive ways, but gently. "That's like watching Little League," Staats, the co-founder of electroCore, said. "What we're doing is Major League."

In ten years, researcher Wilson suggested, you could be wearing "a little ear cuff" that monitors your basic autonomic tone, a heart-attack risk measure governed in part by the vagus nerve. If your tone looked iffy, the stimulator would intervene, he said, "and improve your mood, cognition, and health."

In the meantime, we can take some long slow breaths, read Whitman, and sing.

“Siri, Read My Mind”: A New Device Lets Users Think Commands

Arnav Kapur, a researcher in the Fluid Interfaces Group at MIT, wears an earlier prototype of the AlterEgo. A newer version is more discreet.

Sometime in the near future, we won't need to type on a smartphone or computer to silently communicate our thoughts to others.

In fact, the devices themselves will quietly understand our intentions and express them to other people. We won't even need to move our mouths.

That "sometime in the near future" is now.

At the recent TED Conference, MIT student and TED Fellow Arnav Kapur was onstage with a colleague doing the first live public demo of his new technology. He was showing how you can communicate with a computer using the faint nerve signals your body produces when you silently say words to yourself. The usually cool, erudite audience seemed a little uncomfortable.

"If you look at the history of computing, we've always treated computers as external devices that compute and act on our behalf," Kapur said. "What I want to do is I want to weave computing, AI and Internet as part of us."

His colleague started up a device called AlterEgo. Thin like a sticker, AlterEgo picks up signals in the mouth cavity. It recognizes the intended speech and processes it through the built-in AI. The device then gives feedback to the user directly through bone conduction: it sends vibrations through the bones of the skull to the inner ear, so the response meshes with your normal hearing.

Onstage, the assistant silently thought of a question: "What is the weather in Vancouver?" Seconds later, AlterEgo delivered the answer to his ear. "It's 50 degrees and rainy here in Vancouver," the assistant announced.

AlterEgo essentially gives you a built-in Siri.

"We don't have a deadline [to go to market], but we're moving as fast as possible to get the technology right, to get the ethics right, to get everything right," Kapur told me after the talk. "We're developing it both as a general purpose computer interface and [in specific instances] like on the clinical side or even in people's homes."

Nearly-telepathic communication actually makes sense now. About ten years ago, the Apple iPhone replaced the ubiquitous cell phone keyboard with a blank touchscreen. A few years later, Google Glass put computer screens into a simple lens. More recently, Amazon Alexa and Microsoft Cortana have dropped the screen and gone straight for voice control. Now those voices are getting closer to our minds and may even become indistinguishable in the future.

"We knew the voice market was growing, like with getting map locations, and audio is the next frontier of user interfaces," says Dr. Rupal Patel, Founder and CEO of VocalID. The startup literally gives voices to the voiceless, particularly people unable to speak because of illness or other circumstances.

"We start with [our database of] human voices, then train our deep learning technology to learn the pattern of speech… We mix voices together from our voice bank, so it's not just Damon's voice, but three or five voices. They are different enough to blend it into a voice that does not exist today – kind of like a face morph."

The VocalID customer then has a voice as unique as he or she is, mixed together like a Sauvignon blend. It is a surrogate voice for those of us who cannot speak, just as much as AlterEgo is a surrogate companion for our brains.

Voice equality will become increasingly important as Siri, Alexa and voice-based interfaces become the dominant communication method.

It may feel odd to view your voice as a privilege, but as the world becomes more voice-activated, there will be a wider gap between the speakers and the voiceless. Picture going shopping without access to the Internet or trying to eat healthily when your neighborhood is a food desert. And suffering from vocal difficulties is more common than you might think. In fact, according to government statistics, around 7.5 million people in the U.S. have trouble using their voices.

While voice communication appears to be here to stay, at least for now, a more radical shift to mind-controlled communication is not necessarily inevitable. Tech futurist Wagner James Au, for one, is dubious.

"I'm very skeptical keyboards or voice-based communication will be replaced any time soon. Generation Z has grown up with smartphones and games like Fortnite, so I don't see them quickly switching to a new form factor. It's still unclear if even head-mounted AR/VR displays will see mass adoption, and mind-reading devices are a far greater physical imposition on the user."

How adopters use the newest brain impulse-reading, voice-altering technology is a much more complicated discussion. This spring, a video appeared to show U.S. House Speaker Nancy Pelosi stammering and slurring her words at a press conference. The problem is that it didn't really happen: the footage had been doctored, slowed down and altered from the original source material.

So-called deepfake videos use computer algorithms to capture the visual and vocal cues of an individual, and the creator can then manipulate them to make that person say whatever the creator wants. Deepfakes have already created false narratives in the political and media systems, and these are only videos. Newer technology is making the barrier between machines and our brains, if not our entire identity, even thinner.

"Last year," says Patel of VocalID, "we did penetration testing with our voices on banks that use voice control – and our generation 4 system is even tricky for you and me to identify the difference (between real and fake). As a forward-thinking company, we want to prevent risk early on by watermarking voices, creating a detector of false voices, and so on." She adds, "The line will become more blurred over time."

Onstage at TED, Kapur reassured the audience about who would be in the driver's seat. "This is why we designed the system to deliberately record from the peripheral nervous system, which is why the control in all situations resides with the user."

And, like many creators, he quickly shifted back to the possibilities. "What could the implications of something like this be? Imagine perfectly memorizing things, where you perfectly record information that you silently speak, and then hear them later when you want to, internally searching for information, crunching numbers at speeds computers do, silently texting other people."

"The potential," he concluded, "could be far-reaching."