Beyond Henrietta Lacks: How the Law Has Denied Every American Ownership Rights to Their Own Cells

A 2017 portrait of Henrietta Lacks.

The common perception is that Henrietta Lacks was a victim of poverty and racism when in 1951 doctors took samples of her cervical cancer without her knowledge or permission and turned them into the world's first immortalized cell line, which they called HeLa. The cell line became a workhorse of biomedical research and facilitated the creation of medical treatments and cures worth untold billions of dollars. Neither Lacks nor her family ever received a penny of those riches.

But racism and poverty are not to blame for Lacks' exploitation; the reality is even worse. In fact all patients, then and now, regardless of social or economic status, have absolutely no right to cells that are taken from their bodies. Some have called this biological slavery.

How We Got Here

The case that established this legal precedent is Moore v. Regents of the University of California.

John Moore was diagnosed with hairy-cell leukemia in 1976 and his spleen was removed as part of standard treatment at the UCLA Medical Center. On initial examination his physician, David W. Golde, had discovered some unusual qualities to Moore's cells and made plans prior to the surgery to have the tissue saved for research rather than discarded as waste. That research began almost immediately.

Even after Moore moved to Seattle, Golde kept bringing him back to Los Angeles to collect additional samples of blood and tissue, saying it was part of his treatment. When Moore asked if the work could be done in Seattle, he was told no. Golde's charade even went so far as claiming to have found a low-income subsidy that covered Moore's flights and a ritzy hotel to get him to return to Los Angeles, while actually paying for both out of his own pocket.

Moore became suspicious when he was asked to sign new consent forms giving up all rights to his biological samples, and he hired an attorney to look into the matter. It turned out that Golde had been lying to his patient all along; he had been collecting samples unnecessary to Moore's treatment and had turned them into a cell line that he and UCLA had patented and from which they had already collected millions of dollars in compensation. The market for the cell lines was estimated at $3 billion by 1990.

Moore felt he had been taken advantage of and filed suit to claim a share of the money that had been made off of his body. "On both sides of the case, legal experts and cultural observers cautioned that ownership of a human body was the first step on the slippery slope to 'bioslavery,'" wrote Priscilla Wald, a professor at Duke University whose career has focused on issues of medicine and culture. "Moore could be viewed as asking to commodify his own body part or be seen as the victim of the theft of his most private and inalienable information."

The case bounced around different levels of the court system with conflicting verdicts for nearly six years until the California Supreme Court ruled on July 9, 1990 that Moore had no legal rights to cells and tissue once they were removed from his body.

The court made a utilitarian argument that the cells had no value until scientists manipulated them in the lab. And it would be too burdensome for researchers to track individual donations and subsequent cell lines to assure that they had been ethically gathered and used. It would impinge on the free sharing of materials between scientists, slow research, and harm the public good that arose from such research.

"In effect, what Moore is asking us to do is impose a tort duty on scientists to investigate the consensual pedigree of each human cell sample used in research," the majority wrote. In other words, researchers don't need to ask any questions about the materials they are using.

One member of the court did not see it that way. In his dissent, Stanley Mosk raised the specter of slavery that "arises wherever scientists or industrialists claim, as defendants have here, the right to appropriate and exploit a patient's tissue for their sole economic benefit—the right, in other words, to freely mine or harvest valuable physical properties of the patient's body. … This is particularly true when, as here, the parties are not in equal bargaining positions."

Mosk also cited the appeals court decision that the majority overturned: "If this science has become for profit, then we fail to see any justification for excluding the patient from participation in those profits."

But the majority bought the arguments that Golde, UCLA, and the nascent biotechnology industry in California had made in amici briefs filed throughout the legal proceedings. The road was now cleared for them to develop products worth billions without having to worry about or share with the persons who provided the raw materials upon which their research was based.

Critical Views

Biomedical research requires a continuous and ever-growing supply of human materials for the foundation of its ongoing work. If an increasing number of patients come to feel as John Moore did, that the system is ripping them off, then they become much less likely to consent to use of their materials in future research.

Some legal and ethical scholars say that donors should be able to limit the types of research allowed for their tissues and researchers should be monitored to assure compliance with those agreements. For example, today it is commonplace for companies to certify that their clothing is not made by child labor, that their coffee is grown under fair trade conditions, and that food labeled kosher is properly handled. Should we ask any less of our pharmaceuticals than that the donors whose cells made such products possible have been treated honestly and fairly, and share in the financial bounty that comes from such drugs?

Protection of individual rights is a hallmark of the American legal system, says Lisa Ikemoto, a law professor at the University of California Davis. "Putting the needs of a generalized public over the interests of a few often rests on devaluation of the humanity of the few," she writes in a reimagined version of the Moore decision that upholds Moore's property claims to his excised cells. The commentary is in a chapter of a forthcoming book in the Feminist Judgment series, where authors may only use legal precedent in effect at the time of the original decision.

"Why is the law willing to confer property rights upon some while denying the same rights to others?" asks Radhika Rao, a professor at the University of California, Hastings College of the Law. "The researchers who invest intellectual capital and the companies and universities that invest financial capital are permitted to reap profits from human research, so why not those who provide the human capital in the form of their own bodies?" It might be seen as a kind of sweat equity where cash strapped patients make a valuable in kind contribution to the enterprise.

The Moore court also made a big deal about inhibiting the free exchange of samples between scientists. That concern has become much less relevant over the more than three decades since the decision was handed down. Ironically, this decision, along with other laws and regulations, has since strengthened the power of patents in biomedicine and by doing so has increased secrecy and limited sharing.

"Although the research community theoretically endorses the sharing of research, in reality sharing is commonly compromised by the aggressive pursuit and defense of patents and by the use of licensing fees that hinder collaboration and development," Robert D. Truog, Harvard Medical School ethicist and colleagues wrote in 2012 in the journal Science. "We believe that measures are required to ensure that patients not bear all of the altruistic burden of promoting medical research."

Additionally, the increased complexity of research and the need for exacting standardization of materials has given rise to an industry that supplies certified chemical reagents, cell lines, and whole animals bred to have specific genetic traits to meet research needs. This has been more efficient for research and has helped to ensure that results from one lab can be reproduced in another.

The Court's rationale of fostering collaboration and free exchange of materials between researchers also has been undercut by the changing structure of that research. Big pharma has shrunk the size of its own research labs and over the last decade has worked out cooperative agreements with major research universities where the companies contribute to the research budget and in return have first dibs on any findings (and sometimes a share of patent rights) that come out of those university labs. It has had a chilling effect on the exchange of materials between universities.

Perhaps tracking cell line donors and use restrictions on those donations might have been burdensome to researchers when Moore was being litigated. Some labs probably still kept their cell line records on 3x5 index cards, computers were primarily expensive room-size behemoths with limited capacity, the internet barely existed, and there was no cloud storage.

But that was the dawn of a new technological age and standards have changed. Now cell lines are kept in state-of-the-art sub-zero storage units, tagged with the source, type of tissue, date gathered, and often other information. Adding a few more data fields and contacting the donor if and when appropriate does not seem likely to disrupt the research process, as the court asserted it would.
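As a rough illustration of how little extra bookkeeping this might involve, here is a minimal Python sketch of a cell line record. The first three fields mirror the tags mentioned above; `donor_contact`, `permitted_uses`, and the method names are hypothetical additions for illustration only, not any biobank's actual schema.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional, Set


@dataclass
class CellLineRecord:
    # Fields the article says are already tracked for stored cell lines.
    source: str
    tissue_type: str
    date_gathered: date
    # Hypothetical extra fields: how to reach the donor and which uses
    # the donor agreed to.
    donor_contact: Optional[str] = None
    permitted_uses: Set[str] = field(default_factory=set)

    def allows(self, proposed_use: str) -> bool:
        """True if the donor already agreed to this kind of research."""
        return proposed_use in self.permitted_uses

    def needs_donor_contact(self, proposed_use: str) -> bool:
        """True if the use is not yet covered and the donor can be asked."""
        return not self.allows(proposed_use) and self.donor_contact is not None
```

A lab could check `record.allows("cancer research")` before sharing a sample, and fall back to `needs_donor_contact` when a new kind of use comes up.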

Forging the Future

"U.S. universities are awarded almost 3,000 patents each year. They earn more than $2 billion each year from patent royalties. Sharing a modest portion of these profits is a novel method for creating a greater sense of fairness in research relationships that we think is worth exploring," wrote Mark Yarborough, a bioethicist at the University of California Davis Medical School, and colleagues. That was penned nearly a decade ago and those numbers have only grown.

The Michigan BioTrust for Health might serve as a useful model in tackling some of these issues. Dried blood spots have been collected from all newborns for half a century to be tested for certain genetic diseases, but controversy arose when the huge archive of dried spots was used for other research projects. As a result, the state created a nonprofit organization to in essence become a biobank and manage access to these spots only for specific purposes, and also to share any revenue that might arise from that research.

"If there can be no property in a whole living person, does it stand to reason that there can be no property in any part of a living person? If there were, can it be said that this could equate to some sort of 'biological slavery'?" Irish ethicist Asim A. Sheikh wrote several years ago. "Any amount of effort spent pondering the issue of 'ownership' in human biological materials with existing law leaves more questions than answers."

Perhaps the biggest question will arise when -- not if but when -- it becomes possible to clone a human being. Would a human clone be a legal person or the property of those who created it? Current legal precedent points to it being the latter.

Today, October 4, is the 70th anniversary of Henrietta Lacks' death from cancer. Over those decades her immortalized cells have helped make possible miraculous advances in medicine and have had a role in generating billions of dollars in profits. Surviving family members have spoken many times about seeking a share of those profits in the name of social justice; they intend to file lawsuits today. Such cases will succeed or fail on their own merits. But regardless of their specific outcomes, one can hope that they spark a larger public discussion of the role of patients in the biomedical research enterprise and lead to establishing a legal and financial claim for their contributions toward the next generation of biomedical research.

New elevators could lift up our access to space

A space elevator would be cheaper and cleaner than using rockets

Story by Big Think

When people first started exploring space in the 1960s, it cost upwards of $80,000 (adjusted for inflation) to put a single pound of payload into low-Earth orbit.

A major reason for this high cost was the need to build a new, expensive rocket for every launch. That really started to change when SpaceX began making cheap, reusable rockets, and today, the company is ferrying customer payloads to LEO at a price of just $1,300 per pound.

This is making space accessible to scientists, startups, and tourists who never could have afforded it previously, but the cheapest way to reach orbit might not be a rocket at all — it could be an elevator.

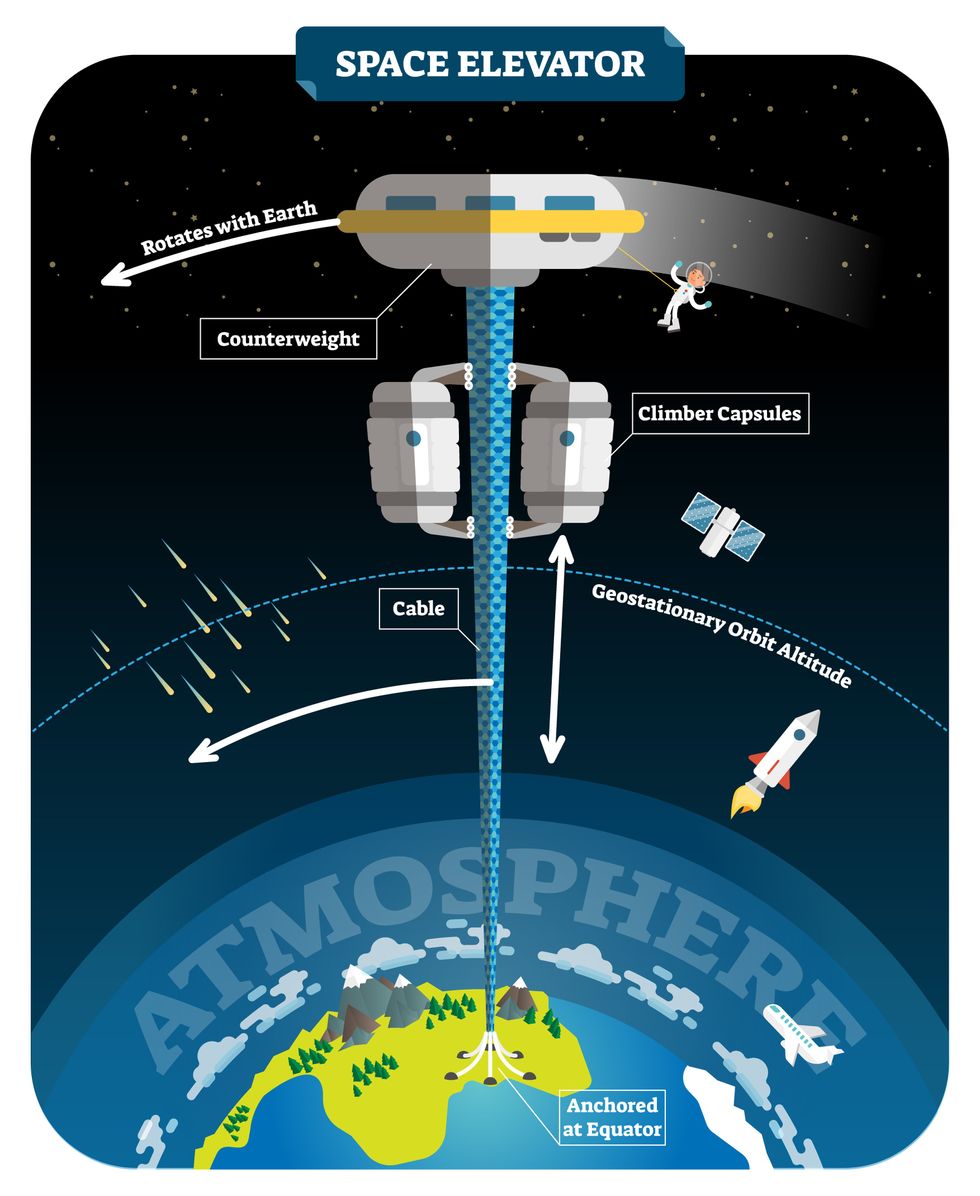

The space elevator

The seeds for a space elevator were first planted by Russian scientist Konstantin Tsiolkovsky in 1895, who, after visiting the 1,000-foot (305 m) Eiffel Tower, published a paper theorizing about the construction of a structure 22,000 miles (35,400 km) high.

This would provide access to geostationary orbit, an altitude where objects appear to remain fixed above Earth’s surface, but Tsiolkovsky conceded that no material could support the weight of such a tower.

In 1959, soon after Sputnik, Russian engineer Yuri N. Artsutanov proposed a way around this issue: instead of building a space elevator from the ground up, start at the top. More specifically, he suggested placing a satellite in geostationary orbit and dropping a tether from it down to Earth’s equator. As the tether descended, the satellite would ascend. Once attached to Earth’s surface, the tether would be kept taut, thanks to a combination of gravitational and centrifugal forces.
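The altitude of geostationary orbit follows from that same balance of forces: it is the one height at which gravity supplies exactly the centripetal acceleration needed to circle Earth once per sidereal day. A minimal Python sketch of the calculation, using standard published constants (the function and variable names are just for illustration), comes out at roughly 35,800 km, or about 22,200 miles, in line with the rounded 22,000-mile figure.

```python
import math

GM_EARTH = 3.986004418e14       # m^3/s^2, Earth's standard gravitational parameter
SIDEREAL_DAY_S = 86164.0905     # s, one rotation of Earth relative to the stars
EARTH_EQ_RADIUS_M = 6378137.0   # m, Earth's equatorial radius (WGS 84)
KM_PER_MILE = 1.609344


def geostationary_altitude_km() -> float:
    """Altitude above the equator where the orbital period equals one sidereal day."""
    omega = 2.0 * math.pi / SIDEREAL_DAY_S          # Earth's rotation rate, rad/s
    # Gravity equals centripetal acceleration: GM / r^2 = omega^2 * r
    orbit_radius_m = (GM_EARTH / omega ** 2) ** (1.0 / 3.0)
    return (orbit_radius_m - EARTH_EQ_RADIUS_M) / 1000.0


if __name__ == "__main__":
    altitude_km = geostationary_altitude_km()
    print(f"{altitude_km:,.0f} km ({altitude_km / KM_PER_MILE:,.0f} miles)")
```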

We could then send electrically powered “climber” vehicles up and down the tether to deliver payloads to any Earth orbit. According to physicist Bradley Edwards, who researched the concept for NASA about 20 years ago, it’d cost $10 billion and take 15 years to build a space elevator, but once operational, the cost of sending a payload to any Earth orbit could be as low as $100 per pound.

“Once you reduce the cost to almost a Fed-Ex kind of level, it opens the doors to lots of people, lots of countries, and lots of companies to get involved in space,” Edwards told Space.com in 2005.
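To put those figures side by side, here is a back-of-the-envelope Python sketch using only the numbers quoted above. It asks how much payload an elevator would need to carry before its $10 billion construction cost is offset by per-pound savings over today's rockets. It is a deliberately crude assumption-laden estimate: it ignores operating costs, financing, and the fact that the dollar figures come from different years.

```python
# Per-pound launch costs quoted in the article (US dollars).
ROCKET_1960S_PER_LB = 80_000
ROCKET_TODAY_PER_LB = 1_300
ELEVATOR_PER_LB = 100            # Edwards' estimate once operational
ELEVATOR_BUILD_COST = 10_000_000_000


def break_even_pounds(build_cost: float, rocket_per_lb: float, elevator_per_lb: float) -> float:
    """Pounds of payload needed before per-pound savings repay the build cost."""
    savings_per_lb = rocket_per_lb - elevator_per_lb
    if savings_per_lb <= 0:
        raise ValueError("elevator must be cheaper per pound than the rocket")
    return build_cost / savings_per_lb


if __name__ == "__main__":
    pounds = break_even_pounds(ELEVATOR_BUILD_COST, ROCKET_TODAY_PER_LB, ELEVATOR_PER_LB)
    print(f"Break-even payload: {pounds:,.0f} lb")
    print(f"Today's rockets vs. elevator: {ROCKET_TODAY_PER_LB / ELEVATOR_PER_LB:.0f}x per pound")
```

Under these assumptions the break-even point is about 8.3 million pounds of payload, which gives a sense of how much traffic an elevator would need to justify itself against current launch prices.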

In addition to the economic advantages, a space elevator would also be cleaner than using rockets — there’d be no burning of fuel, no harmful greenhouse emissions — and the new transport system wouldn’t contribute to the problem of space junk to the same degree that expendable rockets do.

So, why don’t we have one yet?

Tether troubles

Edwards wrote in his report for NASA that all of the technology needed to build a space elevator already existed except the material needed to build the tether, which needs to be light but also strong enough to withstand all the huge forces acting upon it.

The good news, according to the report, was that the perfect material — ultra-strong, ultra-tiny “nanotubes” of carbon — would be available in just two years.

“[S]teel is not strong enough, neither is Kevlar, carbon fiber, spider silk, or any other material other than carbon nanotubes,” wrote Edwards. “Fortunately for us, carbon nanotube research is extremely hot right now, and it is progressing quickly to commercial production.”

Unfortunately, he misjudged how hard it would be to synthesize carbon nanotubes; to date, no one has been able to grow one longer than 21 inches (53 cm).

Further research into the material revealed that it tends to fray under extreme stress, too, meaning even if we could manufacture carbon nanotubes at the lengths needed, they’d be at risk of snapping, not only destroying the space elevator, but threatening lives on Earth.

Looking ahead

Carbon nanotubes might have been the early frontrunner as the tether material for space elevators, but there are other options, including graphene, an essentially two-dimensional form of carbon that is already easier to scale up than nanotubes (though still not easy).

Contrary to Edwards’ report, Johns Hopkins University researchers Sean Sun and Dan Popescu say Kevlar fibers could work — we would just need to constantly repair the tether, the same way the human body constantly repairs its tendons.

“Using sensors and artificially intelligent software, it would be possible to model the whole tether mathematically so as to predict when, where, and how the fibers would break,” the researchers wrote in Aeon in 2018.

“When they did, speedy robotic climbers patrolling up and down the tether would replace them, adjusting the rate of maintenance and repair as needed, mimicking the sensitivity of biological processes,” they continued.

Astronomers from the University of Cambridge and Columbia University also think Kevlar could work for a space elevator if we built it from the moon rather than Earth.

They call their concept the Spaceline, and the idea is that a tether attached to the moon’s surface could extend toward Earth’s geostationary orbit, held taut by the pull of our planet’s gravity. We could then use rockets to deliver payloads — and potentially people — to solar-powered climber robots positioned at the end of this 200,000+ mile long tether. The bots could then travel up the line to the moon’s surface.

This wouldn’t eliminate the need for rockets to get into Earth’s orbit, but it would be a cheaper way to get to the moon. The forces acting on a lunar space elevator wouldn’t be as strong as those on one extending from Earth’s surface, either, according to the researchers, opening up more options for tether materials.

“[T]he necessary strength of the material is much lower than an Earth-based elevator — and thus it could be built from fibers that are already mass-produced … and relatively affordable,” they wrote in a paper shared on the preprint server arXiv.

Electrically powered climber capsules could go up and down the tether to deliver payloads to any Earth orbit.

Adobe Stock

Some Chinese researchers, meanwhile, aren’t giving up on the idea of using carbon nanotubes for a space elevator — in 2018, a team from Tsinghua University revealed that they’d developed nanotubes that they say are strong enough for a tether.

The researchers are still working on the issue of scaling up production, but in 2021, state-owned news outlet Xinhua released a video depicting an in-development concept, called “Sky Ladder,” that would consist of space elevators above Earth and the moon.

After riding up the Earth-based space elevator, a capsule would fly to a space station attached to the tether of the moon-based one. If the project could be pulled off — a huge if — China predicts Sky Ladder could cut the cost of sending people and goods to the moon by 96 percent.

The bottom line

In the 120 years since Tsiolkovsky looked at the Eiffel Tower and thought way bigger, tremendous progress has been made developing materials with the properties needed for a space elevator. At this point, it seems likely we could one day have a material that can be manufactured at the scale needed for a tether — but by the time that happens, the need for a space elevator may have evaporated.

Several aerospace companies are making progress with their own reusable rockets, and as those join the market with SpaceX, competition could cause launch prices to fall further.

California startup SpinLaunch, meanwhile, is developing a massive centrifuge to fling payloads into space, where much smaller rockets can propel them into orbit. If the company succeeds (another one of those big ifs), it says the system would slash the amount of fuel needed to reach orbit by 70 percent.

Even if SpinLaunch doesn’t get off the ground, several groups are developing environmentally friendly rocket fuels that produce far fewer (or no) harmful emissions. More work is needed to efficiently scale up their production, but overcoming that hurdle will likely be far easier than building a 22,000-mile (35,400-km) elevator to space.

This article originally appeared on Big Think, home of the brightest minds and biggest ideas of all time.

New tech aims to make the ocean healthier for marine life

An overabundance of dissolved carbon dioxide poses a threat to marine life. A new system detects elevated levels of the greenhouse gas and mitigates them on the spot.

A defunct drydock basin arched by a rusting 19th century steel bridge seems an incongruous place to conduct state-of-the-art climate science. But this placid and protected sliver of water connecting Brooklyn’s Navy Yard to the East River was just right for Garrett Boudinot to float a small dock topped with water carbon-sensing gear. And while his system right now looks like a trio of plastic boxes wired up together, it aims to mitigate the growing ocean acidification problem, caused by an overabundance of dissolved carbon dioxide.

Boudinot, a biogeochemist and founder of a carbon-management startup called Vycarb, is honing his method for measuring CO2 levels in water, as well as (at least temporarily) correcting their negative effects. It’s a challenge that’s been occupying numerous climate scientists as the ocean heats up, and as states like New York recognize that reducing emissions won’t be enough to reach their climate goals; they’ll have to figure out how to remove carbon, too.

To date, though, methods for measuring CO2 in water at scale have been either intensely expensive, requiring fancy sensors that pump CO2 through membranes; or prohibitively complicated, involving a series of lab-based analyses. And that’s led to a bottleneck in efforts to remove carbon as well.

But recently, Boudinot cracked part of the code for measurement and mitigation, at least on a small scale. While the rest of the industry sorts out larger intricacies like getting ocean carbon markets up and running and driving carbon removal at billion-ton scale in centralized infrastructure, his decentralized method could have important, more immediate implications.

Specifically, for shellfish hatcheries, which grow seafood for human consumption and for coastal restoration projects. Some of these incubators for oysters and clams and scallops are already feeling the negative effects of excess carbon in water, and Vycarb’s tech could improve outcomes for the larval- and juvenile-stage mollusks they’re raising. “We’re learning from these folks about what their needs are, so that we’re developing our system as a solution that’s relevant,” Boudinot says.

Ocean waters naturally absorb CO2 gas from the atmosphere. When CO2 accumulates faster than nature can dissipate it, it reacts with H2O molecules, forming carbonic acid, H2CO3, which makes the water column more acidic. On the West Coast, acidification occurs when deep, carbon dioxide-rich waters upwell onto the coast. This can wreak havoc on developing shellfish, inhibiting their shells from growing and leading to mass die-offs; this happened, disastrously, at Pacific Northwest oyster hatcheries in 2007.

This type of acidification will eventually come for the East Coast, too, says Ryan Wallace, assistant professor and graduate director of environmental studies and sciences at Long Island’s Adelphi University, who studies acidification. But at the moment, East Coast acidification has other sources: agricultural runoff, usually in the form of nitrogen, and human and animal waste entering coastal areas. These excess nutrient loads cause algae to grow, which isn’t a problem in and of itself, Wallace says; but when algae die, they’re consumed by bacteria, whose respiration in turn bumps up CO2 levels in water.

“Unfortunately, this is occurring at the bottom [of the water column], where shellfish organisms live and grow,” Wallace says. Acidification on the East Coast is minutely localized, occurring closest to where nutrients are being released, as well as seasonally; at least one local shellfish farm, on Fishers Island in the Long Island Sound, has contended with its effects.

The second Vycarb pilot, ready to be installed at the East Hampton shellfish hatchery.

Courtesy of Vycarb

Besides CO2, ocean water contains two other forms of dissolved carbon, carbonate (CO3^2-) and bicarbonate (HCO3^-), at all times and at differing levels. At low pH (acidic), CO2 prevails; at medium pH, HCO3 is the dominant form; at higher pH, CO3 dominates. Boudinot’s invention is the first real-time measurement for all three, he says. From the dock at the Navy Yard, his pilot system uses carefully calibrated but low-cost sensors to gauge the water’s pH and its corresponding levels of CO2. When it detects elevated levels of the greenhouse gas, the system mitigates them on the spot. It does this by adding a bicarbonate powder that’s a byproduct of agricultural limestone mining in nearby Pennsylvania. Because the bicarbonate powder is alkaline, it increases the water pH and reduces the acidity. “We drive a chemical reaction to increase the pH to convert greenhouse gas- and acid-causing CO2 into bicarbonate, which is HCO3,” Boudinot says. “And HCO3 is what shellfish and fish and lots of marine life prefers over CO2.”
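The way pH shifts the balance among the three forms can be sketched with the textbook equilibrium expressions for a two-step acid. The dissociation constants below (pK1 of about 5.9 and pK2 of about 9.0) are rounded, assumed values in the range typical of warm surface seawater; real values shift with temperature and salinity. This is an illustration of the chemistry only, not a description of Vycarb's measurement method.

```python
def carbon_fractions(ph: float, pk1: float = 5.9, pk2: float = 9.0) -> dict:
    """Share of dissolved carbon present as CO2, HCO3 and CO3 at a given pH.

    pk1 and pk2 are assumed, rounded seawater values used for illustration.
    """
    h = 10.0 ** (-ph)        # hydrogen ion concentration
    k1 = 10.0 ** (-pk1)      # CO2 (carbonic acid) <-> bicarbonate
    k2 = 10.0 ** (-pk2)      # bicarbonate <-> carbonate
    denom = h * h + k1 * h + k1 * k2
    return {
        "CO2": h * h / denom,
        "HCO3": k1 * h / denom,
        "CO3": k1 * k2 / denom,
    }


def dominant_form(ph: float) -> str:
    """Name of the form with the largest share at this pH."""
    fractions = carbon_fractions(ph)
    return max(fractions, key=fractions.get)


if __name__ == "__main__":
    for ph in (4.0, 8.0, 11.0):
        shares = carbon_fractions(ph)
        summary = ", ".join(f"{name} {share:.1%}" for name, share in shares.items())
        print(f"pH {ph}: {summary}")
```

With these assumed constants, CO2 dominates at pH 4, bicarbonate at pH 8, and carbonate at pH 11, matching the low, medium, and high pH pattern described above.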

This de-acidifying “buffering” is something shellfish operations already do to water, usually by adding soda ash (Na2CO3), which is also alkaline. Some hatcheries add soda ash constantly, just in case; some wait till acidification causes significant problems. For a busy shellfish farmer, detecting acidification takes time and effort. “We’re out there daily, taking a look at the pH and figuring out how much we need to dose it,” explains John “Barley” Dunne, director of the East Hampton Shellfish Hatchery on Long Island. “If this is an automatic system…that would be much less labor intensive: one less thing to monitor when we have so many other things we need to monitor.”
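To make the idea of automated buffering concrete, here is a toy Python sketch of a single control cycle: read the pH and, if it is below a target, return a dose proportional to the shortfall, up to a cap. The target pH, gain, and cap are hypothetical numbers invented for illustration; nothing here reflects how Vycarb's system or any hatchery actually doses its water.

```python
def buffer_dose_grams(
    ph_reading: float,
    target_ph: float = 8.0,        # hypothetical set point
    gain_g_per_ph: float = 100.0,  # hypothetical grams of buffer per pH unit of shortfall
    max_dose_g: float = 50.0,      # hypothetical per-cycle cap to avoid overshooting
) -> float:
    """Grams of buffer to add this cycle; zero when the water is at or above target."""
    shortfall = target_ph - ph_reading
    if shortfall <= 0:
        return 0.0
    return min(max_dose_g, gain_g_per_ph * shortfall)


if __name__ == "__main__":
    for reading in (8.2, 7.9, 7.0):
        print(f"pH {reading}: add {buffer_dose_grams(reading):.1f} g")
```

The per-cycle cap is the design choice that matters most in a sketch like this: small, repeated corrections are less likely to push the water too far in the alkaline direction than one large dose.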

Across the Sound at the hatchery he runs, Dunne annually produces 30 million hard clams, 6 million oysters, and “if we’re lucky, some years we get a million bay scallops,” he says. These mollusks are destined for restoration projects around the town of East Hampton, where they’ll create habitat, filter water, and protect the coastline from sea level rise and storm surge. So far, Dunne’s hatchery has largely escaped the ill effects of acidification, although his bay scallops are having a finicky year and he’s checking to see if acidification might be part of the problem. But “I think it's important to have these solutions ready-at-hand for when the time comes,” he says. That’s why he’s hosting a second, 70-liter Vycarb pilot starting this summer on a dock adjacent to his East Hampton operation; it will amp up to a 50,000-liter system in a few months.

Boudinot hopes this new pilot will act as a proof of concept for hatcheries up and down the East Coast. The area from Maine to Nova Scotia is experiencing the worst of Atlantic acidification, due in part to increased Arctic meltwater combining with Gulf of St. Lawrence freshwater; that decreases saturation of calcium carbonate, making the water more acidic. Boudinot says his system should work to adjust low pH regardless of the cause or locale. The East Hampton system will eventually test and buffer-as-necessary the water that Dunne pumps from the Sound into 100-gallon land-based tanks where larvae grow for two weeks before being transferred to an in-Sound nursery to plump up.

Dunne says this could have positive effects — not only on his hatchery but on wild shellfish populations, too, reducing at least one stressor their larvae experience (others include increasing water temperatures and decreased oxygen levels). “If it can buffer water over a large area, absolutely this will [benefit] natural spawns,” he says.

No one believes the Vycarb model — even if it proves capable of functioning at much greater scale — is the sole solution to acidification in the ocean. Wallace says new water treatment plants in New York City, which reduce nitrogen released into coastal waters, are an important part of the equation. And “certainly, some green infrastructure would help,” says Boudinot, like restoring coastal and tidal wetlands to help filter nutrient runoff.

In the meantime, Boudinot continues to collect data in advance of amping up his own operations. Still unknown is the effect of releasing huge amounts of alkalinity into the ocean. Boudinot says a pH of 9 or higher can be too harsh for marine life, plus it can also trigger a release of CO2 from the water back into the atmosphere. For a third pilot, on Governors Island in New York Harbor, Vycarb will install yet another system from which Boudinot’s team will frequently sample to analyze some of those and other impacts. “Let's really make sure that we know what the results are,” he says. “Let's have data to show, because in this carbon world, things behave very differently out in the real world versus on paper.”