Beyond Henrietta Lacks: How the Law Has Denied Every American Ownership Rights to Their Own Cells

A 2017 portrait of Henrietta Lacks.

The common perception is that Henrietta Lacks was a victim of poverty and racism when in 1951 doctors took samples of her cervical cancer without her knowledge or permission and turned them into the world's first immortalized cell line, which they called HeLa. The cell line became a workhorse of biomedical research and facilitated the creation of medical treatments and cures worth untold billions of dollars. Neither Lacks nor her family ever received a penny of those riches.

But racism and poverty are not to blame for Lacks' exploitation; the reality is even worse. In fact, all patients, then and now, regardless of social or economic status, have absolutely no right to cells that are taken from their bodies. Some have called this biological slavery.

How We Got Here

The case that established this legal precedent is Moore v. Regents of the University of California.

John Moore was diagnosed with hairy-cell leukemia in 1976, and his spleen was removed as part of standard treatment at the UCLA Medical Center. On initial examination, his physician, David W. Golde, had discovered some unusual qualities in Moore's cells and, before the surgery, made plans to have the tissue saved for research rather than discarded as waste. That research began almost immediately.

Even after Moore moved to Seattle, Golde kept bringing him back to Los Angeles to collect additional samples of blood and tissue, saying it was part of his treatment. When Moore asked if the work could be done in Seattle, he was told no. Golde's charade went so far as claiming to have found a low-income subsidy to cover Moore's flights and his stays in a ritzy hotel, while actually paying for them out of his own pocket.

Moore became suspicious when he was asked to sign new consent forms giving up all rights to his biological samples, and he hired an attorney to look into the matter. It turned out that Golde had been lying to his patient all along: he had been collecting samples unnecessary to Moore's treatment and had turned them into a cell line that he and UCLA had patented and from which they had already collected millions of dollars. The market for the cell lines was estimated at $3 billion by 1990.

Moore felt he had been taken advantage of and filed suit to claim a share of the money that had been made from his body. "On both sides of the case, legal experts and cultural observers cautioned that ownership of a human body was the first step on the slippery slope to 'bioslavery,'" wrote Priscilla Wald, a professor at Duke University whose career has focused on issues of medicine and culture. "Moore could be viewed as asking to commodify his own body part or be seen as the victim of the theft of his most private and inalienable information."

The case bounced around different levels of the court system with conflicting verdicts for nearly six years until the California Supreme Court ruled, on July 9, 1990, that Moore had no legal rights to his cells and tissue once they were removed from his body.

The court made a utilitarian argument that the cells had no value until scientists manipulated them in the lab. It also held that it would be too burdensome for researchers to track individual donations and subsequent cell lines to ensure that they had been ethically gathered and used, and that doing so would impinge on the free sharing of materials between scientists, slow research, and harm the public good that arose from such research.

"In effect, what Moore is asking us to do is impose a tort duty on scientists to investigate the consensual pedigree of each human cell sample used in research," the majority wrote. In other words, researchers don't need to ask any questions about the materials they are using.

One member of the court did not see it that way. In his dissent, Stanley Mosk raised the specter of slavery that "arises wherever scientists or industrialists claim, as defendants have here, the right to appropriate and exploit a patient's tissue for their sole economic benefit—the right, in other words, to freely mine or harvest valuable physical properties of the patient's body. … This is particularly true when, as here, the parties are not in equal bargaining positions."

Mosk also cited the appeals court decision that the majority overturned: "If this science has become for profit, then we fail to see any justification for excluding the patient from participation in those profits."

But the majority bought the arguments that Golde, UCLA, and California's nascent biotechnology industry had made in amicus briefs filed throughout the legal proceedings. The road was now clear for them to develop products worth billions without having to consult, or share profits with, the people who provided the raw materials on which their research was based.

Critical Views

Biomedical research requires a continuous and ever-growing supply of human materials for the foundation of its ongoing work. If an increasing number of patients come to feel as John Moore did, that the system is ripping them off, then they become much less likely to consent to use of their materials in future research.

Some legal and ethical scholars say that donors should be able to limit the types of research allowed on their tissues, and that researchers should be monitored to ensure compliance with those agreements. Today, for example, it is commonplace for companies to certify that their clothing is not made with child labor, that their coffee is grown under fair-trade conditions, and that food labeled kosher is properly handled. Should we ask any less of our pharmaceuticals than that the donors whose cells made them possible have been treated honestly and fairly, and share in the financial bounty that comes from such drugs?

Protection of individual rights is a hallmark of the American legal system, says Lisa Ikemoto, a law professor at the University of California, Davis. "Putting the needs of a generalized public over the interests of a few often rests on devaluation of the humanity of the few," she writes in a reimagined version of the Moore decision that upholds Moore's property claims to his excised cells. The commentary is in a chapter of a forthcoming book in the Feminist Judgment series, where authors may only use legal precedent in effect at the time of the original decision.

"Why is the law willing to confer property rights upon some while denying the same rights to others?" asks Radhika Rao, a professor at the University of California, Hastings College of the Law. "The researchers who invest intellectual capital and the companies and universities that invest financial capital are permitted to reap profits from human research, so why not those who provide the human capital in the form of their own bodies?" It might be seen as a kind of sweat equity, in which cash-strapped patients make a valuable in-kind contribution to the enterprise.

The Moore court also made a big deal about the risk of inhibiting the free exchange of samples between scientists. Such free exchange has become far less common in the more than three decades since the decision was handed down. Ironically, this decision, along with other laws and regulations, has since strengthened the power of patents in biomedicine and, in doing so, increased secrecy and limited sharing.

"Although the research community theoretically endorses the sharing of research, in reality sharing is commonly compromised by the aggressive pursuit and defense of patents and by the use of licensing fees that hinder collaboration and development," Robert D. Truog, a Harvard Medical School ethicist, and colleagues wrote in 2012 in the journal Science. "We believe that measures are required to ensure that patients not bear all of the altruistic burden of promoting medical research."

Additionally, the increased complexity of research and the need for exacting standardization of materials has given rise to an industry that supplies certified chemical reagents, cell lines, and whole animals bred to have specific genetic traits to meet research needs. This has been more efficient for research and has helped to ensure that results from one lab can be reproduced in another.

The Court's rationale of fostering collaboration and free exchange of materials between researchers also has been undercut by the changing structure of that research. Big pharma has shrunk the size of its own research labs and over the last decade has worked out cooperative agreements with major research universities where the companies contribute to the research budget and in return have first dibs on any findings (and sometimes a share of patent rights) that come out of those university labs. It has had a chilling effect on the exchange of materials between universities.

Perhaps tracking cell line donors and use restrictions on those donations might have been burdensome to researchers when Moore was being litigated. Some labs probably still kept their cell line records on 3x5 index cards, computers were primarily expensive room-size behemoths with limited capacity, the internet barely existed, and there was no cloud storage.

But that was the dawn of a new technological age, and standards have changed. Now cell lines are kept in state-of-the-art subzero storage units, tagged with the source, type of tissue, date gathered, and often other information. Adding a few more data fields, and contacting the donor if and when appropriate, seems unlikely to disrupt research in the way the court asserted.

Forging the Future

"U.S. universities are awarded almost 3,000 patents each year. They earn more than $2 billion each year from patent royalties. Sharing a modest portion of these profits is a novel method for creating a greater sense of fairness in research relationships that we think is worth exploring," wrote Mark Yarborough, a bioethicist at the University of California, Davis, Medical School, and colleagues. That was penned nearly a decade ago, and those numbers have only grown.

The Michigan BioTrust for Health might serve as a useful model in tackling some of these issues. Dried blood spots have been collected from all newborns for half a century to test for certain genetic diseases, but controversy arose when the huge archive of dried spots was used for other research projects. In response, the state created a nonprofit organization that in essence serves as a biobank, manages access to these spots for specific purposes only, and shares any revenue that might arise from that research.

"If there can be no property in a whole living person, does it stand to reason that there can be no property in any part of a living person? If there were, can it be said that this could equate to some sort of 'biological slavery'?" Irish ethicist Asim A. Sheikh wrote several years ago. "Any amount of effort spent pondering the issue of 'ownership' in human biological materials with existing law leaves more questions than answers."

Perhaps the biggest question will arise when, not if, it becomes possible to clone a human being. Would a human clone be a legal person or the property of those who created it? Current legal precedent points to the latter.

Today, October 4, is the 70th anniversary of Henrietta Lacks' death from cancer. Over those decades her immortalized cells have helped make possible miraculous advances in medicine and have had a role in generating billions of dollars in profits. Surviving family members have spoken many times about seeking a share of those profits in the name of social justice; they intend to file lawsuits today. Such cases will succeed or fail on their own merits. But regardless of their specific outcomes, one can hope that they spark a larger public discussion of the role of patients in the biomedical research enterprise and lead to establishing a legal and financial claim for their contributions toward the next generation of biomedical research.

Researchers are looking to engineer chocolate with less oil, which could reduce some of its detriments to health.

Creamy milk with velvety texture. Dark with sprinkles of sea salt. Crunchy hazelnut-studded chunks. Chocolate is a treat that appeals to billions of people worldwide, of all ages. And it's not only the taste but the feel of a chocolate morsel slowly melting in our mouths, the smoothness and slipperiness, that's part of the overwhelming satisfaction. Why is it so enjoyable?

That’s what an interdisciplinary research team of chocolate lovers from the University of Leeds School of Food Science and Nutrition and School of Mechanical Engineering in the U.K. resolved to study in 2021. They wanted to know, “What is making chocolate that desirable?” says Siavash Soltanahmadi, one of the lead authors of a new study about chocolate’s hedonistic quality.

Besides addressing the researchers’ general curiosity, their answers might help chocolate manufacturers make the delicacy even more enjoyable and potentially healthier. After all, chocolate is a billion-dollar industry. Revenue from chocolate sales, whether milk or dark, is forecasted to grow 13 percent by 2027 in the U.K. In the U.S., chocolate and candy sales increased by 11 percent from 2020 to 2021, on track to reach $44.9 billion by 2026. Figuring out how chocolate affects the human palate could up the ante even more.

Building a 3D tongue

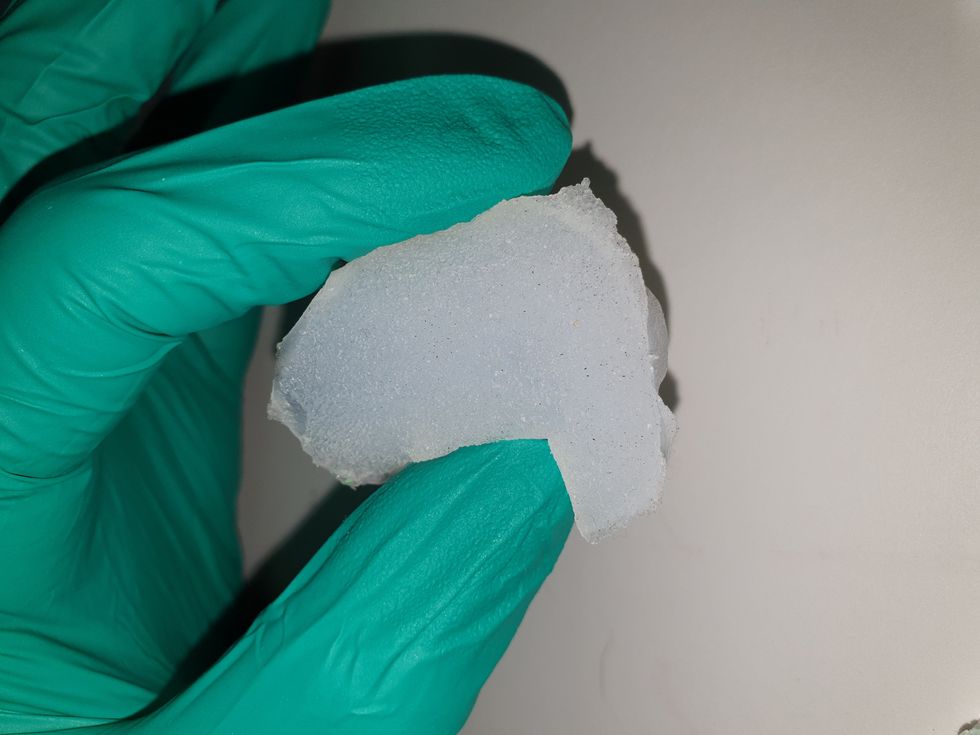

The team began by building a 3D tongue to analyze the physical process by which chocolate breaks down inside the mouth.

As part of the effort, reported earlier this year in the scientific journal ACS Applied Materials and Interfaces, the team studied a large variety of human tongues with the aim of building an “average” 3D model, says Soltanahmadi, a lubrication scientist. When it comes to edible substances, lubrication science looks at how food feels in the mouth and can help design foods that taste better and have more satisfying texture or health benefits.

Tongue impressions from human participants, studied using optical imaging, helped the team build a tongue with key characteristics. “Our tongue is not a smooth muscle, it’s got some texture, it has got some roughness,” Soltanahmadi says. From those images, the team came up with a digital design of an average tongue and, using 3D-printed molds, built a “mimic tongue.” They also added elastomers, such as silicone or polyurethane, to mimic the roughness, the texture and the mechanical properties of a real tongue. “Wettability” was another key component of the 3D tongue, Soltanahmadi says, referring to whether a surface is readily wetted by water (hydrophilic) or repels it (hydrophobic), as oily surfaces do.

Notably, the resulting artificial 3D tongues looked nothing like the human version, but they were good mimics. The scientists also created “testing kits” that produced data on various physical parameters. One such parameter was viscosity, a measure of how gooey a food or liquid is; honey, for example, is more viscous than water. Another was friction, the subject of tribology, which describes how slippery something is: high-fat yogurt is more slippery than low-fat yogurt, and milk can be more slippery than water. The researchers then mixed chocolate with artificial saliva and spread it on the 3D tongue to measure its friction and viscosity. From there, they were able to study what happens inside the mouth when we eat chocolate.
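In tribology, slipperiness is usually reported as a coefficient of friction: the friction force resisting sliding divided by the normal load pressing the two surfaces together. The minimal Python sketch below shows only that calculation; the function name `friction_coefficient` and the force readings are hypothetical illustrations, not data or methods from the Leeds study.

```python
def friction_coefficient(friction_force_n: float, normal_load_n: float) -> float:
    """Return the dimensionless coefficient of friction (friction force / normal load).

    Both forces are in newtons. This is the generic textbook definition,
    not the specific measurement protocol of any particular study.
    """
    if normal_load_n <= 0:
        raise ValueError("normal load must be positive")
    return friction_force_n / normal_load_n


if __name__ == "__main__":
    # Hypothetical friction readings (newtons) for two coatings under the same 2 N load.
    load_n = 2.0
    readings = {"coating A (hypothetical)": 0.10, "coating B (hypothetical)": 0.04}
    for name, force_n in readings.items():
        mu = friction_coefficient(force_n, load_n)
        # A lower coefficient means a more slippery, better-lubricated contact.
        print(f"{name}: mu = {mu:.3f}")
```

Because the load is held fixed, the coating with the smaller friction force has the lower coefficient and would feel more slippery.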

The team focused on the stages of lubrication and the location of the fat in the chocolate, aspects of the process that have rarely been researched.

The artificial 3D-tongues look nothing like human tongues, but they function well enough to do the job.

Courtesy Anwesha Sarkar and University of Leeds

The oral processing of chocolate

We process food in our mouths in several stages, Soltanahmadi says. And there is variation in these stages depending on the type of food. So, the oral processing of a piece of meat would be different from, say, the processing of jelly or popcorn.

There are variations with chocolate, in particular; some people chew it while others use their tongues to explore it (within their mouths), Soltanahmadi explains. “Usually, from a consumer perspective, what we find is that if you have a luxury kind of a chocolate, then people tend to start with licking the chocolate rather than chewing it.” The researchers used a luxury brand of dark chocolate and focused on the process of licking rather than chewing.

Understanding the make-up of the chocolate was also an important step in the study. “Chocolate is a composite material. So, it has cocoa butter, which is oil, it has some particles in it, which is cocoa solid, and it has sugars," Soltanahmadi says. "Dark chocolate has less oil, for example, and less sugar in it, most of the time."

The researchers determined that the oral processing of chocolate begins as soon as it enters a person’s mouth; it starts melting upon exposure to one’s body temperature, even before the tongue starts moving, Soltanahmadi says. Then, lubrication begins. “[Saliva] mixes with the oily chocolate and it makes an emulsion.” An emulsion is a fluid with a watery (or aqueous) phase and an oily phase. As chocolate breaks down in the mouth, that solid piece turns into a smooth emulsion with a fatty film. “The oil from the chocolate becomes droplets in a continuous aqueous phase,” says Soltanahmadi. In other words, as solid cocoa particles and fat are released, the emulsion envelops the tongue and coats the palate, creating a smooth feeling of chocolate all over the mouth. That tactile sensation is part of the chocolate’s hedonistic appeal we crave, says Soltanahmadi.

Finding the sweet spot

After determining how chocolate is orally processed, the research team wanted to find the exact sweet spot of the breakdown of solid cocoa particles and fat as they are released into the mouth. They determined that the epicurean pleasure comes only from the chocolate's outer layer of fat; the secondary fatty layers inside the chocolate don’t add to the sensation. It was this final discovery that led the team to conclude that it might be possible to produce healthier chocolate containing less oil, and therefore less fat, says Soltanahmadi.

Rongjia Tao, a physicist at Temple University in Philadelphia, thinks the Leeds study and the concept behind it are “very interesting.” Tao himself conducted a study in 2016 and found he could reduce the fat in milk chocolate by 20 percent. He believes that the Leeds researchers’ discovery that the outer layer of fat matters more for taste than the inner layers can inform future chocolate manufacturing. “As a scientist I consider this significant and an important starting point,” he says.

Not everyone thinks it’s a good idea. Chef Michael Antonorsi, founder and owner of Chuao Chocolatier, one of the leading chocolate makers in the U.S., is skeptical. First, he says, “cacao fat is definitely a good fat.” Second, he’s not thrilled that science is trying to interfere with nature. “Every time we've tried to intervene and change nature, we get things out of balance,” says Antonorsi. “There’s a reason cacao is botanically known as food of the gods. The botanical name is the Theobroma cacao: Theobroma in ancient Greek, Theo is God and broma is food. So it's a food of the gods,” Antonorsi explains. He’s doubtful that a chocolate made only with a top layer of fat will produce the same epicurean satisfaction. “You're not going to achieve the same sensation because that surface fat is going to dissipate and there is no fat from behind coming to take over,” he says.

Without layers of fat, Antonorsi fears the deeply satisfying experiential part of savoring chocolate will be lost. The University of Leeds team, however, thinks that it may be possible to make chocolate healthier (when consumed in limited amounts) without sacrificing its taste. They believe the concept of less fatty but no less slick chocolate will resonate with at least some chocolate makers and consumers, too.

Chocolate already contains some healthful compounds. Its cocoa particles have “loads of health benefits,” says Soltanahmadi. Dark chocolate usually has more cocoa than milk chocolate, and some experts recommend that dark chocolate contain at least 70 percent cocoa in order to offer some health benefit. Research has shown that the cocoa in chocolate is rich in polyphenols, naturally occurring compounds also found in fruits and vegetables such as grapes, apples and berries, and that consuming plant polyphenols can be protective against cancer, diabetes and osteoporosis as well as cardiovascular and neurodegenerative diseases.

“So keeping the healthy part of it and reducing the oily part of it, which is not healthy, but is giving you that indulgence of it … that was the final aim,” Soltanahmadi says. He adds that the team has been approached by individuals in the chocolate industry about their research. “Everyone wants to have a healthy chocolate, which at the same time tastes brilliant and gives you that self-indulging experience.”

Probiotic bacteria can be engineered to fight antibiotic-resistant superbugs by releasing chemicals that kill them.

In 1945, almost two decades after he discovered penicillin, Alexander Fleming warned that as antibiotic use grew, the drugs might lose their effectiveness. He was prescient: the first case of penicillin resistance was reported two years later. Back then, few people paid attention to Fleming’s warning. After all, the “golden era” of antibiotics had just begun. By the 1950s, three new antibiotics derived from soil bacteria (streptomycin, chloramphenicol, and tetracycline) could cure infectious diseases like tuberculosis, cholera, meningitis and typhoid fever, among others.

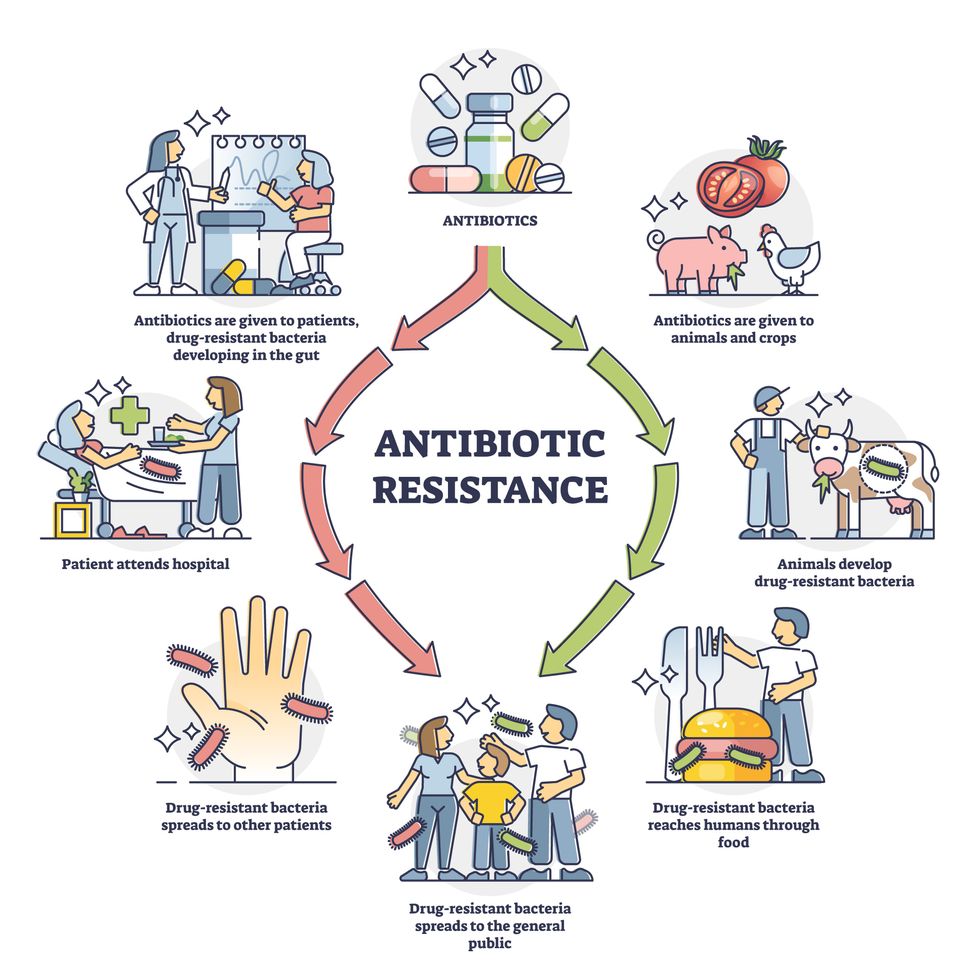

Today, these antibiotics and many of their successors developed through the 1980s are gradually losing their effectiveness. The extensive overuse and misuse of antibiotics led to the rise of drug resistance. The livestock sector buys around 80 percent of all antibiotics sold in the U.S. every year. Farmers feed cows and chickens low doses of antibiotics to prevent infections and fatten up the animals, which eventually causes resistant bacterial strains to evolve. If manure from cattle is used on fields, the soil and vegetables can become contaminated with antibiotic-resistant bacteria. Another major factor is doctors overprescribing antibiotics to humans, particularly in low-income countries. Between 2000 and 2018, global rates of human antibiotic consumption shot up by 46 percent.

In recent years, researchers have been exploring a promising avenue: the use of synthetic biology to engineer new bacteria that may work better than antibiotics. The need continues to grow, as a Lancet study linked antibiotic resistance to 1.27 million deaths worldwide in 2019, more than HIV/AIDS or malaria. The western sub-Saharan Africa region had the highest death rate (27.3 deaths per 100,000 people).

To make it worse, our remedy pipelines are drying up. Out of the 18 biggest pharmaceutical companies, 15 abandoned antibiotic development by 2013. According to the AMR Action Fund, venture capital has remained indifferent towards biotech start-ups developing new antibiotics. In 2019, at least two antibiotic start-ups filed for bankruptcy. As of December 2020, there were 43 new antibiotics in clinical development. But because they are based on previously known molecules, scientists say they are inadequate for treating multidrug-resistant bacteria. Researchers warn that if nothing changes, by 2050, antibiotic resistance could kill 10 million people annually.

The rise of synthetic biology

To circumvent this dire future, scientists have been working on alternative solutions using synthetic biology tools, in particular genetically modifying good bacteria to fight the bad ones.

Ever since life emerged on Earth around 3.8 billion years ago, bacteria have engaged in biological warfare. They constantly devise new ways to combat each other, such as synthesizing toxic proteins that kill competitors.

For example, Escherichia coli produces bacteriocins, toxins that kill other strains of E. coli attempting to colonize the same habitat. Microbes like E. coli (not all of which are pathogenic) are also naturally present in the human microbiome, which harbors up to 100 trillion symbiotic microbial cells. Most of them are beneficial organisms residing in the gut in varying proportions.

The chemicals that these “good bacteria” produce do not pose any health risks to us, but can be toxic to other bacteria, particularly to human pathogens. For the last three decades, scientists have been manipulating bacteria’s biological warfare tactics to our collective advantage.

In the late 1990s, researchers drew inspiration from electrical and computer engineering principles that involve constructing digital circuits to control devices. In certain ways, every cell in a living organism works like a tiny computer. The cell receives messages in the form of biochemical molecules that bind to its surface. Those messages are then processed within the cell through a series of complex molecular interactions.

Synthetic biologists can harness these living cells’ information-processing skills and use them to construct genetic circuits that perform specific instructions, for example, secreting a toxin that kills pathogenic bacteria. “Any synthetic genetic circuit is merely a piece of information that hangs around in the bacteria’s cytoplasm,” explains José Rubén Morones-Ramírez, a professor at the Autonomous University of Nuevo León, Mexico. The cell transcribes that information into messenger RNA, and ribosomes, which synthesize proteins in the cell, then translate it into the compounds scientists want the bacteria to make. “The genetic circuit remains separated from the living cell’s DNA,” Morones-Ramírez explains. When the engineered bacterium replicates, the genetic circuit doesn’t become part of its genome.
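The “tiny computer” analogy can be made concrete with a common textbook simplification: the output of a sensing circuit rises along a Hill curve as the sensed signal accumulates, staying low below a threshold and saturating above it. The Python sketch below assumes that model; `hill_activation` and its parameters (`v_max`, `k`, `n`) are hypothetical and illustrative, not taken from any of the studies described here.

```python
def hill_activation(signal: float, v_max: float = 1.0, k: float = 10.0, n: float = 2.0) -> float:
    """Toxin production rate as a Hill function of the sensed signal concentration.

    Hypothetical, illustrative parameters:
      v_max: maximum production rate when the circuit is fully on
      k:     signal concentration giving half-maximal output (the "threshold")
      n:     Hill coefficient; larger n gives a sharper off-to-on switch
    """
    if signal < 0:
        raise ValueError("signal concentration must be non-negative")
    return v_max * signal**n / (k**n + signal**n)


if __name__ == "__main__":
    # Sweep the sensed signal from absent, through the threshold, to saturating.
    for s in (0.0, 1.0, 10.0, 100.0):
        print(f"signal={s:6.1f}  toxin rate={hill_activation(s):.3f}")
```

With these illustrative defaults, the toxin rate is exactly half its maximum at a signal of 10; raising `n` makes the transition from off to on sharper, which is closer to the digital behavior synthetic biologists aim for.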

In 2000, Boston-based researchers constructed an E. coli with a genetic switch that toggled genes on and off. Later, they built some safety checks into their bacteria. “To prevent unintentional or deleterious consequences, in 2009, we built a safety switch in the engineered bacteria’s genetic circuit that gets triggered after it gets exposed to a pathogen," says James Collins, a professor of biological engineering at MIT and faculty member at Harvard University’s Wyss Institute. “After getting rid of the pathogen, the engineered bacteria is designed to switch off and leave the patient's body.”
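Assuming the 2000 switch follows the widely cited two-repressor design, in which each gene's protein shuts off the other gene, its behavior is often summarized by a pair of dimensionless differential equations. The sketch below integrates that textbook model with simple Euler steps; `simulate_toggle` and the parameter values (`alpha1`, `alpha2`, `beta`, `gamma`) are illustrative assumptions, not measurements from the original experiments.

```python
def simulate_toggle(u0: float, v0: float, alpha1: float = 10.0, alpha2: float = 10.0,
                    beta: float = 2.0, gamma: float = 2.0,
                    dt: float = 0.01, steps: int = 5000) -> tuple[float, float]:
    """Euler-integrate a two-repressor toggle switch model (illustrative parameters).

    du/dt = alpha1 / (1 + v**beta) - u
    dv/dt = alpha2 / (1 + u**gamma) - v
    u and v are dimensionless levels of the two mutually repressing proteins.
    """
    u, v = u0, v0
    for _ in range(steps):
        du = alpha1 / (1.0 + v**beta) - u  # u is produced unless v represses it; u also decays
        dv = alpha2 / (1.0 + u**gamma) - v  # v is produced unless u represses it; v also decays
        u, v = u + dt * du, v + dt * dv    # update both from the previous state
    return u, v


if __name__ == "__main__":
    # Same circuit, two different starting states.
    print("start with u high:", simulate_toggle(5.0, 0.0))
    print("start with v high:", simulate_toggle(0.0, 5.0))
```

Started from two different initial states, the same circuit settles into two different stable states (one protein high, the other low), which is what lets such a switch hold an on/off decision.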

Overuse and misuse of antibiotics cause resistant strains to evolve

Adobe Stock

Seek and destroy

As the field of synthetic biology developed, scientists began using engineered bacteria to tackle superbugs. They first focused on Vibrio cholerae, which in the 19th and 20th centuries caused cholera pandemics in India, China, the Middle East, Europe, and the Americas. Like many other bacteria, V. cholerae cells communicate with one another via quorum sensing, a process in which the microorganisms release signaling molecules to convey messages to their brethren. Highly intelligent by bacterial standards, some multidrug-resistant V. cholerae strains can also “collaborate” with other intestinal bacterial species to gain advantage and take hold of the gut. When untreated, cholera has a mortality rate of 25 to 50 percent, and outbreaks frequently occur in developing countries, especially during floods and droughts.

Sometimes, however, V. cholerae makes mistakes. In 2008, researchers at Cornell University observed that when quorum-sensing V. cholerae accidentally released high concentrations of a signaling molecule called CAI-1, it had a counterproductive effect: the pathogen couldn’t colonize the gut.

So the group, led by John March, professor of biological and environmental engineering, developed a novel strategy to combat V. cholerae. They genetically engineered E. coli to eavesdrop on V. cholerae communication networks and equipped it with the ability to release CAI-1 molecules. That interfered with V. cholerae’s ability to colonize the gut. Two years later, the Cornell team showed that V. cholerae-infected mice treated with the engineered E. coli had a 92 percent survival rate.

Three years later, in 2011, Singapore-based scientists engineered E. coli to detect and destroy Pseudomonas aeruginosa, an often drug-resistant pathogen that causes pneumonia, urinary tract infections, and sepsis. Once the engineered E. coli detected its target through the pathogen’s quorum-sensing molecules, it released a peptide that eradicated 99 percent of P. aeruginosa cells in a test-tube experiment. The team outlined their work in a Molecular Systems Biology study.

“At the time, we knew that we were entering new, uncharted territory,” says Matthew Chang, an associate professor and synthetic biologist at the National University of Singapore and lead author of the study. “To date, we are still in the process of trying to understand how long these microbes stay in our bodies and how they might continue to evolve.”

More teams followed the same path. In a 2013 study, MIT researchers also genetically engineered E.coli to detect P. aeruginosa via the pathogen’s quorum-sensing molecules. It then destroyed the pathogen by secreting a lab-made toxin.

Probiotics that fight

A year later, in 2014, a Nature study found that Ruminococcus obeum, a bacterium naturally occurring in the human microbiome, reduces V. cholerae’s colonization by interfering with the pathogen’s quorum sensing. The natural accumulation of R. obeum in Bangladeshi adults helped them recover from cholera despite living in an area with frequent outbreaks.

The findings from 2008 to 2014 inspired Collins and his team to delve into how good bacteria present in foods like yogurt and kimchi could attack drug-resistant bacteria. In 2018, Collins and his team developed an engineered probiotic strategy: they tweaked Lactococcus lactis, a bacterium commonly found in yogurt, to treat cholera.

More scientists followed with more experiments. So far, researchers have engineered various probiotic organisms to fight pathogenic bacteria like Staphylococcus aureus (a leading cause of skin, tissue, bone, joint and blood infections) and Clostridium perfringens (which causes watery diarrhea) in test-tube and animal experiments. In 2020, Russian scientists engineered the yeast Pichia pastoris to produce an enzyme called lysostaphin that eradicated S. aureus in vitro. Another 2020 study from China used an engineered probiotic bacterium, Lactobacillus casei, as a vaccine to prevent C. perfringens infection in rabbits.

In a study last year, Ramírez’s group at the Autonomous University of Nuevo León engineered E. coli to detect quorum-sensing molecules from methicillin-resistant Staphylococcus aureus, or MRSA, a notorious superbug. The E. coli then releases a bacteriocin that kills MRSA. “An antibiotic is just a molecule that is not intelligent,” says Ramírez. “On the other hand, engineered bacteria can be trained to target pathogens when they are at their most vulnerable metabolic stage in the human gut.”

Collins and Timothy Lu, an associate professor of biological engineering at MIT, found that engineered E. coli can help treat other conditions, such as phenylketonuria, a rare metabolic disorder that causes the build-up of the amino acid phenylalanine. Their start-up Synlogic aims to commercialize the technology and has completed a phase 2 clinical trial.

Circumventing the challenges

The bacteria-engineering technique is not without pitfalls. One major challenge is that beneficial gut bacteria produce their own quorum-sensing molecules that can be similar to those that pathogens secrete. If an engineered bacterium’s biosensor is not specific enough, it may respond to these harmless signals instead of the pathogen’s and prove ineffective.

Another concern is whether engineered bacteria might mutate after entering the gut. “As with any technology, there are risks where bad actors could have the capability to engineer a microbe to act quite nastily,” says Collins of MIT. But Collins and Ramírez both insist that the chances of the engineered bacteria mutating on their own are virtually non-existent. “It is extremely unlikely for the engineered bacteria to mutate,” Ramírez says. “Coaxing a living cell to do anything on command is immensely challenging. Usually, the greater risk is that the engineered bacteria entirely lose its functionality.”

However, the biggest challenge is bringing the curative bacteria to consumers. Pharmaceutical companies aren’t interested in antibiotics or their alternatives because they are less profitable than new medicines for non-infectious diseases. Unlike chronic conditions such as diabetes or cancer, which require long-term medication, infectious diseases are usually treated much more quickly. Running clinical trials is expensive, and antibiotic alternatives aren’t lucrative enough to justify the cost.

“Unfortunately, new medications for antibiotic resistant infections have been pushed to the bottom of the field,” says Lu of MIT. “It's not because the technology does not work. This is more of a market issue. Because clinical trials cost hundreds of millions of dollars, the only solution is that governments will need to fund them.” Lu stresses that societies must lobby to change how the modern healthcare industry works. “The whole world needs better treatments for antibiotic resistance.”