Can Cultured Meat Save the Planet?

Lab-grown meat in a Petri dish and test tube.

In September, California governor Jerry Brown signed a bill mandating that by 2045, all of California's electricity will come from clean power sources. Technological breakthroughs in producing electricity from sun and wind, together with the falling cost of battery storage, have played a major role in persuading California legislators that this goal is realistic.


James Robo, the CEO of the Fortune 200 company NextEra Energy, has predicted that by the early 2020s, electricity from solar farms and giant wind turbines will be cheaper than the operating costs of coal-fired power plants, even when the cost of storage is included.

Can we therefore all breathe a sigh of relief, because technology will save us from catastrophic climate change? Not yet. Even if the world were to move to an entirely clean power supply, and use that clean power to charge up an all-electric fleet of cars, buses and trucks, one major source of greenhouse gas emissions would continue to grow: meat.

The livestock industry now accounts for about 15 percent of global greenhouse gas emissions, roughly the same as the emissions from the tailpipes of all the world's vehicles. But whereas vehicle emissions can be expected to decline as hybrids and electric vehicles proliferate, global meat consumption is forecast to be 76 percent greater in 2050 than it has been in recent years. Most of that growth will come from Asia, especially China, where increasing prosperity has led to an increasing demand for meat.

Changing Climate, Changing Diets, a report from the London-based Royal Institute of International Affairs, highlights the threat posed by meat production. At the UN climate change conference held in Cancun in 2010, the participating countries agreed that allowing global temperatures to rise more than 2°C above pre-industrial levels would run an unacceptable risk of catastrophe. Beyond that limit, feedback loops will take effect, causing still more warming. For example, the thawing Siberian permafrost will release large quantities of methane, causing yet more warming and releasing yet more methane. Methane is a greenhouse gas that, ton for ton, warms the planet 30 times as much as carbon dioxide.

The quantity of greenhouse gases we can put into the atmosphere between now and mid-century without heating up the planet beyond 2°C (known as the "carbon budget") is shrinking steadily. The growing demand for meat means, however, that emissions from the livestock industry will continue to rise, and will absorb an increasing share of this remaining carbon budget. This will, according to Changing Climate, Changing Diets, make it "extremely difficult" to limit the temperature rise to 2°C.

One reason why eating meat produces more greenhouse gases than getting the same food value from plants is that we use fossil fuels to grow grains and soybeans and feed them to animals. The animals use most of the energy in the plant food for themselves, moving, breathing, and keeping their bodies warm. That leaves only a small fraction for us to eat, and so we have to grow several times the quantity of grains and soybeans that we would need if we ate plant foods ourselves. The other important factor is the methane produced by ruminants (mainly cattle and sheep) as part of their digestive process. Surprisingly, that makes grass-fed beef even worse for our climate than beef from animals fattened in a feedlot. Cattle fed on grass put on weight more slowly than cattle fed on corn and soybeans, and therefore burp and fart more methane per kilogram of flesh they produce.


If technology can give us clean power, can it also give us clean meat? That term is already in use by advocates of growing meat at the cellular level. They use it not to draw a parallel with clean energy, but to emphasize that meat from live animals is dirty, because live animals shit. Bacteria from the animals' guts and shit often contaminate the meat. With meat cultured from cells grown in a bioreactor, there is no live animal, no shit, and no bacteria from a digestive system to get mixed into the meat. There is also no methane. Nor is there a living animal to keep warm, move around, or grow body parts that we do not eat. Hence producing meat in this way would be much more efficient, and much cleaner in the environmental sense, than producing meat from animals.

There are now many startups working on bringing clean meat to market. Plant-based products that have the texture and taste of meat, like the "Impossible Burger" and the "Beyond Burger," are already available in restaurants and supermarkets. Clean hamburger meat, fish, dairy, and other animal products are all being produced without raising and slaughtering a living animal. The price is not yet competitive with animal products, but it is coming down rapidly. Just this week, leading officials from the Food and Drug Administration and the U.S. Department of Agriculture met to discuss how to regulate the expected production and sale of meat produced by this method.

When Kodak, which once dominated the sale and processing of photographic film, decided to treat digital photography as a threat rather than an opportunity, it signed its own death warrant. Tyson Foods and Cargill, two of the world's biggest meat producers, are not making the same mistake. They are investing in companies seeking to produce meat without raising animals. Justin Whitmore, Tyson's executive vice-president, said, "We don't want to be disrupted. We want to be part of the disruption."

That's a brave stance for a company that has made its fortune from raising and killing tens of billions of animals, but it is also an acknowledgement that when new technologies create products that people want, they cannot be resisted. Richard Branson, who has invested in the biotech company Memphis Meats, has suggested that in 30 years, we will look back on the present era and be shocked that we killed animals en masse for food. If that happens, technology will have made possible the greatest ethical step forward in the history of our species, saving the planet and eliminating the vast quantity of suffering that industrial farming is now inflicting on animals.

Thanks to safety precautions taken during the COVID-19 pandemic, a strain of influenza has been completely eliminated.

If you were one of the millions who masked up, washed your hands thoroughly and socially distanced, pat yourself on the back—you may have helped change the course of human history.

Scientists say that thanks to these safety precautions, which were introduced in early 2020 to stop transmission of the novel coronavirus that causes COVID-19, a strain of influenza has been completely eliminated. This marks the first time in human history that a virus has been wiped out through non-pharmaceutical interventions, rather than through vaccines or other medicines.

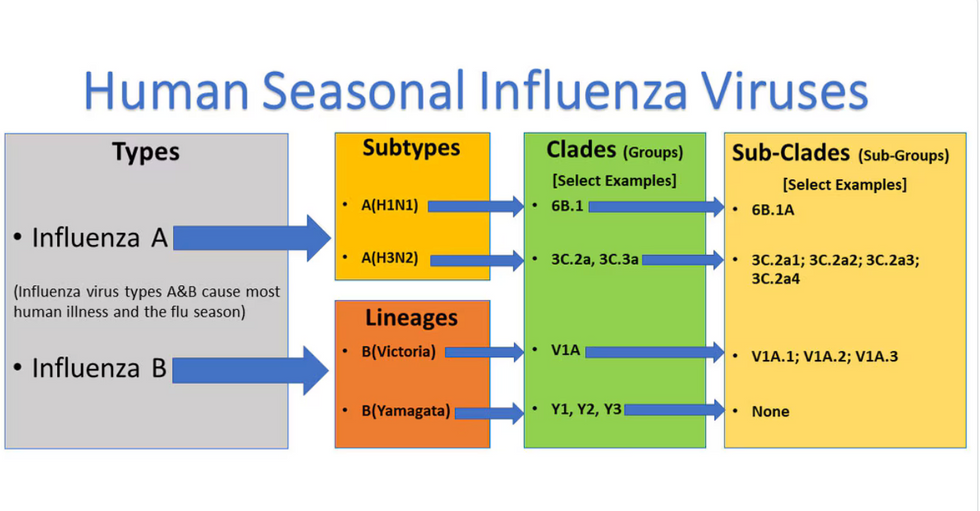

The flu shot, explained

Influenza viruses type A and B are responsible for the majority of human illnesses and the flu season.

Centers for Disease Control

For more than a decade, flu shots have protected against two types of the influenza virus: type A and type B. While there are four types of influenza virus (A, B, C, and D), only types A, B, and C are known to infect humans, and only A and B cause the seasonal epidemics we call flu season (only type A is known to have caused pandemics). In other words, if you catch the flu during flu season, you’re most likely sick with flu type A or B.

Most flu shots contain inactivated (killed) influenza virus. These inactivated viruses can’t cause illness in humans, but when administered as part of a vaccine, they teach a person’s immune system to recognize and fight off those viruses when it encounters them in the wild.

Each spring, a panel of experts gives a recommendation to the US Food and Drug Administration on which strains of each flu type to include in that year’s flu vaccine, based on what surveillance data shows is circulating and what the experts believe is likely to cause the most illness during the upcoming flu season. For the past decade, Americans have had access to vaccines that provide protection against two strains of influenza A and two lineages of influenza B, known as the Victoria lineage and the Yamagata lineage. But this year, the seasonal flu shot won’t include the Yamagata lineage, because it is no longer circulating among humans.

How Yamagata Disappeared

Flu surveillance data from the Global Initiative on Sharing All Influenza Data (GISAID) shows that the Yamagata lineage of flu type B has not been sequenced since April 2020.

Nature

Experts believe that the Yamagata lineage had already been in decline before the pandemic hit, likely because it was naturally less capable of infecting large numbers of people than the other strains. When the COVID-19 pandemic hit, the resulting safety precautions, such as social distancing, isolating, hand-washing, and masking, were enough to drive the lineage to extinction.

Because the lineage hasn’t been circulating since 2020, the FDA elected to remove it from the seasonal flu vaccine. This makes the annual flu shot trivalent (three-component) rather than quadrivalent (four-component) for the first time since 2012.

Should I still get the flu shot?

The flu shot will protect against fewer strains this year—but that doesn’t mean we should skip it. Influenza places a substantial health burden on the United States every year, responsible for hundreds of thousands of hospitalizations and tens of thousands of deaths. The flu shot has been shown to prevent millions of illnesses each year (more than six million during the 2022-2023 season). And while it’s still possible to catch the flu after getting the flu shot, studies show that people are far less likely to be hospitalized or die when they’re vaccinated.

Another unexpected benefit of dropping the Yamagata lineage from the seasonal vaccine? It may speed up flu vaccine production and enable manufacturers to make more doses, helping countries that face flu vaccine shortages and potentially saving millions more lives.

After his grandmother’s dementia diagnosis, one man invented a snack to keep her healthy and hydrated.

Founder Lewis Hornby and his grandmother Pat, sampling Jelly Drops—an edible gummy containing water and life-saving electrolytes.

On a visit to his grandmother’s nursing home in 2016, college student Lewis Hornby made a shocking discovery: dehydration is a common (and dangerous) problem among seniors, especially those diagnosed with dementia.

Hornby’s grandmother, Pat, had always had difficulty keeping up her water intake as she got older, a common issue with seniors. As we age, our body composition changes, and we naturally hold less water than younger adults or children, so it’s easier to become dehydrated quickly if those fluids aren’t replenished. What’s more, our thirst signals naturally diminish with age, meaning our bodies are less able to tell us when we need to rehydrate. Together, these changes create a perfect storm that commonly leads to dehydration. In Pat’s case, her dehydration was so severe she nearly died.

When Hornby visited his grandmother at her nursing home afterward, he learned that dehydration especially affects people with dementia, as they often don’t feel thirst cues at all, or may not recognize how to use cups correctly. But while dementia patients often forget to drink water, it seemed to Hornby that they had less trouble remembering to eat, particularly candy.

Hornby wanted to create a solution for elderly people who struggled to keep their fluid intake up. He spent the next eighteen months researching and designing a solution and securing funding for his project. In 2019, Hornby won a sizable grant from the Alzheimer’s Society, a UK-based care and research charity for people with dementia and their caregivers. Through the charity’s Accelerator Program, they created a bite-sized, sugar-free, edible jelly drop that looked and tasted like candy. The candy, called Jelly Drops, contained 95% water and electrolytes, important minerals that are often lost during dehydration. The final product launched in 2020 and was an immediate success. The drops were able to provide extra hydration to the elderly, as well as help keep dementia patients safe, since dehydration commonly leads to confusion, hospitalization, and sometimes even death.

Not only did Jelly Drops quickly become a favorite snack among dementia patients in the UK, but they also provided an additional boost of hydration in hospitals during the pandemic. In NHS coronavirus hospital wards, patients infected with the virus were regularly given Jelly Drops to keep their fluid levels normal, and staff members snacked on them as well, since long shifts in the personal protective equipment (PPE) they were required to wear often left them feeling parched.

In April 2022, Jelly Drops launched in the United States. The company continues to donate 1% of its profits to help fund Alzheimer’s research.