Eight Big Medical and Science Trends to Watch in 2021

Promising developments underway include advancements in gene and cell therapy, better testing for COVID, and a renewed focus on climate change.

The world as we know it has forever changed. With a greater focus on science and technology than before, experts in the biotech and life sciences spaces are grappling with what comes next as SARS-CoV-2, the coronavirus that causes the COVID-19 illness, has spread and mutated across the world.

Even with vaccines being distributed, so much still remains unknown.

Jared Auclair, Technical Supervisor for Northeastern University's Life Science Testing Center in Burlington, Massachusetts, oversees a COVID testing lab that cranks out thousands of coronavirus test results per day. His lab is also focused on monitoring the quality of new cell and gene therapy products coming to the market.

Here are trends Auclair and other experts are watching in 2021.

Better Diagnostic Testing for COVID

Expect improvements in COVID diagnostic testing and the ability to test at home.

There are currently three types of coronavirus tests. The molecular test, also known as the RT-PCR test, detects the virus's genetic material and is highly accurate, but it can take days to receive results. There are also antibody tests, done through a blood draw, designed to test whether you've had COVID in the past. Finally, there's the quick antigen test, which isn't as accurate as the PCR test but can identify whether people are likely to infect others.

Last month, Lucira Health secured the U.S. FDA Emergency Use Authorization for the first prescription molecular diagnostic test for COVID-19 that can be performed at home. On December 15th, the Ellume Covid-19 Home Test received authorization as the first over-the-counter COVID-19 diagnostic antigen test that can be done at home without a prescription. The test uses a nasal swab that is connected to a smartphone app and returns results in 15-20 minutes. Similarly, the BinaxNOW COVID-19 Ag Card Home Test received authorization on Dec. 16 for its 15-minute antigen test that can be used within the first seven days of onset of COVID-19 symptoms.

Home testing has the potential to affect the pandemic pretty drastically, Auclair says, but there are other considerations: the type and timing of the test administered, the cost of the test (and whether it is financially feasible for the general public), and the ability of a home test taker to administer the test accurately.

"The vaccine roll-out will not eliminate the need for testing until late 2021 or early 2022."

Ideally, everyone would get tested frequently, but for that to happen the cost of a single home test, which is expected to be around $30 or more, would need to be much lower, closer to the $5 range.

Auclair expects "innovations in the diagnostic space to explode" with the need for more accurate, inexpensive, quicker COVID tests. He foresees innovations focusing at first on COVID point-of-care testing, but he expects improvements in diagnostic testing for other types of viruses and diseases too.

"We still need more testing to get the pandemic under control, likely over the next 12 months," Auclair says. "The vaccine roll-out will not eliminate the need for testing until late 2021 or early 2022."

Rise of mRNA-based Vaccines and Therapies

A year ago, vaccines weren't being talked about like they are today.

"But clearly vaccines are the talk of the town," Auclair says. "The reason we got a vaccine so fast was there was so much money thrown at it."

A vaccine can take more than 10 years to fully develop, according to the World Economic Forum. Before the new COVID vaccines, which were developed and tested in a remarkable span of under a year, the fastest vaccine ever made was for mumps, and it took four years.

"Normally you have to produce a protein. This is typically done in eggs. It takes forever," says Catherine Dulac, a neuroscientist and developmental biologist at Harvard University who won the 2021 Breakthrough Prize in Life Sciences. "But an mRNA vaccine just enabled [us] to skip all sorts of steps [compared with burdensome conventional manufacturing] and go directly to a product that can be injected into people."

Non-traditional medicines based on genetic research are in their infancy. With mRNA-based vaccines hitting the market for the first time, look for more vaccines to be developed for whatever viruses we don't currently have vaccines for, like dengue virus and Ebola, Auclair says.

"There's a whole bunch of things that could be explored now that haven't been thought about in the past," Auclair says. "It could really be a game changer."

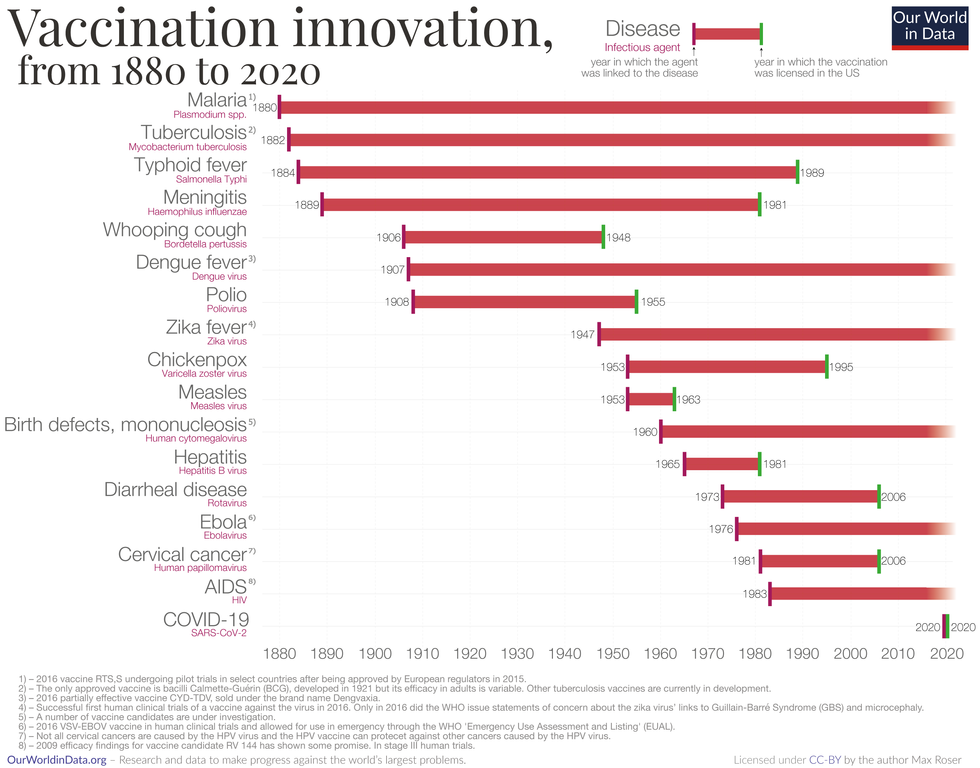

Vaccine Innovation over the last 140 years.

Max Roser/Our World in Data (Creative Commons license)

Advancements in Cell and Gene Therapies

CRISPR, a type of gene editing, is going to be huge in 2021, especially after the Nobel Prize in Chemistry was awarded to Emmanuelle Charpentier and Jennifer Doudna in October for pioneering the technology.

Right now, CRISPR isn't completely precise and can cause deletions or rearrangements of DNA.

"It's definitely not there yet, but over the next year it's going to get a lot closer and you're going to have a lot of momentum in this space," Auclair says. "CRISPR is one of the technologies I'm most excited about and 2021 is the year for it."

Gene therapies are typically used on rare genetic diseases. They work by replacing faulty genes with corrected DNA.

"Cell and gene therapies are really where the field is going," Auclair says. "There is so much opportunity....For the first time in our life, in our existence as a species, we may actually be able to cure disease by using [techniques] like gene editing, where you cut in and out of pieces of DNA that caused a disease and put in healthy DNA," Auclair says.

For example, Spinal Muscular Atrophy is a rare genetic disorder that leads to muscle weakness, paralysis and death in children by age two. As of last year, afflicted children can take a gene therapy drug called Zolgensma that replaces the missing or nonworking SMN1 gene with a new copy.

Another recent breakthrough uses gene editing for sickle cell disease. Victoria Gray, a mom from Mississippi who was exclusively followed by NPR, was the first person in the United States to be successfully treated for the genetic disorder with the help of CRISPR. She has continued to improve since her landmark treatment on July 2, 2019 and her once-debilitating pain has greatly eased.

"This is really a life-changer for me," she told NPR. "It's magnificent."

"You are going to see bigger leaps in gene therapies."

Look out also for improvements in cell therapies, but on a much smaller scale.

Cell therapies remove immune cells from a person or use cells from a donor. The cells are modified or cultured in a lab, multiplied by the millions and then injected back into patients. These include stem cell therapies as well as CAR-T cell therapies, which are typically therapies of last resort and used in cancers like leukemia, Auclair says.

"You are going to see bigger leaps in gene therapies," Auclair says. "It's being heavily researched and we understand more about how to do gene therapies. Cell therapies will lie behind it a bit because they are so much more difficult to work with right now."

More Monoclonal Antibody Therapies

Look for more customized drugs to personalize medicine even more in the biotechnology space.

In 2019, the FDA anticipated receiving more than 200 Investigational New Drug (IND) applications in 2020. But with COVID, the number of INDs skyrocketed to 6,954 applications for the 2020 fiscal year, which ended September 30, 2020, according to the FDA's online tracker. Look for antibody therapies to play a bigger role.

Monoclonal antibodies are lab-grown proteins that mimic or enhance the immune system's response to fight off pathogens, like viruses, and they've been used to treat cancer. Now they are being used to treat patients with COVID-19.

President Donald Trump received a monoclonal antibody cocktail, called REGEN-COV2, which later received FDA emergency use authorization.

A newer type of monoclonal antibody therapy is Antibody-Drug Conjugates, also called ADCs. It's something we're going to be hearing a lot about in 2021, Auclair says.

"Antibody-Drug Conjugates is a monoclonal antibody with a chemical, we consider it a chemical warhead on it," Auclair says. "The monoclonal antibody binds to a specific antigen in your body or protein and delivers a chemical to that location and kills the infected cell."

Moving Beyond Male-Centric Lab Testing

Scientific testing in biology has, until recently, focused on males. Dulac, a Howard Hughes Medical Institute investigator and professor of molecular and cellular biology at Harvard University, challenged that idea in her work to find the brain circuitry behind sex-specific behaviors.

"For the longest time, until now, all the model systems in biology, are male," Dulac says. "The idea is if you do testing on males, you don't need to do testing on females."

Clinical models, as well as fundamental research, rely on male animals. Because biological research is always done on male models, Dulac says, the outcomes and understanding in biology are geared toward male biology.

"All the drugs currently on the market and diagnoses of diseases are biased towards the understanding of male biology," Dulac says. "The diagnostics of diseases is way weaker in women than men."

That means the treatment isn't necessarily as good for women as men, she says, including what is known and understood about pain medication.

"So pain medication doesn't work well in women," Dulac says. "It works way better in men. It's true for almost all diseases that I know. Why? because you have a science that is dominated by males."

Although some in the scientific community contend that females are not interesting, or are too complicated because of their hormonal variations, Dulac says that's simply not true.

"There's absolutely no reason to decide 50% of life forms are interesting and the other 50% are not interesting. What about looking at both?" says Dulac, who was awarded the $3 million Breakthrough Prize in Life Sciences in September for connecting specific neural mechanisms to male and female parenting behaviors.

Disease Research on Single Cells

To better understand how diseases manifest in the body's cells and tissues, many researchers are looking at single-cell biology. Cells are the most fundamental building blocks of life. Much still needs to be learned.

"A remarkable development this year is the massive use of analysis of gene expression and chromosomal regulation at the single-cell level," Dulac says.

Much is focused on the Human Cell Atlas (HCA), a global initiative to map all cells in healthy humans and to better identify which genes associated with diseases are active in a person's body. Most estimates put the number of cells in the human body at around 30 trillion.

Dulac points to work being conducted by the BRAIN Initiative Cell Census Network (BICCN), an initiative by the National Institutes of Health to come up with an atlas of cell types in mouse, human and non-human primate brains, and the Chan Zuckerberg Initiative's funding of single-cell biology projects, including those focused on single-cell analysis of inflammation.

"Our body and our brain are made of a large number of cell types," Dulac says. "The ability to explore and identify differences in gene expression and regulation in massively multiplex ways by analyzing millions of cells is extraordinarily important."

Converting Plastics into Food

Yep, you heard it right, plastics may eventually be turned into food. The Defense Advanced Research Projects Agency, better known as DARPA, is funding a project—formally titled "Production of Macronutrients from Thermally Oxo-Degraded Wastes"—and asking researchers how to do this.

"When I first heard about this challenge, I thought it was absolutely absurd," says Dr. Robert Brown, director of the Bioeconomy Institute at Iowa State University and the project's principal investigator, who is working with other research partners at the University of Delaware, Sandia National Laboratories, and the American Institute of Chemical Engineering (AIChE)/RAPID Institute.

But then Brown realized that plastics will slowly start oxidizing (taking in oxygen) and that microorganisms can then consume them. The oxidation process at room temperature is extremely slow, however, which makes plastics essentially not biodegradable, Brown says.

That changes when heat is applied at brick-pizza-oven temperatures of around 900 degrees Fahrenheit. The high temperatures get compounds to oxidize rapidly. Plastics are synthetic polymers made from petroleum: large molecules formed by linking many smaller molecules together in a chain. When heated, these polymers melt and crack into smaller molecules, which vaporize in a process called devolatilization. Air is then used to oxidize the plastics and produce oxygenated compounds (fatty acids and alcohols) that microorganisms will eat, growing into single-cell proteins that can be used as an ingredient or substitute in protein-rich foods.

"The caveat is the microorganisms must be food-safe, something that we can consume," Brown says. "Like supplemental or nutritional yeast, like we use to brew beer and to make bread or is used in Australia to make Vegemite."

What do the microorganisms look like? For any home beer brewers, it's the "gunky looking stuff you'd find at the bottom after the fermentation process," Brown says. "That's cellular biomass. Like corn grown in the field, yeast or other microorganisms like bacteria can be harvested as macro-nutrients."

Brown says DARPA's ReSource program has challenged all the project researchers to find ways for microorganisms to consume any plastics found in the waste stream coming out of a military expeditionary force, including all the packaging of food and supplies. Then the researchers aim to remake the plastic waste into products soldiers can use, including food. The project is in the first of three phases.

"We are talking about polyethylene, polypropylene, like PET plastics used in water bottles and converting that into macronutrients that are food," says Brown.

Renewed Focus on Climate Change

The Union of Concerned Scientists says carbon dioxide levels are higher today than at any point in at least 800,000 years.

"Climate science is so important for all of humankind. It is critical because the quality of life of humans on the planet depends on it."

Look for technology to help locate large-scale emitters of carbon dioxide, including sensors on satellites and artificial intelligence to optimize energy usage, especially in data centers.

Other technologies focus on alleviating the root cause of climate change: emissions of heat-trapping gases that mainly come from burning fossil fuels.

Direct air carbon capture, an emerging effort to capture carbon dioxide directly from ambient air, could play a role.

The technology is in the early stages of development and still highly uncertain, says Peter Frumhoff, director of science and policy at the Union of Concerned Scientists. "There are a lot of questions about how to do that at sufficiently low costs...and how to scale it up so you can get carbon dioxide stored in the right way," he says. The process can also be very energy intensive.

One of the oldest solutions is planting new forests, or restoring old ones, which can help convert carbon dioxide into oxygen through photosynthesis. Hence the Trillion Trees Initiative launched by the World Economic Forum. But planting trees isn't enough on its own, Frumhoff says. That's especially true given that 2020 was the year human-made materials came to outweigh all life on Earth.

More research is also going into artificial photosynthesis for solar fuels. The U.S. Department of Energy awarded $100 million in 2020 to two entities that are conducting research. Look also for improvements in battery storage capacity to help electric vehicles, as well as back-up power sources for solar and wind power, Frumhoff says.

Another method to combat climate change is solar geoengineering, also called solar radiation management, which reflects sunlight back to space. The idea stems from a volcanic eruption in 1991 that released a tremendous amount of sulfate aerosol particles into the stratosphere, reflecting the sunlight away from Earth. The planet cooled by a half degree for nearly a year, Frumhoff says. However, he acknowledges, "there's a lot of things we don't know about the potential impacts and risks" involved in this controversial approach.

Whatever the approach, scientific solutions to climate change are attracting renewed attention. Under President Trump, the White House Office of Science and Technology Policy didn't have an acting director for almost two years. Expect that to change when President-elect Joe Biden takes office.

"Climate science is so important for all of humankind," Dulac says. "It is critical because the quality of life of humans on the planet depends on it."

Staying well in the 21st century is like playing a game of chess

The control of infectious diseases was considered to be one of the “10 Great Public Health Achievements.” What we didn’t take into account was the very concept of evolution: as we built better protections, our enemies eventually boosted their attacking prowess, so soon enough we found ourselves on the defensive once again.

This article originally appeared in One Health/One Planet, a single-issue magazine that explores how climate change and other environmental shifts are increasing vulnerabilities to infectious diseases by land and by sea. The magazine probes how scientists are making progress with leaders in other fields toward solutions that embrace diverse perspectives and the interconnectedness of all lifeforms and the planet.

On July 30, 1999, the Centers for Disease Control and Prevention published a report comparing data on the control of infectious disease from the beginning of the 20th century to the end. The data showed that deaths from infectious diseases declined markedly. In the early 1900s, pneumonia, tuberculosis and diarrheal diseases were the three leading killers, accounting for one-third of total deaths in the U.S.—with 40 percent being children under five.

Mass vaccinations, the discovery of antibiotics and overall sanitation and hygiene measures eventually eradicated smallpox, beat down polio, cured cholera, nearly rid the world of tuberculosis and extended the U.S. life expectancy by 25 years. By 1997, there was a shift in population health in the U.S. such that cancer, diabetes and heart disease were now the leading causes of death.

The control of infectious diseases is considered to be one of the “10 Great Public Health Achievements.” Yet on the brink of the 21st century, new trouble was already brewing. Hospitals were seeing periodic cases of antibiotic-resistant infections. Novel viruses, or those that previously didn’t afflict humans, began to emerge, causing outbreaks of West Nile, SARS, MERS or swine flu. In the years that followed, tuberculosis made a comeback, at least in certain parts of the world. What we didn’t take into account was the very concept of evolution: as we built better protections, our enemies eventually boosted their attacking prowess, so soon enough we found ourselves on the defensive once again.

At the same time, new, previously unknown or extremely rare disorders began to rise, such as autoimmune or genetic conditions. Two decades later, scientists began thinking about health differently—not as a static achievement guaranteed to last, but as something dynamic and constantly changing—and sometimes, for the worse.

What emerged since then is a different paradigm that makes our interactions with the microbial world more like a biological chess match, says Victoria McGovern, a biochemist and program officer for the Burroughs Wellcome Fund’s Infectious Disease and Population Sciences Program. In this chess game, humans may make a clever strategic move, which could involve creating a new vaccine or a potent antibiotic, but that advantage is fleeting. At some point, the organisms we are up against could respond with a move of their own—such as developing resistance to medication or genetic mutations that attack our bodies. Simply eradicating the “opponent,” or the pathogenic microbes, as efficiently as possible isn’t enough to keep humans healthy long-term.

Instead, scientists should focus on studying the complexity of interactions between humans and their pathogens. “We need to better understand the lifestyles of things that afflict us,” McGovern says. “The solutions are going to be in understanding various parts of their biology so we can influence how they behave around our systems.”

What is being proposed will require a pivot to basic biology and other disciplines that have suffered from lack of research funding in recent years. Yet, according to McGovern, the research teams of funded proposals are answering bigger questions. “We look for people exploring questions about hosts and pathogens, and what happens when they touch, but we’re also looking for people with big ideas,” she says. For example, if one specific infection causes a chain of pathological events in the body, can other infections cause them too? And if we find a way to break that chain for one pathogen, can we play the same trick on another? “We really want to see people thinking of not just one experiment but about big implications of their work,” McGovern says.

Jonah Cool, a cell biologist, geneticist and science officer at the Chan Zuckerberg Initiative, says that it’s necessary to define what constitutes a healthy organism and how it overcomes infections or environmental assaults, such as pollution from forest fires or toxins from industrial smokestacks. An organism that catches a disease isn’t necessarily an unhealthy one, as long as it fights it off successfully—an ability that arises from the complex interplay of its genes, the immune system, age, stress levels and other factors. Modern science allows many of these factors to be measured, recorded and compared. “We need a data-driven, deep-phenotyping approach to defining healthy biological systems and their responses to insults—which can be infectious disease or environmental exposures—and their ability to navigate their way through that space,” Cool says.

Genetics and cell biology, combined with imaging techniques that allow one to see tissues and individual cells in action, will enable scientists to define and quantify what it means to be healthy at the molecular level. “As a geneticist and cell biologist, I believe in all these molecular underpinnings and how they arise in phenotypic differences in cells, genes, proteins—and how their combinations form complex cellular states,” Cool says.

Julie Graves, a physician, public health consultant and former adjunct professor of management, policy and community health at the University of Texas Health Science Center in Houston, stresses the necessity of nutritious diets. According to the Rockefeller Food Initiative, “poor diet is the leading risk factor for disease, disability and premature death in the majority of countries around the world.” Adequate nutrition is critical for maintaining human health and life. Yet Western diets are often low in essential nutrients, high in calories and heavy on processed foods. Overconsumption of these foods has contributed to high rates of obesity and chronic disease in the U.S. In fact, according to the 2018 National Health Interview Survey, more than half of American adults have at least one chronic disease, and 27 percent have more than one, which increases vulnerability to COVID-19 infections.

Further, the contamination of our food supply with various agricultural and industrial toxins—petrochemicals, pesticides, PFAS and others—has implications for morbidity, mortality, and overall quality of life. “These chemicals are insidiously in everything, including our bodies,” Graves says—and they are interfering with our normal biological functions. “We need to stop how we manufacture food,” she adds, and rid our sustenance of these contaminants.

According to the Humane Society of the United States, factory farms account for nearly 40 percent of methane emissions. Concentrated animal feeding operations, or CAFOs, may serve as breeding grounds for pandemics, scientists warn, so humans should research better ways to raise and treat livestock. Diego Rose, a professor of food and nutrition policy at Tulane University School of Public Health & Tropical Medicine, and his colleagues found that “20 percent of Americans’ diets account for about 45 percent of the environmental impacts [that come from food].” A subsequent study explored the impacts of specific foods and found that substituting chicken for beef lowers an individual’s carbon footprint by nearly 50 percent and decreases water usage by 30 percent. Notably, eating too much red meat has also been associated with a variety of illnesses.

In some communities, the option to swap food types is limited or impossible. For example, “many populations live in relative food deserts where there’s not a local grocery store that has any fresh produce,” says Louis Muglia, the president and CEO of Burroughs Wellcome. Individuals in these communities suffer from an insufficient intake of beneficial macronutrients, and they’re “probably being exposed to phenols and other toxins that are in the packaging.” An equitable, sustainable and nutritious food supply will be vital to humanity’s wellbeing in the era of climate change, unpredictable weather and spillover events.

A recent report by See Change Institute and the Climate Mental Health Network showed that people who are experiencing socioeconomic inequalities, including many people of color, contribute the least to climate change, yet they are impacted the most. For example, people in low-income communities are disproportionately exposed to vehicle emissions, Muglia says. Through its Climate Change and Human Health Seed Grants program, Burroughs Wellcome funds research that aims to understand how various factors related to climate change and environmental chemicals contribute to premature births, associated with health vulnerabilities over the course of a person’s life—and map such hot spots.

“It’s very complex, the combinations of socio-economic environment, race, ethnicity and environmental exposure, whether that’s heat or toxic chemicals,” Muglia explains. “Disentangling those things really requires a very sophisticated, multidisciplinary team. That’s what we’ve put together to describe where these hotspots are and see how they correlate with different toxin exposure levels.”

In addition to mapping the risks, researchers are developing novel therapeutics that will be crucial to our arsenal, but we will have to be smarter at designing and using them. We will need more potent, better-working monoclonal antibodies. Instead of directly attacking a pathogen, we may have to learn to stimulate the immune system, training it to fight the disease-causing microbes on its own. And rather than indiscriminately killing all bacteria with broad-spectrum drugs, we will need more targeted medications. “Instead of wiping out the entire gut flora, we will need to come up with ways that kill harmful bacteria but not healthy ones,” Graves says. Training our immune systems to recognize and react to pathogens by way of vaccination will keep us ahead of our biological opponents, too. “Continued development of vaccines against infectious diseases is critical,” says Graves.

With all of the unpredictable events that lie ahead, it is difficult to foresee what achievements in public health will be reported at the end of the 21st century. Yet, technological advances, better modeling and pursuing bigger questions in science, along with education and working closely with communities will help overcome the challenges. The Chan Zuckerberg Initiative displays an optimistic message on its website: “Is it possible to cure, prevent, or manage all diseases by the end of this century? We think so.” Cool shares the view of his employer and believes that science can get us there. Just give it some time and a chance. “It’s a big, bold statement,” he says, “but the end of the century is a long way away.”

Lina Zeldovich has written about science, medicine and technology for Popular Science, Smithsonian, National Geographic, Scientific American, Reader’s Digest, the New York Times and other major national and international publications. A Columbia J-School alumna, she has won several awards for her stories, including the ASJA Crisis Coverage Award for Covid reporting, and has been a contributing editor at Nautilus Magazine. In 2021, Zeldovich released her first book, The Other Dark Matter, published by the University of Chicago Press, about the science and business of turning waste into wealth and health. You can find her on http://linazeldovich.com/ and @linazeldovich.

Alzheimer’s prevention may be less about new drugs, more about income, zip code and education

(Left to right) Vickie Naylor, Bernadine Clay, and Donna Maxey read a memory prompt as they take part in the Sharing History through Active Reminiscence and Photo-Imagery (SHARP) study, September 20, 2017.

That your risk of Alzheimer’s disease depends on your salary, what you ate as a child, or the block where you live may seem implausible. But researchers are discovering that social determinants of health (SDOH) play an outsized role in Alzheimer’s disease and related dementias, possibly more than age, and new strategies are emerging for how to address these factors.

At the 2022 Alzheimer’s Association International Conference, a series of presentations offered evidence that a string of socioeconomic factors, such as employment status, social support networks, education and home ownership, significantly affected dementia risk, even when adjusting data for genetic risk. What’s more, memory declined more rapidly in people who earned lower wages and more slowly in people who had parents of higher socioeconomic status.

In 2020, a first-of-its-kind study in JAMA linked Alzheimer’s incidence to “neighborhood disadvantage,” which is based on SDOH indicators. Through autopsies, researchers analyzed brain tissue markers related to Alzheimer’s and found an association with these indicators. In 2022, Ryan Powell, the lead author of that study, published further findings that neighborhood disadvantage was connected with having more neurofibrillary tangles and amyloid plaques, the main pathological features of Alzheimer's disease.

As of yet, little is known about the biological processes behind this, says Powell, director of data science at the Center for Health Disparities Research at the University of Wisconsin School of Medicine and Public Health. “We know the association but not the direct causal pathway.”

The corroborative findings keep coming. In a Nature study published a few months after Powell’s study, every social determinant investigated affected Alzheimer’s risk except for marital status. The links were highest for income, education, and occupational status.

The potential for prevention is significant. One in three older adults dies with Alzheimer's or another dementia—more than breast and prostate cancers combined. Further, a 2020 report from the Lancet Commission determined that about 40 percent of dementia cases could theoretically be prevented or delayed by managing the risk factors that people can modify.

Take inactivity. In a 2022 JAMA study, older adults who took 9,800 steps daily were half as likely to develop dementia over the next seven years. Hearing loss, another risk factor that can be managed, accounts for about 9 percent of dementia cases.

Clinical trials on new Alzheimer’s medications get all the headlines, but preventing dementia through policy and public health interventions should not be underestimated. Simply slowing the course of Alzheimer’s or delaying its onset by five years would cut the incidence in half, according to the Global Council on Brain Health.

Minorities Hit the Hardest

The World Health Organization defines SDOH as “conditions in which people are born, work, live, and age, and the wider set of forces and systems shaping the conditions of daily life.”

Anyone who exists on processed food, smokes cigarettes, or skimps on sleep has heightened risks for dementia. But minority groups get hit harder. Older Black Americans are twice as likely to have Alzheimer’s or another form of dementia as white Americans; older Hispanics are about one and a half times more likely.

This is due in part to higher rates of diabetes, obesity, and high blood pressure within these communities. These diseases are linked to Alzheimer’s, and SDOH factors multiply the risks. Black and Hispanic Americans earn less on average than white Americans. As a result, they are more likely to live in neighborhoods with limited access to healthy food, medical care, and good schools, and to suffer greater exposure to noise (which impairs hearing) and air pollution, both additional risk factors for dementia.

Plus, when Black people are diagnosed with dementia, their cognitive impairment and neuropsychiatric symptoms are more advanced than in white patients. Why? Some African Americans delay seeing a doctor because of perceived discrimination and a sense they will not be heard, says Carl V. Hill, chief diversity, equity, and inclusion officer at the Alzheimer’s Association.

Misinformation about dementia is another issue in Black communities. Many people believe that Alzheimer’s is genetic or age-related, not realizing that diet and physical activity can improve brain health, Hill says.

African Americans are severely underrepresented in clinical trials for Alzheimer’s, too. So, researchers miss the opportunity to learn more about health disparities. “It’s a bioethical issue,” Hill says. “The people most likely to have Alzheimer’s aren’t included in the trials.”

The Cure: Systemic Change

People think of lifestyle as a choice but there are limitations, says Muniza Anum Majoka, a geriatric psychiatrist and assistant professor of psychiatry at Yale University, who published an overview of SDOH factors that impact dementia. “For a lot of people, those choices [to improve brain health] are not available,” she says. If you don’t live in a safe neighborhood, for example, walking for exercise is not an option.

Hill wants to see the focus of prevention shift from individual behavior change to ensuring everyone has access to the same resources. Advice about healthy eating only goes so far if someone lives in a food desert. Systemic change also means increasing the number of minority physicians and recruiting minorities in clinical drug trials so studies will be relevant to these communities, Hill says.

Based on SDOH impact research, raising education levels has the most potential to prevent dementia. One theory is that highly educated people have a greater brain reserve that enables them to tolerate pathological changes in the brain, thus delaying dementia, says Majoka. Being curious, learning new things and problem-solving also contribute to brain health, she adds. Plus, having more education may be associated with higher socioeconomic status, more access to accurate information and healthier lifestyle choices.

New Strategies

The chasm between what researchers know about brain health and how the knowledge is being applied is huge. “There’s an explosion of interest in this area. We’re just in the first steps,” says Powell. He predicts that physicians will one day manage Alzheimer’s through precision medicine customized to the patient’s specific risk factors and needs.

Raina Croff, assistant professor of neurology at Oregon Health & Science University School of Medicine, created the SHARP (Sharing History through Active Reminiscence and Photo-imagery) walking program to forestall memory loss in African Americans with mild cognitive impairment or early dementia.

Participants and their caregivers walk in historically Black neighborhoods three times a week over six months. A smart tablet provides information about “Memory Markers” they pass, such as the route of a civil rights march. People celebrate their community and culture while “brain health is running in the background,” Croff says.

Photos and memory prompts engage participants in the SHARP program.

OHSU/Kristyna Wentz-Graff

The project began in 2015 as a pilot study in Croff’s hometown of Portland, Ore., expanded to Seattle, and will soon start in Oakland, Calif. “Walking is good for slowing [brain] decline,” she says. A post-study assessment of 40 participants in 2017 showed that half had higher cognitive scores after the program; 78 percent had lower blood pressure; and 44 percent lost weight. Those with mild cognitive impairment showed the most gains. The walkers also reported improved mood and energy along with increased involvement in other activities.

It’s never too late to reap the benefits of working your brain and being socially engaged, Majoka says.

In Milwaukee, the Wisconsin Alzheimer’s Institute launched The Amazing Grace Chorus® to stave off cognitive decline in seniors. People in the early stages of Alzheimer’s practice and perform six concerts each year. The activity provides opportunities for social engagement, mental stimulation, and a support network. Among the benefits, 55 percent reported better communication at home and nearly half of participants said they got involved with more activities after participating in the chorus.

Private companies are offering intervention services to healthcare providers and insurers to manage SDOH, too. One such service, MyHello, makes calls to at-risk people to assess their needs—be it food, transportation or simply a friendly voice. Having a social support network is critical for seniors, says Majoka, noting there was a steep decline in cognitive function among isolated elders during Covid lockdowns.

About 1 in 9 Americans age 65 or older live with Alzheimer’s today. With a surge in people with the disease predicted, public health professionals have to think more broadly about resource targets and effective intervention points, Powell says.

Beyond breakthrough pills, that is. Like Dorothy in Kansas discovering that happiness was always in her own backyard, we are beginning to learn that preventing Alzheimer’s is within our reach, if only we recognize it.