Eight Big Medical and Science Trends to Watch in 2021

Promising developments underway include advancements in gene and cell therapy, better testing for COVID, and a renewed focus on climate change.

The world as we know it has forever changed. With a greater focus on science and technology than before, experts in the biotech and life sciences spaces are grappling with what comes next as SARS-CoV-2, the coronavirus that causes the COVID-19 illness, has spread and mutated across the world.

Even with vaccines being distributed, so much still remains unknown.

Jared Auclair, Technical Supervisor for Northeastern University's Life Science Testing Center in Burlington, Massachusetts, guides a COVID testing lab that cranks out thousands of coronavirus test results per day. His lab is also focused on monitoring the quality of new cell and gene therapy products coming to the market.

Here are trends Auclair and other experts are watching in 2021.

Better Diagnostic Testing for COVID

Expect improvements in COVID diagnostic testing and the ability to test at home.

There are currently three types of coronavirus tests. The molecular test, also known as the RT-PCR test, detects the virus's genetic material and is highly accurate, but it can take days to receive results. There are also antibody tests, done through a blood draw, designed to test whether you've had COVID in the past. Finally, there's the quick antigen test, which isn't as accurate as the PCR test but can identify if people are going to infect others.

Last month, Lucira Health secured the U.S. FDA Emergency Use Authorization for the first prescription molecular diagnostic test for COVID-19 that can be performed at home. On Dec. 15, the Ellume Covid-19 Home Test received authorization as the first over-the-counter COVID-19 diagnostic antigen test that can be done at home without a prescription. The test uses a nasal swab that is connected to a smartphone app and returns results in 15-20 minutes. Similarly, the BinaxNOW COVID-19 Ag Card Home Test received authorization on Dec. 16 for its 15-minute antigen test that can be used within the first seven days of onset of COVID-19 symptoms.

Home testing could affect the course of the pandemic pretty drastically, Auclair says, but there are other considerations: the type and timing of the test administered, how expensive the test is (and whether it is financially feasible for the general public), and the ability of a home test taker to accurately administer the test.

Ideally, everyone would frequently get tested, but that would mean the cost of a single home test—which is expected to be around $30 or more—would need to be much cheaper, more in the $5 range.

Auclair expects "innovations in the diagnostic space to explode" with the need for more accurate, inexpensive, quicker COVID tests. Auclair foresees innovations to be at first focused on COVID point-of-care testing, but he expects improvements within diagnostic testing for other types of viruses and diseases too.

"We still need more testing to get the pandemic under control, likely over the next 12 months," Auclair says. "The vaccine roll-out will not eliminate the need for testing until late 2021 or early 2022."

Rise of mRNA-based Vaccines and Therapies

A year ago, vaccines weren't being talked about like they are today.

"But clearly vaccines are the talk of the town," Auclair says. "The reason we got a vaccine so fast was there was so much money thrown at it."

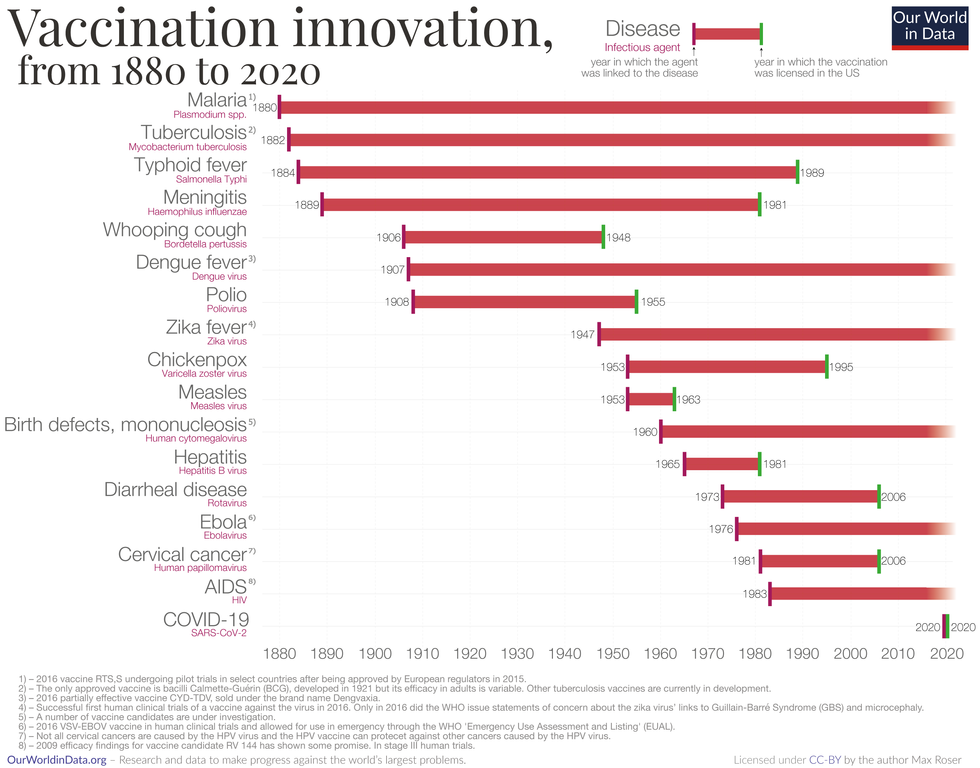

A vaccine can take more than 10 years to fully develop, according to the World Economic Forum. Prior to the new COVID vaccines, which were remarkably developed and tested in under a year, the fastest vaccine ever made was for mumps -- and it took four years.

"Normally you have to produce a protein. This is typically done in eggs. It takes forever," says Catherine Dulac, a neuroscientist and developmental biologist at Harvard University who won the 2021 Breakthrough Prize in Life Sciences. "But an mRNA vaccine just enabled [us] to skip all sorts of steps [compared with burdensome conventional manufacturing] and go directly to a product that can be injected into people."

Non-traditional medicines based on genetic research are in their infancy. With mRNA-based vaccines hitting the market for the first time, look for more vaccines to be developed for whatever viruses we don't currently have vaccines for, like dengue virus and Ebola, Auclair says.

"There's a whole bunch of things that could be explored now that haven't been thought about in the past," Auclair says. "It could really be a game changer."

Vaccine Innovation over the last 140 years.

Max Roser/Our World in Data (Creative Commons license)

Advancements in Cell and Gene Therapies

CRISPR, a type of gene editing, is going to be huge in 2021, especially after the Nobel Prize in Chemistry was awarded to Emmanuelle Charpentier and Jennifer Doudna in October for pioneering the technology.

Right now, CRISPR isn't completely precise and can cause deletions or rearrangements of DNA.

"It's definitely not there yet, but over the next year it's going to get a lot closer and you're going to have a lot of momentum in this space," Auclair says. "CRISPR is one of the technologies I'm most excited about and 2021 is the year for it."

Gene therapies are typically used on rare genetic diseases. They work by replacing faulty genes with corrected DNA codes.

"Cell and gene therapies are really where the field is going," Auclair says. "There is so much opportunity.... For the first time in our life, in our existence as a species, we may actually be able to cure disease by using [techniques] like gene editing, where you cut in and out of pieces of DNA that caused a disease and put in healthy DNA."

For example, Spinal Muscular Atrophy is a rare genetic disorder that leads to muscle weakness, paralysis and death in children by age two. As of last year, afflicted children can take a gene therapy drug called Zolgensma that targets the missing or nonworking SMN1 gene with a new copy.

Another recent breakthrough uses gene editing for sickle cell disease. Victoria Gray, a mom from Mississippi who was exclusively followed by NPR, was the first person in the United States to be successfully treated for the genetic disorder with the help of CRISPR. She has continued to improve since her landmark treatment on July 2, 2019 and her once-debilitating pain has greatly eased.

"This is really a life-changer for me," she told NPR. "It's magnificent."

Look out also for improvements in cell therapies, but on a much smaller scale.

Cell therapies remove immune cells from a person or use cells from a donor. The cells are modified or cultured in a lab, multiplied by the millions and then injected back into patients. These include stem cell therapies as well as CAR-T cell therapies, which are typically therapies of last resort and used in cancers like leukemia, Auclair says.

"You are going to see bigger leaps in gene therapies," Auclair says. "It's being heavily researched and we understand more about how to do gene therapies. Cell therapies will lie behind it a bit because they are so much more difficult to work with right now."

More Monoclonal Antibody Therapies

Look for more customized drugs to personalize medicine even more in the biotechnology space.

In 2019, the FDA anticipated receiving more than 200 Investigational New Drug (IND) applications in 2020. But with COVID, the number of INDs skyrocketed to 6,954 applications for the 2020 fiscal year, which ended September 30, 2020, according to the FDA's online tracker. Look for antibody therapies to play a bigger role.

Monoclonal antibodies are lab-grown proteins that mimic or enhance the immune system's response to fight off pathogens, like viruses, and they've been used to treat cancer. Now they are being used to treat patients with COVID-19.

President Donald Trump received a monoclonal antibody cocktail, called REGEN-COV2, which later received FDA emergency use authorization.

A newer type of monoclonal antibody therapy is Antibody-Drug Conjugates, also called ADCs. It's something we're going to be hearing a lot about in 2021, Auclair says.

"Antibody-Drug Conjugates is a monoclonal antibody with a chemical, we consider it a chemical warhead on it," Auclair says. "The monoclonal antibody binds to a specific antigen in your body or protein and delivers a chemical to that location and kills the infected cell."

Moving Beyond Male-Centric Lab Testing

Scientific testing for biology has, until recently, focused on testing males. Dulac, a Howard Hughes Medical Institute investigator and professor of molecular and cellular biology at Harvard University, challenged that idea to find brain circuitry behind sex-specific behaviors.

"For the longest time, until now, all the model systems in biology, are male," Dulac says. "The idea is if you do testing on males, you don't need to do testing on females."

Clinical models, as well as fundamental research, rely on male animals. Because biological research is always done on male models, Dulac says, the outcomes and understanding in biology are geared towards understanding male biology.

"All the drugs currently on the market and diagnoses of diseases are biased towards the understanding of male biology," Dulac says. "The diagnostics of diseases is way weaker in women than men."

That means the treatment isn't necessarily as good for women as men, she says, including what is known and understood about pain medication.

"So pain medication doesn't work well in women," Dulac says. "It works way better in men. It's true for almost all diseases that I know. Why? Because you have a science that is dominated by males."

Although some in the scientific community contend that females are not interesting or are too complicated with their hormonal variations, Dulac says that's simply not true.

"There's absolutely no reason to decide 50% of life forms are interesting and the other 50% are not interesting. What about looking at both?" says Dulac, who was awarded the $3 million Breakthrough Prize in Life Sciences in September for connecting specific neural mechanisms to male and female parenting behaviors.

Disease Research on Single Cells

To better understand how diseases manifest in the body's cells and tissues, many researchers are looking at single-cell biology. Cells are the most fundamental building blocks of life. Much still needs to be learned.

"A remarkable development this year is the massive use of analysis of gene expression and chromosomal regulation at the single-cell level," Dulac says.

Much is focused on the Human Cell Atlas (HCA), a global initiative to map all cells in healthy humans and to better identify which genes associated with diseases are active in a person's body. Most estimates put the number of cells around 30 trillion.

Dulac points to work being conducted by the BRAIN Initiative Cell Census Network (BICCN), an initiative by the National Institutes of Health to come up with an atlas of cell types in mouse, human and non-human primate brains, and the Chan Zuckerberg Initiative's funding of single-cell biology projects, including those focused on single-cell analysis of inflammation.

"Our body and our brain are made of a large number of cell types," Dulac says. "The ability to explore and identify differences in gene expression and regulation in massively multiplex ways by analyzing millions of cells is extraordinarily important."

Converting Plastics into Food

Yep, you heard it right, plastics may eventually be turned into food. The Defense Advanced Research Projects Agency, better known as DARPA, is funding a project—formally titled "Production of Macronutrients from Thermally Oxo-Degraded Wastes"—and asking researchers how to do this.

"When I first heard about this challenge, I thought it was absolutely absurd," says Dr. Robert Brown, director of the Bioeconomy Institute at Iowa State University and the project's principal investigator, who is working with other research partners at the University of Delaware, Sandia National Laboratories, and the American Institute of Chemical Engineers (AIChE)/RAPID Institute.

But then Brown realized plastics will slowly start oxidizing, or taking in oxygen, and microorganisms can then consume them. The oxidation process at room temperature is extremely slow, however, which makes plastics essentially not biodegradable, Brown says.

That changes when heat is applied at brick pizza oven-like temperatures of around 900 degrees Fahrenheit. The high temperatures get compounds to oxidize rapidly. Plastics are synthetic polymers made from petroleum: large molecules formed by linking many smaller molecules together in a chain. Heated, these polymers will melt and crack into smaller molecules, causing them to vaporize in a process called devolatilization. Air is then used to oxidize the plastics and produce oxygenated compounds (fatty acids and alcohols) that microorganisms will eat and grow into single-cell proteins that can be used as an ingredient or substitute in protein-rich foods.

"The caveat is the microorganisms must be food-safe, something that we can consume," Brown says. "Like supplemental or nutritional yeast, like we use to brew beer and to make bread or is used in Australia to make Vegemite."

What do the microorganisms look like? For any home beer brewers, it's the "gunky looking stuff you'd find at the bottom after the fermentation process," Brown says. "That's cellular biomass. Like corn grown in the field, yeast or other microorganisms like bacteria can be harvested as macro-nutrients."

Brown says DARPA's ReSource program has challenged all the project researchers to find ways for microorganisms to consume any plastics found in the waste stream coming out of a military expeditionary force, including all the packaging of food and supplies. Then the researchers aim to remake the plastic waste into products soldiers can use, including food. The project is in the first of three phases.

"We are talking about polyethylene, polypropylene, like PET plastics used in water bottles and converting that into macronutrients that are food," says Brown.

Renewed Focus on Climate Change

The Union of Concerned Scientists says carbon dioxide levels are higher today than at any point in at least 800,000 years.

Look for technology to help locate large-scale emitters of carbon dioxide, including sensors on satellites and artificial intelligence to optimize energy usage, especially in data centers.

Other technologies focus on alleviating the root cause of climate change: emissions of heat-trapping gasses that mainly come from burning fossil fuels.

Direct air carbon capture, an emerging effort to capture carbon dioxide directly from ambient air, could play a role.

The technology is in the early stages of development and still highly uncertain, says Peter Frumhoff, director of science and policy at Union of Concerned Scientists. "There are a lot of questions about how to do that at sufficiently low costs...and how to scale it up so you can get carbon dioxide stored in the right way," he says, and it can be very energy intensive.

One of the oldest solutions is planting new forests, or restoring old ones, which can help convert carbon dioxide into oxygen through photosynthesis. Hence the Trillion Trees Initiative launched by the World Economic Forum. Trees are only part of the solution, because planting trees isn't enough on its own, Frumhoff says. That's especially true since 2020 was the year that human-made materials came to outweigh all life on Earth.

More research is also going into artificial photosynthesis for solar fuels. The U.S. Department of Energy awarded $100 million in 2020 to two entities that are conducting research. Look also for improvements in battery storage capacity to help electric vehicles, as well as back-up power sources for solar and wind power, Frumhoff says.

Another method to combat climate change is solar geoengineering, also called solar radiation management, which reflects sunlight back to space. The idea stems from a volcanic eruption in 1991 that released a tremendous amount of sulfate aerosol particles into the stratosphere, reflecting the sunlight away from Earth. The planet cooled by a half degree for nearly a year, Frumhoff says. However, he acknowledges, "there's a lot of things we don't know about the potential impacts and risks" involved in this controversial approach.

Whatever the approach, scientific solutions to climate change are attracting renewed attention. Under President Trump, the White House Office of Science and Technology Policy didn't have an acting director for almost two years. Expect that to change when President-elect Joe Biden takes office.

"Climate science is so important for all of humankind," Dulac says. "It is critical because the quality of life of humans on the planet depends on it."

Leaders at Google and other companies are trying to get workers to return to the office, saying remote and hybrid work disrupt work-life boundaries and well-being. These arguments conflict with research on remote work and wellness.

Many leaders at top companies are trying to get workers to return to the office. They say remote and hybrid work are bad for their employees’ mental well-being and lead to a sense of social isolation, meaninglessness, and lack of work-life boundaries, so we should just all go back to office-centric work.

One example is Google, where the company’s leadership is defending its requirement of mostly in-office work for all staff as necessary to protect social capital, meaning people’s connections to and trust in one another. That’s despite a survey of over 1,000 Google employees showing that two-thirds feel unhappy about being forced to work in the office three days per week. In internal meetings and public letters, many have threatened to leave, and some are already quitting to go to other companies with more flexible options.

Last month, GM rolled out a policy similar to Google’s, but had to backtrack because of intense employee opposition. The same is happening in some places outside of the U.S. For instance, three-fifths of all Chinese employers are refusing to offer permanent remote work options, according to a survey this year from The Paper.

For their claims that remote work hurts well-being, some of these office-centric traditionalists cite a number of prominent articles. For example, Arthur Brooks claimed in an essay that “aggravation from commuting is no match for the misery of loneliness, which can lead to depression, substance abuse, sedentary behavior, and relationship damage, among other ills.” An article in Forbes reported that over two-thirds of employees who work from home at least part of the time had trouble getting away from work at the end of the day. And Fast Company has a piece about how remote work can “exacerbate existing mental health issues” like depression and anxiety.

For his part, author Malcolm Gladwell has also championed a swift return to the office, saying there is a “core psychological truth, which is we want you to have a feeling of belonging and to feel necessary…I know it’s a hassle to come into the office, but if you’re just sitting in your pajamas in your bedroom, is that the work life you want to live?”

These arguments may sound logical to some, but they fly in the face of research and my own experience as a behavioral scientist and as a consultant to Fortune 500 companies. In these roles, I have seen the pitfalls of in-person work, which can be just as problematic, if not more so. Remote work is not without its own challenges, but I have helped 21 companies implement a series of simple steps to address them.

Research finds that remote work is actually better for you

The trouble with the articles described above - and claims by traditionalist business leaders and gurus - stems from a sneaky misdirection. They decry the negative impact of remote and hybrid work for wellbeing. Yet they gloss over the damage to wellbeing caused by the alternative, namely office-centric work.

It’s like comparing remote and hybrid work to a state of leisure. Sure, people would feel less isolated if they could hang out and have a beer with their friends instead of working. They could take care of their existing mental health issues if they could visit a therapist. But that’s not in the cards. What’s in the cards is office-centric work. That means the frustration of a long commute to the office, sitting at your desk in an often-uncomfortable and oppressive open office for at least 8 hours, having a sad desk lunch and unhealthy snacks, sometimes at an insanely expensive cost and, for making it through this series of insults, you’re rewarded with more frustration while commuting back home.

So what happens when we compare apples to apples? That’s when we need to hear from the horse’s mouth: namely, surveys of employees themselves, who experienced both in-office work before the pandemic, and hybrid and remote work after COVID struck.

Consider a 2022 survey by Cisco of 28,000 full-time employees around the globe. Nearly 80 percent of respondents say that remote and hybrid work improved their overall well-being: that applies to 83 percent of Millennials, 82 percent of Gen Z, 76 percent of Gen X, and 66 percent of Baby Boomers. The vast majority of respondents felt that working remotely improved their work-life balance.

Much of that improvement stemmed from saving time due to not needing to commute and having a more flexible schedule: 90 percent saved 4 to 8 hours or more per week. What did they do with that extra time? The top choice for almost half was spending more time with family, friends and pets, which certainly helped address the problem of isolation from the workplace. Indeed, three-quarters of them report that working from home improved their family relationships, and 51 percent strengthened their friendships. Twenty percent used the freed up hours for self-care.

Of the small number who report their work-life balance has not improved or even worsened, the number one reason is the difficulty of disconnecting from work, but 82 percent report that working from anywhere has made them happier. Over half say that remote work decreased their stress levels.

Other surveys back up Cisco’s findings. For example, a 2022 Future Forum survey compared knowledge workers who worked full-time in the office, in a hybrid modality, and fully remote. It found that full-time in-office workers felt the least satisfied with work-life balance, hybrid workers were in the middle, and fully remote workers felt most satisfied. The same distribution applied to questions about stress and anxiety. A mental health website called Tracking Happiness found in a 2022 survey of over 12,000 workers that fully remote employees report a happiness level about 20 percent greater than office-centric ones. Another survey by CNBC in June found that fully remote workers are more often very satisfied with their jobs than workers who are fully in-person.

Academic peer-reviewed research provides further support. Consider a 2022 study published in the International Journal of Environmental Research and Public Health of bank workers who worked on the same tasks of advising customers either remotely or in-person. It found that fully remote workers experienced higher meaningfulness, self-actualization, happiness, and commitment than in-person workers. Another study, published by the National Bureau of Economic Research, reported that hybrid workers, compared to office-centric ones, experienced higher satisfaction with work and had 35 percent more job retention.

What about the supposed burnout crisis associated with remote work? Indeed, burnout is a concern. A survey by Deloitte finds that 77 percent of workers experienced burnout at their current job. Gallup came up with a slightly lower number of 67 percent in its survey. But guess what? Both of those surveys are from 2018, long before the era of widespread remote work.

By contrast, in a Gallup survey in late 2021, 58 percent of respondents reported less burnout. An April 2021 McKinsey survey found burnout in 54 percent of Americans and 49 percent globally. A September 2021 survey by The Hartford reported 61 percent burnout. Arguably, the increase in full or part-time remote opportunities during the pandemic helped to address feelings of burnout, rather than increasing them. Indeed, that finding aligns with the earlier surveys and peer-reviewed research suggesting remote and hybrid work improves wellbeing.

Remote work isn’t perfect – here’s how to fix its shortcomings

Still, burnout is a real problem for hybrid and remote workers, as it is for in-office workers. Employers need to offer mental health benefits with online options to help employees address these challenges, regardless of where they’re working.

Moreover, while they’re better overall for wellbeing, remote and hybrid work arrangements do have specific disadvantages around work-life separation. To address work-life issues, I advise the clients I have helped transition to hybrid and remote work to establish norms and policies that focus on clear expectations and setting boundaries.

Some people expect their Slack or Microsoft Teams messages to be answered within an hour, while others check Slack once a day. Some believe email requires a response within three hours, and others feel three days is fine. As a result of such uncertainty and lack of clarity about what’s appropriate, too many people feel uncomfortable disconnecting and not replying to messages or doing work tasks after hours. That might stem from a fear of not meeting their boss’s expectations or not wanting to let their colleagues down.

To solve this problem, companies need to establish and incentivize clear expectations and boundaries. They should develop policies and norms around response times for different channels of communication. They also need to clarify work-life boundaries – for example, the frequency and types of unusual circumstances that will require employees to work outside of regular hours.

Moreover, for working at home and collaborating with others, there’s sometimes an unhealthy expectation that once you start your workday in your home office chair, you’ll work continuously while sitting there (except for your lunch break). That’s not how things work in the office, which has physical and mental breaks built in throughout the day. You take 5-10 minutes to walk from one meeting to another, or you go to get your copies from the printer and chat with a coworker on the way.

Those and similar physical and mental breaks, research shows, decrease burnout, improve productivity, and reduce mistakes. That’s why companies should strongly encourage employees to take at least a 10-minute break every hour during remote work. At least half of those breaks should involve physical activity, such as stretching or walking around, to counteract the dangerous effects of prolonged sitting. Other breaks should be restorative mental activities, such as meditation, brief naps, walking outdoors, or whatever else feels restorative to you.

To facilitate such breaks, my client organizations such as the University of Southern California’s Information Sciences Institute shortened hour-long meetings to 50 minutes and half-hour meetings to 25 minutes, to give everyone – both in-person and remote workers – a mental and physical break and transition time.

Very few people will be reluctant to have shorter meetings. After that works out, move to other aspects of setting boundaries and expectations. Doing so will require helping team members get on the same page and reduce conflicts and tensions. By setting clear expectations, you’ll address the biggest challenge for wellbeing for remote and hybrid work: establishing clear work-life boundaries.

A company has slashed the cost of assessing a person's genome to just $100. With lower costs - and as other genetic tools mature and evolve - a wave of new therapies could be coming in the near future.

In May 2022, Californian biotech Ultima Genomics announced that its UG 100 platform was capable of sequencing an entire human genome for just $100, a landmark moment in the history of the field. The announcement was particularly remarkable because few had previously heard of the company, a relative unknown in an industry long dominated by global giant Illumina, which controls about 80 percent of the world’s sequencing market.

Ultima’s secret was to completely revamp many technical aspects of the way Illumina has traditionally deciphered DNA. The process usually involves first splitting the double helix DNA structure into single strands, then breaking these strands into short fragments which are laid out on a glass surface called a flow cell. When this flow cell is loaded into the sequencing machine, color-coded tags are attached to each individual base letter. A laser scans the bases individually while a camera simultaneously records the color associated with them, a process which is repeated until every single fragment has been sequenced.

Instead, Ultima has found a series of shortcuts to slash the cost and boost efficiency. “Ultima Genomics has developed a fundamentally new sequencing architecture designed to scale beyond conventional approaches,” says Josh Lauer, Ultima’s chief commercial officer.

This ‘new architecture’ is a series of subtle but highly impactful tweaks to the sequencing process ranging from replacing the costly flow cell with a silicon wafer which is both cheaper and allows more DNA to be read at once, to utilizing machine learning to convert optical data into usable information.

To put the $100 genome in perspective, back in 2012 the cost of sequencing a single genome was around $10,000, a price tag which dropped to $1,000 a few years later. Before Ultima’s announcement, the cost of sequencing an individual genome was around $600.

While Ultima’s new machine is not widely available yet, Illumina’s response has been rapid. Last month the company unveiled the NovaSeq X series, which it describes as its fastest, most cost-efficient sequencing platform yet, capable of sequencing genomes at $200, with further price cuts likely to follow.

But what will the rapidly tumbling cost of sequencing actually mean for medicine? “Well to start with, obviously it’s going to mean more people getting their genome sequenced,” says Michael Snyder, professor of genetics at Stanford University. “It'll be a lot more accessible to people.”

At the moment, sequencing is mainly limited to certain cancer patients, where it is used to inform treatment options, and individuals with undiagnosed illnesses. In the past, initiatives such as SeqFirst have attempted to further widen access to genome sequencing based on growing amounts of research illustrating the potential benefits of the technology in healthcare. Several studies have found that nearly 12 percent of healthy people who have their genome sequenced then discover they have a variant pointing to a heightened risk of developing a disease that can be monitored, treated or prevented.

“While whole genome sequencing is not yet widely used in the U.S., it has started to come into pediatric critical care settings such as newborn intensive care units,” says Professor Michael Bamshad, who heads the genetic medicine division in the University of Washington’s pediatrics department. “It is also being used more often in outpatient clinical genetics services, particularly when conventional testing fails to identify explanatory variants.”

But the cost of sequencing itself is only one part of the price tag. The subsequent clinical interpretation and genetic counselling services often come to several thousand dollars, a cost which insurers are not always willing to pay.

As a result, while Bamshad and others hope that the arrival of the $100 genome will create new opportunities to use genetic testing in innovative ways, the most immediate benefits are likely to come in the realm of research.

Bigger Data

Cheaper sequencing is likely to advance scientific research in numerous ways, for example by making it possible to collect data on much larger patient groups. This will be a major boon to scientists working on complex, heterogeneous diseases such as schizophrenia or depression, where many genes are involved which all exert subtle effects and there is substantial variance across the patient population. Bigger studies could help scientists identify subgroups of patients in whom the disease appears to be driven by similar gene variants, and who can then be more precisely targeted with specific drugs.

David Curtis, a genetics professor at University College London, says that scientists studying these illnesses have previously been forced to rely on genome-wide association studies which are limited because they only identify common gene variants. “We might see a significant increase in the number of large association studies using sequence data,” he says. “It would be far preferable to use this because it provides information about rare, potentially functional variants.”

Cheaper sequencing will also aid researchers working on diseases which have traditionally been underfunded. Bamshad cites cystic fibrosis, a condition which affects around 40,000 children and adults in the U.S., as one particularly pertinent example.

“Funds for gene discovery for rare diseases are very limited,” he says. “We’re one of three sites that did whole genome sequencing on 5,500 people with cystic fibrosis, but our statistical power is limited. A $100 genome would make it much more feasible to sequence everyone in the U.S. with cystic fibrosis and make it more likely that we discover novel risk factors and pathways influencing clinical outcomes.”

For progressive diseases that are more common, like cancer and type 2 diabetes, as well as neurodegenerative conditions like multiple sclerosis and ALS, geneticists will be able to go even further and afford to sequence individual tumor cells or neurons at different time points. This will enable them to analyze how individual DNA modifications, like methylation, change as the disease develops.

In the case of cancer, this could help scientists understand how tumors evolve to evade treatments. Within a clinical setting, the ability to sequence not just one, but many different cells across a patient’s tumor could point to the combination of treatments which offer the best chance of eradicating the entire cancer.

“What happens at the moment with a solid tumor is you treat with one drug, and maybe 80 percent of that tumor is susceptible to that drug,” says Neil Ward, vice president and general manager in the EMEA region for genomics company PacBio. “But the other 20 percent of the tumor has already got mutations that make it resistant, which is probably why a lot of modern therapies extend life for sadly only a matter of months rather than curing, because they treat a big percentage of the tumor, but not the whole thing. So going forwards, I think that we will see genomics play a huge role in cancer treatments, through using multiple modalities to treat someone's cancer.”

If insurers can figure out the economics, Snyder even foresees a future where at a certain age, all of us can qualify for annual sequencing of our blood cells to search for early signs of cancer or the potential onset of other diseases like type 2 diabetes.

“There are companies already working on looking for cancer signatures in methylated DNA,” he says. “If it was determined that you had early stage cancer, pre-symptomatically, that could then be validated with targeted MRI, followed by surgery or chemotherapy. It makes a big difference catching cancer early. If there were signs of type 2 diabetes, you could start taking steps to mitigate your glucose rise, and possibly prevent it or at least delay the onset.”

This would already revolutionize the way we seek to prevent a whole range of illnesses, but others feel that the $100 genome could also usher in even more powerful and controversial preventative medicine schemes.

Newborn Screening

In the eyes of Kári Stefánsson, the Icelandic neurologist who has been a visionary for so many advances in the field of human genetics over the last 25 years, the falling cost of sequencing means it will be feasible to sequence the genomes of every baby born.

“We have recently done an analysis of genomes in Iceland and the UK Biobank, and in 4 percent of people you find mutations that lead to serious disease, that can be prevented or dealt with,” says Stefánsson, CEO of deCODE genetics, a subsidiary of the pharmaceutical company Amgen. “This could transform our healthcare systems.”

As well as identifying newborns with rare diseases, this kind of genomic information could be used to compute a person’s risk score for developing chronic illnesses later in life. If for example, they have a higher than average risk of colon or breast cancer, they could be pre-emptively scheduled for annual colonoscopies or mammograms as soon as they hit adulthood.

To a limited extent, this is already happening. In the UK, Genomics England has launched the Newborn Genomes Programme, which plans to undertake whole-genome sequencing of up to 200,000 newborn babies, with the aim of enabling the early identification of rare genetic diseases.

However, some scientists feel that it is tricky to justify sequencing the genomes of apparently healthy babies, given the data privacy issues involved. They point out that we still know too little about the links which can be drawn between genetic information at birth, and risk of chronic illness later in life.

“I think there are very difficult ethical issues involved in sequencing children if there are no clear and immediate clinical benefits,” says Curtis. “They cannot consent to this process. I have not had my own genome sequenced and I would not have wanted my parents to have agreed to this. I don’t see that sequencing children for the sake of some vague, ill-defined benefits could ever be justifiable.”

Curtis points out that making this data available carries many inherent risks. It may fall into the hands of insurance companies, and it could even be used by governments for surveillance purposes.

“Genetic sequence data is very useful indeed for forensic purposes. Its full potential has yet to be realized but identifying rare variants could provide a quick and easy way to find relatives of a perpetrator,” he says. “If large numbers of people had been sequenced in a healthcare system then it could be difficult for a future government to resist the temptation to use this as a resource to investigate serious crimes.”

While sequencing becoming more widely available will present difficult ethical and moral challenges, it will offer many benefits for society as a whole. Cheaper sequencing will help boost the diversity of genomic datasets which have traditionally been skewed towards individuals of white, European descent, meaning that much of the actionable medical information which has come out of these studies is not relevant to people of other ethnicities.

Ward predicts that in the coming years, the growing amount of genetic information will ultimately change the outcomes for many with rare, previously incurable illnesses.

“If you're the parent of a child that has a susceptible or a suspected rare genetic disease, their genome will get sequenced, and while sadly that doesn’t always lead to treatments, it’s building up a knowledge base so companies can spring up and target that niche of a disease,” he says. “As a result there’s a whole tidal wave of new therapies that are going to come to market over the next five years, as the genetic tools we have mature and evolve.”