Elizabeth Holmes Through the Director’s Lens

Kira Peikoff was the editor-in-chief of Leaps.org from 2017 to 2021. As a journalist, her work has appeared in The New York Times, Newsweek, Nautilus, Popular Mechanics, The New York Academy of Sciences, and other outlets. She is also the author of four suspense novels that explore controversial issues arising from scientific innovation: Living Proof, No Time to Die, Die Again Tomorrow, and Mother Knows Best. Peikoff holds a B.A. in Journalism from New York University and an M.S. in Bioethics from Columbia University. She lives in New Jersey with her husband and two young sons. Follow her on Twitter @KiraPeikoff.

Elizabeth Holmes.

"The Inventor," a chronicle of Theranos's storied downfall, premiered recently on HBO. Leapsmag reached out to director Alex Gibney, whom The New York Times has called "one of America's most successful and prolific documentary filmmakers," for his perspective on Elizabeth Holmes and the world she inhabited.

Do you think Elizabeth Holmes was a charismatic sociopath from the start — or is she someone who had good intentions, over-promised, and began the lies to keep her business afloat, a "fake it till you make it" entrepreneur like Thomas Edison?

I'm not qualified to say if EH was or is a sociopath. I don't think she started Theranos as a scam whose only purpose was to make money. If she had done so, she surely would have taken more money for herself along the way. I do think that she had good intentions and that she, as you say, "began the lies to keep her business afloat." ([Reporter John] Carreyrou's book points out that those lies began early.) I think that the Edison comparison is instructive for a lot of reasons.

First, Edison was the original "fake-it-till-you-make-it" entrepreneur. That puts this kind of behavior in the mainstream of American business. By saying that, I am NOT endorsing the ethic, just the opposite. As one Enron executive mused about the mendacity there, "Was it fraud or was it bad marketing?" That gives you a sense of how baked-in the "fake it" sensibility is.

I think EH shares one other thing with Edison, which is a huge ego coupled with a talent for storytelling as long as she is the heroic, larger-than-life main character. It's interesting that EH calls her initial device "Edison." Edison was the world's most famous "inventor," both because of the devices that came out of his shop and because of his ability for "self-invention." As Randall Stross notes in "The Wizard of Menlo Park," he was the first celebrity businessman. In addition to her "good intentions," EH was certainly motivated by fame and glory, and many of her lies were in service to those goals.

Having a thirst for fame and a noble cause enabled her to think it was OK to lie in service of those goals. That doesn't excuse the lies. But those noble goals may have allowed EH to excuse them for herself or, more perniciously, to make believe that they weren't lies at all. This is where we get into scary psychological territory.

But rather than thinking of it as freakish, I think it's more productive to think of it as an exaggeration of the way we all lie to others and to ourselves. That's the point of including the Dan Ariely experiment with the dice. In that experiment, most of the subjects cheated more when they thought they were doing it for a good cause. Even more disturbing, that "good cause" allowed them to lie much more effectively because they had come to believe they weren't doing anything wrong. As it turns out, economics isn't a rational practice; it's the practice of rationalizing.

Where EH and Edison differ is that Edison had a firm grip on reality. He knew he could find a way to make the incandescent lightbulb work. There is no evidence that EH was close to making her "Edison" work. But rather than face reality (and possibly adjust her goals) she pretended that her dream was real. That kind of "over-promising" or "bold vision" is one thing when you are making a prototype in the lab. It's a far more serious matter when you are using a deeply flawed system on real patients. EH can tell herself that she had to do that (Walgreens was ready to walk away if she hadn't "gone live") or else Theranos would have run out of money.

But look at the calculation she made: she thought it was worth putting lives at risk in order to make her dream come true. Now we're getting into the realm of the sociopath. But my experience leads me to believe that -- as in the case of the Milgram experiment -- most people don't do terrible things right away, they come to crimes gradually as they become more comfortable with bigger and bigger rationalizations. At Theranos, the more valuable the company became, the bigger grew the lies.

The two whistleblowers come across as courageous heroes, going up against the powerful and intimidating company. The contrast between their youth and lack of power and the old elite backers of Theranos is staggering, and yet justice triumphed. Were the whistleblowers hesitant or afraid to appear in the film, or were they eager to share their stories?

By the time I got to them, they were willing and eager to tell their stories, once I convinced them that I would honor their testimony. In the case of Erika and Tyler, they were nudged to participate by John Carreyrou, in whom they had enormous trust.

Why do you think so many elite veterans of politics and venture capitalism succumbed to Holmes' narrative in the first place, without checking into the details of its technology or financials?

The reasons are all in the film. First, Channing Robertson and many of the old men on her board were clearly charmed by her and maybe attracted to her. They may have rationalized their attraction by convincing themselves it was for a good cause! Second, as Dan Ariely tells us, we all respond to stories -- more than graphs and data -- because they stir us emotionally. EH was a great storyteller. Third, the story of her as a female inventor and entrepreneur in male-dominated Silicon Valley is a tale that they wanted to invest in.

There may have been other factors. EH was very clever about the way she put together an ensemble of credibility. How could Channing Robertson, George Shultz, Henry Kissinger and Jim Mattis all be wrong? And when Walgreens put the Wellness Centers in stores, investors like Rupert Murdoch assumed that Walgreens must have done its due diligence. But they hadn't!

It's simply crazy that no one demanded to see an objective demonstration of the magic box. But that blind faith, as it turns out, is more a part of capitalism than we have been taught.

Do you think that Roger Parloff deserves any blame for the glowing Fortune story on Theranos, since he appears in the film to blame himself? Or was he just one more victim of Theranos's fraud?

He put her on the cover of Fortune so he deserves some blame for the fraud. He still blames himself. That willingness to hold himself to account shows how seriously he takes the job of a journalist. Unlike Elizabeth, Roger has the honesty and moral integrity to admit that he made a mistake. He owned up to it and published a mea culpa. That said, Roger was also a victim because Elizabeth lied to him.

Do you think investors in Silicon Valley, with their FOMO attitudes and deep pockets, are vulnerable to making the same mistake again with a shiny new startup, or has this saga been a sober reminder to do their due diligence first?

Many of the mistakes made with Theranos were the same mistakes made with Enron. We must learn to recognize that we are, by nature, trusting souls. Knowing that should lead us to a guiding slogan: "trust but verify."

The irony of Holmes dancing to "U Can't Touch This" is almost too perfect. How did you find that footage?

It was leaked to us.

Holmes is facing up to 20 years in prison for federal fraud charges, but Vanity Fair recently reported that she is seeking redemption, taking meetings with filmmakers for a possible documentary to share her "real" story. What do you think will become of Holmes in the long run?

It's usually a mistake to handicap a trial. My guess is that she will be convicted and do some prison time. But maybe she can convince jurors -- the way she convinced journalists, her board, and her investors -- that, on account of her noble intentions, she deserves to be found not guilty. "Somewhere, over the rainbow…"

After the trial, and possibly prison, I'm sure that EH will use her supporters (like Tim Draper) to find a way to use the virtual currency of her celebrity to rebrand herself and launch something new. Fitzgerald famously said that "there are no second acts in American lives." That may be the stupidest thing he ever said.

Donald Trump failed at virtually every business he ever embarked on. But he became a celebrity for being a fake businessman and used that celebrity -- and phony expertise -- to become president of the United States. Elizabeth Holmes is now famous for her fraud. Who better to host the re-boot of "The Apprentice." And then?

"You Can't Touch This!"

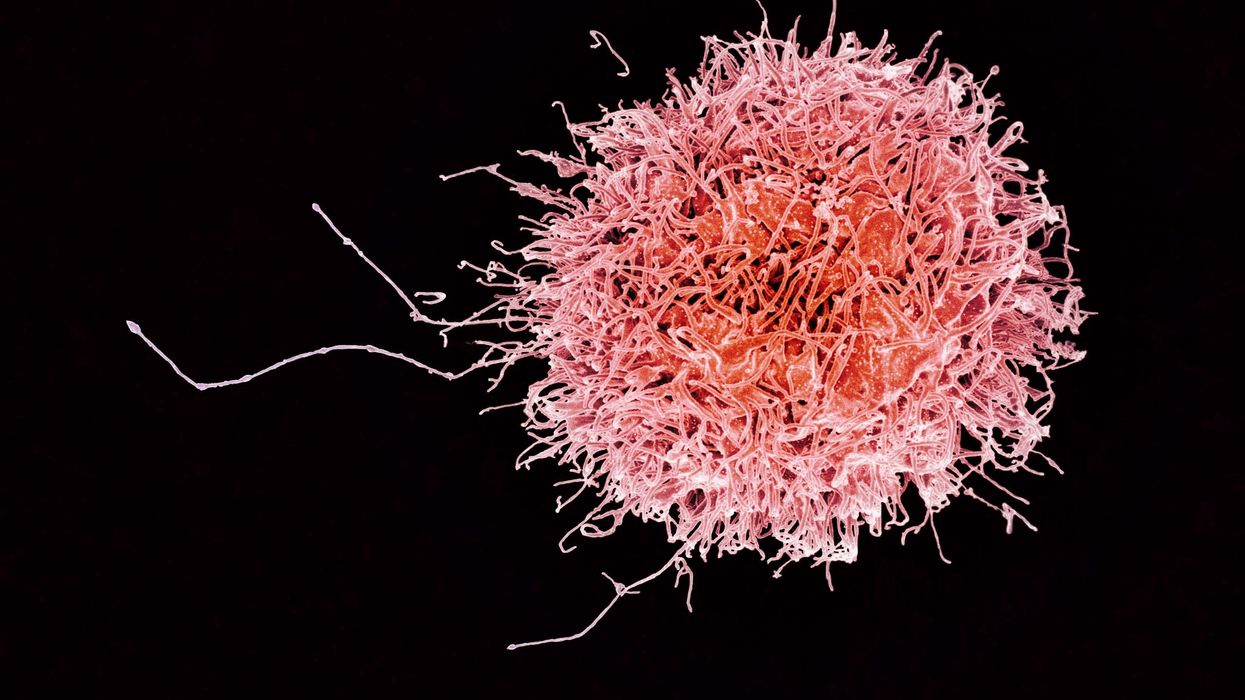

On today’s episode of Making Sense of Science, I’m honored to be joined by Dr. Paul Song, a physician, oncologist, progressive activist and biotech chief medical officer. Through his company, NKGen Biotech, Dr. Song is leveraging the power of patients’ own immune systems by supercharging the body’s natural killer cells to make new treatments for Alzheimer’s and cancer.

Whereas other treatments for Alzheimer’s focus directly on reducing the build-up of proteins in the brain such as amyloid and tau in patients with mild cognitive impairment, NKGen is seeking to help patients that much of the rest of the medical community has written off as hopeless cases: those with late-stage Alzheimer’s. And in small studies, NKGen has shown remarkable results, even improvement in the symptoms of people with these very progressed forms of Alzheimer’s, above and beyond slowing down the disease.

In the realm of cancer, Dr. Song is similarly setting his sights on another group of patients for whom treatment options are few and far between: people with solid tumors. Whereas some gradual progress has been made in treating blood cancers such as certain leukemias in the past few decades, solid tumors have been even more of a challenge. But Dr. Song’s approach of using natural killer cells to treat solid tumors is promising. You may have heard of CAR-T, which uses genetic engineering to introduce cells into the body that have a particular function to help treat a disease. NKGen focuses on other means to enhance the 40-plus receptors of natural killer cells, making them more receptive and sensitive to picking out cancer cells.

Paul Y. Song, MD is currently CEO and Vice Chairman of NKGen Biotech. Dr. Song’s last clinical role was Asst. Professor at the Samuel Oschin Cancer Center at Cedars Sinai Medical Center.

Dr. Song served as the very first visiting fellow on healthcare policy in the California Department of Insurance in 2013. He is currently on the advisory board of the Pritzker School of Molecular Engineering at the University of Chicago and a board member of Mercy Corps, The Center for Health and Democracy, and Gideon’s Promise.

Dr. Song graduated with honors from the University of Chicago and received his MD from George Washington University. He completed his residency in radiation oncology at the University of Chicago where he served as Chief Resident and did a brachytherapy fellowship at the Institute Gustave Roussy in Villejuif, France. He was also awarded an ASTRO research fellowship in 1995 for his research in radiation inducible gene therapy.

With Dr. Song’s leadership, NKGen Biotech’s work on natural killer cells represents cutting-edge science leading to key findings and important pieces of the puzzle for treating two of humanity’s most intractable diseases.

Show links

- Paul Song LinkedIn

- NKGen Biotech on Twitter - @NKGenBiotech

- NKGen Website: https://nkgenbiotech.com/

- NKGen appoints Paul Song

- Patient Story: https://pix11.com/news/local-news/long-island/promising-new-treatment-for-advanced-alzheimers-patients/

- FDA Clearance: https://nkgenbiotech.com/nkgen-biotech-receives-ind-clearance-from-fda-for-snk02-allogeneic-natural-killer-cell-therapy-for-solid-tumors/

- Q3 earnings data: https://www.nasdaq.com/press-release/nkgen-biotech-inc.-reports-third-quarter-2023-financial-results-and-business

Is there a robot nanny in your child's future?

Some researchers argue that active, playful engagement with a "robot nanny" for a few hours a day is better than several hours in front of a TV or with an iPad.

From ROBOTS AND THE PEOPLE WHO LOVE THEM: Holding on to Our Humanity in an Age of Social Robots by Eve Herold. Copyright © 2024 by the author and reprinted by permission of St. Martin’s Publishing Group.

Could the use of robots take some of the workload off teachers, add engagement among students, and ultimately invigorate learning by taking it to a new level that is more consonant with the everyday experiences of young people? Do robots have the potential to become full-fledged educators and further push human teachers out of the profession? The preponderance of opinion on this subject is that, just as AI and medical technology are not going to eliminate doctors, robot teachers will never replace human teachers. Rather, they will change the job of teaching.

A 2017 study led by Google executive James Manyika suggested that skills like creativity, emotional intelligence, and communication will always be needed in the classroom and that robots aren’t likely to provide them at the same level that humans naturally do. But robot teachers do bring advantages, such as a depth of subject knowledge that teachers can’t match, and they’re great for student engagement.

The teacher and robot can complement each other in new ways, with the teacher facilitating interactions between robots and students. So far, this is the case with teaching “assistants” being adopted now in China, Japan, the U.S., and Europe. In this scenario, the robot (usually the SoftBank child-size robot NAO) is a tool for teaching mainly science, technology, engineering, and math (the STEM subjects), but the teacher is very involved in planning, overseeing, and evaluating progress. The students get an entertaining and enriched learning experience, and some of the teaching load is taken off the teacher. At least, that’s what researchers have been able to observe so far.

To be sure, there are some powerful arguments for having robots in the classroom. A not-to-be-underestimated one is that robots “speak the language” of today’s children, who have been steeped in technology since birth. These children are adept at navigating a media-rich environment that is highly visual and interactive. They are plugged into the Internet 24-7. They consume music, games, and huge numbers of videos on a weekly basis. They expect to be dazzled because they are used to being dazzled by more and more spectacular displays of digital artistry. Education has to compete with social media and the entertainment vehicles of students’ everyday lives.

Another compelling argument for teaching robots is that they help prepare students for the technological realities they will encounter in the real world when robots will be ubiquitous. From childhood on, they will be interacting and collaborating with robots in every sphere of their lives from the jobs they do to dealing with retail robots and helper robots in the home. Including robots in the classroom is one way of making sure that children of all socioeconomic backgrounds will be better prepared for a highly automated age, when successfully using robots will be as essential as reading and writing. We’ve already crossed this threshold with computers and smartphones.

Students need multimedia entertainment with their teaching. This is something robots can provide through their ability to connect to the Internet and act as a centralized host to videos, music, and games. Children also need interaction, something robots can deliver up to a point, but which humans can surpass. The education of a child is not just intended to make them technologically functional in a wired world, it’s to help them grow in intellectual, creative, social, and emotional ways. When considered through this perspective, it opens the door to questions concerning just how far robots should go. Robots don’t just teach and engage children; they’re designed to tug at their heartstrings.

It’s no coincidence that many toy makers and manufacturers are designing cute robots that look and behave like real children or animals, says Turkle. “When they make eye contact and gesture toward us, they predispose us to view them as thinking and caring,” she has written in The Washington Post. “They are designed to be cute, to provide a nurturing response” from the child. As mentioned previously, this nurturing experience is a powerful vehicle for drawing children in and promoting strong attachment. But should children really love their robots?

The problem, once again, is that a child can be lulled into thinking that she’s in an actual relationship, when a robot can’t possibly love her back. If adults have these vulnerabilities, what might such asymmetrical relationships do to the emotional development of a small child? Turkle notes that while we tend to ascribe a mind and emotions to a socially interactive robot, “simulated thinking may be thinking, but simulated feeling is never feeling, and simulated love is never love.”

Always a consideration is the fact that in the first few years of life, a child’s brain is undergoing rapid growth and development that will form the foundation of their lifelong emotional health. These formative experiences are literally shaping the child’s brain, their expectations, and their view of the world and their place in it. In Alone Together, Turkle asks: What are we saying to children about their importance to us when we’re willing to outsource their care to a robot? A child might be superficially entertained by the robot while his self-esteem is systematically undermined.

Still, in the case of robot nannies in the home, is active, playful engagement with a robot for a few hours a day any more harmful than several hours in front of a TV or with an iPad? Some, like Xiong, regard interacting with a robot as better than mere passive entertainment. iPal’s manufacturers say that their robot can’t replace parents or teachers and is best used by three- to eight-year-olds after school, while they wait for their parents to get off work. But as robots become ever-more sophisticated, they’re expected to perform more of the tasks of day-to-day care and to be much more emotionally advanced. There is no question children will form deep attachments to some of them. And research has emerged showing that there are clear downsides to child-robot relationships.

Some studies, performed by Turkle and fellow MIT colleague Cynthia Breazeal, have revealed a darker side to the child-robot bond. Turkle has reported extensively on these studies in The Washington Post and in her book Alone Together. Most children love robots, but some act out their inner bully on the hapless machines, hitting and kicking them and otherwise trying to hurt them. The trouble is that the robot can’t fight back, teaching children that they can bully and abuse without consequences. As in any other robot relationship, such harmful behavior could carry over into the child’s human relationships.

And, ironically, it turns out that communicative machines don’t actually teach kids good communication skills. It’s well known that parent-child communication in the first three years of life sets the stage for a very young child’s intellectual and academic success. Verbal back-and-forth with parents and care-givers is like fuel for a child’s growing brain. One article that examined several types of play and their effect on children’s communication skills, published in JAMA Pediatrics in 2015, showed that babies who played with electronic toys—like the popular robot dog Aibo—show a decrease in both the quantity and quality of their language skills.

Anna V. Sosa of the Child Speech and Language Lab at Northern Arizona University studied twenty-six ten- to sixteen-month-old infants to compare the growth of their language skills after they played with three types of toys: electronic toys like a baby laptop and talking farm; traditional toys like wooden puzzles and building blocks; and books read aloud by their parents. The play that produced the most growth in verbal ability was having books read to them by a caregiver, followed by play with traditional toys. Language gains after playing with electronic toys came dead last. This form of play involved the least use of adult words, the least conversational turn-taking, and the fewest verbalizations from the children. While the study sample was small, it’s not hard to extrapolate that no electronic toy or even more capable robot could supply the intimate responsiveness of a parent reading stories to a child, explaining new words, answering the child’s questions, and modeling the kind of back-and-forth interaction that promotes empathy and reciprocity in relationships.

***

Most experts acknowledge that robots can be valuable educational tools. But they can’t make a child feel truly loved, validated, and valued. That’s the job of parents, and when parents abdicate this responsibility, it’s not only the child who misses out on one of life’s most profound experiences.

We really don’t know how the tech-savvy children of today will ultimately process their attachments to robots and whether they will be excessively predisposed to choosing robot companionship over that of humans. It’s possible their techno literacy will draw for them a bold line between real life and a quasi-imaginary history with a robot. But it will be decades before we see long-term studies culminating in sufficient data to help scientists, and the rest of us, to parse out the effects of a lifetime spent with robots.

This is an excerpt from ROBOTS AND THE PEOPLE WHO LOVE THEM: Holding on to Our Humanity in an Age of Social Robots by Eve Herold. The book will be published on January 9, 2024.