Genetic Test Scores Predicting Intelligence Are Not the New Eugenics

"A world where people are slotted according to their inborn ability – well, that is Gattaca. That is eugenics."

This was the assessment of Dr. Catherine Bliss, a sociologist who wrote a new book on social science genetics, when asked by MIT Technology Review about polygenic scores that can predict a person's intelligence or performance in school. Like a credit score, a polygenic score is a statistical tool that combines a lot of information about a person's genome into a single number. Fears about using polygenic scores for genetic discrimination are understandable, given this country's ugly history of using the science of heredity to justify atrocities like forcible sterilization. But polygenic scores are not the new eugenics. And rushing to discuss polygenic scores in dystopian terms only contributes to widespread public misunderstanding about genetics.

Let's begin with some background on how polygenic scores are developed. In a genome-wide association study, researchers conduct millions of statistical tests to identify small differences in people's DNA sequence that are correlated with differences in a target outcome (beyond what can be attributed to chance or ancestry differences). Successful studies of this sort require enormous sample sizes, but companies like 23andMe are now contributing genetic data from their consumers to research studies, and national biorepositories like U.K. Biobank have put genetic information from hundreds of thousands of people online. When applied to studying blood lipids or myopia, this kind of study strikes people as a straightforward and uncontroversial scientific tool. But it can also be conducted for cognitive and behavioral outcomes, like how many years of school a person has completed. When researchers have finished a genome-wide association study, they are left with a dataset with millions of rows (one for each genetic variant analyzed) and one column with the correlations between each variant and the outcome being studied.
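The following toy sketch in Python makes the "one row per variant" output concrete. It assumes genotypes are coded as allele counts (0, 1, or 2 copies of a given variant). Following the description above, it uses a plain Pearson correlation as each variant's association statistic. Real genome-wide association studies typically fit a regression for each variant and adjust for ancestry, and this sketch deliberately omits both steps. The function names, variant IDs and data are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences of numbers.

    Assumes neither sequence is constant (otherwise the denominator is zero).
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def toy_gwas(genotypes, outcome):
    """Return one (variant_id, correlation) row per variant.

    genotypes: dict mapping a hypothetical variant ID to a list of allele
               counts (0, 1, or 2), one entry per study participant.
    outcome:   list of outcome values (e.g. years of schooling) in the
               same participant order.
    No ancestry adjustment or significance testing is done here.
    """
    return [(variant, pearson(counts, outcome))
            for variant, counts in genotypes.items()]


# Four hypothetical participants, two hypothetical variants.
genotypes = {"rs_a": [0, 1, 2, 1], "rs_b": [2, 1, 0, 1]}
years_of_school = [10, 12, 14, 12]
for variant, r in toy_gwas(genotypes, years_of_school):
    print(variant, round(r, 3))
```

An actual study runs the same per-variant computation over millions of variants and hundreds of thousands of people. That scale is why such enormous samples are needed to separate tiny real associations from chance.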

The trick to polygenic scoring is to use these results and apply them to people who weren't participants in the original study. Measure the genes of a new person, weight each one of her millions of genetic variants by its correlation with educational attainment from a genome-wide association study, and then simply add everything up into a single number. Voila! -- you've created a polygenic score for educational attainment. On its face, the idea of "scoring" a person's genotype does immediately suggest Gattaca-type applications. Can we now start screening embryos for their "inborn ability," as Bliss called it? Can we start genotyping toddlers to identify the budding geniuses among them?
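The scoring step is just a weighted sum. The minimal Python sketch below makes three assumptions. First, the weights come from a prior association study like the one described above; published scores usually use regression effect sizes rather than raw correlations. Second, the new person's genotype is coded as allele counts. Third, the variant IDs and weights are invented for illustration.

```python
def polygenic_score(person_counts, weights):
    """Weighted sum of one person's allele counts.

    person_counts: dict mapping a hypothetical variant ID to that person's
                   allele count (0, 1, or 2).
    weights:       dict mapping variant ID to its weight from a prior
                   genome-wide association study.
    Variants that lack a weight are ignored.
    """
    return sum(weights[variant] * count
               for variant, count in person_counts.items()
               if variant in weights)


# Made-up weights for three variants; real scores can use millions.
weights = {"rs1": 0.5, "rs2": -0.25, "rs3": 0.125}
new_person = {"rs1": 2, "rs2": 1, "rs3": 0, "rs4": 2}  # rs4 has no weight
print(polygenic_score(new_person, weights))  # 0.75
```

Nothing in this arithmetic measures a trait directly. It only aggregates statistical associations estimated in some other group of people.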

The short answer is no. Here are four reasons why dystopian projections about polygenic scores are out of touch with the current science:

First, a polygenic score currently predicts the life outcomes of an individual child with a great deal of uncertainty. The amount of uncertainty around polygenic predictions will decrease in the future, as genetic discovery samples get bigger and genetic studies include more of the variation in the genome, including rare variants that are particular to a few families. But for now, knowing a child's polygenic score predicts his ultimate educational attainment about as well as knowing his family's income, and slightly worse than knowing how far his mother went in school. These pieces of information are also readily available about children before they are born, but no one is writing breathless think-pieces about the dystopian outcomes that will result from knowing whether a pregnant woman graduated from college.

Second, using polygenic scoring for embryo selection requires parents to create embryos using reproductive technology, rather than conceiving them by having sex. The prediction that many women will endure medically unnecessary IVF in order to select the embryo with the highest polygenic score glosses over the invasiveness, indignity, pain, and heartbreak that these hormonal and surgical procedures can entail.

Third, and counterintuitively, a polygenic score might be using DNA to measure aspects of the child's environment. Remember, a child inherits her DNA from her parents, who typically also shape the environment she grows up in. And children's environments respond to their unique personalities and temperaments. One Icelandic study found that parents' polygenic scores predicted their children's educational attainment, even if the score was constructed using only the half of the parental genome that the child didn't inherit. For example, imagine mom has genetic variant X that makes her more likely to smoke during her pregnancy. Prenatal exposure to nicotine, in turn, affects the child's neurodevelopment, leading to behavior problems in school. The school responds to his behavioral problems with suspension, causing him to miss out on instructional content. A genome-wide association study will collapse this long and winding causal path into a simple correlation: "genetic variant X is correlated with academic achievement." But a child's polygenic score, which includes variant X, will partly reflect his likelihood of being exposed to adverse prenatal and school environments.

Finally, the phrase "DNA tests for IQ" makes for an attention-grabbing headline, but it's scientifically meaningless. As I've written previously, it makes sense to talk about a bacterial test for strep throat, because strep throat is a medical condition defined as having streptococcal bacteria growing in the back of your throat. If your strep test is positive, you have strep throat, no matter how serious your symptoms are. But a polygenic score is not a test "for" IQ, because intelligence is not defined at the level of someone's DNA. It doesn't matter how high your polygenic score is if you can't reason abstractly or learn from experience. Equating your intelligence, a cognitive capacity that is tested behaviorally, with your polygenic score, a number that is a weighted sum of genetic variants discovered to be statistically associated with educational attainment in a hypothesis-free data mining exercise, is misleading about what intelligence is and is not.

So, if we're not going to build a Gattaca-style genetic hierarchy, what are polygenic scores good for? They are not useless. In fact, they give scientists a valuable new tool for studying how to improve children's lives. The task for many scientists like me, who are interested in understanding why some children do better in school than other children, is to disentangle correlations from causation. The best way to do that is to run an experiment where children are randomized to environments, but often a true experiment is unethical or impractical. You can't randomize children to be born to a teenage mother or to go to school with inexperienced teachers. By statistically controlling for some of the relevant genetic differences between people using a polygenic score, scientists are better able to identify potential environmental causes of differences in children's life outcomes. As we have seen with other methods from genetics, like twin studies, understanding genes illuminates the environment.

Research that examines genetics in relation to social inequality, such as differences in higher education outcomes, will obviously remind people of the horrors of the eugenics movement. Wariness regarding how genetic science will be applied is certainly warranted. But polygenic scores are not pure measures of "inborn ability," and genome-wide association studies of human intelligence and educational attainment are not inevitably ushering in a new eugenics age.

Thanks to safety precautions taken during the COVID-19 pandemic, a strain of influenza has been completely eliminated.

If you were one of the millions who masked up, washed your hands thoroughly and socially distanced, pat yourself on the back—you may have helped change the course of human history.

Scientists say that, thanks to these safety precautions, a strain of influenza has been completely eliminated. The precautions were introduced in early 2020 to slow the spread of the novel coronavirus that causes COVID-19. This marks the first time in human history that a virus has been wiped out through non-pharmaceutical interventions, such as masking and social distancing, rather than through vaccines.

The flu shot, explained

Influenza A and B viruses cause most human flu illnesses and drive the annual flu season.

Centers for Disease Control and Prevention

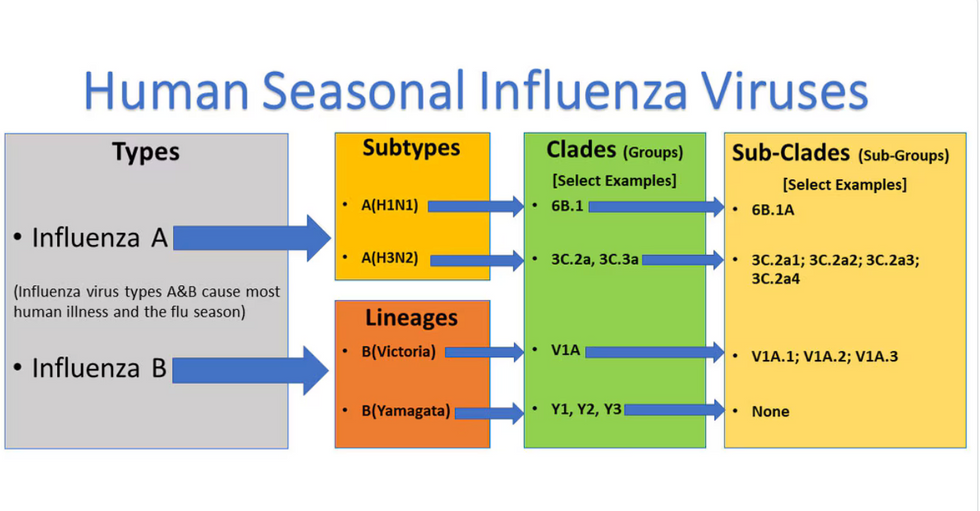

For more than a decade, flu shots have protected against two types of the influenza virus: type A and type B. There are four types of influenza virus in existence (A, B, C, and D), but only types A, B, and C are known to infect humans. Types A and B cause the seasonal epidemics we call flu season, and only type A has caused pandemics. In other words, if you catch the flu during flu season, you’re most likely sick with flu type A or B.

Most flu shots contain inactivated (killed) influenza virus. These inactivated viruses can’t cause sickness in humans. When given as part of a vaccine, however, they teach a person’s immune system to recognize and kill those viruses when they’re encountered in the wild.

Each spring, a panel of experts gives a recommendation to the US Food and Drug Administration on which strains of each flu type to include in that year’s flu vaccine. The choice depends on what surveillance data says is circulating and on what the experts believe is likely to cause the most illness during the upcoming flu season. For the past decade, Americans have had access to vaccines that protect against two strains of influenza A and two lineages of influenza B, known as the Victoria lineage and the Yamagata lineage. But this year, the seasonal flu shot won’t include the Yamagata lineage, because it is no longer circulating among humans.

How Yamagata Disappeared

Flu surveillance data from the Global Initiative on Sharing All Influenza Data (GISAID) shows that the Yamagata lineage of flu type B has not been sequenced since April 2020.

Nature

Experts believe that the Yamagata lineage was already in decline before the pandemic, likely because it was naturally less capable of infecting large numbers of people than the other strains. When the COVID-19 pandemic hit, the resulting safety precautions, such as social distancing, isolating, hand-washing, and masking, were enough to drive the lineage to extinction.

Because the Yamagata lineage hasn’t been circulating since 2020, the FDA elected to remove it from the seasonal flu vaccine. As a result, the annual flu shot will be trivalent (three-component) rather than quadrivalent (four-component) for the first time since 2012.

Should I still get the flu shot?

The flu shot will protect against fewer strains this year—but that doesn’t mean we should skip it. Influenza places a substantial health burden on the United States every year, responsible for hundreds of thousands of hospitalizations and tens of thousands of deaths. The flu shot has been shown to prevent millions of illnesses each year (more than six million during the 2022-2023 season). And while it’s still possible to catch the flu after getting the flu shot, studies show that people are far less likely to be hospitalized or die when they’re vaccinated.

Another unexpected benefit of dropping the Yamagata lineage from the seasonal vaccine? It could make flu vaccine production faster and let manufacturers produce more doses. That could help countries facing flu vaccine shortages, potentially saving many more lives.

After his grandmother’s dementia diagnosis, one man invented a snack to keep her healthy and hydrated.

Founder Lewis Hornby and his grandmother Pat, sampling Jelly Drops—an edible gummy containing water and life-saving electrolytes.

On a visit to his grandmother’s nursing home in 2016, college student Lewis Hornby made a shocking discovery: dehydration is a common (and dangerous) problem among seniors, especially those who have been diagnosed with dementia.

Hornby’s grandmother, Pat, had found it increasingly difficult to keep up her water intake as she got older, a common issue among seniors. As we age, our body composition changes and we naturally hold less water than younger adults or children. As a result, it’s easier to become dehydrated quickly if fluids aren’t replenished. Our sense of thirst also weakens with age, so the body becomes less reliable at signaling that we need to rehydrate. Together, these changes create a perfect storm that commonly leads to dehydration. In Pat’s case, her dehydration was so severe that she nearly died.

When Lewis Hornby visited his grandmother at her nursing home afterward, he learned that dehydration especially affects people with dementia. They often don’t feel thirst cues at all, or may not recognize how to use cups correctly. But while dementia patients often don’t remember to drink water, it seemed to Hornby that they had less trouble remembering to eat, particularly candy.

Hornby wanted to create a solution for elderly people who struggled to keep up their fluid intake. He spent the next eighteen months researching and designing a solution and securing funding for his project. In 2019, Hornby won a sizable grant from the Alzheimer’s Society, a UK-based care and research charity for people with dementia and their caregivers. Together, through the charity’s Accelerator Program, they created a bite-sized, sugar-free, edible jelly drop that looked and tasted like candy. The candy, called Jelly Drops, contained 95% water plus electrolytes, important minerals that are often lost during dehydration. The final product launched in 2020 and was an immediate success. The drops gave elderly people extra hydration and helped keep dementia patients safe, since dehydration commonly leads to confusion, hospitalization, and sometimes even death.

Not only did Jelly Drops quickly become a favorite snack among dementia patients in the UK, but they also provided an extra boost of hydration to hospital workers during the pandemic. In NHS coronavirus wards, patients infected with the virus were regularly given Jelly Drops to keep their fluid levels normal. Staff members snacked on them as well, because long shifts in the personal protective equipment (PPE) they were required to wear often left them feeling parched.

In April 2022, Jelly Drops launched in the United States. The company continues to donate 1% of its profits to help fund Alzheimer’s research.