AI and you: Is the promise of personalized nutrition apps worth the hype?

Personalized nutrition apps could provide valuable data to people trying to eat healthier, though more research must be done to show effectiveness.

As a type 2 diabetic, Michael Snyder has long been interested in how blood sugar levels vary from one person to another in response to the same food, and whether a more personalized approach to nutrition could help tackle the rapidly cascading levels of diabetes and obesity in much of the western world.

Eight years ago, Snyder, who directs the Center for Genomics and Personalized Medicine at Stanford University, decided to put his theories to the test. In the 2000s continuous glucose monitoring, or CGM, had begun to revolutionize the lives of diabetics, both type 1 and type 2. Using spherical sensors which sit on the upper arm or abdomen – with tiny wires that pierce the skin – the technology allowed patients to gain real-time updates on their blood sugar levels, transmitted directly to their phone.

It gave Snyder an idea for his research at Stanford. Applying the same technology to a group of apparently healthy people, and looking for ‘spikes’ or sudden surges in blood sugar known as hyperglycemia, could provide a means of observing how their bodies reacted to an array of foods.

“We discovered that different foods spike people differently,” he says. “Some people spike to pasta, others to bread, others to bananas, and so on. It’s very personalized and our feeling was that building programs around these devices could be extremely powerful for better managing people’s glucose.”

Unbeknown to Snyder at the time, thousands of miles away, a group of Israeli scientists at the Weizmann Institute of Science were doing exactly the same experiments. In 2015, they published a landmark paper which used CGM to track the blood sugar levels of 800 people over several days, showing that the biological response to identical foods can vary wildly. Like Snyder, they theorized that giving people a greater understanding of their own glucose responses, so they spend more time in the normal range, may reduce the prevalence of type 2 diabetes.

“At the moment 33 percent of the U.S. population is pre-diabetic, and 70 percent of those pre-diabetics will become diabetic,” says Snyder. “Those numbers are going up, so it’s pretty clear we need to do something about it.”

Fast forward to 2022, and both teams have converted their ideas into subscription-based dietary apps which use artificial intelligence to offer data-informed nutritional and lifestyle recommendations. Snyder’s spinoff, January AI, combines CGM information with heart rate, sleep, and activity data to advise on foods to avoid and the best times to exercise. DayTwo, a start-up which utilizes the findings of the Weizmann Institute of Science, obtains microbiome information by sequencing stool samples, and combines this with blood glucose data to rate ‘good’ and ‘bad’ foods for a particular person.

“CGMs can be used to devise personalized diets,” says Eran Elinav, an immunology professor and microbiota researcher at the Weizmann Institute of Science in addition to serving as a scientific consultant for DayTwo. “However, this process can be cumbersome. Therefore, in our lab we created an algorithm, based on data acquired from a big cohort of people, which can accurately predict post-meal glucose responses on a personal basis.”

The commercial potential of such apps is clear. DayTwo, which markets its product to corporate employers and health insurers rather than individual consumers, recently raised $37 million in funding. But the underlying science continues to generate intriguing findings.

Last year, Elinav and colleagues published a study on 225 individuals with pre-diabetes which found that they achieved better blood sugar control when they followed a personalized diet based on DayTwo’s recommendations, compared to a Mediterranean diet. The journal Cell just released a new paper from Snyder’s group which shows that different types of fibre benefit people in different ways.

“The idea is you hear different fibres are good for you,” says Snyder. “But if you look at fibres they’re all over the map—it’s like saying all animals are the same. The responses are very individual. For a lot of people [a type of fibre called] arabinoxylan clearly reduced cholesterol while the fibre inulin had no effect. But in some people, it was the complete opposite.”

Eight years ago, Stanford's Michael Snyder began studying how continuous glucose monitors could be used by patients to gain real-time updates on their blood sugar levels, transmitted directly to their phone.

The Snyder Lab, Stanford Medicine

Because of studies like these, interest in precision nutrition approaches has exploded in recent years. In January, the National Institutes of Health announced that it is spending $170 million on a five-year, multi-center initiative that aims to develop algorithms to predict which diets are most suitable for a particular individual. The algorithms will draw on a whole range of data sources, from blood sugar to sleep, exercise, stress, microbiome and even genomic information.

“There's so many different factors which influence what you put into your mouth but also what happens to different types of nutrients and how that ultimately affects your health, which means you can’t have a one-size-fits-all set of nutritional guidelines for everyone,” says Bruce Y. Lee, professor of health policy and management at the City University of New York Graduate School of Public Health.

With the falling costs of genomic sequencing, other precision nutrition clinical trials are choosing to look at whether our genomes alone can yield key information about what our diets should look like, an emerging field of research known as nutrigenomics.

The ASPIRE-DNA clinical trial at Imperial College London is aiming to see whether particular genetic variants can be used to classify individuals into two groups, those who are more glucose sensitive to fat and those who are more sensitive to carbohydrates. By following a tailored diet based on these sensitivities, the trial aims to see whether it can prevent people with pre-diabetes from developing the disease.

But while much hope is riding on these trials, even precision nutrition advocates caution that the field remains in the very earliest of stages. Lars-Oliver Klotz, professor of nutrigenomics at Friedrich-Schiller-University in Jena, Germany, says that while the overall goal is to identify means of avoiding nutrition-related diseases, genomic data alone is unlikely to be sufficient to prevent obesity and type 2 diabetes.

“Genome data is rather simple to acquire these days as sequencing techniques have dramatically advanced in recent years,” he says. “However, the predictive value of just genome sequencing is too low in the case of obesity and prediabetes.”

Others say that while genomic data can yield useful information in terms of how different people metabolize different types of fat and specific nutrients such as B vitamins, there is a need for more research before it can be utilized in an algorithm for making dietary recommendations.

“I think it’s a little early,” says Eileen Gibney, a professor at University College Dublin. “We’ve identified a limited number of gene-nutrient interactions so far, but we need more randomized control trials of people with different genetic profiles on the same diet, to see whether they respond differently, and if that can be explained by their genetic differences.”

Some start-ups have already come unstuck for promising too much, or pushing recommendations which are not based on scientifically rigorous trials. The world of precision nutrition apps was dubbed a ‘Wild West’ by some commentators after the founders of uBiome, a start-up which offered nutritional recommendations based on information obtained from sequencing stool samples, were charged with fraud last year. The weight-loss app Noom, which was valued at $3.7 billion in May 2021, has been criticized on Twitter by a number of users who claimed that its recommendations led them to develop eating disorders.

With precision nutrition apps marketing their technology at healthy individuals, question marks have also been raised about the value which can be gained through non-diabetics monitoring their blood sugar through CGM. While some small studies have found that wearing a CGM can make overweight or obese individuals more motivated to exercise, there is still a lack of conclusive evidence showing that this translates to improved health.

However, independent researchers remain intrigued by the technology, and say that the wealth of data generated through such apps could be used to help further stratify the different types of people who become at risk of developing type 2 diabetes.

“CGM not only enables a longer sampling time for capturing glucose levels, but will also capture lifestyle factors,” says Robert Wagner, a diabetes researcher at University Hospital Düsseldorf. “It is probable that it can be used to identify many clusters of prediabetic metabolism and predict the risk of diabetes and its complications, but maybe also specific cardiometabolic risk constellations. However, we still don’t know which forms of diabetes can be prevented by such approaches and how feasible and long-lasting such self-feedback dietary modifications are.”

Snyder himself has now been wearing a CGM for eight years, and he credits the insights it provides with helping him to manage his own diabetes. “My CGM still gives me novel insights into what foods and behaviors affect my glucose levels,” he says.

He is now looking to run clinical trials with his group at Stanford to see whether following a precision nutrition approach based on CGM and microbiome data, combined with other health information, can be used to reverse signs of pre-diabetes. If it proves successful, January AI may look to incorporate microbiome data in future.

“Ultimately, what I want to do is be able to take people’s poop samples, maybe a blood draw, and say, ‘Alright, based on these parameters, this is what I think is going to spike you,’ and then have a CGM to test that out,” he says. “Getting very predictive about this, so right from the get go, you can have people better manage their health and then use the glucose monitor to help follow that.”

Coronavirus Risk Calculators: What You Need to Know

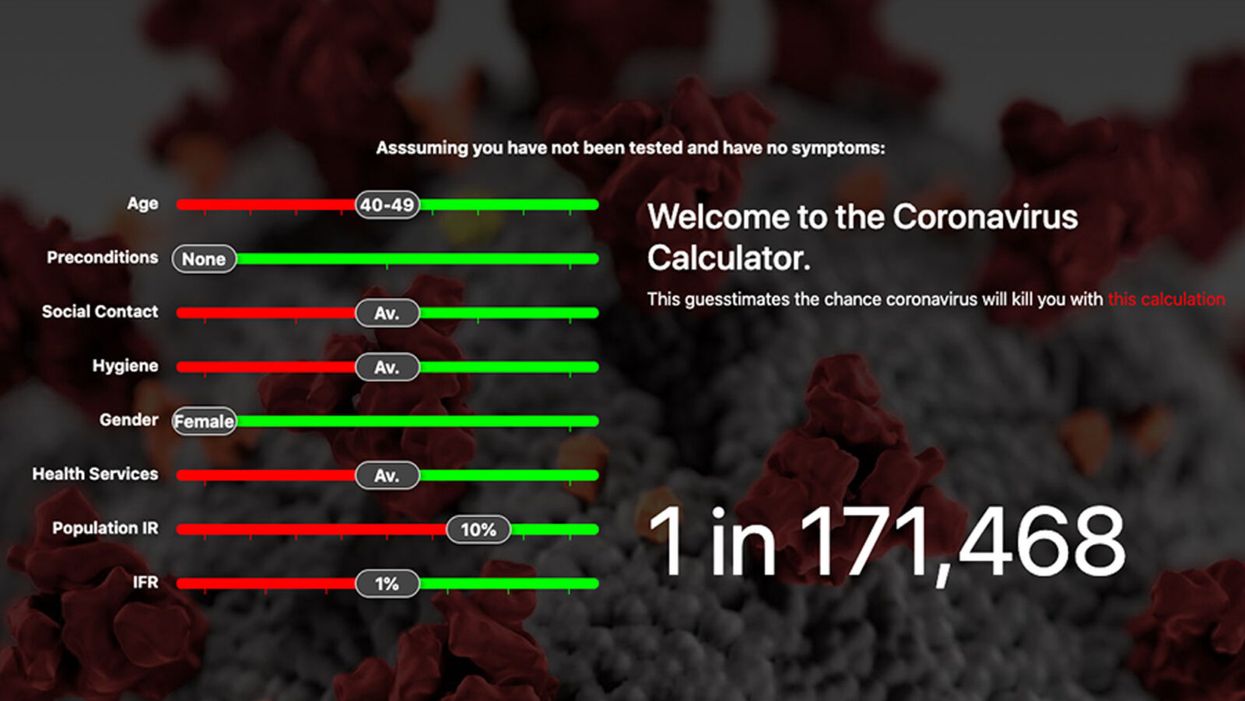

A screenshot of one coronavirus risk calculator.

People in my family seem to develop every ailment in the world, including feline distemper and Dutch elm disease, so I naturally put fingers to keyboard when I discovered that COVID-19 risk calculators now exist.

But the results, based on my answers to questions, are bewildering.

A British risk calculator developed by the Nexoid software company declared I have a 5 percent, or 1 in 20, chance of developing COVID-19 and less than 1 percent risk of dying if I get it. Um, great, I think? Meanwhile, 19 and Me, a risk calculator created by data scientists, says my risk of infection is 0.01 percent per week, or 1 in 10,000, and it gave me a risk score of 44 out of 100.

Confused? Join the club. But it's actually possible to interpret numbers like these and put them to use. Here are five tips about using coronavirus risk calculators:

1. Make Sure the Calculator Is Designed For You

Not every COVID-19 risk calculator is designed to be used by the general public. Cleveland Clinic's risk calculator, for example, is only a tool for medical professionals, not sick people or the "worried well," said Dr. Lara Jehi, Cleveland Clinic's chief research information officer.

Unfortunately, the risk calculator's web page fails to explicitly identify its target audience. But there are hints that it's not for lay people, such as its references to "platelets" and "chlorides."

The 19 and Me and Nexoid risk calculators, in contrast, are both designed for use by everyone, as is a risk calculator developed by Emory University.

2. Take a Look at the Calculator's Privacy Policy

COVID-19 risk calculators ask for a lot of personal information. The Nexoid calculator, for example, wanted to know my age, weight, drug and alcohol history, pre-existing conditions, blood type and more. It even asked me about the prescription drugs I take.

It's wise to check the privacy policy and be cautious about providing an email address or other personal information. Nexoid's policy says it provides the information it gathers to researchers but it doesn't release IP addresses, which can reveal your location in certain circumstances.

John-Arne Skolbekken, a professor and risk specialist at Norwegian University of Science and Technology, entered his own data in the Nexoid calculator after being contacted by LeapsMag for comment. He noted that the calculator, among other things, asks for information about use of recreational drugs that could be illegal in some places. "I have given away some of my personal data to a company that I can hope will not misuse them," he said. "Let's hope they are trustworthy."

The 19 and Me calculator, by contrast, doesn't gather any data from users, said Cindy Hu, data scientist at Mathematica, which created it. "As soon as the window is closed, that data is gone and not captured."

The Emory University risk calculator, meanwhile, has a long privacy policy that states "the information we collect during your assessment will not be correlated with contact information if you provide it." However, it says personal information can be shared with third parties.

3. Keep an Eye on Time Horizons

Let's say a risk calculator says you have a 1 percent risk of infection. That's fairly low if we're talking about this year as a whole, but it's quite worrisome if the risk percentage refers to today and jumps by 1 percent each day going forward. That's why it's helpful to know exactly what the numbers mean in terms of time.

Unfortunately, this information isn't always readily available. You may have to dig around for it or contact a risk calculator's developers for more information. The 19 and Me calculator's risk percentages refer to this current week based on your behavior this week, Hu said. The Nexoid calculator, by contrast, has an "infinite timeline" that assumes no vaccine is developed, said Jonathon Grantham, the company's managing director. But your results will vary over time since the calculator's developers adjust it to reflect new data.
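To see why the time horizon matters, here is a minimal Python sketch that compounds a per-period risk over many periods. It assumes, purely for illustration, that the risk is identical and independent in every period, which is simpler than the rising daily risk described above and is not how either calculator says it works. The function name `cumulative_risk` is hypothetical.

```python
def cumulative_risk(per_period_risk: float, periods: int) -> float:
    """Chance of at least one infection across `periods` periods.

    Assumes the same, independent risk in every period (a simplification).
    """
    if not 0.0 <= per_period_risk <= 1.0:
        raise ValueError("per_period_risk must be between 0 and 1")
    if periods < 0:
        raise ValueError("periods must not be negative")
    # Probability of escaping infection every single period, then invert it.
    return 1.0 - (1.0 - per_period_risk) ** periods


# A 0.01 percent weekly risk (the 19 and Me figure quoted earlier) over 52 weeks.
weekly = cumulative_risk(0.0001, 52)
# A 1 percent risk that applies every day for 365 days.
daily = cumulative_risk(0.01, 365)

print(f"Weekly 0.01% risk over a year: {weekly:.2%}")
print(f"Daily 1% risk over a year: {daily:.2%}")
```

Under these assumptions, the weekly figure adds up to roughly half a percent over a year, while a 1 percent risk repeated daily climbs above 97 percent. The same number can mean very different things depending on the period it covers.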

4. Focus on the Big Picture

The Nexoid calculator gave me numbers of 5 percent (getting COVID-19) and 99.309 percent (surviving it). It even provided betting odds for gambling types: The odds are in favor of me not getting infected (19-to-1) and not dying if I get infected (144-to-1).
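The betting odds can be checked against the percentages with the standard probability-to-odds conversion. The Python sketch below assumes Nexoid uses that textbook conversion, which the company has not confirmed here; the function name `odds_against` is hypothetical.

```python
def odds_against(probability: float) -> float:
    """Return N in 'N-to-1 against' for an event with the given probability."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be greater than 0 and at most 1")
    # Odds against = chance it does not happen / chance it does happen.
    return (1.0 - probability) / probability


# 5 percent chance of getting COVID-19.
infection_odds = odds_against(0.05)
# 99.309 percent chance of surviving means a 0.691 percent chance of dying.
death_odds = odds_against(1.0 - 0.99309)

print(f"Against infection: {infection_odds:.0f}-to-1")
print(f"Against dying if infected: {death_odds:.0f}-to-1")
```

Rounded to whole numbers, this reproduces the 19-to-1 and 144-to-1 figures, so the odds and the percentages are two presentations of the same estimate.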

However, Grantham told me that these numbers "are not the whole story." Instead, he said, "it's best to look at your risk band. This will give you a more useful insight into your personal risk." Risk bands refer to a segmentation of people into five categories, from lowest to highest risk, according to how a person's result sits relative to the whole dataset.

The Nexoid calculator says I'm in the "lowest risk band" for getting COVID-19, and a "high risk band" for dying of it if I get it. That suggests I'd better stay in the lowest-risk category because my pre-existing risk factors could spell trouble for my survival if I get infected.
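One plausible way to build five risk bands is to rank a person's result against everyone else's and split the ranking into equal fifths. The Python sketch below does that. It is an assumption about how such bands could work, not Nexoid's actual method, and the function name `risk_band`, the middle band labels, and the sample population are all made up for illustration.

```python
from bisect import bisect_right

# Five bands from lowest to highest risk; the label wording is illustrative.
BANDS = ["lowest", "low", "medium", "high", "highest"]


def risk_band(result: float, dataset: list[float]) -> str:
    """Place `result` in one of five equal-sized bands by its rank in `dataset`."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    ordered = sorted(dataset)
    # Fraction of the dataset at or below this result (0.0 to 1.0).
    rank = bisect_right(ordered, result) / len(ordered)
    # Map the fraction onto band indexes 0..4, keeping 1.0 in the top band.
    index = min(int(rank * len(BANDS)), len(BANDS) - 1)
    return BANDS[index]


# Made-up population of 100 risk scores, from 1 to 100.
population = [float(score) for score in range(1, 101)]

print(risk_band(5.0, population))
print(risk_band(70.0, population))
```

With this made-up population, a score of 5 lands in the lowest band and a score of 70 in the high band. The band depends on where a result sits relative to everyone else, not on the raw number alone.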

Michael J. Pencina, a professor and biostatistician at Duke University School of Medicine, agreed that focusing on your general risk level is better than focusing on numbers. When you use a risk calculator, he said, focus on this question: "How does your risk compare to the risk of an 'average' person?"

The 19 and Me calculator, meanwhile, put my risk at 44 out of 100. Hu said that a score of 50 represents the typical person's risk of developing serious consequences from another disease – the flu.

5. Remember to Take Action

Hu, who helped develop the 19 and Me risk calculator, said it's best to use it to "understand the relative impact of different behaviors." As she noted, the calculator is designed to allow users to plug in different answers about their behavior and immediately see how their risk levels change.

This information can help us figure out if we should change the way we approach the world by, say, washing our hands more or avoiding more personal encounters.

"Estimation of risk is only one part of prevention," Pencina said. "The other is risk factors and our ability to reduce them." In other words, odds, percentages and risk bands can be revealing, but it's what we do to change them that matters.

Pseudoscience Is Rampant: How Not to Fall for It

A metaphorical rendering of scientific truth gone awry.

Whom to believe?

The relentless and often unpredictable coronavirus (SARS-CoV-2) has, among its many quirky terrors, dredged up once again the issue that will not die: science versus pseudoscience.

The scientists, experts who would be the first to admit they are not infallible, are now in danger of being drowned out by the growing chorus of pseudoscientists, conspiracy theorists, and just plain troublemakers that seem to be as symptomatic of the virus as fever and weakness.

How is the average citizen to filter this cacophony of information and misinformation posing as science alongside real science? While all that noise makes it difficult to separate the real stuff from the fakes, there is at least one positive aspect to it all.

A famous aphorism by one Charles Caleb Colton, a popular 19th-century English cleric and writer, says that "imitation is the sincerest form of flattery."

The frauds and the paranoid conspiracy mongers who would perpetrate false science on a susceptible public are at least recognizing the value of science—they imitate it. They imitate the ways in which science works and make claims as if they were scientists, because even they recognize the power of a scientific approach. They are inadvertently showing us how much we value science. Unfortunately they are just shabby counterfeits.

Separating real science from pseudoscience is not a new problem. Philosophers, politicians, scientists, and others have been worrying about this perhaps since science as we know it, a science based entirely on experiment and not opinion, arrived in the 1600s. The original charter of the British Royal Society, the first organized scientific society, stated that at their formal meetings there would be no discussion of politics, religion, or perpetual motion machines.

The first two were excluded for the obvious purpose of keeping the peace. But the third is interesting because at that time perpetual motion machines were one of the main offerings of the imitators, the bogus scientists who were sure that you could find ways around the universal laws of energy and make a buck on it. The motto adopted by the society was, and remains, Nullius in verba, Latin for "take nobody's word for it." Kind of an early version of Missouri's venerable state motto: "Show me."

You might think that telling phony science from the real thing wouldn't be so difficult, but events, historical and current, tell a very different story—often with tragic outcomes. Just one terrible example is the estimated 350,000 additional HIV deaths in South Africa directly caused by the now-infamous conspiracy theories of their own elected President no less (sound familiar?). It's surprisingly easy to dress up phony science as the real thing by simply adopting, or appearing to adopt, the trappings of science.

Thus, the anti-vaccine movement claims to be based on suspicion of authority, beginning with medical authority in this case, stemming from the fraudulent data published by the now-disgraced Andrew Wakefield, an English gastroenterologist. And it's true that much of science is based on suspicion of authority. Science got its start when the likes of Galileo and Copernicus claimed that the Church, the State, even Aristotle, could no longer be trusted as authoritative sources of knowledge.

But Galileo and those who followed him produced alternative explanations, and those alternatives were based on data that arose independently from many sources and generated a great deal of debate and, most importantly, could be tested by experiments that could prove them wrong. The anti-vaccine movement imitates science, still citing the discredited Wakefield report, but really offers nothing but suspicion—and that is paranoia, not science.

Similarly, there are those who try to cloak their nefarious motives in the trappings of science by claiming that they are taking the scientific posture of doubt. Science after all depends on doubt—every scientist doubts every finding they make. Every scientist knows that they can't possibly foresee all possible instances or situations in which they could be proven wrong, no matter how strong their data. Einstein was doubted for two decades, and cosmologists are still searching for experimental proofs of relativity. Science indeed progresses by doubt. In science revision is a victory.

But the imitators merely use doubt to suggest that science is not dependable and should not be used for informing policy or altering our behavior. They claim to be taking the legitimate scientific stance of doubt. Of course, they don't doubt everything, only what is problematic for their individual enterprises. They don't doubt the value of blood pressure medicine to control their hypertension. But they should, because every medicine has side effects and we don't completely understand how blood pressure is regulated and whether there may not be still better ways of controlling it.

But we use the pills we have because the science is sound even when it is not completely settled. Ask a hypertensive oil executive who would like you to believe that climate science should be ignored because there are too many uncertainties in the data, if he is willing to forgo his blood pressure medicine—because it, too, has its share of uncertainties and unwanted side effects.

The apparent success of pseudoscience is not due to gullibility on the part of the public. The problem is that science is recognized as valuable and that the imitators are unfortunately good at what they do. They take a scientific pose to gain your confidence and then distort the facts to their own purposes. How does one learn to spot the con without getting a Ph.D. and spending years in a laboratory?

What can be done to make the distinction clearer? Several solutions have been tried—and seem to have failed. Radio and television shows about the latest scientific breakthroughs are a noble attempt to give the public a taste of good science, but they do nothing to help you distinguish between them and the pseudoscience being purveyed on the neighboring channel and its "scientific investigations" of haunted houses.

Similarly, attempts to inculcate what are called "scientific habits of mind" are of little practical help. These habits of mind are not so easy to adopt. They invariably require some amount of statistics and probability and much of that is counterintuitive—one of the great values of science is to help us to counter our normal biases and expectations by showing that the actual measurements may not bear them out.

Additionally, there is math—no matter how much you try to hide it, much of the language of science is math (Galileo said that). And half the audience is gone with each equation (Stephen Hawking said that). It's hard to imagine a successful program of making a non-scientifically trained public interested in adopting the rigors of scientific habits of mind. Indeed, I suspect there are some people, artists for example, who would be rightfully suspicious of changing their thinking to being habitually scientific. Many scientists are frustrated by the public's inability to think like a scientist, but in fact it is neither easy nor always desirable to do so. And it is certainly not practical.

There is a more intuitive and simpler way to tell the difference between the real thing and the cheap knock-off. In fact, it is not so much intuitive as it is counterintuitive, so it takes a little bit of mental work. But the good thing is it works almost all the time by following a simple, if as I say, counterintuitive, rule.

True science, you see, is mostly concerned with the unknown and the uncertain. If someone claims to have the ultimate answer or that they know something for certain, the only thing for sure is that they are trying to fool you. Mystery and uncertainty may not strike you right off as desirable or strong traits, but that is precisely where one finds the creative solutions that science has historically arrived at. Yes, science accumulates factual knowledge, but it is at its best when it generates new and better questions. Uncertainty is not a place of worry, but of opportunity. Progress lives at the border of the unknown.

How much would it take to alter the public perception of science to appreciate unknowns and uncertainties along with facts and conclusions? Less than you might think. In fact, we make decisions based on uncertainty every day—what to wear in case of 60 percent chance of rain—so it should not be so difficult to extend that to science, in spite of what you were taught in school about all the hard facts in those giant textbooks.

You can believe science that says there is clear evidence that takes us this far… and then we have to speculate a bit and it could go one of two or three ways—or maybe even some way we don't see yet. But like your blood pressure medicine, the stuff we know is reliable even if incomplete. It will lower your blood pressure, no matter that better treatments with fewer side effects may await us in the future.

Unsettled science is not unsound science. The honesty and humility of someone who is willing to tell you that they don't have all the answers, but they do have some thoughtful questions to pursue, are easy to distinguish from the charlatans who have ready answers or claim that nothing should be done until we are an impossible 100-percent sure.

Imitation may be the sincerest form of flattery.

The problem, as we all know, is that flattery will get you nowhere.

[Editor's Note: This article was originally published on June 8th, 2020 as part of a standalone magazine called GOOD10: The Pandemic Issue. Produced as a partnership among LeapsMag, The Aspen Institute, and GOOD, the magazine is available for free online.]