Is Finding Out Your Baby’s Genetics A New Responsibility of Parenting?

A doctor pricks the heel of a newborn for a blood test.

Hours after a baby is born, its heel is pricked with a lancet. Drops of the infant's blood are collected on a porous card, which is then mailed to a state laboratory. The dried blood spots are screened for around thirty conditions, including phenylketonuria (PKU), the metabolic disorder that kick-started this kind of newborn screening over 60 years ago. In the U.S., parents are not asked for permission to screen their child. Newborn screening programs are public health programs, and the assumption is that no good parent would refuse a screening test that could identify a serious yet treatable condition in their baby.

Today, with the introduction of genome sequencing into clinical medicine, some are asking whether newborn screening goes far enough. As the cost of sequencing falls, should parents take a more expansive look at their children's health, learning not just whether they have a rare but treatable childhood condition, but also whether they are at risk for untreatable conditions or for diseases that, if they occur at all, will strike only in adulthood? Should genome sequencing be a part of every newborn's care?

It's an idea that appeals to Anne Wojcicki, the co-founder and CEO of the direct-to-consumer genetic testing company 23andMe, who in a 2016 interview with The Guardian newspaper predicted that having newborns tested would soon be considered standard practice, "as critical as testing your cholesterol," and a new responsibility of parenting. Wojcicki isn't the only one excited to see everyone's genes examined at birth. Francis Collins, director of the National Institutes of Health and perhaps the most prominent advocate of genomics in the United States, has written that he is "almost certain … that whole-genome sequencing will become part of new-born screening in the next few years." It is not clear whether that would happen through state-mandated screening programs, as part of routine pediatric care, or perhaps as a direct-to-consumer service that parents purchase at birth or receive as a baby-shower gift.

Learning as much as you can about your child's health might seem like a natural obligation of parenting. But it's an assumption that I think needs to be much more closely examined, both because the results that genome sequencing can return are more complex and more uncertain than one might expect, and because parents are not actually responsible for their child's lifelong health and well-being.

Existing newborn screening tests look for the presence of rare conditions that, if identified early in life, before the child shows any symptoms, can be effectively treated. Sequencing could identify many of these same kinds of conditions (and it might be a good tool if it could be targeted to those conditions alone), but it would also identify gene variants that confer an increased risk rather than a certainty of disease. Occasionally that increased risk will be significant. About 12 percent of women in the general population will develop breast cancer during their lives, while those who have a harmful BRCA1 or BRCA2 gene variant have around a 70 percent chance of developing the disease. But for many—perhaps most—conditions, the increased risk associated with a particular gene variant will be very small. Researchers have identified over 600 genes that appear to be associated with schizophrenia, for example, but any one of those confers only a tiny increase in risk for the disorder. What is a parent supposed to do about such a risk except worry?

Sequencing results are uncertain in other important ways as well. While we can now map the genome, creating a read-out of the pairs of genetic letters that make up a person's DNA, we are still learning what most of it means for a person's health and well-being. Researchers even have a name for gene variants they think might be associated with a disease or disorder, but for which they don't have enough evidence to be sure. They are called "variants of unknown (or uncertain) significance" (VUS), and they pop up in most people's sequencing results. In cancer genetics, where much research has been done, about 1 in 5 gene variants are reclassified over time. Most are downgraded, which means that a good number of VUS are eventually designated benign.

Then there's the puzzle of what to do about results that show increased risk or even certainty for a condition that we have no idea how to prevent. Some genomics advocates argue that even if a result is not "medically actionable," it might have "personal utility" because it allows parents to plan for their child's future needs, to enroll them in research, or to connect with other families whose children carry the same genetic marker.

Finding a certain gene variant in one child might inform parents' decisions about whether to have another—and if they do, about whether to use reproductive technologies or prenatal testing to select against that variant in a future child. I have no doubt that for some parents these personal utility arguments are persuasive, but notice how far we've now strayed from the serious yet treatable conditions that motivated governments to set up newborn screening programs, and to mandate such testing for all.

Which brings me to the other problem with the call for sequencing newborn babies: the idea that even if it's not what the law requires, it's what good parents should do. That idea is very compelling when we're talking about sequencing results that show a serious threat to the child's health, especially when interventions are available to prevent or treat that condition. But as I have shown, many sequencing results are not of this type.

While one parent might reasonably decide to learn about their child's risk for a condition about which nothing can be done medically, a different, yet still thoroughly reasonable, parent might prefer to remain ignorant so that they can enjoy the time before their child is afflicted. This parent might decide that the worry, and the hypervigilance it could inspire in them, is not in their child's best interest, or indeed in their own. This parent might also think that it should be up to the child, when he or she is older, to decide whether to learn about his or her risk for adult-onset conditions, especially given that many adults at high familial risk for conditions like Alzheimer's or Huntington's disease choose never to be tested. This parent will value the child's future autonomy and right not to know more than they value the chance to prepare for a health risk that won't strike the child until 40 or 50 years in the future.

Contemporary understandings of parenting are famously demanding. We are asked to do everything within our power to advance our children's health and well-being—to act always in our children's best interests. Against that backdrop, the need to sequence every newborn baby's genome might seem obvious. But we should be skeptical. Many sequencing results are complex and uncertain. Parents are not obligated to learn about their children's risk for a condition that cannot be prevented, has a small risk of occurring, or that would appear only in adulthood. To suggest otherwise is to stretch parental responsibilities beyond the realm of childhood and beyond factors that parents can control.

Out of Thin Air: A Fresh Solution to Farming’s Water Shortages

Dry, arid and remote farming regions are vulnerable to water shortages, but scientists are working on a promising new solution.

California has been plagued by perilous droughts for decades. Drought and freshwater shortages have fueled raging wildfires and killed fruit and vegetable crops. And California is not alone in risking running out of water for farming; parts of the Southwest, including Texas, are battling severe drought conditions, according to the North American Drought Monitor. Together, these two states account for 316,900 of the roughly 2 million farms in the U.S.

But even as farming becomes more vulnerable due to water shortages, the world's demand for food is projected to increase 70 percent by 2050, according to Guihua Yu, an associate professor of materials science at The University of Texas at Austin.

"Water is the most limiting natural resource for agricultural production because of the freshwater shortage and enormous water consumption needed for irrigation," Yu said.

As scientists have searched for solutions, an alternative water supply has been hiding in plain sight: water vapor in the atmosphere. It is abundant, available, and endlessly renewable, just waiting for the moment when technological innovation and necessity would converge to make it fit for use. Now, new super-moisture-absorbent gels developed by Yu and a team of researchers can pull that moisture from the air and bring it into soil, potentially expanding the map of farmable land around the globe to dry and remote regions that suffer from water shortages.

"This opens up opportunities to turn those previously poor-quality or inhospitable lands to become useable and without need of centralized water and power supplies," Yu said.

A renewable source of freshwater

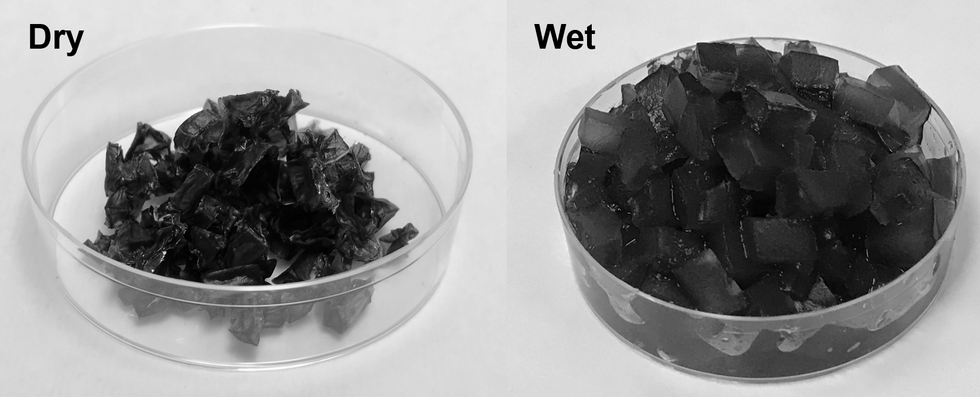

The hydrogels are a gelatin-like substance made from synthetic materials. The gels activate in cooler, humid overnight periods and draw water from the air. During a four-week experiment, Yu's team observed that soil with these gels provided enough water to support seed germination and plant growth without an additional liquid water supply. And the soil was able to maintain the moist environment for more than a month, according to Yu.

The super absorbent gels developed at the University of Texas at Austin.

Xingyi Zhou, UT Austin

"It is promising to liberate underdeveloped and drought areas from the long-distance water and power supplies for agricultural production," Yu said.

Crops also rely on fertilizer to maintain soil fertility and increase yields, but fertilizer is easily lost through leaching and runoff, which raises agricultural costs and contributes to environmental pollution. The interaction between the gels and agrochemicals offers slow, controlled fertilizer release, helping to maintain the balance between the plant's roots and the soil.

The possibilities are endless

Harvesting atmospheric water is exciting on multiple fronts. The super-moisture-absorbent gel can also be used for passively cooling solar panels. Solar radiation is the magic behind the process. Overnight, as temperatures cool, the gels absorb water hanging in the atmosphere. The moisture is stored inside the gels until temperatures rise. Heat from the sun then serves as the faucet that turns the gels on, releasing the stored water, which evaporates and cools down the panels. Effective cooling of the solar panels is important for sustainable long-term power generation.

In addition to agricultural uses and cooling for energy devices, atmospheric water harvesting technologies could even reach people's homes.

"They could be developed to enable easy access to drinking water through individual systems for household usage," Yu said.

Next steps

Yu and the team are now focused on affordability and developing practical applications for use. The goal is to optimize the gel materials to achieve higher levels of water uptake from the atmosphere.

"We are exploring different kinds of polymers and solar absorbers while exploring low-cost raw materials for production," Yu said.

The ability to transform atmospheric water vapor into a cheap and plentiful water source would be a game-changer. One day in the not-too-distant future, if climate change intensifies and droughts worsen, this innovation may become vital to our very survival.

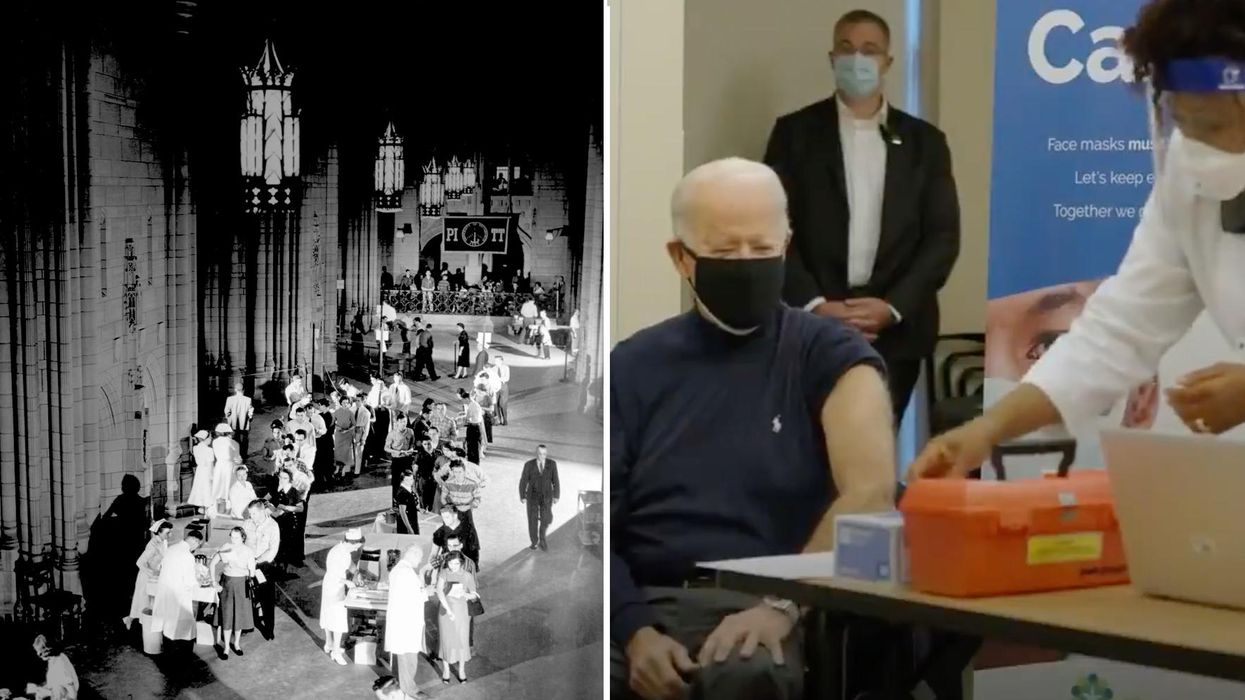

On the left, people excitedly line up for Salk's polio vaccine in 1957; on the right, Joe Biden receives a COVID-19 vaccine on December 21, 2020.

On the morning of April 12, 1955, newsrooms across the United States inked headlines onto newsprint: the Salk polio vaccine was "safe, effective, and potent." This was long-awaited news. Americans had limped through decades of fear, unaware of what caused polio or how to cure it, faced with the disease's terrifying, visible power to paralyze and kill, particularly children.

The announcement of the polio vaccine was celebrated with noisy jubilation: church bells rang, factory whistles sounded, people wept in the streets. Within weeks, mass inoculation began as the nation put its faith in a vaccine that would end polio.

Today, most of us are blissfully ignorant of child polio deaths, making it easier to believe that we have not personally benefited from the development of vaccines. According to Dr. Steven Pinker, cognitive psychologist and author of the bestselling book Enlightenment Now, we've become blasé to the gifts of science. "The default expectation is not that disease is part of life and science is a godsend, but that health is the default, and any disease is some outrage," he says.

We're now in the early stages of another vaccine rollout, one we hope will end the ravages of the COVID-19 pandemic. And yet, the Pfizer, Moderna, and AstraZeneca vaccines are met with far greater hesitancy and skepticism than the polio vaccine was in the 1950s.

In 2021, concerns over the speed and safety of vaccine development and technology plague this heroic global effort, but the roots of vaccine hesitancy run far deeper. Vaccine hesitancy has always existed in the U.S., even in the polio era, motivated in part by fears around "living virus" in a bad batch of vaccines produced by Cutter Laboratories in 1955. But in the last half century, we've witnessed seismic cultural shifts—loss of public trust, a rise in misinformation, heightened racial and socioeconomic inequality, and political polarization have all intensified vaccine-related fears and resistance. Making sense of how we got here may help us understand how to move forward.

The Rise and Fall of Public Trust

When the polio vaccine was released in 1955, "we were nearing an all-time high point in public trust," says Matt Baum, Harvard Kennedy School professor and lead author of several reports measuring public trust and vaccine confidence. Baum explains that the U.S. was experiencing a post-war boom following the Allied triumph in WWII, a popular Roosevelt presidency, and the rapid innovation that elevated the country to an international superpower.

The 1950s witnessed the emergence of nuclear technology, a space program, and unprecedented medical breakthroughs, adds Emily Brunson, Texas State University anthropologist and co-chair of the Working Group on Readying Populations for COVID-19 Vaccine. "Antibiotics were a game changer," she states. Where people had once been sick with pneumonia for a month, suddenly they had access to pills that sped their recovery.

During this period, science seemed to hold all the answers; people embraced the idea that we could "come to know the world with an absolute truth," Brunson explains. Doctors were portrayed as unquestioned gods, so Americans were primed to trust experts who told them the polio vaccine was safe.

That blind acceptance eroded in the 1960s and 70s as people came to understand that science can be inherently political. "Getting to an absolute truth works out great for white men, but these things affect people socially in radically different ways," Brunson says. As the culture began questioning the white, patriarchal biases of science, doctors lost their god-like status and experts were pushed off their pedestals. This trend continues with greater intensity today, as President Trump has led a campaign against experts and waged a war on science that began long before the pandemic.

The Shift in How We Consume Information

In the 1950s, the media created an informational consensus. The fundamental ideas the public consumed about the state of the world were unified. "People argued about the best solutions, but didn't fundamentally disagree on the factual baseline," says Baum. Indeed, the messaging around the polio vaccine was centralized and consistent, led by President Roosevelt's successful March of Dimes crusade. People of lower socioeconomic status with limited access to this information were less likely to have confidence in the vaccine, but most people consumed media that assured them of the vaccine's safety and mobilized them to receive it.

Today, the information we consume is no longer centralized; in fact, just the opposite. "When you take that away, it's hard for people to know what to trust and what not to trust," Baum explains. We've witnessed a rise in polarization, and in the technology that makes it easier to give people what they want to hear, reinforcing the human tendency to vilify the other side and cling to our preexisting ideas. When information is engineered to further an agenda, each choice and risk calculation made while navigating the COVID-19 pandemic is deeply politicized.

This polarization maps onto a rise in socioeconomic inequality and economic uncertainty. These factors, associated with a sense of lost control, prime people to embrace misinformation, explains Baum, especially when the situation is difficult to comprehend. "The beauty of conspiratorial thinking is that it provides answers to all these questions," he says. Today's insidious fragmentation of news media accelerates the circulation of mis- and disinformation, reaching more people faster, regardless of veracity or motivation. In the case of vaccines, this intensifies skepticism about their origin, their safety, and the motives of those behind them.

Alongside the rise in polarization, Pinker says "the emotional tone of the news has gone downward since the 1940s, and journalists consider it a professional responsibility to cover the negative." Relentless focus on everything that goes wrong further erodes public trust and paints a picture of the world getting worse. "Life saved is not a news story," says Pinker, but perhaps it should be, he continues. "If people were more aware of how much better life was generally, they might be more receptive to improvements that will continue to make life better. These improvements don't happen by themselves."

The Future Depends on Vaccine Confidence

So far, the U.S. has been unable to mitigate the catastrophic effects of the pandemic through social distancing, testing, and contact tracing. President Trump has downplayed the effects and threat of the virus, censored experts and scientists, given up on containing the spread, and mobilized his base to protest masks. The Trump Administration failed to devise a national plan, so our national plan has defaulted to hoping for the "miracle" of a vaccine. And they are "something of a miracle," Pinker says, describing vaccines as "the most benevolent invention in the history of our species." In record-breaking time, three vaccines have arrived. But their impact will be weakened unless we achieve mass vaccination. As Brunson notes, "The technology isn't the fix; it's people taking the technology."

Significant challenges remain, including facilitating widespread access and supporting on-the-ground efforts to allay concerns and build trust with specific populations that have historic reasons for distrust, says Brunson. Baum predicts continuing delays, as well as deaths from unrelated causes that people will nonetheless attribute to the vaccine.

Still, there's every reason for hope. The new administration "has its eyes wide open to these challenges. These are the kind of problems that are amenable to policy solutions if we have the will," Baum says. He forecasts widespread vaccination by late summer and a bounce back from the economic damage, a "Good News Story" that will bolster vaccine acceptance in the future. And Pinker reminds us that science, medicine, and public health have greatly extended our lives in the last few decades, a trend that can only continue if we're willing to roll up our sleeves.