Is It Possible to Predict Your Face, Voice, and Skin Color from Your DNA?

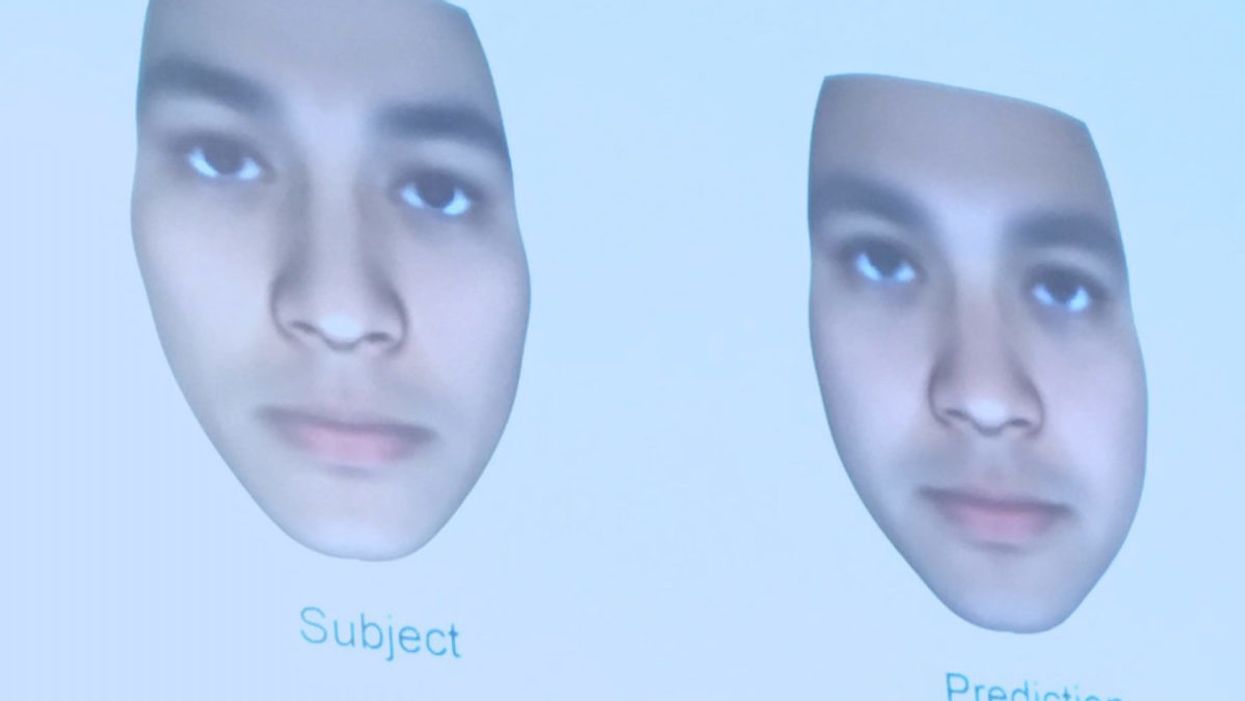

A slide from J. Craig Venter's recent study on facial prediction presented at the Summit Conference in Los Angeles on Nov. 3, 2017.

Renowned genetics pioneer Dr. J. Craig Venter is no stranger to controversy.

Back in 2000, he famously raced the public Human Genome Project to decode all three billion letters of the human genome for the first time. A decade later, he ignited a new debate when his team created a bacterial cell with a synthesized genome.

Most recently, he's jumped back into the fray with a study in the September issue of the Proceedings of the National Academy of Sciences about the predictive potential of genomic data to identify individual traits such as voice, facial structure and skin color.

The new study raises significant questions about the privacy of genetic data.

His study applied whole-genome sequencing and statistical modeling to predict traits in 1,061 people of diverse ancestry. The approach aimed to reconstruct a person's physical characteristics from their DNA; 74 percent of the time, the algorithm correctly identified the individual in a random lineup of 10 people drawn from his company's database.

While critics have been quick to cast doubt on the plausibility of his claims, the ability to discern people's observable traits, or phenotypes, from their genomes may grow more precise as technology improves, raising significant questions about the privacy and usage of genetic information in the long term.

J. Craig Venter showing slides from his recent study on facial prediction at the Summit Conference in Los Angeles on Nov. 3, 2017.

(Courtesy of Kira Peikoff)

Critics: Study Was Incomplete, Problematic

Before even addressing these potential legal and ethical considerations, some scientists simply said the study's main result was invalid. They pointed out that the method was much better at distinguishing between people of different ethnicities than between people of the same ethnicity. One of the most outspoken critics, Yaniv Erlich, a geneticist at Columbia University, said, "The method doesn't work. The results were like, 'If you have a lineup of ten people, you can predict eight.'"

Erlich, who reviewed Venter's paper for Science, where it was rejected, said that he got the same results (correctly predicting eight of ten people) simply by looking at demographic factors such as age, gender and ethnicity. He added that Venter's recent rebuttal to his criticism was, "Once we have thousands of phenotypes, it might work better." But that, Erlich argued, would be "a major breach of privacy. Nobody has thousands of phenotypes for people."

Other critics suggested that the study's results could discourage the sharing of genetic data, which is becoming increasingly important for medical research. Some went a step further, implying that people's hesitation to share their genetic information in public databases might actually play into Venter's hands.

Venter's own company, Human Longevity Inc., aims to build the world's most comprehensive private database of human genotypes and phenotypes. A database of that scale could improve the accuracy of the whole-genome and microbiome sequencing analyses the company offers individuals, which come at a hefty price tag. Currently, Human Longevity Inc. will sequence your genome and perform a battery of other health-related tests for prices starting at $4,900 and going up to $25,000. Venter initially agreed to comment for this article but then could not be reached.


Opens Up Pandora's Box of Ethical Issues

Whether Venter's study is valid may not be as important as the Pandora's box of potential ethical and legal issues that it raises for future consideration. "I think this story is one along a continuum of stories we've had on the issue of identifiability based on genomic information in the past decade," said Amy McGuire, a biomedical ethics professor at Baylor College of Medicine. "It does raise really interesting and important questions about privacy, and socially, how we respond to these types of scientific advancements. A lot of our focus from a policy and ethics perspective is to protect privacy."

McGuire, who is also the director of the Center for Medical Ethics and Health Policy at Baylor, added that while protecting privacy is very important, "the bigger issue is how do we understand and use genetic information and avoid harming people." The U.S. has taken "baby steps," she said, toward enacting laws that fight genetic discrimination, such as the Genetic Information Nondiscrimination Act, which prohibits discrimination based on genetic information in health insurance and employment. But some areas remain unprotected, such as life insurance and disability insurance.


Physical reconstructions like those in Venter's study could also be inappropriately used by law enforcement, said Leslie Francis, a law and philosophy professor at the University of Utah, who has written about the ethical and legal issues related to sharing genomic data.

"If [Venter's] findings, or findings like them, hold up, the implications would be significant," Francis said. Law enforcement increasingly uses DNA recovered from crime scenes to help distinguish guilty suspects from innocent ones, she explained, and predicting traits from that DNA would add another, potentially complicating, layer.

"There is a shift here, from using DNA sequencing techniques to match other DNA samples, as when semen obtained from a rape victim is then matched (or not) with a cheek swab from a suspect, to using DNA sequencing results to predict observable characteristics," Francis said. She added that while the former requires a DNA sample from a suspect to compare against, the latter can use crime-scene DNA alone to pre-emptively (and perhaps inaccurately) narrow down suspects.

"My worry is that if this [the study's methodology] turns out to be sort of accurate, people will think it is better than what it is," said Francis. "If law enforcement comes to rely on it, there will be a host of false positives and false negatives. And we'll face new questions, [such as] 'Which is worse? Picking an innocent as guilty, or failing to identify someone who is guilty?'"

Risking Privacy Involves a Tradeoff

When people voluntarily risk their own privacy, that involves a tradeoff, McGuire said. A 2014 study that she conducted among people who were very sick, or whose children were very sick, found that more than half were willing to share their health information, despite concerns about privacy, because they saw a big benefit in advancing research on their conditions.


"To make leaps and bounds in medicine and genomics, we need to create a database of millions of people signing on to share their genetic and health information in order to improve research and clinical care," McGuire said. "They are going to risk their privacy, and we have a social obligation to protect them."

That also means "punishing bad actors," she continued. "We've focused a lot of our policy attention on restricting access, but we don't have a system of accountability when there's a breach."

Even though most people using genetic information have good intentions, the consequences when someone doesn't are troubling. "All you need is one bad actor who decimates the trust in the system, and it has catastrophic consequences," she warned. That hasn't happened on a massive scale yet. And even if it did, some experts argue, the bigger risk lies less in someone obtaining the data than in individuals' genetic information being stolen and used against them: for example, to argue that someone is unfit for a particular job because of a genetic risk for a condition like Alzheimer's, or that a parent is unfit for custody because of a genetic predisposition to mental illness.

Venter, in fact, told an audience at the recent Summit Conference in Los Angeles that his new study's approach could predict not only someone's physical appearance from their DNA but also some of their psychological traits, such as a propensity for an addictive personality. In the future, he said, it will be possible to predict even more about mental health from the genome.

What is most at risk on a massive scale, however, is not so much genetic information as the demographic identifiers included in medical records, such as birth dates and Social Security numbers, said Francis, the law and philosophy professor. "The much more interesting and lucrative security breaches typically involve not people interested in genetic information per se, but people interested in the information in health records that you can't change."

Organizations holding health data have suffered large breaches of this kind of information, including a 2006 incident at the Department of Veterans Affairs in which a laptop and external hard drive containing unencrypted information on 26.5 million people were stolen from an agency employee's home.

So, what can people do to protect themselves? "Don't share anything you wouldn't want the world to see," Francis said. "And don't click 'I agree' without actually reading privacy policies or terms and conditions. They may surprise you."

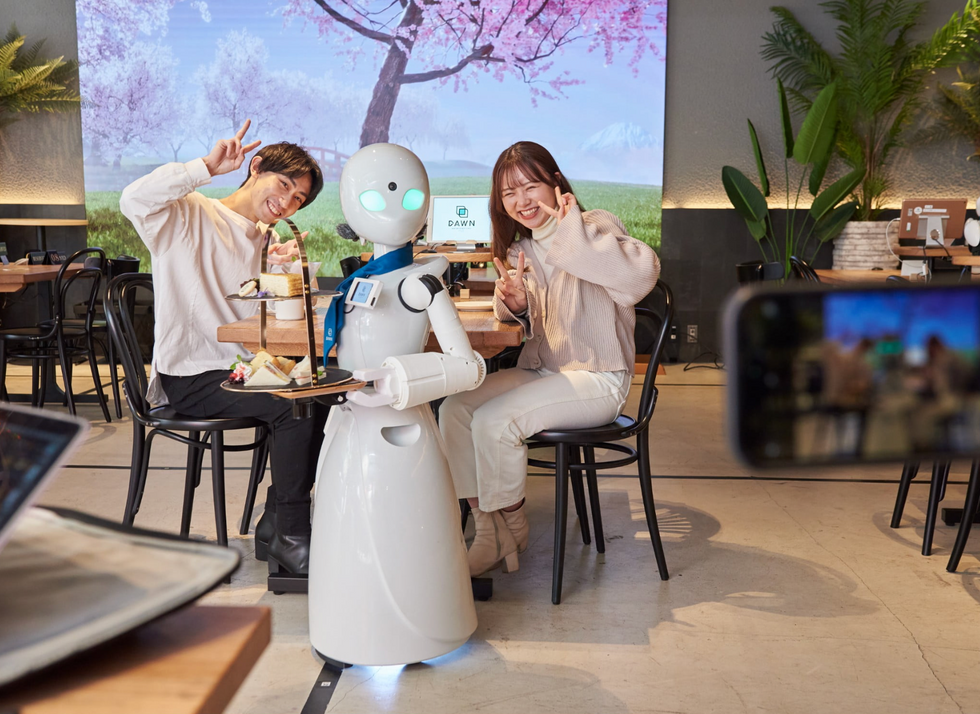

A robot server, controlled remotely by a disabled worker, delivers drinks to patrons at the DAWN cafe in Tokyo.

A sleek, four-foot-tall white robot glides across a cafe storefront in Tokyo’s Nihonbashi district, holding a two-tiered serving tray full of tea sandwiches and pastries. The cafe’s patrons smile and say thanks as they take the tray, but it’s not the robot they’re thanking. Instead, they are talking to the person controlling the robot: a restaurant employee who operates the avatar from the comfort of their home.

It’s a typical scene at DAWN, short for Diverse Avatar Working Network, a cafe that launched in Tokyo six years ago as an experimental pop-up and quickly became a hit. Today, the cafe is a permanent fixture in Nihonbashi, with a staff of roughly 60 remote workers who control the robots from afar and talk with customers through a built-in microphone.

More than just a creative idea, DAWN is being hailed as a life-changing opportunity. The workers who control the robots (known as “pilots”) all have disabilities that limit their ability to move around freely and travel outside their homes. An estimated 16 percent of the global population lives with a significant disability, and according to the World Health Organization, people with disabilities often face related problems such as exclusion from education, unemployment, and poverty.

These are all problems that Kentaro Yoshifuji, founder and CEO of Ory Laboratory, which supplies the robot servers at DAWN, is looking to address. Yoshifuji, who was bedridden for several years in high school due to an undisclosed health problem, launched the company to enable people who are house-bound or bedridden to participate more fully in society, and to end the loneliness, isolation, and feelings of worthlessness that can sometimes accompany disability.

“It’s heartbreaking to think that [people with disabilities] feel they are a burden to society, or that they fear their families suffer by caring for them,” said Yoshifuji in an interview in 2020. “We are dedicating ourselves to providing workable, technology-based solutions. That is our purpose.”

Shota Kuwahara, a DAWN employee with muscular dystrophy, agrees. “There are many difficulties in my daily life, but I believe my life has a purpose and is not being wasted,” he says. “Being useful, able to help other people, even feeling needed by others, is so motivational.”

A woman receives a mammogram, which can detect the presence of tumors in a patient's breast.

When a patient is diagnosed with early-stage breast cancer, having surgery to remove the tumor is considered the standard of care. But what happens when a patient can’t have surgery?

Whether it’s due to high blood pressure, advanced age, heart issues, or other reasons, some breast cancer patients don’t qualify for a lumpectomy, one of the most common treatment options for early-stage breast cancer. A lumpectomy surgically removes the tumor while keeping the patient’s breast intact; a mastectomy, by contrast, removes the entire breast and sometimes nearby lymph nodes.

Fortunately, a new technique called cryoablation is now available for breast cancer patients who either aren’t candidates for surgery or don’t feel comfortable undergoing a surgical procedure. With cryoablation, doctors use ultrasound or CT imaging to locate any tumors inside the patient’s breast. They then insert small, needle-like probes into the breast that create an “ice ball” around the tumor, killing the cancer cells.

Cryoablation has been used for decades to treat cancers of the kidneys and liver—but only in the past few years have doctors been able to use the procedure to treat breast cancer patients. And while clinical trials have shown that cryoablation works for tumors smaller than 1.5 centimeters, a recent clinical trial at Memorial Sloan Kettering Cancer Center in New York has shown that it can work for larger tumors, too.

In this study, doctors performed cryoablation on patients whose tumors averaged 2.5 centimeters in size. The procedure took about 30 minutes, and patients were able to go home the same day. When doctors followed up with the patients 16 months later, they found that the tumor recurrence rate after cryoablation was only 10 percent.

For patients who don’t qualify for surgery, radiation and hormonal therapy are typically used to treat tumors. However, said Yolanda Brice, M.D., an interventional radiologist at Memorial Sloan Kettering Cancer Center, “when treated with only radiation and hormonal therapy, the tumors will eventually return.” Cryoablation, Brice said, could be a more effective way to treat cancer in patients who can’t have surgery.

“The fact that we only saw a 10 percent recurrence rate in our study is incredibly promising,” she said.