This breath test can detect liver disease earlier

A company in England has developed a test that picks out compounds in breath that can reveal whether a person has liver disease.

Every year, around two million people worldwide die of liver disease. While some people inherit the disease, it’s most commonly caused by hepatitis, obesity and alcoholism. These underlying conditions kill liver cells, causing scar tissue to form until eventually the liver cannot function properly. Since 1979, deaths due to liver disease have increased by 400 percent.

The sooner the disease is detected, the more effective treatment can be. But once symptoms appear, the liver is already damaged. Around 50 percent of cases are diagnosed only after the disease has reached the final stages, when treatment is largely ineffective.

To address this problem, Owlstone Medical, a biotech company in England, has developed a breath test that can detect liver disease earlier than conventional approaches. Human breath contains volatile organic compounds (VOCs) that change in the first stages of liver disease. Owlstone’s breath test can reliably collect, store and detect VOCs, while picking out the specific compounds that reveal liver disease.

“There’s a need to screen more broadly for people with early-stage liver disease,” says Owlstone’s CEO Billy Boyle. “Equally important is having a test that's non-invasive, cost effective and can be deployed in a primary care setting.”

The standard tool for detection is a biopsy. It is invasive and expensive, making it impractical to use for people who aren't yet symptomatic. Meanwhile, blood tests are less invasive, but they can be inaccurate and can’t discriminate between different stages of the disease.

In the past, breath tests have not been widely used because of the difficulties of reliably collecting and storing breath. But Owlstone’s technology could help change that.

The team is testing patients with early-stage cirrhosis, a form of advanced liver disease, to identify and detect these biomarkers. In an initial study, Owlstone’s breathalyzer was able to pick out patients who had early cirrhosis with 83 percent sensitivity.

Boyle’s work is personally motivated. His wife died of colorectal cancer after she was diagnosed with a progressed form of the disease. “That was a big impetus for me to see if this technology could work in early detection,” he says. “As a company, Owlstone is interested in early detection across a range of diseases because we think that's a way to save lives and a way to save costs.”

How it works

Study participants breathe into a mouthpiece attached to a breath sampler developed by Owlstone. Its cartridges are designed and optimized to collect gases. The sampler specifically targets VOCs, extracting them from the atmospheric gases in breath so that even low levels of these compounds are captured.

The sampler can store compounds stably until they are analyzed by mass spectrometry. In this method, compounds are converted into ions (charged molecules and their fragments), and electric or magnetic fields then separate and identify even tiny amounts of these ions according to their mass-to-charge ratio.

The top four compounds in our breath

In an initial study, Owlstone captured VOCs in breath to see which ones could help tell the difference between people with and without liver disease. They tested the breath of 46 patients with liver disease, most of them in the earlier stages of cirrhosis, and 42 healthy people. Using this data, they built a diagnostic model. Individually, compounds such as 2-pentanone and limonene performed well as markers of liver disease. Owlstone achieved even better performance by examining the levels of the top four compounds together, distinguishing liver disease cases from controls with 95 percent accuracy.

“It was a good proof of principle since it looks like there are breath biomarkers that can discriminate between diseases,” Boyle says. “That was a bit of a stepping stone for us to say, taking those identified, let’s try and dose with specific concentrations of probes. It's part of building the evidence and steering the clinical trials to get to liver disease sensitivity.”

Sabine Szunerits, a professor of chemistry at the Institute of Electronics at the University of Lille, sees the potential of Owlstone’s technology.

“Breath analysis is showing real promise as a clinical diagnostic tool,” says Szunerits, who has no ties with the company. “Owlstone Medical’s technology is extremely effective in collecting small volatile organic biomarkers in the breath. In combination with pattern recognition it can give an answer on liver disease severity. I see it as a very promising way to give patients novel chances to be cured.”

Improving the breath sampling process

Challenges remain. With more than one thousand VOCs found in the breath, it can be difficult to identify markers for liver disease that are consistent across many patients.

Julian Gardner is a professor of electrical engineering at Warwick University who researches electronic sensing devices. “Everyone’s breath has different levels of VOCs and different ones according to gender, diet, age etc,” Gardner says. “It is indeed very challenging to selectively detect the biomarkers in the breath for liver disease.”

So Owlstone is giving patients chemicals that they know behave differently in people with liver disease, and then using the breath sampler to measure these specific VOCs. The chemicals they administer are called Exogenous Volatile Organic Compound (EVOC) probes.

Most recently, they used limonene as an EVOC probe, testing 29 patients with early cirrhosis and 29 controls. They gave limonene to subjects at specific doses and measured how its concentration changed in their breath. The aim was to see what was happening in their livers.

“They are proposing to use drugs to enhance the signal as they are concerned about the sensitivity and selectivity of their method,” Gardner says. “The approach of EVOC probes is probably necessary as you can then eliminate the person-to-person variation that will be considerable in the soup of VOCs in our breath.”

Through these probes, Owlstone could identify patients with liver disease with 83 percent sensitivity. By targeting what they knew was a disease mechanism, they were able to amplify the signal. The company is starting a larger clinical trial, and the plan is to eventually use a panel of EVOC probes to make sure they can see diverging VOCs more clearly.

“I think the approach of using probes to amplify the VOC signal will ultimately increase the specificity of any VOC breath tests, and improve their practical usability,” says Roger Yazbek, who leads the South Australian Breath Analysis Research (SABAR) laboratory at Flinders University. “Whilst the findings are interesting, it still is only a small cohort of patients in one location.”

The future of breath diagnosis

Owlstone wants to partner with pharmaceutical companies looking to learn if their drugs have an effect on liver disease. They’ve also developed a microchip, a miniaturized version of mass spectrometry instruments, that can be used with the breathalyzer. It is less sensitive but will enable faster detection.

Boyle says the company's mission is for its tests to save 100,000 lives. “There are lots of risks and lots of challenges. I think there's an opportunity to really establish breath as a new diagnostic class.”

Dr. May Edward Chinn, Kizzmekia Corbett, PhD., and Alice Ball, among others, have been behind some of the most important scientific work of the last century.

If you look back on the last century of scientific achievements, you might notice that the scientists we celebrate are overwhelmingly white, while scientists of color take a backseat. Since the Nobel Prize was introduced in 1901, for example, no black scientist has won the prize in physics, chemistry, or physiology or medicine.

The work of black women scientists has gone unrecognized in particular. Their work uncredited and often stolen, black women have nevertheless contributed to some of the most important advancements of the last 100 years, from the polio vaccine to GPS.

Here are five black women who have changed science forever.

Dr. May Edward Chinn

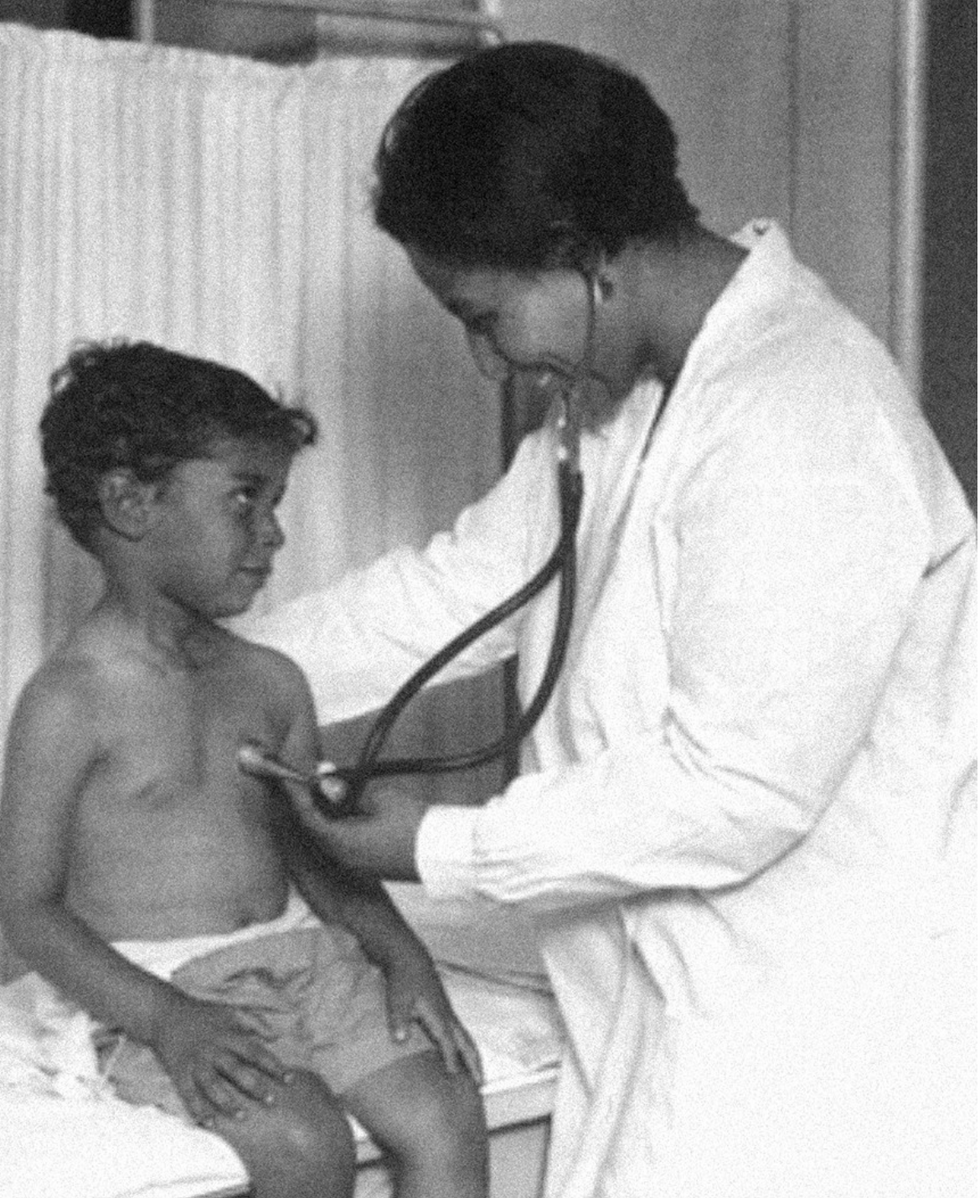

Dr. May Edward Chinn practicing medicine in Harlem

George B. Davis, PhD.

Chinn was born to poor parents in New York City just before the start of the 20th century. Although she showed great promise as a pianist, playing with the legendary musician Paul Robeson throughout the 1920s, she decided to study medicine instead. Like other black doctors of the time, Chinn was barred from studying or practicing in New York hospitals. So she set up a private practice and made house calls, sometimes operating in patients’ living rooms, using an ironing board as a makeshift operating table.

Chinn worked among the city’s poor, and in doing so, began to notice that many of her patients had late-stage cancers that had often gone undetected or untreated for years. To learn more about cancer and its prevention, Chinn begged information from white doctors who were willing to share with her, and even accompanied her patients to other clinic appointments in the city, claiming to be the family physician. Chinn integrated this information into her own practice, creating guidelines for early cancer detection that were revolutionary at the time: checking patient health histories, checking family histories, performing routine pap smears, and screening patients for cancer even before they showed symptoms. For years, Chinn was the only black female doctor working in Harlem, and she continued to work closely with the poor and advocate for early cancer screening until she retired at age 81.

Alice Ball

Pictorial Press Ltd/Alamy

Alice Ball was a chemist best known for her groundbreaking work on the development of the “Ball Method,” the first successful treatment for those suffering from leprosy during the early 20th century.

In 1916, while she was working as a chemist at the College of Hawaii (now the University of Hawaii), Ball studied the effects of chaulmoogra oil in treating leprosy. This oil was a well-established therapy in Asian countries, but it had such a foul taste and led to such unpleasant side effects that many patients refused to take it.

So Ball developed a method to isolate and extract the active compounds from Chaulmoogra oil to create an injectable medicine. This marked a significant breakthrough in leprosy treatment and became the standard of care for several decades afterward.

Unfortunately, Ball died before she could publish her results, and credit for this discovery was given to another scientist. One of her colleagues, however, was able to properly credit her in a publication in 1922.

Henrietta Lacks

Jonathan Newton/The Washington Post/Getty

The person who arguably contributed the most to scientific research in the last century, surprisingly, wasn’t even a scientist. Henrietta Lacks was a tobacco farmer and mother of five children who lived in Maryland during the 1940s. In 1951, Lacks visited Johns Hopkins Hospital where doctors found a cancerous tumor on her cervix. Before treating the tumor, the doctor who examined Lacks clipped two small samples of tissue from Lacks’ cervix without her knowledge or consent—something unthinkable today thanks to informed consent practices, but commonplace back then.

As Lacks underwent treatment for her cancer, her tissue samples made their way to the desk of George Otto Gey, a cancer researcher at Johns Hopkins. He noticed that unlike the other cell cultures that came into his lab, Lacks’ cells grew and multiplied instead of dying out. Lacks’ cells were “immortal,” meaning that because of a genetic defect, they were able to reproduce indefinitely as long as certain conditions were kept stable inside the lab.

Gey started shipping Lacks’ cells to other researchers across the globe, and scientists were thrilled to have an unlimited supply of sturdy human cells with which to experiment. Long after Lacks died of cervical cancer in 1951, her cells continued to multiply, and scientists continued to use them to develop cancer treatments, to learn more about HIV/AIDS, to pioneer fertility treatments like in vitro fertilization, and to develop the polio vaccine. To date, Lacks’ cells are estimated to have saved 10 million lives, and her family is beginning to get the compensation and recognition that Henrietta deserved.

Dr. Gladys West

Andre West

Gladys West was a mathematician who helped invent something nearly everyone uses today. West started her career in the 1950s at the Naval Surface Warfare Center Dahlgren Division in Virginia, where she used satellite data to create a mathematical model of the Earth’s shape and gravitational field. This work laid the groundwork for the technology that would later become the Global Positioning System, or GPS. West’s work was not widely recognized until she was honored by the US Air Force in 2018.

Dr. Kizzmekia "Kizzy" Corbett

TIME Magazine

At just 35 years old, Kizzmekia “Kizzy” Corbett has already made history. A viral immunologist by training, Corbett studied coronaviruses at the National Institutes of Health (NIH) and researched possible vaccines for coronaviruses such as SARS (Severe Acute Respiratory Syndrome) and MERS (Middle East Respiratory Syndrome).

At the start of the COVID pandemic, Corbett and her team at the NIH partnered with Moderna to develop an mRNA-based vaccine against the virus. Corbett’s previous work with mRNA and coronaviruses was vital in developing the vaccine, which became one of the first to be authorized for emergency use in the United States. The vaccine, along with others, is responsible for saving an estimated 14 million lives.

On today’s episode of Making Sense of Science, I’m honored to be joined by Dr. Paul Song, a physician, oncologist, progressive activist and biotech chief medical officer. Through his company, NKGen Biotech, Dr. Song is leveraging the power of patients’ own immune systems by supercharging the body’s natural killer cells to make new treatments for Alzheimer’s and cancer.

Whereas other treatments for Alzheimer’s focus directly on reducing the build-up of proteins such as amyloid and tau in the brains of patients with mild cognitive impairment, NKGen is seeking to help patients whom much of the medical community has written off as hopeless cases: those with late-stage Alzheimer’s. In small studies, NKGen has shown remarkable results, including improvement in the symptoms of people with these very advanced forms of Alzheimer’s, above and beyond slowing down the disease.

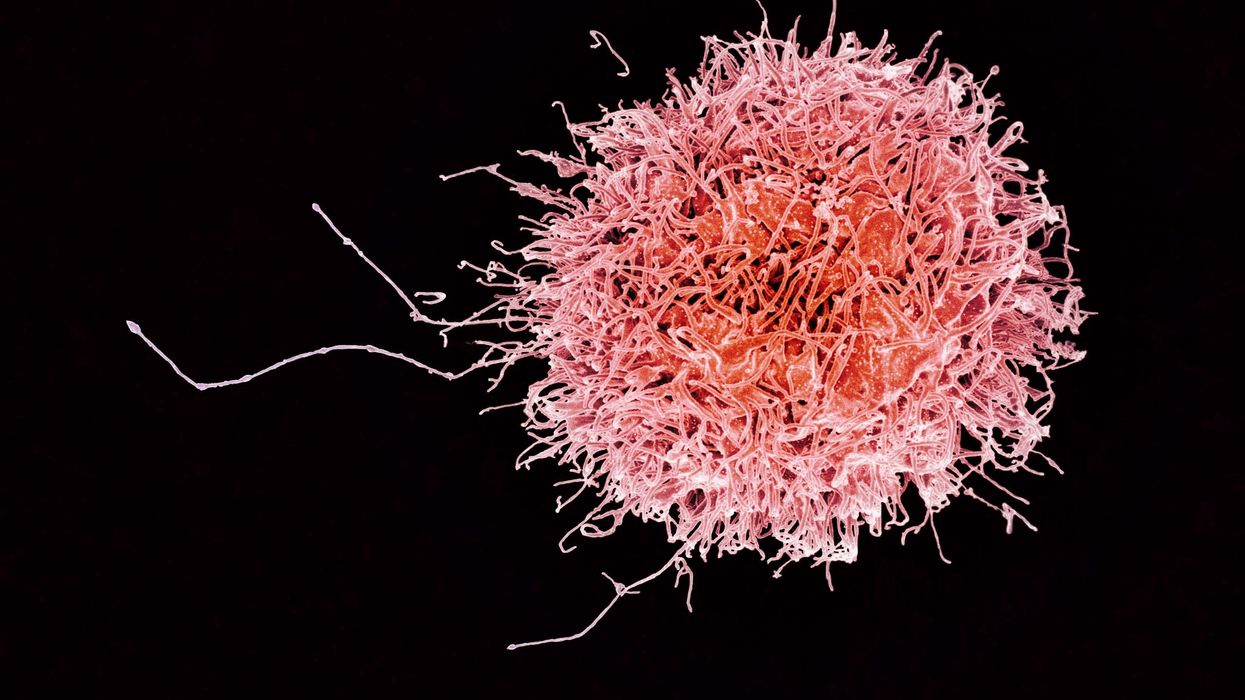

In the realm of cancer, Dr. Song is similarly setting his sights on another group of patients for whom treatment options are few and far between: people with solid tumors. Whereas gradual progress has been made in treating blood cancers such as certain leukemias in the past few decades, solid tumors have been even more of a challenge. Dr. Song’s approach of using natural killer cells to treat solid tumors is promising. You may have heard of CAR-T therapy, which genetically engineers a patient’s own T cells so they can recognize and attack cancer cells. NKGen instead focuses on other ways to enhance the more than 40 receptors on natural killer cells, making them more sensitive at picking out cancer cells.

Paul Y. Song, MD, is currently CEO and Vice Chairman of NKGen Biotech. Dr. Song’s last clinical role was Assistant Professor at the Samuel Oschin Cancer Center at Cedars-Sinai Medical Center.

Dr. Song served as the very first visiting fellow on healthcare policy in the California Department of Insurance in 2013. He is currently on the advisory board of the Pritzker School of Molecular Engineering at the University of Chicago and a board member of Mercy Corps, The Center for Health and Democracy, and Gideon’s Promise.

Dr. Song graduated with honors from the University of Chicago and received his MD from George Washington University. He completed his residency in radiation oncology at the University of Chicago, where he served as Chief Resident, and did a brachytherapy fellowship at the Institut Gustave Roussy in Villejuif, France. He was also awarded an ASTRO research fellowship in 1995 for his research in radiation-inducible gene therapy.

Under Dr. Song’s leadership, NKGen Biotech’s work on natural killer cells represents cutting-edge science that is producing key findings and important pieces of the puzzle for treating two of humanity’s most intractable diseases.

Show links

- Paul Song LinkedIn

- NKGen Biotech on Twitter - @NKGenBiotech

- NKGen Website: https://nkgenbiotech.com/

- NKGen appoints Paul Song

- Patient Story: https://pix11.com/news/local-news/long-island/promising-new-treatment-for-advanced-alzheimers-patients/

- FDA Clearance: https://nkgenbiotech.com/nkgen-biotech-receives-ind-clearance-from-fda-for-snk02-allogeneic-natural-killer-cell-therapy-for-solid-tumors/

- Q3 earnings data: https://www.nasdaq.com/press-release/nkgen-biotech-inc.-reports-third-quarter-2023-financial-results-and-business