Scientists forecast new disease outbreaks

A mosquito under the microscope.

Two years and six million deaths into the COVID-19 pandemic, with the toll still climbing, scientists are searching for ways to prevent another such tragedy. It’s a gargantuan task.

Our disturbed ecosystems are creating more favorable conditions for the spread of infectious disease. Global warming, deforestation, rising sea levels and flooding have contributed to a rise in mosquito-borne infections and longer tick seasons. Disease-carrying animals are coming into closer contact with other species and with humans as they migrate to escape the heat. Bats are thought to have carried the SARS-CoV-2 virus to Wuhan, either directly or through another host animal, but thousands of novel viruses are lurking within other wild creatures.

Understanding how climate change contributes to the spread of disease is critical to predicting and thwarting future calamities. But predictive models cannot yet forecast disease risk with confidence beyond the next year, in the way that meteorologists can forecast the weather.

The association between climate and infectious disease is poorly understood, says Irina Tezaur, a computational scientist at Sandia National Laboratories. “Correlations have been observed but it’s not known if these correlations translate to causal relationships.”

To make accurate longer-term predictions, scientists need more empirical data, multiple datasets specific to locations and diseases, and the ability to calculate risks that depend on unpredictable natural events and human behavior. Another obstacle is that climate scientists and epidemiologists are not collaborating effectively, so some researchers are calling for a multidisciplinary approach: a new field called Outbreak Science.

Climate scientists are far ahead of epidemiologists in gathering essential data.

Earth System Models, which combine the interactions of atmosphere, ocean, land, ice and biosphere, have been used for two decades to monitor the effects of global climate change. These models must be combined with epidemiological research and models of human behavior, areas that are easily skewed by unpredictable elements, from extreme weather events to shifts in public environmental policy.

“There is never just one driver in tracking the impact of climate on infectious disease,” says Joacim Rocklöv, a professor at the Heidelberg Institute of Global Health & Heidelberg Interdisciplinary Centre for Scientific Computing in Germany. Rocklöv has studied how climate affects vector-borne diseases, those transmitted to humans by mosquitoes, ticks or fleas. “You need to disentangle the variables to find out how much difference climate makes to the outcome and how much is other factors.” Factors ranging from deforestation to population density to lack of access to health care all influence the spread of disease.

Even though climate change is not the primary driver of infectious disease today, it poses a major threat to public health in the future, says Rocklöv.

The promise of predictive modeling

“Models are simplifications of a system we’re trying to understand,” says Jeremy Hess, who directs the Center for Health and the Global Environment at the University of Washington in Seattle. “They’re tools for learning that improve over time with new observations.”

Accurate predictions depend on high-quality, long-term observational data, but models must start with assumptions. “It’s not possible to apply an evidence-based approach for the next 40 years,” says Rocklöv. “Using models to experiment and learn is the only way to figure out what climate means for infectious disease. We collect data and analyze what already happened. What we do today will not make a difference for several decades.”

To improve accuracy, scientists develop and draw on thousands of models to cover as many scenarios as possible. One model may capture the dynamics of disease transmission while another focuses on immunity data or ocean influences or seasonal components of a virus. Further, each model needs to be disease-specific and often location-specific to be useful.

“All models have biases so it’s important to use a suite of models,” Tezaur stresses.

The modeling scientist chooses the drivers of change and the parameters based on the question being explored. The drivers could be increased precipitation, poverty or mosquito prevalence, for instance. Later, isolating the effect of a single driver may require yet another model.

There have been some related successes, such as the latest models for mosquito-borne diseases like dengue, Zika and malaria as well as those for flu and tick-borne diseases, says Hess.

Rocklöv was part of a research team that used test data from 2018 and 2019 to identify regions at risk for West Nile virus outbreaks. Using AI, scientists were able to forecast outbreaks of the virus for the entire transmission season in Europe. “In the end, we want data-driven models; that’s what AI can accomplish,” says Rocklöv. Other researchers are making important headway in creating a framework to predict novel host–parasite interactions.

Modeling studies can run months, years or decades. “The scientist is working with layers of data. The challenge is how to transform and couple different models together on a planetary scale,” says Jeanne Fair, a scientist in Biosecurity and Public Health at Los Alamos National Laboratory in New Mexico.

And it’s a constantly changing picture. A modeling study in an April 2022 issue of Nature predicted that thousands of animal species will migrate to cooler locales as temperatures rise. As a result, many species will come into contact with people and with other mammals for the first time. This is likely to increase the risk of emerging infectious diseases transmitted from animals to humans, especially in Africa and Asia.

Other things can happen too. Global warming could precipitate viral mutations or new infectious diseases that don’t respond to antimicrobial treatments. Insecticide-resistant mosquitoes could evolve. Weather-related food insecurity could increase malnutrition and weaken people’s immune systems. And the impact of an epidemic will be worse if it coincides with a heatwave, flood, or drought, says Hess.

The devil is in the climate variables

Solid predictions about the future of climate and disease are not possible with so many uncertainties. Difficult-to-measure drivers must be added to the empirical model mix, such as land and water use, ecosystem changes or the public’s willingness to accept a vaccine or practice social distancing. Nor is there any precedent for calculating the effects of climate change that is accelerating faster than ever before.

The most critical climate variables thought to influence disease spread are temperature, precipitation, humidity, sunshine and wind, according to Tezaur’s research. And then there are variables within variables. Influenza scientists, for example, found that warm winters were predictors of the most severe flu seasons in the following year.

The human factor may be the most challenging determinant. To what degree will people curtail greenhouse gas emissions, if at all? The swift development of effective COVID-19 vaccines was a game-changer, but will scientists be able to repeat it during the next pandemic? Plus, no model could predict the amount of internet-fueled COVID-19 misinformation, Fair noted. To tackle this issue, infectious disease teams are looking to include more sociologists and political scientists in their modeling.

Addressing the gaps

Currently, researchers are focusing on the near future, predicting for next year, says Fair. “When it comes to long-term, that’s where we have the most work to do.” While scientists cannot foresee how political influences and the spread of misinformation will affect models, they are positioned to make headway in collecting and assessing new data streams that have never been merged.

Disease forecasting will require a significant investment into the infrastructure needed to collect data about the environment, vectors, and hosts at all spatial and temporal resolutions, Fair and her co-authors stated in their recent study. For example, real-time data on mosquito prevalence and diversity in various settings and times is limited or non-existent. Fair also would like to see standards set in mosquito data collection in every country. “Standardizing across the US would be a huge accomplishment,” she says.

Hess points to a dearth of local and regional data on how extreme weather events play out in different geographic locations. For example, his research indicates that Africa and the Middle East have experienced substantial climate shifts but are unrepresented in the evidentiary database, which limits the conclusions that can be drawn. “A model for dengue may be good in Singapore but not necessarily in Port-au-Prince,” Hess explains. And, he adds, scientists need a way to evaluate how effective models are.

The hope, Rocklöv says, is that in the future we will have data-driven models rather than theoretical ones. In turn, sharper statistical analyses can inform resource allocation and intervention strategies to prevent outbreaks.

Most of all, experts emphasize that epidemiologists and climate scientists must stop working in silos. If scientists can successfully merge epidemiological data with climatic, biological, environmental, ecological and demographic data, they will make better predictions about complex disease patterns. Limited modeling “cross talk” among disciplines and, in some cases, countries’ refusal to share data are hindering discovery and progress.

It’s time for bold transdisciplinary action, says Hess. He points to initiatives that need funding: disease surveillance and control; developing and testing interventions; community education and social mobilization; decision-support analytics to predict when and where infections will emerge; advanced methodologies to improve modeling; and training scientists in data management and integrated surveillance.

Establishing a new field of Outbreak Science to coordinate collaboration would accelerate progress. Investment in decision-support modeling tools for public health teams, policy makers and other long-term planning stakeholders is imperative, too. Tezaur calls for investment in programs that encourage climate modelers and epidemiologists to work together cohesively. Joining forces is the only way to solve the formidable challenges ahead.

This article originally appeared in One Health/One Planet, a single-issue magazine that explores how climate change and other environmental shifts are increasing vulnerabilities to infectious diseases by land and by sea. The magazine probes how scientists are making progress with leaders in other fields toward solutions that embrace diverse perspectives and the interconnectedness of all lifeforms and the planet.

Researchers claimed they built a breakthrough superconductor. Social media shot it down almost instantly.

In July, South Korean scientists posted a paper claiming they had achieved room-temperature superconductivity, a claim that was debunked within days.

Harsh Mathur was a graduate physics student at Yale University in late 1989 when faculty announced they had failed to replicate claims made by scientists at the University of Utah and the University of Southampton in England.

Such work is routine. Replicating or attempting to replicate the contraptions, calculations and conclusions crafted by colleagues is foundational to the scientific method. But in this instance, Yale’s findings were reported globally.

“I had a ringside view, and it was crazy,” recalls Mathur, now a professor of physics at Case Western Reserve University in Ohio.

Yale’s findings drew so much attention because initial experiments by Stanley Pons of Utah and Martin Fleischmann of Southampton led to a startling claim: They were able to fuse atoms at room temperature, a scientific El Dorado known as “cold fusion.”

Nuclear fusion powers the stars in the universe. However, star cores must be at least 23.4 million degrees Fahrenheit and under extraordinary pressure to achieve fusion. Pons and Fleischmann claimed they had created an almost limitless source of power achievable at any temperature.

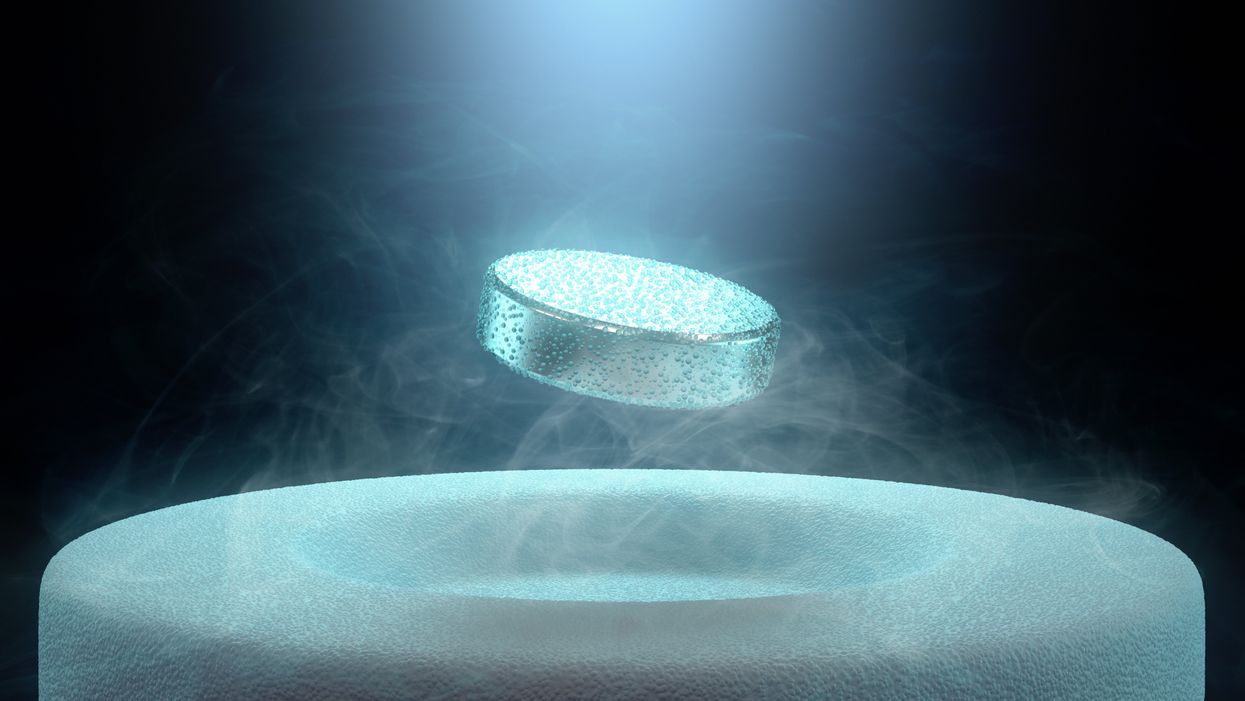

Like fusion, superconductivity can only be achieved in mostly impractical circumstances.

But about six months after they made their startling announcement, the pair’s findings were discredited by researchers at Yale and the California Institute of Technology. It was one of the first instances of a major scientific debunking covered by mass media.

Some scholars say the media attention for cold fusion stemmed partly from a dazzling announcement made three years earlier, in 1986: Scientists had created the first “high-temperature” superconductor. Superconductors are materials that carry electrical current with no resistance. The discovery drew global headlines and whetted the public’s appetite for announcements of scientific breakthroughs that could cause economic transformations.

But like fusion, superconductivity can only be achieved in mostly impractical circumstances: It must operate either at temperatures of negative 100 degrees Fahrenheit or colder, or under pressures of around 150,000 pounds per square inch. Superconductivity that functions in a closer-to-normal environment would cut energy costs dramatically while also opening vast possibilities for computing, space travel and other applications.

In July, a group of South Korean scientists posted material claiming they had created a copper-doped lead-apatite crystal called LK-99 that could achieve superconductivity at slightly above room temperature and at ambient pressure. The group partners with the Quantum Energy Research Centre, a privately held enterprise in Seoul, and their claims drew global headlines.

Their work was also debunked. But in the age of the internet and social media, the process was compressed from half a year into days. And it did not require researchers at world-class universities.

One of the most compelling critiques came from Derrick VanGennep. Although he works in finance, he holds a Ph.D. in physics and held a postdoctoral position at Harvard. The South Korean researchers had posted a video of a nugget of LK-99 in what they claimed was the throes of the Meissner effect, the expulsion of magnetic fields from a superconductor’s interior, which would cause it to levitate above a magnet. Unless Hollywood magic is involved, only superconducting material can hover in this manner.

That claim made VanGennep skeptical, particularly since LK-99’s levitation appeared unenthusiastic at best. In fact, a corner of the material still adhered to the magnet near its center. He thought the video instead demonstrated ferromagnetism, the ordinary magnetism of materials such as iron. He mixed powdered graphite with super glue, stuck iron filings to its surface and mimicked the behavior of LK-99 in his own video, which was posted alongside the researchers’ video.

VanGennep believes the boldness of the South Korean claim was what led him and others in the scientific community to question it so quickly.

“The swift replication attempts stemmed from the combination of the extreme claim, the fact that the synthesis for this material is very straightforward and fast, and the amount of attention that this story was getting on social media,” he says.

But practicing scientists were suspicious of the data as well. Michael Norman, director of the Argonne Quantum Institute at the Argonne National Laboratory just outside of Chicago, had doubts immediately.

“It wasn’t a very polished paper,” Norman says of the Korean scientists’ work. That opinion was reinforced, he adds, when it turned out the paper had been posted online by one of the researchers prior to seeking publication in a peer-reviewed journal. Although Norman and Mathur say that is routine with scientific research these days, Norman notes it was posted by one of the junior researchers over the doubts of two more senior scientists on the project.

Norman also raises doubts about the data reported. Among other issues, he observes that the samples created by the South Korean researchers contained traces of copper sulfide that could inadvertently amplify findings of conductivity.

The lack of the Meissner effect also caught Mathur’s attention. “Ferromagnets tend to be unstable when they levitate,” he says, adding that the video “just made me feel unconvinced. And it made me feel like they hadn't made a very good case for themselves.”

Will this saga hurt or even affect the careers of the South Korean researchers? Possibly not, if the previous fusion example is any indication. Despite being debunked, cold fusion claimants Pons and Fleischmann didn’t disappear. They moved their research to automaker Toyota’s IMRA laboratory in France, which along with the Japanese government spent tens of millions of dollars on their work before finally pulling the plug in 1998.

Fusion has since been achieved in laboratories, but because they cannot reproduce the density of a star’s core, it requires excruciatingly high temperatures: about 160 million degrees Fahrenheit. A recently released Government Accountability Office report concludes that practical fusion likely remains at least decades away.

However, like Pons and Fleischmann, the South Korean researchers are not going anywhere. They claim that LK-99’s Meissner effect is being obscured by the fact that the substance is both ferromagnetic and diamagnetic. They have filed for a patent in their country. But for now, those claims remain chimerical.

In the meantime, opinions are mixed on when a room-temperature superconductor will be achieved. VanGennep, who studied the issue during his graduate and postdoctoral work, puts the chance of creating such a superconductor by 2050 at perhaps 50-50. Mathur believes it could happen sooner, but adds that research on the topic has been going on for nearly a century, and that it has seen many plateaus.

“There's always this possibility that there's going to be something out there that we're going to discover unexpectedly,” Norman notes. The only certainty in this age of social media is that any such discovery will be put through the rigors of replication almost instantly.

Scientists implant brain cells to counter Parkinson's disease

In a recent research trial, patients with Parkinson's disease reported that their symptoms had improved after stem cells were implanted into their brains. Martin Taylor, far right, was diagnosed at age 32.

Martin Taylor was only 32 when he was diagnosed with Parkinson's, a disease that causes tremors, stiff muscles and slow physical movement, symptoms that steadily worsen over time.

“It's horrible having Parkinson's,” says Taylor, a data analyst, now 41. “It limits my ability to be the dad and husband that I want to be in many cruel and debilitating ways.”

Today, more than 10 million people worldwide live with Parkinson's. Most are diagnosed when they're considerably older than Taylor, after age 60. Although recent research has called into question certain aspects of the disease’s origins, Parkinson’s eventually kills the nerve cells in the brain that produce dopamine, a signaling chemical that nerve cells use to carry the messages that control movement. Many patients have lost 60 to 80 percent of these cells by the time they are diagnosed.

For years, there's been little improvement in the standard treatment. Patients are typically given the drug levodopa, a chemical that's absorbed by the brain’s nerve cells, or neurons, and converted into dopamine. This drug addresses the symptoms but has no impact on the course of the disease as patients continue to lose dopamine-producing neurons. Eventually, the treatment stops working effectively.

BlueRock Therapeutics, a cell therapy company based in Massachusetts, is taking a different approach by focusing on the use of stem cells, which can divide and give rise to new specialized cells. The company makes the dopamine-producing cells that patients have lost and inserts these cells into patients' brains. “We have a disease with a high unmet need,” says Ahmed Enayetallah, the senior vice president and head of development at BlueRock. “We know [which] cells…are lost to the disease, and we can make them. So it really came together to use stem cells in Parkinson's.”

In a phase 1 research trial announced late last month, patients reported that their symptoms had improved after a year of treatment. Brain scans also showed an increased number of neurons generating dopamine in patients’ brains.

Increases in dopamine signals

The recent phase 1 trial focused on deploying BlueRock’s cell therapy, called bemdaneprocel, to treat 12 patients suffering from Parkinson’s. The team developed the new nerve cells and implanted them into specific locations on each side of the patient's brain through two small holes in the skull made by a neurosurgeon. “We implant cells into the places in the brain where we think they have the potential to reform the neural networks that are lost to Parkinson's disease,” Enayetallah says. The goal is to restore motor function to patients over the long-term.

Five patients were given a relatively low dose of cells while seven got higher doses. Specialized brain scans showed evidence that the transplanted cells had survived, increasing the overall number of dopamine-producing cells. The team compared the baseline number of these cells before surgery to the levels one year later. “The scans tell us there is evidence of increased dopamine signals in the part of the brain affected by Parkinson's,” Enayetallah says. “Normally you’d expect the signal to go down in untreated Parkinson’s patients.”

The team also asked patients to use a specific type of home diary to log the times when symptoms were well controlled and when they prevented normal activity. After a year of treatment, patients taking the higher dose reported that their symptoms were under control for an average of 2.16 hours per day more than at baseline. At the lower dose, the improvement was considerably smaller: 0.72 hours per day. The higher-dose patients also reported a corresponding decrease in the amount of time when symptoms were uncontrolled, by an average of 1.91 hours, compared to 0.75 hours for the lower dose. The treatment proved safe in the trial, and patients tolerated the year of immunosuppression needed to make sure their bodies could handle the foreign cells.

Claire Bale, the associate director of research at Parkinson's U.K., sees the promise of BlueRock's approach, while noting the need for more research on a possible placebo effect. The trial participants knew they were getting the active treatment, and placebo effects are known to be a potential factor in Parkinson’s research. Even so, “The results indicate that this therapy produces improvements in symptoms for Parkinson's, which is very encouraging,” Bale says.

Tilo Kunath, a professor of regenerative neurobiology at the University of Edinburgh, also finds the results intriguing. “I think it's excellent,” he says. “I think it has a real chance to reverse motor symptoms, essentially replacing a missing part.” However, it could take time for this therapy to become widely available, Kunath says, and patients in the late stages of the disease may not benefit as much. “Data from cell transplantation with fetal tissue in the 1980s and 90s show that cells did not survive well and release dopamine in these [late-stage] patients.”

Searching for the right approach

There's a long history of using cell therapy as a treatment for Parkinson's. About four decades ago, scientists at the University of Lund in Sweden developed a method in which they transplanted fetal brain tissue into patients with Parkinson's so that the transplanted cells would produce dopamine. Many benefited, and some were able to stop their medication. However, the use of fetal tissue was highly controversial at that time, and the tissues were difficult to obtain. Later trials in the U.S. showed that people benefited only if a significant amount of the tissue was used, and several patients experienced side effects. Eventually, the work lost momentum.

In 2000, Lorenz Studer led a team at Memorial Sloan Kettering Cancer Center, in New York, to find the chemical signals needed to get stem cells to differentiate into cells that release dopamine. Back then, the team managed to make cells that produced some dopamine, but they led to only limited improvements in animals. About a decade later, in 2011, Studer and his team found the specific signals needed to guide embryonic cells to become the right kind of dopamine-producing cells. Their experiments in mice, rats and monkeys showed that their implanted cells had a significant impact, restoring lost movement.

Studer then co-founded BlueRock Therapeutics in 2016. Forming the most effective stem cells has been one of the biggest challenges, says Enayetallah, the BlueRock VP. “It's taken a lot of effort and investment to manufacture and make the cells at the right scale under the right conditions.” The team is now using cells that were first isolated in 1998 at the University of Wisconsin, a major advantage because they’re available in a virtually unlimited supply.

Other efforts underway

In the past several years, University of Lund researchers have begun to collaborate with the University of Cambridge on a project to use embryonic stem cells, similar to BlueRock’s approach. They began clinical trials this year.

A company in Japan called Sumitomo is using a different strategy: instead of stem cells from embryos, it is reprogramming adults' blood or skin cells into induced pluripotent stem cells, which can turn into any cell type, and then directing them to become dopamine-producing neurons. Although Sumitomo started clinical trials earlier than BlueRock, it hasn’t yet revealed any results.

“It's a rapidly evolving field,” says Emma Lane, a pharmacologist at the University of Cardiff who researches clinical interventions for Parkinson’s. “But BlueRock’s trial is the first full phase 1 trial to report such positive findings with stem-cell-based therapies.” The company’s upcoming phase 2 research will be critical to show how effectively the therapy can improve disease symptoms, she added.

The cure over the horizon

BlueRock will continue to look at data from patients in the phase 1 trial to monitor the treatment’s effects over a two-year period. Meanwhile, the team is planning the phase 2 trial with more participants, including a placebo group.

For patients with Parkinson’s like Martin Taylor, the therapy offers some hope, though Taylor recognizes that more research is needed.

“Like many in the community, I'm aware of the long history of cell therapy,” he says. “They've long had that cure over the horizon.” His expectations are somewhat guarded, he says, but, “it's certainly positive to see…movement in the field again.”

“If we can demonstrate what we’re seeing today in a more robust study, that would be great,” Enayetallah says. “At the end of the day, we want to address that unmet need in a field that's been waiting for a long time.”

Editor's note: The company featured in this piece, BlueRock Therapeutics, is a portfolio company of Leaps by Bayer, which is a sponsor of Leaps.org. BlueRock was acquired by Bayer Pharmaceuticals in 2019. Leaps by Bayer and other sponsors have never exerted influence over Leaps.org content or contributors.