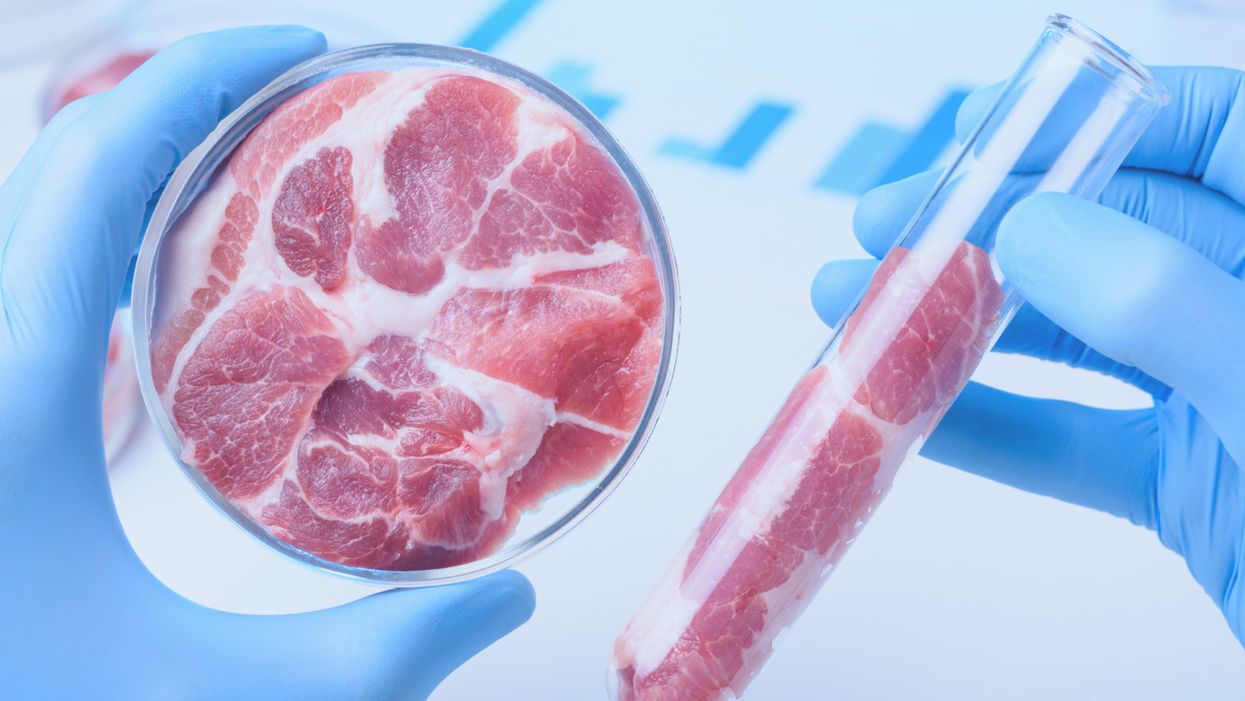

Lab-grown meat in a Petri dish and test tube.

In September, California Governor Jerry Brown signed a bill mandating that by 2045, all of California's electricity will come from clean power sources. Technological breakthroughs in producing electricity from sun and wind, together with the falling cost of battery storage, have played a major role in persuading Californian legislators that this goal is realistic.

James Robo, the CEO of the Fortune 200 company NextEra Energy, has predicted that by the early 2020s, generating electricity from solar farms and giant wind turbines will be cheaper than merely operating existing coal-fired power plants, even when the cost of storage is included.

Can we therefore all breathe a sigh of relief, because technology will save us from catastrophic climate change? Not yet. Even if the world were to move to an entirely clean power supply, and use that clean power to charge up an all-electric fleet of cars, buses and trucks, one major source of greenhouse gas emissions would continue to grow: meat.

The livestock industry now accounts for about 15 percent of global greenhouse gas emissions, roughly the same as the emissions from the tailpipes of all the world's vehicles. But whereas vehicle emissions can be expected to decline as hybrids and electric vehicles proliferate, global meat consumption is forecast to be 76 percent greater in 2050 than it has been in recent years. Most of that growth will come from Asia, especially China, where rising prosperity has driven up demand for meat.

Changing Climate, Changing Diets, a report from the London-based Royal Institute of International Affairs, highlights the threat posed by meat production. At the UN climate change conference held in Cancun in 2010, the participating countries agreed that allowing global temperatures to rise more than 2°C above pre-industrial levels would run an unacceptable risk of catastrophe. Beyond that limit, feedback loops will take effect, causing still more warming. For example, the thawing Siberian permafrost will release large quantities of methane, causing yet more warming and releasing yet more methane. Methane is a greenhouse gas that, ton for ton, warms the planet about 30 times as much as carbon dioxide over a 100-year period.

The quantity of greenhouse gases we can put into the atmosphere between now and mid-century without heating up the planet beyond 2°C (known as the "carbon budget") is shrinking steadily. The growing demand for meat means, however, that emissions from the livestock industry will continue to rise and will absorb an increasing share of this remaining carbon budget. This will, according to Changing Climate, Changing Diets, make it "extremely difficult" to limit the temperature rise to 2°C.

One reason why eating meat produces more greenhouse gases than getting the same food value from plants is that we use fossil fuels to grow grains and soybeans and then feed them to animals. The animals use most of the energy in the plant food for themselves, moving, breathing, and keeping their bodies warm. That leaves only a small fraction for us to eat, and so we have to grow several times the quantity of grains and soybeans that we would need if we ate plant foods ourselves. The other important factor is the methane produced by ruminants (mainly cattle and sheep) as part of their digestive process. Surprisingly, that makes grass-fed beef even worse for our climate than beef from animals fattened in a feedlot. Cattle fed on grass put on weight more slowly than cattle fed on corn and soybeans, and therefore burp and fart more methane per kilogram of flesh they produce.

If technology can give us clean power, can it also give us clean meat? That term is already in use by advocates of growing meat at the cellular level. They use it not to draw a parallel with clean energy, but to emphasize that meat from live animals is dirty, because live animals shit. Bacteria from the animals' guts and shit often contaminate the meat. With meat cultured from cells grown in a bioreactor, there is no live animal, no shit, and no bacteria from a digestive system to get mixed into the meat. There is also no methane. Nor is there a living animal to keep warm, move around, or grow body parts that we do not eat. Hence producing meat in this way would be much more efficient, and much cleaner in the environmental sense, than producing meat from animals.

There are now many startups working on bringing clean meat to market. Plant-based products that have the texture and taste of meat, like the "Impossible Burger" and the "Beyond Burger," are already available in restaurants and supermarkets. Meanwhile, clean hamburger meat, fish, dairy, and other animal products are all being produced without raising and slaughtering a living animal. The price is not yet competitive with animal products, but it is coming down rapidly. Just this week, leading officials from the Food and Drug Administration and the U.S. Department of Agriculture met to discuss how to regulate the expected production and sale of meat produced by this method.

When Kodak, which once dominated the sale and processing of photographic film, decided to treat digital photography as a threat rather than an opportunity, it signed its own death warrant. Tyson Foods and Cargill, two of the world's biggest meat producers, are not making the same mistake. They are investing in companies seeking to produce meat without raising animals. Justin Whitmore, Tyson's executive vice-president, said, "We don't want to be disrupted. We want to be part of the disruption."

That's a brave stance for a company that has made its fortune from raising and killing tens of billions of animals, but it is also an acknowledgement that when new technologies create products that people want, they cannot be resisted. Richard Branson, who has invested in the biotech company Memphis Meats, has suggested that in 30 years, we will look back on the present era and be shocked that we killed animals en masse for food. If that happens, technology will have made possible the greatest ethical step forward in the history of our species, saving the planet and eliminating the vast quantity of suffering that industrial farming is now inflicting on animals.

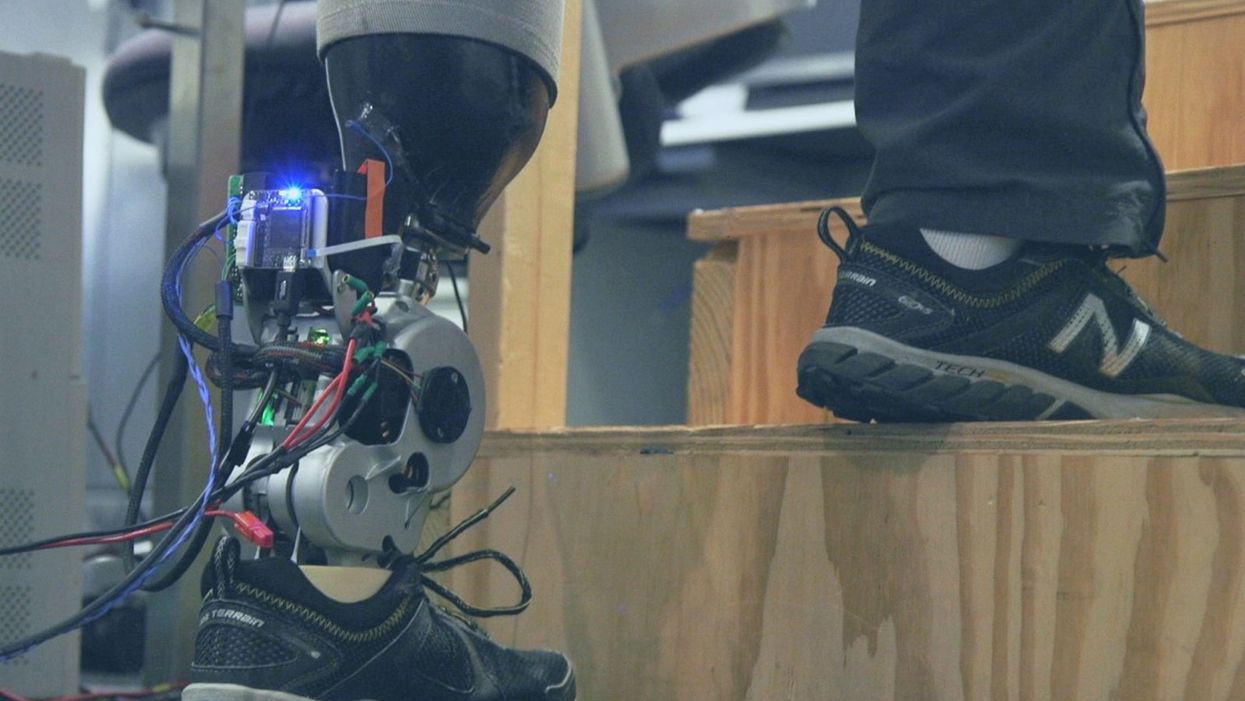

A patient with below-knee AMI amputation walks up the stairs.

"Here's a question for you," I say to our dinner guests, dodging a knowing glance from my wife. "Imagine a future in which you could surgically replace your legs with robotic substitutes that had all the functionality and sensation of their biological counterparts. Let's say these new legs would allow you to run all day at 20 miles per hour without getting tired. Would you have the surgery?"

Like most people I pose this question to, our guests respond with some variation on the theme of "no way"; the idea of undergoing a surgical procedure with the sole purpose of augmenting performance beyond traditional human limits borders on the unthinkable.

"Would your answer change if you had arthritis in your knees?" This is where things get interesting. People think differently about intervention when injury or illness is involved. The idea of major surgery becomes more palatable to us in the setting of rehabilitation.

Consider the simple example of human walking speed. The average human walks at a baseline speed of about three miles per hour. If someone can walk at only one mile per hour, we do everything we can to improve their walking ability. However, taking a person who already walks at three miles per hour and surgically altering their body so that they can walk twice as fast seems, to us, unreasonable.

What fascinates me about this is that the three-mile-per-hour baseline is set by arbitrary limitations of the healthy human body. If we ignore this reference point altogether, and consider that each case simply offers an improvement in walking ability, the line between augmentation and rehabilitation all but disappears. Why, then, are we so married to this arbitrary distinction between rehabilitating and augmenting? What makes us hold so tightly to baseline human function?

Where We Stand Now

As the functionality of advanced prosthetic devices continues to increase at an astounding rate, questions like these are becoming more relevant. Experimental prostheses, intended for the rehabilitation of people with amputation, are now able to replicate the motions of biological limbs with high fidelity. Neural interfacing technologies enable a person with amputation to control these devices with their brain and nervous system. Before long, synthetic body parts will outperform biological ones.

Against this backdrop, my colleagues and I developed a methodology to improve the connection between the biological body and a synthetic limb. Our approach, known as the agonist-antagonist myoneural interface ("AMI" for short), enables us to reflect joint movement sensations from a prosthetic limb onto the human nervous system. In other words, the AMI allows people to not only control a prosthesis with their brain, but also to feel its movements as if it were their own limb. The AMI involves a reimagining of the amputation surgery, so that the resultant residual limb is better suited to interact with a neurally controlled prosthesis. In addition to increasing functionality, the AMI was designed with the primary goal of enabling adoption of a prosthetic limb as part of a patient's physical identity (known as "embodiment").

Early results have been remarkable. Patients with below-knee AMI amputation are better able to control an experimental prosthetic leg than people whose legs were amputated in the traditional way. In addition, the AMI patients show more evidence of embodiment. They identify with the device and describe feeling as though it is part of them, part of self.

Where We're Going

True embodiment of robotic devices has the potential to fundamentally alter humankind's relationship with the built world. Throughout history, humans have excelled as tool builders. We innovate in ways that allow us to design and augment the world around us. However, tools for augmentation are typically external to our body identity; there is a clean line drawn between smartphone and self. As we advance our ability to integrate synthetic systems with physical identity, humanity will have the capacity to sculpt that very identity, rather than just the world in which it exists.

For this potential to be realized, we will need to let go of our reservations about surgery for augmentation. In reality, this shift has already begun. Consider the approximately 17.5 million surgical and minimally invasive cosmetic procedures performed in the United States in 2017 alone. Many of these were performed on patients with no demonstrated medical need, who opted to undergo a surgical procedure for the sole purpose of synthetically enhancing their bodies. The ethical basis for such a procedure rests on the individual's own judgment that its benefits outweigh its costs.

At present, it seems absurd that amputation would ever reach this point. However, as robotic technology improves and becomes more integrated with self, the balance of cost and benefit will shift, lending a new perspective on what now seems like an unfathomable decision to electively amputate a healthy limb. When this barrier is crossed, we will collide head-on with the question of whether it is acceptable for a person to "upgrade" such an essential part of their body.

At a societal level, the potential benefits of physical augmentation are far-reaching. The world of robotic limb augmentation will be a world of experienced surgeons whose hands are perfectly steady, firefighters whose legs allow them to kick through walls, and athletes who never again have to worry about injury. It will be a world in which a teenage boy and his grandmother embark together on a four-hour sprint through the woods, for the sheer joy of it. It will be a world in which the human experience is fundamentally enriched, because our bodies, which play such a defining role in that experience, are truly malleable.

This is not to say that such societal benefits stand without potential costs. One justifiable concern is the misuse of augmentative technologies. We are all quite familiar with the proverbial supervillain whose nervous system has been fused to that of an all-powerful robot.

In reality, misuse is likely to be both subtler and more insidious than this. As with all new technology, careful legislation will be necessary to guard against those who would hijack physical augmentations for violent or oppressive purposes. It will also be important to ensure broad access to these technologies, to protect against further socioeconomic stratification. On this point, it helps that the cost of a technology tends to fall as its market grows. It is my hope that when robotic augmentations are as ubiquitous as cell phones, the technology will serve to equalize, rather than to stratify.

In the future, once we as a society decide that the benefits of augmentation outweigh the costs, it will no longer matter whether the materials that make up our bodies are biological or synthetic. When our AMI patients are connected to their experimental prosthesis, it is irrelevant to them that the leg is made of metal and carbon fiber; to them, it is simply their leg. After our first patient tried the experimental prosthesis for the first time, he sent me an email that offers a glimpse of the immense possibilities the future holds:

What transpired is still slowly sinking in. I keep trying to describe the sensation to people. Then this morning my daughter asked me if I felt like a cyborg. The answer was, "No, I felt like I had a foot."

Do New Tools Need New Ethics?

Symbols of countries on a chessboard.

Scarcely a week goes by without the announcement of another breakthrough in biotechnology. Recent examples include the use of gene editing tools to alter human embryos; the cloning of monkeys; new immunotherapy-based treatments offering longer lives or even potential cures for previously deadly cancers; and the creation of genetically altered mosquitoes that use "gene drives" to spread changes rapidly through a wild population and alter its capacity to carry disease.

Each of these examples puts pressure on current policy guidelines and approaches, some dating back to the late 1970s, that were created to help guide the introduction of controversial new life sciences technologies. But do the policies that made sense decades ago still make sense today, or do the tools created in a different era of science demand new ethical guidelines and policies?

Advances in biotechnology aren't new, of course; in fact, they have been a hallmark of science since the creation of the modern U.S. National Institutes of Health in the 1940s and of similar government agencies elsewhere. Funding agencies focused on health sciences research in the hope of creating breakthroughs in human health, and along the way, basic science discoveries led to new scientific tools that offered fundamentally new ways to approach life, death, and disease.

For example, take the discovery in the 1970s of restriction enzymes, the "chemical scissors" in living cells that could be harnessed to cut a strand of DNA at predictable locations. This led to tools that, for the first time, allowed genetic modification of any organism with DNA. In principle, harmful mutations in bacteria, plants, animals, and even humans could be repaired, but genetic traits could also be altered or even added, with potentially ominous implications.

The scientists involved in that early research convened a small conference to discuss not only the science, but also how to responsibly control its potential uses and their implications. The meeting became known as the Asilomar Conference, after the conference center where it was held, and is often cited as the prime example of the scientific community policing itself. While the Asilomar recommendations were not sufficient from a policy standpoint, they offered a blueprint on which policies could be based and presented a model of the scientific community setting responsible controls for itself.

But the environment for conducting science changed over the succeeding decades, and it is dramatically different today than it was in the 1970s, the 1980s, or even the early 2000s. The oversight and regulatory regime that, since the mid-1970s, has governed the introduction of so-called "gene therapy" in humans is beginning to show signs of fraying. The vast majority of such research was performed in the U.S., the U.K., and the rest of Europe, where policies were largely harmonized. But as the tools for manipulating human genes at the molecular level advanced, they also became more reliable and precise, as well as cheaper and easier to use (think CRISPR), and therefore accessible to more people in many more countries, many of which lack clear oversight or policies laying out responsible controls.

As if to make the point through news headlines, scientists in China announced in 2017 that they had attempted to perform gene editing on in vitro human embryos to repair an inherited mutation for beta thalassemia, research that would not be permitted in the U.S. and most European countries and at the time was also banned in the U.K. Similarly, specialists from a U.S. reproductive medicine clinic announced in 2016 that they had used a highly controversial reproductive technology that combines DNA from two women (producing so-called "three-parent babies"), performing the procedure at a satellite clinic they had opened in Mexico to avoid the prohibition on the technique passed by the U.S. Congress in 2015.

In both cases, genetic changes were introduced into human embryos that, if successful, would lead to the birth of a child with genetically modified germline cells (the cells that give rise to sperm or eggs), with those genetic changes passed on to all future generations of the child's descendants. Those are just two very recent examples, and it doesn't take much imagination to predict other controversial applications of advancing biotechnologies: attempts at genetic augmentation or even cloning in humans, and alteration of the natural environment with genetically engineered mosquitoes or other insects in areas with endemic disease. In fact, as soon as this month, scientists in Africa may release genetically modified mosquitoes for the first time.

The technical barriers are falling at a dramatic pace, but policy hasn't kept up, both in terms of what controls make sense and how to address what is an increasingly global challenge. There is no precedent for global-scale science policy, though that is exactly what this moment seems to demand. Mechanisms for policy at a global scale are limited (think UN declarations, agreements among signatory countries, and occasional international treaties), and all are slow and cumbersome, with limited track records of success.

But not all the news is bad. There are ongoing efforts at international discussion, such as an international summit on human genome editing convened in 2015 by the U.S. National Academies of Sciences and Medicine, the U.K.'s Royal Society, and the Chinese Academy of Sciences; a follow-on international consensus committee whose report was issued in 2017; and an upcoming second international summit in Hong Kong in November this year.

These efforts should continue to focus less on common regulatory policies, which will be difficult if not impossible to create and implement, and more on finding common ground on the principles that ought to guide country-level rules. Such principles might include those proposed by the international consensus committee: transparency, due care, responsible science adhering to professional norms, promoting the well-being of those affected, and transnational cooperation. Work to create a set of shared norms is ongoing and worth continued effort as the relevant stakeholders attempt to navigate what can only be called a brave new world.