Polio to COVID-19: Why Has Vaccine Confidence Eroded Over the Last 70 Years?

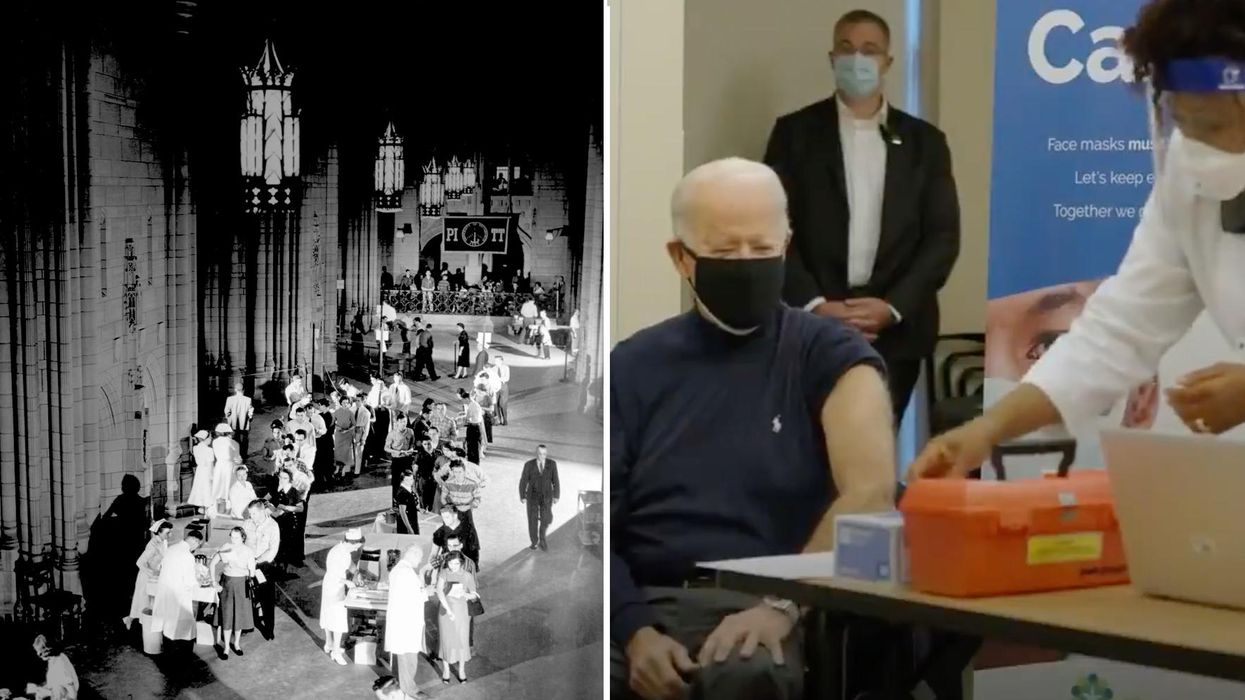

On the left, people excitedly line up for Salk's polio vaccine in 1957; on the right, Joe Biden receives a COVID-19 vaccine on December 21, 2020.

On the morning of April 12, 1955, newsrooms across the United States inked headlines onto newsprint: the Salk polio vaccine was "safe, effective, and potent." This was long-awaited news. Americans had limped through decades of fear, unaware of what caused polio or how to cure it, faced with the disease's terrifying, visible power to paralyze and kill, particularly children.

The announcement of the polio vaccine was celebrated with noisy jubilation: church bells rang, factory whistles sounded, people wept in the streets. Within weeks, mass inoculation began as the nation put its faith in a vaccine that would end polio.

Today, most of us are blissfully ignorant of child polio deaths, making it easier to believe that we have not personally benefited from the development of vaccines. According to Dr. Steven Pinker, cognitive psychologist and author of the bestselling book Enlightenment Now, we've become blasé to the gifts of science. "The default expectation is not that disease is part of life and science is a godsend, but that health is the default, and any disease is some outrage," he says.

We're now in the early stages of another vaccine rollout, one we hope will end the ravages of the COVID-19 pandemic. And yet, the Pfizer, Moderna, and AstraZeneca vaccines are met with far greater hesitancy and skepticism than the polio vaccine was in the 1950s.

In 2021, concerns over the speed and safety of vaccine development and technology plague this heroic global effort, but the roots of vaccine hesitancy run far deeper. Vaccine hesitancy has always existed in the U.S., even in the polio era, motivated in part by fears around "living virus" in a bad batch of vaccines produced by Cutter Laboratories in 1955. But in the last half century, we've witnessed seismic cultural shifts—loss of public trust, a rise in misinformation, heightened racial and socioeconomic inequality, and political polarization have all intensified vaccine-related fears and resistance. Making sense of how we got here may help us understand how to move forward.

The Rise and Fall of Public Trust

When the polio vaccine was released in 1955, "we were nearing an all-time high point in public trust," says Matt Baum, Harvard Kennedy School professor and lead author of several reports measuring public trust and vaccine confidence. Baum explains that the U.S. was experiencing a post-war boom following the Allied triumph in WWII, a popular Roosevelt presidency, and the rapid innovation that elevated the country to an international superpower.

The 1950s witnessed the emergence of nuclear technology, a space program, and unprecedented medical breakthroughs, adds Emily Brunson, Texas State University anthropologist and co-chair of the Working Group on Readying Populations for COVID-19 Vaccine. "Antibiotics were a game changer," she states. Where pneumonia had once kept people sick for a month, suddenly they had access to pills that accelerated recovery.

During this period, science seemed to hold all the answers; people embraced the idea that we could "come to know the world with an absolute truth," Brunson explains. Doctors were portrayed as unquestioned gods, so Americans were primed to trust experts who told them the polio vaccine was safe.

That blind acceptance eroded in the 1960s and 70s as people came to understand that science can be inherently political. "Getting to an absolute truth works out great for white men, but these things affect people socially in radically different ways," Brunson says. As the culture began questioning the white, patriarchal biases of science, doctors lost their god-like status and experts were pushed off their pedestals. This trend continues with greater intensity today, as President Trump has led a campaign against experts and waged a war on science that began long before the pandemic.

The Shift in How We Consume Information

In the 1950s, the media created an informational consensus. The fundamental ideas the public consumed about the state of the world were unified. "People argued about the best solutions, but didn't fundamentally disagree on the factual baseline," says Baum. Indeed, the messaging around the polio vaccine was centralized and consistent, led by President Roosevelt's successful March of Dimes crusade. People of lower socioeconomic status with limited access to this information were less likely to have confidence in the vaccine, but most people consumed media that assured them of the vaccine's safety and mobilized them to receive it.

Today, the information we consume is no longer centralized; in fact, just the opposite. "When you take that away, it's hard for people to know what to trust and what not to trust," Baum explains. We've witnessed an increase in polarization and in the technology that makes it easier to give people what they want to hear, reinforcing our human tendencies to vilify the other side and cling to our preexisting beliefs. When information is engineered to further an agenda, each choice and risk calculation made while navigating the COVID-19 pandemic is deeply politicized.

This polarization maps onto a rise in socioeconomic inequality and economic uncertainty. These factors, associated with a sense of lost control, prime people to embrace misinformation, explains Baum, especially when the situation is difficult to comprehend. "The beauty of conspiratorial thinking is that it provides answers to all these questions," he says. Today's insidious fragmentation of news media accelerates the circulation of mis- and disinformation, reaching more people faster, regardless of veracity or motivation. In the case of vaccines, this intensifies skepticism about their origins, their safety, and the motives of those who make and promote them.

Alongside the rise in polarization, Pinker says "the emotional tone of the news has gone downward since the 1940s, and journalists consider it a professional responsibility to cover the negative." Relentless focus on everything that goes wrong further erodes public trust and paints a picture of the world getting worse. "Life saved is not a news story," says Pinker, but perhaps it should be, he continues. "If people were more aware of how much better life was generally, they might be more receptive to improvements that will continue to make life better. These improvements don't happen by themselves."

The Future Depends on Vaccine Confidence

So far, the U.S. has been unable to mitigate the catastrophic effects of the pandemic through social distancing, testing, and contact tracing. President Trump has downplayed the effects and threat of the virus, censored experts and scientists, given up on containing the spread, and mobilized his base to protest masks. The Trump Administration failed to devise a national plan, so our national plan has defaulted to hoping for the "miracle" of a vaccine. And they are "something of a miracle," Pinker says, describing vaccines as "the most benevolent invention in the history of our species." In record-breaking time, three vaccines have arrived. But their impact will be weakened unless we achieve mass vaccination. As Brunson notes, "The technology isn't the fix; it's people taking the technology."

Significant challenges remain, including facilitating widespread access and supporting on-the-ground efforts to allay concerns and build trust with specific populations that have historic reasons for distrust, says Brunson. Baum predicts continuing delays, as well as deaths from unrelated causes that will nonetheless be blamed on the vaccine.

Still, there's every reason for hope. The new administration "has its eyes wide open to these challenges. These are the kind of problems that are amenable to policy solutions if we have the will," Baum says. He forecasts widespread vaccination by late summer and a bounce back from the economic damage, a "Good News Story" that will bolster vaccine acceptance in the future. And Pinker reminds us that science, medicine, and public health have greatly extended our lives in the last few decades, a trend that can only continue if we're willing to roll up our sleeves.

Chicken that is grown entirely in a laboratory, without harming a single bird, could be sold in supermarkets in the coming months. But critics say the doubts about lab-grown meat have not been appropriately explored.

Last November, when the U.S. Food and Drug Administration disclosed that chicken from a California firm called UPSIDE Foods did not raise safety concerns, it drily upended how humans have obtained animal protein for thousands of generations.

“The FDA is ready to work with additional firms developing cultured animal cell food and production processes to ensure their food is safe and lawful,” the agency said in a statement at the time.

Assuming UPSIDE obtains clearances from the U.S. Department of Agriculture, its chicken – grown entirely in a laboratory without harming a single bird – could be sold in supermarkets in the coming months.

“Ultimately, we want our products to be available everywhere meat is sold, including retail and food service channels,” a company spokesperson said. The upscale French restaurant Atelier Crenn in San Francisco will have UPSIDE chicken on its menu once it is approved, she added.

Known as lab-grown or cultured meat, a product such as UPSIDE’s is created using stem cells and other tissue obtained from a chicken, cow or other livestock. Those cells are then multiplied in a nutrient-dense environment, usually in conjunction with a “scaffold” of plant-based materials or gelatin to give them a familiar form, such as a chicken breast or a ribeye steak. A Dutch company called Mosa Meat claims it can produce 80,000 hamburgers derived from a cluster of tissue the size of a sesame seed.

That’s a far cry from when it took months of work to create the first lab-grown hamburger a decade ago. That minuscule patty – which did not contain any fat and was literally plucked from a Petri dish to go into a frying pan – cost about $325,000 to produce.

Just a decade later, an Israeli company called Future Meat said it can produce lab-grown meat for about $1.70 per pound. It plans to open a production facility in the U.S. sometime in 2023 and distribute its products under the brand name “Believer.”

Costs for production have sunk so low that researchers at Carnegie Mellon University in Pittsburgh expect to produce lab-grown Wagyu steak sometime in early 2024 to showcase the viability of growing high-end cuts of beef cheaply. The Carnegie Mellon team is producing its Wagyu using a consumer 3-D printer bought secondhand on eBay and modified to print the highly marbled flesh using a method developed by the university. The device costs $200 – about the same as a pound of Wagyu in the U.S. The initiative’s modest five-figure budget was successfully crowdfunded last year.

“The big cost is going to be the cells (which are being extracted from a cow somewhere in Pennsylvania), but otherwise printing doesn’t add much to the process,” said Rosalyn Abbott, a Carnegie Mellon assistant professor of bioengineering who is co-leader on the project. “But it adds value, unlike doing this with ground meat.”

Lab-Grown Meat’s Promise

Proponents of lab-grown meat say it will cut down on traditional agriculture, which has been a leading contributor to deforestation, water shortages and contaminated waterways from animal waste, as well as climate change.

An Oxford University study from 2011 concluded that lab-grown meat could have greenhouse gas emissions 96 percent lower than those of traditionally raised livestock. Moreover, proponents of lab-grown meat claim that the suffering of animals would decline dramatically, as they would no longer need to be warehoused and slaughtered. A recently opened 26-story high-rise in China dedicated to the raising and slaughtering of pigs illustrates the current plight of livestock in stark terms.

Scientists may even learn how to tweak lab-grown meat to make it more nutritious. Natural red meat is high in saturated fat and, if it’s eaten too often, can lead to chronic diseases. In lab versions, the saturated fat could be swapped for healthier omega-3 fatty acids.

But critics say the doubts about lab-grown meat and the possibility it could merge “Brave New World” with “The Jungle” and “Soylent Green” have not been appropriately explored.

A Slippery Slope?

Some academics who have studied the moral and ethical issues surrounding lab-grown meat believe it will have a tough path ahead gaining acceptance by consumers. Should it actually succeed in gaining acceptance, many ethical questions must be answered.

“People might be interested” in lab-grown meat, perhaps as a curiosity, said Carlos Alvaro, an associate professor of philosophy at the New York City College of Technology, part of the City University of New York. But the allure of traditionally sourced meat has been baked – or perhaps grilled – into people’s minds for so long that they may not want to make the switch. Plant-based meat provides a recent example of the uphill battle involved in changing old food habits, with Beyond Meat’s stock price dipping nearly 80 percent in 2022.

“From my research, I understand that the taste (of lab-grown meat) is not quite there,” Alvaro said, noting that the amino acids, sugars and other nutrients required to grow cultivated meat do not mimic what livestock are fed. He also observed that the cells multiplying as part of the process “really mimic cancer cells” in the way they grow, another off-putting thought for would-be consumers of the product.

Alvaro is also convinced the public will not buy into any argument that lab-grown meat is more environmentally friendly.

“If people care about the environment, they either try and consume considerably less meat and other animal products, or they go vegan or vegetarian,” he said. “But there are many studies showing that people don’t really care about the environment (to that extent). So I don’t know how you would convince people to do this because of the environment.”

Ben Bramble, a professor at Australian National University who previously held posts at Princeton and Trinity College in Ireland, takes a slightly different tack. He noted that “if lab-grown meat becomes cheaper, healthier, or tastier than regular meat, there will be a large market for it. If it becomes all of these things, it will dominate the market.”

However, Bramble has misgivings about that occurring. He believes a smooth transition from traditionally sourced meat to a lab-grown version would allow humans to gloss over the decades of animal cruelty perpetrated by large-scale agriculture, without fully reckoning with and learning from this injustice.

“My fear is that if we all switch over to lab-grown meat because it has become cheaper, healthier, or tastier than regular meat, we might never come to realize what we have done, and the terrible things we are capable of,” he said. “This would be a catastrophe.”

Bramble’s writings about cultured meat also raise some serious moral conundrums. If, for example, animal meat may be cultivated without killing animals, why not create products from human protein?

Actually, that’s already happened.

It occurred in 2019, when Orkan Telhan, a professor of fine arts at the University of Pennsylvania, collaborated with two scientists to create an art exhibit at the Philadelphia Museum of Art on the future of foodstuffs.

Although the exhibit included bioengineered bread and genetically modified salmon, it was an installation called “Ouroboros Steak” that drew the most attention. It consisted of pieces of human flesh grown in a lab from cultivated cells and expired blood products obtained from online sources.

The exhibit was presented as four tiny morsels of red meat – shaped in patterns suggesting an ouroboros, a dragon eating its own tail. They were placed in tiny individual saucers atop a larger plate and placemat with a calico pattern, suggesting an item to order in a diner. The artwork drew international headlines – as well as condemnation for Telhan’s vision.

Telhan’s artwork is intended to critique the overarching assumption that lab-grown meat will eventually replace more traditional production methods, as well as the lack of transparency surrounding many processed foodstuffs. “They think that this problem (from industrial-scale agriculture) is going to be solved by this new technology,” Telhan said. “I am critical (of) that perspective.”

Unlike Bramble, Telhan is not against lab-grown meat, so long as its producers are transparent about the sourcing of materials and its cultivation. But he believes that large-scale agricultural meat production – which dates back centuries – is not going to be replaced so quickly.

“We see this again and again with different industries, like algae-based fuels. A lot of companies were excited about this, and promoted it,” Telhan said. “And years later, we know these fuels work. But to be able to displace the oil industry, building the infrastructure to scale takes billions of dollars, and nobody has the patience or money to do it.”

Alvaro concurred on this point, adding that the case for lab-grown meat is already weakened because a large swath of consumers aren’t concerned about environmental degradation.

“They’re going to have to sell this big, but in order to convince people to do so, they have to convince them to eat this product instead of regular meat,” Alvaro said.

Hidden Tweaks?

Moreover, if lab-grown meat does obtain a significant market share, Telhan suggested companies may do things to the product – such as genetically modifying it to make it more profitable – and never notify consumers. That is a particular concern in the U.S., where regulations regarding such modifications are vastly more relaxed than in the European Union.

“I think that they have really good objectives, and they aspire to good objectives,” Telhan said. “But the system itself doesn't really allow for that much transparency.”

No matter what the future holds, sometime next year Carnegie Mellon is expected to hold a press conference announcing it has produced a cut of the world’s most expensive beef with the help of a modified piece of consumer electronics. It will likely take place at around the same time UPSIDE chicken will be available for purchase in supermarkets and restaurants, pending the USDA’s approvals.

Abbott, the Carnegie Mellon professor, suggested the future event will be both informative and celebratory.

“I think Carnegie Mellon would have someone potentially cook it for us,” she said. “Like have a really good chef in New York City do it.”

In this week's Friday Five, breathing this way may cut down on anxiety, a fasting regimen that could make you sick, this type of job makes men more virile, 3D printed hearts could save your life, and the role of metformin in preventing dementia.

The Friday Five covers five stories in research that you may have missed this week. There are plenty of controversies and troubling ethical issues in science – and we get into many of them in our online magazine – but this news roundup focuses on scientific creativity and progress to give you a therapeutic dose of inspiration headed into the weekend.

Here are the promising studies covered in this week's Friday Five, featuring interviews with Dr. David Spiegel, associate chair of psychiatry and behavioral sciences at Stanford, and Dr. Filip Swirski, professor of medicine and cardiology at the Icahn School of Medicine at Mount Sinai.

- Breathing this way cuts down on anxiety*

- Could your fasting regimen make you sick?

- This type of job makes men more virile

- 3D printed hearts could save your life

- Yet another potential benefit of metformin

* This video with Dr. Andrew Huberman of Stanford shows exactly how to do the breathing practice.