Researchers Behaving Badly: Known Frauds Are "the Tip of the Iceberg"

Disgraced stem cell researcher and celebrity surgeon Paolo Macchiarini.

Last week, the whistleblowers in the Paolo Macchiarini affair at Sweden's Karolinska Institutet went on the record here to detail the retaliation they suffered for trying to expose a star surgeon's appalling research misconduct.

The whistleblowers had discovered that in six published papers, Macchiarini falsified data, lied about the condition of patients and circumvented ethical approvals. As a result, multiple patients suffered and died. But Karolinska turned a blind eye for years.

Scientific fraud of the type committed by Macchiarini is rare, but studies suggest that it's on the rise. Just this week, for example, Retraction Watch and STAT together broke the news that a Harvard Medical School cardiologist and stem cell researcher, Piero Anversa, falsified data in a whopping 31 papers, which now have to be retracted. Anversa had claimed that he could regenerate heart muscle by injecting bone marrow cells into damaged hearts, a result that no one has been able to duplicate.

A 2009 study published in PLOS ONE found that about two percent of scientists admitted to committing fabrication, falsification or plagiarism in their work. That's a small number, but up to one third of scientists admit to committing "questionable research practices" that fall into a gray area between rigorous accuracy and outright fraud.

These dubious practices may include misrepresentations, research bias, and inaccurate interpretations of data. One common questionable research practice entails formulating a hypothesis after the research is done in order to claim a successful premise. Another highly questionable practice that can shape research is ghost-authoring by representatives of the pharmaceutical industry and other for-profit fields. Still another is gifting co-authorship to unqualified but powerful individuals who can advance one's career. Such practices can unfairly bolster a scientist's reputation and increase the likelihood of getting the work published.

The above percentages represent what scientists admit to doing themselves; when they evaluate the practices of their colleagues, the numbers jump dramatically. In a 2012 study published in the Journal of Research in Medical Sciences, researchers estimated that 14 percent of other scientists commit serious misconduct, while up to 72 percent engage in questionable practices. While these are only estimates, the problem is clearly not one of just a few bad apples.

In the PLOS study, Daniele Fanelli says that increasing evidence suggests the known frauds are "just the 'tip of the iceberg,' and that many cases are never discovered" because fraud is extremely hard to detect.

In addition, it's likely that most cases of scientific misconduct go unreported because of the high price of whistleblowing. Those in the Macchiarini case showed extraordinary persistence in their multi-year campaign to stop his deadly trachea implants, while suffering serious damage to their careers. Such heroic efforts to unmask fraud are probably rare.

To make matters worse, there are numerous players in the scientific world who may be complicit in either committing misconduct or covering it up. These include not only primary researchers but co-authors, institutional executives, journal editors, and industry leaders. Essentially everyone wants to be associated with big breakthroughs, and they may overlook scientifically shaky foundations when a major advance is claimed.

Another part of the problem is that it's rare for students in science and medicine to receive an education in ethics. And studies have shown that older, more experienced and possibly jaded researchers are more likely to fudge results than their younger, more idealistic colleagues.

So, given the steep price that individuals and institutions pay for scientific misconduct, what compels them to go down that road in the first place? According to the JRMS study, individuals face intense pressures to publish and to attract grant money in order to secure teaching positions at universities. Once they have acquired positions, the pressure is on to keep the grants and publishing credits coming in order to obtain tenure, be appointed to positions on boards, and recruit flocks of graduate students to assist in research. And not to be underestimated is the human ego.

Paolo Macchiarini is an especially vivid example of a scientist seeking not only fortune, but fame. He liberally (and falsely) claimed powerful politicians and celebrities, even the Pope, as patients or admirers. He may be an extreme example, but we live in an age of celebrity scientists who bring huge amounts of grant money and high prestige to the institutions that employ them.

The media plays a significant role in both glorifying stars and unmasking frauds. In the Macchiarini scandal, the media first lifted him up, as in NBC's laudatory documentary, "A Leap of Faith," which painted him as a kind of miracle-worker, and then brought him down, as in the January 2016 documentary, "The Experiments," which chronicled the agonizing death of one of his patients.

Institutions can also play a crucial role in scientific fraud by putting more emphasis on the number and frequency of papers published than on their quality. The whole course of a scientist's career is profoundly affected by something called the h-index. This is a number based on both how many papers a scientist has published and how many times those papers are cited by other researchers. Raising one's h-index becomes an overriding goal, sometimes eclipsing the kind of patient, time-consuming research that leads to true breakthroughs based on reliable results.
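For readers who want the metric spelled out: under the standard definition, a researcher has an h-index of h when h of their papers have each been cited at least h times. Here is a minimal Python sketch of that definition. The function name and the sample citation counts are illustrative only and do not describe any real researcher.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical citation counts for two researchers with five papers each.
steady = [10, 8, 5, 4, 3]
one_hit = [25, 8, 5, 3, 3]
print(h_index(steady), h_index(one_hit))
```

In this sketch the second researcher's single heavily cited paper does nothing for the index on its own, which hints at why the metric rewards a steady stream of cited publications.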

Universities also create a high-pressure environment that encourages scientists to cut corners. They, too, place a heavy emphasis on attracting large monetary grants and accruing fame and prestige. This can lead them, just as it led Karolinska, to protect a star scientist's sloppy or questionable research. According to Dr. Andrew Rosenberg, who is director of the Center for Science and Democracy at the U.S.-based Union of Concerned Scientists, "Karolinska defended its investment in an individual as opposed to the long-term health of the institution. People were dying, and they should have outsourced the investigation from the very beginning."

Having institutions investigate their own practices is a conflict of interest from the get-go, says Rosenberg.

Scientists, universities, and research institutions are also not immune to fads. "Hot" subjects attract grant money and confer prestige, incentivizing scientists to shift their research priorities in a direction that garners more grants. This can mean neglecting the scientist's true area of expertise and interests in favor of a subject that's more likely to attract grant money. In Macchiarini's case, he was allegedly at the forefront of the currently sexy field of regenerative medicine -- a field in which Karolinska was making a huge investment.

The relative scarcity of resources intensifies the already significant pressure on scientists. They may want to publish results rapidly, since they face many competitors for limited grant money, academic positions, students, and influence. The scarcity means that a great many researchers will fail while only a few succeed. Once again, the temptation may be to rush research and to show it in the most positive light possible, even if it means fudging or exaggerating results.

Intense competition can have a perverse effect on researchers, according to a 2007 study in the journal Science and Engineering Ethics. Not only does it place undue pressure on scientists to succeed, it frequently leads to the withholding of information from colleagues, which undermines a system in which new discoveries build on the previous work of others. Researchers may feel compelled to withhold their results because of the pressure to be the first to publish. The study's authors propose that more investment in basic research from governments could alleviate some of these competitive pressures.

Scientific journals, although they play a part in publishing flawed science, can't be expected to investigate cases of suspected fraud, says the German science blogger Leonid Schneider. Schneider's writings helped to expose the Macchiarini affair.

"They just basically wait for someone to retract problematic papers," he says.

He also notes that, while American scientists can go to the Office of Research Integrity to report misconduct, whistleblowers in Europe have no external authority to whom they can appeal to investigate cases of fraud.

"They have to go to their employer, who has a vested interest in covering up cases of misconduct," he says.

Science is increasingly international. Major studies can include collaborators from several different countries, and Schneider suggests there should be an international body, accessible to all researchers, that will investigate suspected fraud.

Ultimately, says Rosenberg, the scientific system must incorporate trust. "You trust co-authors when you write a paper, and peer reviewers at journals trust that scientists at research institutions like Karolinska are acting with integrity."

Without trust, the whole system falls apart. It's the trust of the public, an elusive asset once it has been betrayed, that science depends upon for its very existence. Scientific research is overwhelmingly financed by tax dollars, and the need for the goodwill of the public is more than an abstraction.

The Macchiarini affair raises a profound question of trust and responsibility: Should multiple co-authors be held responsible for a lead author's misconduct?

Karolinska apparently believes so. When the institution at last owned up to the scandal, it vindictively found Karl-Henrik Grinnemo, one of the whistleblowers, guilty of scientific misconduct as well. It also designated two other whistleblowers as "blameworthy" for their roles as co-authors of the papers on which Macchiarini was the lead author.

As a result, the whistleblowers' reputations and employment prospects have become collateral damage. Accusations of research misconduct can be a career killer. Research grants dry up, employment opportunities evaporate, publishing becomes next to impossible, and collaborators vanish into thin air.

Grinnemo contends that co-authors should only be responsible for their discrete contributions, not for the data supplied by others.

"Different aspects of a paper are highly specialized," he says, "and that's why you have multiple authors. You cannot go through every single bit of data because you don't understand all the parts of the article."

This is especially true in multidisciplinary, translational research, where there are sometimes 20 or more authors. "You have to trust co-authors, and if you find something wrong you have to notify all co-authors. But you couldn't go through everything or it would take years to publish an article," says Grinnemo.

Though the pressures facing scientists are very real, the problem of misconduct is not inevitable. Along with increased support from governments and industry, a change in academic culture that emphasizes quality over quantity of published studies could help encourage meritorious research.

But beyond that, trust will always play a role when numerous specialists unite to achieve a common goal: the accumulation of knowledge that will promote human health, wealth, and well-being.

[Correction: An earlier version of this story mistakenly credited The New York Times with breaking the news of the Anversa retractions, rather than Retraction Watch and STAT, which jointly published the exclusive on October 14th. The piece in the Times ran on October 15th. We regret the error.]

Bobby Brooke Herrera, the co-founder and CEO of e25Bio, demonstrates the company's rapid paper-strip test for detecting the coronavirus.

You're lying in bed late at night, the foggy swirl of the pandemic's eighth month just beginning to fall behind you, when you detect a slight tickle at the back of your throat.

You are suddenly fully awake, a jolt of panicked electricity racing through your body. Has COVID-19 come for you? In the U.S., answering this simple question is incredibly difficult.

Now, you might have to wait for hours in line in your car to get a test for $100, only to find out your result 10-14 days later -- much too late to matter in stopping an outbreak. Due to such obstacles, a recent report in JAMA Internal Medicine estimated that 9 out of 10 infections in the U.S. are being missed.

But what if you could use a paper strip in the privacy of your own home, like a pregnancy test, and find out if you are contagious in real time?

e25Bio, a small company in Cambridge, Mass., has already created such a test, and it has been sitting on a lab bench, inaccessible, since April. It is an antigen test, which looks for proteins on the outside of a virus, and can deliver results in about 15 minutes. e25 envisions its paper strips, again like an over-the-counter pregnancy test, as a public health screening tool rather than a definitive diagnostic test. People who see a positive result would be encouraged to then seek out a physician-administered, gold-standard diagnostic test: the more sensitive PCR.

Typically, hospitals and other health facilities rely on PCR tests to diagnose viruses. This test can detect small traces of genetic material that a virus leaves behind in the human body, which tells a clinician that the patient is either actively infected with or recently cleared that virus. PCR is quite sensitive, meaning that it can detect even very small amounts of a virus's genetic material.

But although PCR is the gold-standard for diagnostics, it's also the most labor-intensive way to test for a virus and takes a relatively long time to produce results. That's not a good match for stopping super-spreader events during an unchecked pandemic. PCR is also not great at identifying the infected people when they are most at risk of potentially transmitting the virus to others.

That's because the viral threshold at which PCR can detect a positive result is so low that it's actually too sensitive for the purposes of telling whether someone is contagious.

"The majority of time someone is PCR positive, those [genetic] remnants do not indicate transmissible virus," epidemiologist Michael Mina recently Tweeted. "They indicate remnants of a recently cleared infection."

To stop the chain of transmission for COVID-19, he says, "We need a more accurate test than PCR, that turns positive when someone is able to transmit."

In other words, we need a test that is better at detecting whether a person is contagious, as opposed to whether a small amount of virus can be detected in their nose or saliva. This kind of test is especially critical given the research showing that asymptomatic and pre-symptomatic people have high viral loads and are spreading the virus undetected.

The critical question for contagiousness testing, then, is how big a dose of SARS-CoV-2, the virus that causes COVID, does it take to infect most people? Researchers are still actively trying to answer this. As Angela Rasmussen, a coronavirus expert at Columbia University, told STAT: "We don't know the amount that is required to cause an infection, but it seems that it's probably not a really, really small amount, like measles."

Amesh Adalja, an infectious disease physician and a senior scholar at the Johns Hopkins University Center for Health Security, told LeapsMag: "It's still unclear what viral load is associated with contagiousness but it is biologically plausible that higher viral loads, in general, are associated with more efficient transmission especially in symptomatic individuals. In those without symptoms, however, the same relationship may not hold and this may be one of the reasons young children, despite their high viral loads, are not driving outbreaks."

Mina and colleagues estimate that widespread weekly use of cheap, rapid tests that are 100 times less sensitive than PCR tests would prevent outbreaks -- as long as the people who test positive self-isolate.

So why can't we buy e25Bio's test at a drugstore right now? Ironically, it's barred for the very reason that it's useful in the first place: Because it is not sensitive enough to satisfy the U.S. Food and Drug Administration, according to the company.

"We're ready to go," says Carlos-Henri Ferré, senior associate of operations and communications at e25. "We've applied to FDA, and now it's in their hands."

The problem, he said, is that the FDA is evaluating applications for antigen tests based on criteria for assessing diagnostics, like PCR, even when the tests serve a different purpose -- as a screening tool.

"Antigen tests work best when there's high viral loads," Ferré says. "They're catching people who are super spreaders, that are capable of continuing the spread of disease … FDA criteria is for diagnostics and not this."

FDA released guidance on July 29th -- 140 days into the pandemic -- recommending that at-home tests should perform with at least 80 percent sensitivity if ordered by prescription, and at least 90 percent sensitivity if purchased over the counter. "The danger of a false negative result is that it can contribute to the spread of COVID-19," according to an FDA spokesperson. "However, oversight of a health care professional who reviews the results, in combination with the patient's symptoms and uses their clinical judgment to recommend additional testing, if needed, among other things, can help mitigate some risks."

Crucially, the 90 percent sensitivity recommendation is judged upon comparison to PCR tests, meaning that if a PCR test is able to detect virus in 100 samples, the at-home antigen test would need to detect virus in at least 90 of those samples. Since antigen tests only detect high viral loads, frustrated critics like Mina say that such guidance is "unreasonable."

"The FDA at this moment is not understanding the true potential for wide-scale frequent testing. In some ways this is not their fault," Mina told LeapsMag. "The FDA does not have any remit to evaluate tests that fall outside of medical diagnostic testing. The proposal I have put forth is not about diagnostic testing (leave that for symptomatic cases reporting to their physician and getting PCR tests)....Daily rapid tests are not about diagnosing people and they are not about public health surveillance and they are not about passports to go to school, out to dinner or into the office. They are about reducing population-level transmission given a similar approach as vaccines."

A reasonable standard, he added, would be to follow the World Health Organization's Target Product Profiles, which are documents to help developers build desirable and minimally acceptable testing products. "A decent limit," Mina says, "is a 70% or 80% sensitivity (if they truly require sensitivity as a metric) to detect virus at Ct values less than 25. This coincides with detection of the most transmissible people, which is important."

(A Ct value is a type of measurement that corresponds inversely to the amount of viral load in a given sample. Researchers have found that Ct values of 13-17 indicate high viral load, whereas Ct values greater than 34 indicate a lack of infectious virus.)
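The inverse relationship follows from how PCR works: each cycle roughly doubles the target genetic material, so a sample that crosses the detection threshold at a later cycle (a higher Ct) started with less virus. The Python sketch below makes that arithmetic explicit. It assumes perfect doubling on every cycle, which real assays only approximate, and the function names are hypothetical. Ct values from different assays are also not directly comparable.

```python
import math


def fold_difference(ct_low, ct_high):
    """Approximate ratio of starting viral material between two samples,
    assuming the target doubles exactly once per PCR cycle (idealized)."""
    return 2.0 ** (ct_high - ct_low)


def cycles_for_fold(fold):
    """Number of cycles that corresponds to a given fold difference
    under the same idealized doubling assumption."""
    return math.log2(fold)


# A Ct 25 sample versus a Ct 35 sample: ten doublings apart.
print(fold_difference(25, 35))
# How many cycles separate two samples that differ 100-fold?
print(round(cycles_for_fold(100), 1))
```

Under this idealized assumption, a sample at Ct 25 holds about a thousand times more viral material than one at Ct 35, and a test that is 100 times less sensitive than PCR gives up between six and seven cycles of detection range.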

"We believe that population screening devices have an immediate place and use in helping beat the virus," says Ferré. "You can have a significant impact even with a test at 60% sensitivity if you are testing frequently."

When presented with criticism of its recommendations, the FDA indicated that it will not automatically deny any at-home test that fails to meet the 90 percent sensitivity guidance.

"FDA is always open to alternative proposals from developers, including strategies for serial testing with less sensitive tests," a spokesperson wrote in a statement. "For example, it is possible that overall sensitivity of the strategy could be considered cumulatively rather than based on one-time testing….In the case of a manufacturer with an at-home test that can only detect people with COVID-19 when they have a high viral load, we encourage them to talk with us so we can better understand their test, how they propose to use it, and the validation data they have collected to support that use."

However, the FDA's actions so far conflict with its stated openness. e25 ended up adding a step to the protocol in order to better meet FDA standards for sensitivity, but that extra step—sending samples to a laboratory for results—will undercut the test's ability to work as an at-home screening tool.

"We believe this should be an at-home test, but [if FDA approval comes through] the first rollout is to do this in laboratories, hospitals, and clinics," Ferré says.

According to the FDA, no test developers have approached them with a request for an emergency use authorization that proposes an alternate testing paradigm, such as serial testing, to mitigate test sensitivity below 80 percent.
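The idea of judging sensitivity cumulatively can be illustrated with simple arithmetic. The Python sketch below assumes that each test on an infected person is an independent trial with the same chance of a positive result. That is a deliberate simplification, not the FDA's or e25Bio's method: in reality, results depend on a viral load that rises and falls over the course of an infection. The function name is hypothetical.

```python
def cumulative_sensitivity(single_test_sensitivity, num_tests):
    """Chance that at least one of `num_tests` tests catches an infection,
    assuming independent tests with identical sensitivity (a simplification)."""
    if not 0.0 <= single_test_sensitivity <= 1.0:
        raise ValueError("sensitivity must be between 0 and 1")
    if num_tests < 0:
        raise ValueError("num_tests must be non-negative")
    # Probability that every single test misses, subtracted from 1.
    return 1.0 - (1.0 - single_test_sensitivity) ** num_tests


# The 60 percent figure is the one cited by Ferré; the test counts are illustrative.
for n in (1, 2, 3, 4):
    print(n, round(cumulative_sensitivity(0.60, n), 3))
```

Under these assumptions, a 60 percent sensitive test taken twice would catch about 84 percent of infections, and taken three times about 94 percent, which is the intuition behind frequent testing with a less sensitive tool.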

From a scientific perspective, antigen tests like e25Bio's are not the only horse in the race for a simple rapid test with potential for at-home use. CRISPR technology has long been touted as fertile ground for diagnostics, and in an eerily prescient interview with LeapsMag in November, CRISPR pioneer Feng Zhang spoke of its potential application as an at-home diagnostic for an infectious disease specifically.

"I think in the long run it will be great to see this for, say, at-home disease testing, for influenza and other sorts of important public health [concerns]," he said in the fall. "To be able to get a readout at home, people can potentially quarantine themselves rather than traveling to a hospital and then carrying the risk of spreading that disease to other people as they get to the clinic."

Zhang's company Sherlock Biosciences is now working on scaled-up manufacturing of a test to detect SARS-CoV-2. Mammoth Biosciences, which secured funding from the National Institutes of Health's Rapid Acceleration of Diagnostics program, is also working on a CRISPR diagnostic for SARS-CoV-2. Both would check the box for rapid testing, but so far not for at-home testing, as they would also require laboratory infrastructure to provide results.

If any at-home tests can clear the regulatory hurdles, they would also need to be manufactured on a large scale and be cheap enough to entice people to actually use them. In the world of at-home diagnostics, pregnancy tests have become the sole mainstream victor because they're simple to use, small to carry, easy to interpret, and cost about seven or eight dollars at any major retailer, like Target or Walmart. By comparison, the at-home COVID collection tests that don't even offer diagnostics -- you send away your sample to an external lab -- all cost over $100 to take just one time.

For the time being, the only available diagnostics for COVID require a lab or an expensive dedicated machine to process. This disconnect could prolong the world's worst health crisis in a century.

"Daily rapid tests have enormous potential to sever transmission chains and create herd effects similar to herd immunity," Mina says. "We all recognize that vaccines and infections can result in herd immunity when something around half of people are no longer susceptible.

"The same thing exists with these tests. These are the intervention to stop the virus. If half of people choose to use these tests every other day, then we can stop transmission faster than a vaccine can. The technology exists, the theory and mathematics back it up, the epidemiology is sound. There is no reason we are not approaching this as strongly as we would be approaching vaccines."

--Additional reporting by Julia Sklar

Kira Peikoff was the editor-in-chief of Leaps.org from 2017 to 2021. As a journalist, her work has appeared in The New York Times, Newsweek, Nautilus, Popular Mechanics, The New York Academy of Sciences, and other outlets. She is also the author of four suspense novels that explore controversial issues arising from scientific innovation: Living Proof, No Time to Die, Die Again Tomorrow, and Mother Knows Best. Peikoff holds a B.A. in Journalism from New York University and an M.S. in Bioethics from Columbia University. She lives in New Jersey with her husband and two young sons. Follow her on Twitter @KiraPeikoff.

Coronavirus Risk Calculators: What You Need to Know

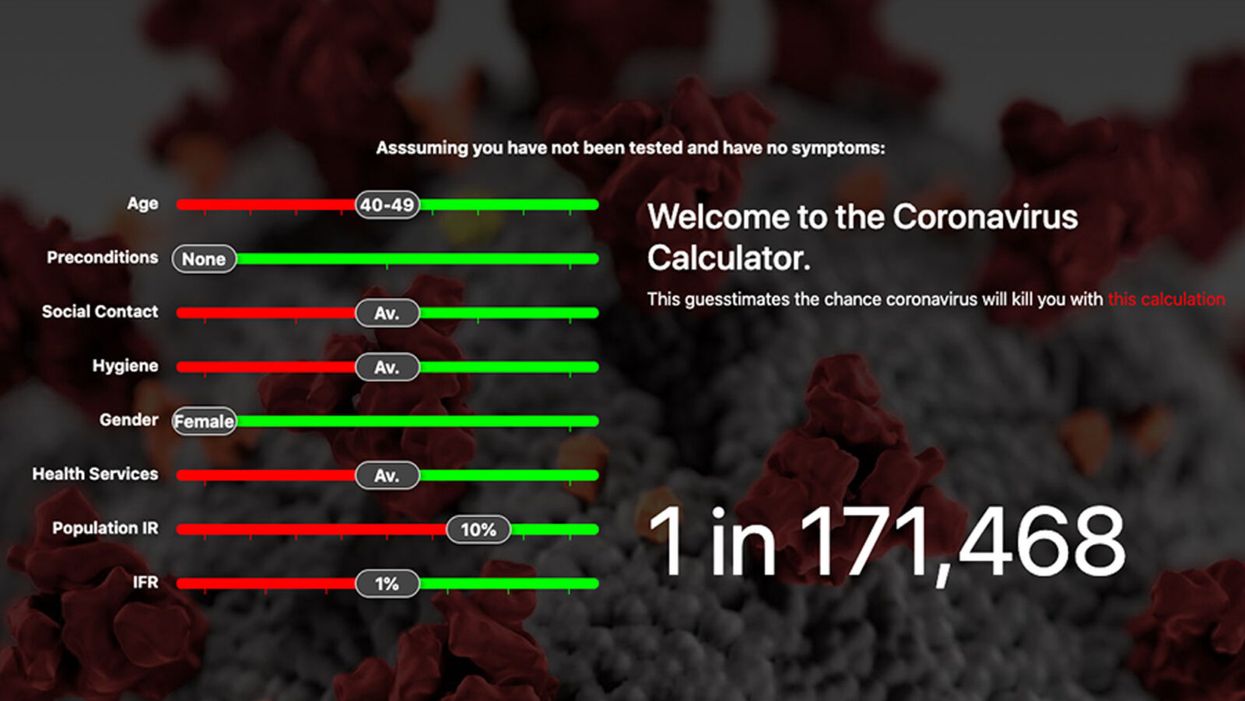

A screenshot of one coronavirus risk calculator.

People in my family seem to develop every ailment in the world, including feline distemper and Dutch elm disease, so I naturally put fingers to keyboard when I discovered that COVID-19 risk calculators now exist.

But the results -- based on my answers to questions -- are bewildering.

A British risk calculator developed by the Nexoid software company declared I have a 5 percent, or 1 in 20, chance of developing COVID-19 and less than 1 percent risk of dying if I get it. Um, great, I think? Meanwhile, 19 and Me, a risk calculator created by data scientists, says my risk of infection is 0.01 percent per week, or 1 in 10,000, and it gave me a risk score of 44 out of 100.

Confused? Join the club. But it's actually possible to interpret numbers like these and put them to use. Here are five tips about using coronavirus risk calculators:

1. Make Sure the Calculator Is Designed For You

Not every COVID-19 risk calculator is designed to be used by the general public. Cleveland Clinic's risk calculator, for example, is only a tool for medical professionals, not sick people or the "worried well," said Dr. Lara Jehi, Cleveland Clinic's chief research information officer.

Unfortunately, the risk calculator's web page fails to explicitly identify its target audience. But there are hints that it's not for laypeople, such as its references to "platelets" and "chlorides."

The 19 and Me and Nexoid risk calculators, in contrast, are both designed for use by everyone, as is a risk calculator developed by Emory University.

2. Take a Look at the Calculator's Privacy Policy

COVID-19 risk calculators ask for a lot of personal information. The Nexoid calculator, for example, wanted to know my age, weight, drug and alcohol history, pre-existing conditions, blood type and more. It even asked me about the prescription drugs I take.

It's wise to check the privacy policy and be cautious about providing an email address or other personal information. Nexoid's policy says it provides the information it gathers to researchers but it doesn't release IP addresses, which can reveal your location in certain circumstances.

John-Arne Skolbekken, a professor and risk specialist at Norwegian University of Science and Technology, entered his own data in the Nexoid calculator after being contacted by LeapsMag for comment. He noted that the calculator, among other things, asks for information about use of recreational drugs that could be illegal in some places. "I have given away some of my personal data to a company that I can hope will not misuse them," he said. "Let's hope they are trustworthy."

The 19 and Me calculator, by contrast, doesn't gather any data from users, said Cindy Hu, data scientist at Mathematica, which created it. "As soon as the window is closed, that data is gone and not captured."

The Emory University risk calculator, meanwhile, has a long privacy policy that states "the information we collect during your assessment will not be correlated with contact information if you provide it." However, it says personal information can be shared with third parties.

3. Keep an Eye on Time Horizons

Let's say a risk calculator says you have a 1 percent risk of infection. That's fairly low if we're talking about this year as a whole, but it's quite worrisome if the risk percentage refers to today and jumps by 1 percent each day going forward. That's why it's helpful to know exactly what the numbers mean in terms of time.

Unfortunately, this information isn't always readily available. You may have to dig around for it or contact a risk calculator's developers for more information. The 19 and Me calculator's risk percentages refer to this current week based on your behavior this week, Hu said. The Nexoid calculator, by contrast, has an "infinite timeline" that assumes no vaccine is developed, said Jonathon Grantham, the company's managing director. But your results will vary over time since the calculator's developers adjust it to reflect new data.
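To see why the time horizon matters so much, it helps to compound a small per-period risk over a longer stretch. The Python sketch below assumes the same independent risk in every period, which is a simplification of my own and not how any of these calculators is known to work. The function name is hypothetical.

```python
def risk_over_periods(per_period_risk, periods):
    """Chance of at least one infection across `periods` equal time periods,
    assuming a constant, independent risk in each period (a simplification)."""
    if not 0.0 <= per_period_risk <= 1.0:
        raise ValueError("per_period_risk must be between 0 and 1")
    # Probability of escaping infection in every period, subtracted from 1.
    return 1.0 - (1.0 - per_period_risk) ** periods


# A weekly risk of 0.01 percent (1 in 10,000), carried through a 52-week year.
print(round(risk_over_periods(0.0001, 52), 4))
# A daily risk of 1 percent, carried through a 365-day year.
print(round(risk_over_periods(0.01, 365), 3))
```

Under these assumptions, a weekly risk of 1 in 10,000 adds up to roughly half a percent over a year, while a daily risk of 1 percent makes infection within a year close to certain. The same "1 percent" can be reassuring or alarming depending on the clock attached to it.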

4. Focus on the Big Picture

The Nexoid calculator gave me numbers of 5 percent (getting COVID-19) and 99.309 percent (surviving it). It even provided betting odds for gambling types: The odds are in favor of me not getting infected (19-to-1) and not dying if I get infected (144-to-1).
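The betting odds appear to be the same percentages restated. The Python sketch below uses the standard conversion from a probability to odds against an event. Nexoid's own method is not described here, so treating its odds this way is an assumption, and the function name is hypothetical.

```python
def odds_against(probability):
    """Convert the probability of an event into odds against it,
    expressed as 'X-to-1' (standard conversion: (1 - p) / p)."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be greater than 0 and at most 1")
    return (1.0 - probability) / probability


infection_risk = 0.05            # 5 percent chance of getting COVID-19
death_risk = 1.0 - 0.99309       # 99.309 percent chance of surviving it

print(round(odds_against(infection_risk)))  # odds against infection
print(round(odds_against(death_risk)))      # odds against dying if infected
```

Run on the article's figures, the conversion gives 19-to-1 against infection and, after rounding, 144-to-1 against dying, matching the odds the calculator displayed.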

However, Grantham told me that these numbers "are not the whole story." Instead, he said, "it's best to look at your risk band. This will give you a more useful insight into your personal risk." Risk bands refer to a segmentation of people into five categories, from lowest to highest risk, according to how a person's result sits relative to the whole dataset.

The Nexoid calculator says I'm in the "lowest risk band" for getting COVID-19, and a "high risk band" for dying of it if I get it. That suggests I'd better stay in the lowest-risk category because my pre-existing risk factors could spell trouble for my survival if I get infected.

Michael J. Pencina, a professor and biostatistician at Duke University School of Medicine, agreed that focusing on your general risk level is better than focusing on numbers. When you use a risk calculator, he said, focus on this question: "How does your risk compare to the risk of an 'average' person?"

The 19 and Me calculator, meanwhile, put my risk at 44 out of 100. Hu said that a score of 50 represents the typical person's risk of developing serious consequences from another disease – the flu.

5. Remember to Take Action

Hu, who helped develop the 19 and Me risk calculator, said it's best to use it to "understand the relative impact of different behaviors." As she noted, the calculator is designed to allow users to plug in different answers about their behavior and immediately see how their risk levels change.

This information can help us figure out if we should change the way we approach the world by, say, washing our hands more or avoiding more personal encounters.

"Estimation of risk is only one part of prevention," Pencina said. "The other is risk factors and our ability to reduce them." In other words, odds, percentages and risk bands can be revealing, but it's what we do to change them that matters.