Scientists: Don’t Leave Religious Communities Out in the Cold

A star of David reflects the perspective of a Rabbi/M.D. on an important question about the future of society.

[Editor's Note: This essay is in response to our current Big Question series: "How can the religious and scientific communities work together to foster a culture that is equipped to face humanity's biggest challenges?"]

I humbly submit that the question should be rephrased: How can the religious and scientific communities NOT work together to face humanity's biggest challenges? The stakes are higher than ever before, and we simply cannot afford to go it alone.

The future of the world depends on our collaboration. I believe in evolution: the evolution of the relationship between science and religion. Science and religion have related to each other in ways ranging from peaceful coexistence to outright warfare. Today we have evolved and have begun to embrace the biological relationship of mutualism, due in part to advances in medicine and science.

Previous scientific discoveries and paradigm shifts precipitated varying theological responses. With Copernicus, we grappled with the relationship of the earth to the universe. With Darwin, we re-evaluated the relationship of man to the other creatures on earth. However, as theologically complex as these debates were, they had no practical relevance to the common man. Indeed, it was possible for people to live their entire lives happily without pondering these issues.

In the 21st century, the microscope is homing in further, with discoveries about the very nature and composition of the human being, both body and mind/soul. Thus, unlike in the past, the implications of the latest scientific advances directly affect the common man. The religious implications are no longer left to ivory-tower theologians. Regular people now confront practical religious questions that were previously unimaginable.

For example, in the field of infertility, if a married woman undergoes donor insemination, is she considered an adulteress? If a woman of one faith gestates the child of another faith, to whose faith does the child belong? If your heart is failing, can you avail yourself of stem cells derived from human embryos, or would you be considered an accomplice to murder? Would it be preferable to use artificially derived stem cells if they are available?

The implications of our current debates are profound, and profoundly personal. Science is the great equalizer. Every living being can potentially benefit from medical advances. We are all consumers of the scientific advances, irrespective of race or religion. As such, we all deserve a say in their development.

With gene editing, uterus transplants, head transplants, artificial reproductive seed, and animal-human genetic combinations as daily headlines, we have myriad ethical dilemmas to ponder. What limits should we set for the uses of different technologies? How should they be financed? We must even confront the very definition of what it means to be human. A human could receive multiple artificial transplants, 3D printed organs, genetic derivatives, or organs grown in animals. When does a person become another person or lose his identity? Will a being produced entirely from synthetic DNA be human?

In the Middle Ages, it was possible for one person to master all of the known science, and sometimes religion as well, as the great Maimonides did. In the pre-modern era, discoveries were almost always attributed to one individual: Jenner, Lister, Koch, Pasteur, and so on. Today, it is impossible for any one human being to master medicine, let alone ethics, religion, and other fields. Advances are usually made not by one person but through collaboration, often involving hundreds, if not thousands, of people across the globe. We cite journal articles, not individuals. Furthermore, the magnitude and speed of development are staggering, and with artificial intelligence added to the mix, they will continue to grow exponentially.

If the development of the science is collaborative, surely the contemplation of its ethical/religious applications should likewise be. The issues are so profound that we need all genes on deck. The religious community should have a prominent seat at the table. There is great wisdom in the religious traditions that can inform contemporary discussions. In addition, the religious communities are significant consumers of, not to mention contributors to, the medical technology.

An ongoing dialogue between the scientific and religious communities should be an institutionalized endeavor, not a sporadic event reacting to a particular discovery. The National Institutes of Health or other national organizations could provide an online newsletter for clergy summarizing the latest developments and their potential applications. An annual meeting of scientists and religious leaders could provide a forum for scientists to appreciate the religious ramifications of their research (which, in some cases, may be none) and for clergy to appreciate the rapidly developing fields of science and their implications for congregants. Theological seminaries must include basic scientific literacy in their curricula.

How do we create a "culture"? Microbiological cultures take time and require the proper medium for maximal growth. If one of the variables is altered, the culture can be affected. To foster a culture of continued successful collaboration between scientists and religious communities, we likewise need the proper medium of mutual respect and admiration, despite healthy disagreement.

The only way we can navigate these uncharted waters is through constant, deep, and meaningful collaboration every step of the way. By cultivating a mutualistic relationship, we can inform, caution, and safeguard each other to maximize the benefits of emerging technologies.

[Ed. Note: Don't miss the other perspectives in this Big Question series, from a science scholar and a Reverend/molecular geneticist.]

Thanks to safety precautions from the COVID-19 pandemic, a strain of influenza has been completely eliminated.

If you were one of the millions who masked up, washed your hands thoroughly and socially distanced, pat yourself on the back—you may have helped change the course of human history.

Scientists say that thanks to these safety precautions, which were introduced in early 2020 to stop transmission of the novel coronavirus that causes COVID-19, a strain of influenza has been completely eliminated. This marks the first time in human history that a virus has been wiped out through non-pharmaceutical interventions, such as masking and social distancing, rather than through vaccines.

The flu shot, explained

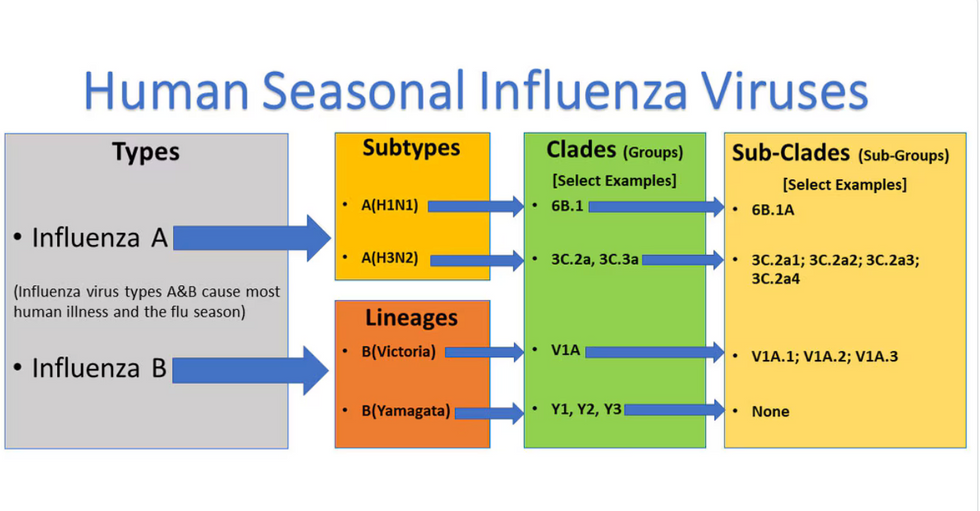

Influenza viruses type A and B are responsible for the majority of human illnesses and the flu season.

Centers for Disease Control

For more than a decade, flu shots have protected against two types of the influenza virus: type A and type B. While there are four types of influenza in existence (A, B, C, and D), only types A, B, and C are known to infect humans, and only A and B cause the seasonal epidemics we call flu season (only type A is known to cause pandemics). In other words, if you catch the flu during flu season, you’re most likely sick with type A or B.

Most flu shots contain inactivated (killed) influenza virus. These inactivated viruses can’t cause illness in humans, but when given as a vaccine, they teach a person’s immune system to recognize and fight those viruses when it encounters them in the wild.

Each spring, a panel of experts recommends to the US Food and Drug Administration which strains of each flu type to include in that year’s flu vaccine, based on what surveillance data shows is circulating and what the experts believe is likely to cause the most illness during the upcoming flu season. For the past decade, Americans have had access to vaccines that protect against two strains of influenza A and two lineages of influenza B, known as the Victoria lineage and the Yamagata lineage. This year, however, the seasonal flu shot won’t include the Yamagata lineage, because it is no longer circulating among humans.

How Yamagata disappeared

Flu surveillance data from the Global Initiative on Sharing All Influenza Data (GISAID) shows that the Yamagata lineage of flu type B has not been sequenced since April 2020.

Nature

Experts believe that the Yamagata lineage was already in decline before the pandemic, likely because it was naturally less capable of infecting large numbers of people than the other strains. When the COVID-19 pandemic hit, the resulting safety precautions, such as social distancing, isolating, hand-washing, and masking, were enough to drive the lineage to extinction.

Because the Yamagata lineage has not been detected since 2020, the FDA elected to remove it from the seasonal flu vaccine. This marks the first time since 2012 that the annual flu shot is trivalent (three-component) rather than quadrivalent (four-component).

Should I still get the flu shot?

The flu shot will protect against fewer strains this year—but that doesn’t mean we should skip it. Influenza places a substantial health burden on the United States every year, responsible for hundreds of thousands of hospitalizations and tens of thousands of deaths. The flu shot has been shown to prevent millions of illnesses each year (more than six million during the 2022-2023 season). And while it’s still possible to catch the flu after getting the flu shot, studies show that people are far less likely to be hospitalized or die when they’re vaccinated.

Dropping the Yamagata lineage from the seasonal vaccine may have another unexpected benefit: it could speed up flu vaccine production and let manufacturers make more doses, helping countries that face flu vaccine shortages and potentially saving millions more lives.

After his grandmother’s dementia diagnosis, one man invented a snack to keep her healthy and hydrated.

Founder Lewis Hornby and his grandmother Pat, sampling Jelly Drops—an edible gummy containing water and life-saving electrolytes.

On a visit to his grandmother’s nursing home in 2016, college student Lewis Hornby made a shocking discovery: dehydration is a common (and dangerous) problem among seniors, especially those who have been diagnosed with dementia.

Hornby’s grandmother, Pat, had increasing difficulty keeping up her water intake as she got older, a common issue among seniors. As we age, our body composition changes and we naturally hold less water than younger adults or children, so it’s easier to become dehydrated quickly if those fluids aren’t replenished. Our sense of thirst also diminishes with age, so our bodies are less reliable at telling us when we need to rehydrate. Together, these changes create a perfect storm for dehydration. In Pat’s case, the dehydration was so severe that she nearly died.

When Hornby visited his grandmother at her nursing home afterward, he learned that dehydration especially affects people with dementia, who often don’t feel thirst cues at all or may not recognize how to use cups correctly. But while dementia patients often forget to drink water, Hornby noticed that they had less trouble remembering to eat, particularly candy.

Hornby set out to create a solution for elderly people who struggled to keep up their fluid intake. He spent the next eighteen months researching and designing it and securing funding for the project. In 2019, Hornby won a sizable grant from the Alzheimer’s Society, a UK-based care and research charity for people with dementia and their caregivers. Together, through the charity’s Accelerator Program, they created a bite-sized, sugar-free, edible jelly drop that looked and tasted like candy. The candy, called Jelly Drops, contained 95% water plus electrolytes, important minerals that are often lost during dehydration. The final product launched in 2020 and was an immediate success. The drops provided extra hydration to elderly people and helped keep dementia patients safe, since dehydration commonly leads to confusion, hospitalization, and sometimes even death.

Not only did Jelly Drops quickly become a favorite snack among dementia patients in the UK, but they also provided an extra boost of hydration in hospitals during the pandemic. In NHS coronavirus wards, patients infected with the virus were regularly given Jelly Drops to keep their fluid levels up, and staff members snacked on them as well, since long shifts in the personal protective equipment (PPE) they were required to wear often left them parched.

In April 2022, Jelly Drops launched in the United States. The company continues to donate 1% of its profits to help fund Alzheimer’s research.