A space elevator would be cheaper and cleaner than using rockets

Story by Big Think

When people first started exploring space in the 1960s, it cost upwards of $80,000 (adjusted for inflation) to put a single pound of payload into low Earth orbit (LEO).

A major reason for this high cost was the need to build a new, expensive rocket for every launch. That really started to change when SpaceX began making cheap, reusable rockets, and today, the company is ferrying customer payloads to LEO at a price of just $1,300 per pound.

This is making space accessible to scientists, startups, and tourists who never could have afforded it previously, but the cheapest way to reach orbit might not be a rocket at all — it could be an elevator.

The space elevator

The seeds for a space elevator were first planted in 1895 by Russian scientist Konstantin Tsiolkovsky, who, after visiting the 1,000-foot (305 m) Eiffel Tower, published a paper theorizing about the construction of a structure 22,000 miles (35,400 km) high.

This would provide access to geostationary orbit, an altitude where objects appear to remain fixed above Earth’s surface, but Tsiolkovsky conceded that no material could support the weight of such a tower.
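The altitude of geostationary orbit can be checked with a short calculation. The sketch below is illustrative and not from the article: it assumes a circular orbit whose period equals one sidereal day, uses standard published values for Earth's gravitational parameter and equatorial radius, and all function and constant names are made up for this example. Under those assumptions the result is roughly 35,800 km, close to the rounded 22,000-mile figure quoted above.

```python
import math

# Standard reference values (assumptions for this sketch, not from the article).
GM_EARTH_M3_S2 = 3.986004418e14   # Earth's gravitational parameter
SIDEREAL_DAY_S = 86164.0905       # one rotation of Earth relative to the stars
EARTH_RADIUS_M = 6_378_137.0      # equatorial radius
METERS_PER_MILE = 1609.344


def geostationary_altitude_m(gm=GM_EARTH_M3_S2,
                             period_s=SIDEREAL_DAY_S,
                             planet_radius_m=EARTH_RADIUS_M):
    """Altitude above the equator of a circular orbit with the given period.

    Uses Kepler's third law for a circular orbit: r^3 = GM * T^2 / (4 * pi^2).
    """
    orbit_radius_m = (gm * period_s ** 2 / (4 * math.pi ** 2)) ** (1 / 3)
    return orbit_radius_m - planet_radius_m


if __name__ == "__main__":
    altitude_m = geostationary_altitude_m()
    print(f"{altitude_m / 1000:,.0f} km")
    print(f"{altitude_m / METERS_PER_MILE:,.0f} miles")
```

The same function works for any orbital period, so it can also show how much lower a satellite must fly to circle Earth faster than once per day.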

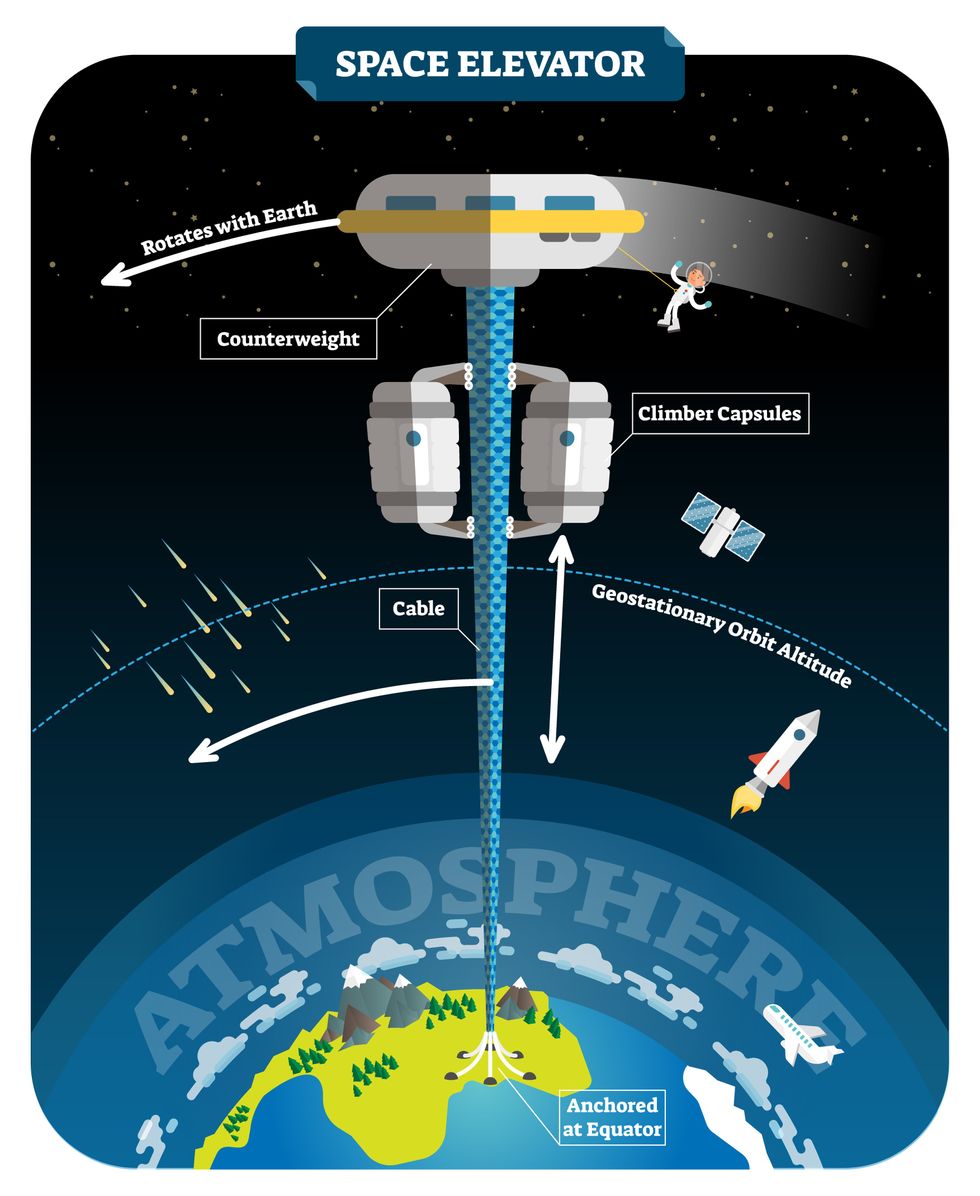

In 1959, soon after Sputnik, Russian engineer Yuri N. Artsutanov proposed a way around this issue: instead of building a space elevator from the ground up, start at the top. More specifically, he suggested placing a satellite in geostationary orbit and dropping a tether from it down to Earth’s equator. As the tether descended, the satellite would ascend. Once attached to Earth’s surface, the tether would be kept taut, thanks to a combination of gravitational and centrifugal forces.
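To see why the tether stays taut, it helps to compare gravity with the centrifugal effect at different heights along it. The following is a minimal sketch, not Artsutanov's own analysis: it assumes the whole tether rotates rigidly with Earth once per sidereal day, works in the rotating frame, ignores the tether's own mass, and uses hypothetical function names. Below geostationary height the net pull is toward Earth, at geostationary height it cancels, and above it the net pull is outward, which is what lets a counterweight beyond that point hold the line in tension.

```python
import math

# Standard reference values (assumptions for this sketch, not from the article).
GM_EARTH_M3_S2 = 3.986004418e14   # Earth's gravitational parameter
SIDEREAL_DAY_S = 86164.0905       # Earth's rotation period
EARTH_RADIUS_M = 6_378_137.0      # equatorial radius

OMEGA_RAD_S = 2 * math.pi / SIDEREAL_DAY_S  # Earth's angular speed


def geostationary_radius_m():
    """Distance from Earth's center at which gravity and centrifugal effect balance."""
    return (GM_EARTH_M3_S2 / OMEGA_RAD_S ** 2) ** (1 / 3)


def net_outward_acceleration(radius_m):
    """Net acceleration (m/s^2) on a point of the tether, in the rotating frame.

    Positive means pulled away from Earth, negative means pulled toward it.
    """
    centrifugal = OMEGA_RAD_S ** 2 * radius_m
    gravity = GM_EARTH_M3_S2 / radius_m ** 2
    return centrifugal - gravity


if __name__ == "__main__":
    samples = [
        ("Earth's surface", EARTH_RADIUS_M),
        ("geostationary height", geostationary_radius_m()),
        ("100,000 km from Earth's center", 1.0e8),
    ]
    for label, radius in samples:
        print(f"{label}: {net_outward_acceleration(radius):+.3f} m/s^2")
```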

We could then send electrically powered “climber” vehicles up and down the tether to deliver payloads to any Earth orbit. According to physicist Bradley Edwards, who researched the concept for NASA about 20 years ago, it’d cost $10 billion and take 15 years to build a space elevator, but once operational, the cost of sending a payload to any Earth orbit could be as low as $100 per pound.

“Once you reduce the cost to almost a Fed-Ex kind of level, it opens the doors to lots of people, lots of countries, and lots of companies to get involved in space,” Edwards told Space.com in 2005.
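A rough break-even calculation puts these figures side by side. This is only a back-of-the-envelope sketch using the numbers quoted above ($10 billion to build, $100 per pound by elevator, $1,300 per pound by rocket to LEO). It assumes no operating, maintenance, or financing costs, it does not adjust Edwards' roughly 20-year-old estimates for inflation, and the function name is made up for illustration.

```python
# Figures quoted in the article; everything else here is an assumption of the sketch.
ELEVATOR_BUILD_COST_USD = 10e9          # Edwards' construction estimate
ELEVATOR_PRICE_USD_PER_LB = 100.0       # Edwards' best-case price per pound
ROCKET_PRICE_USD_PER_LB = 1300.0        # current reusable-rocket price to LEO


def break_even_payload_lb(build_cost_usd, rocket_price, elevator_price):
    """Pounds of payload needed before per-pound savings repay the build cost."""
    saving_per_lb = rocket_price - elevator_price
    if saving_per_lb <= 0:
        raise ValueError("the elevator must be cheaper per pound to break even")
    return build_cost_usd / saving_per_lb


if __name__ == "__main__":
    pounds = break_even_payload_lb(
        ELEVATOR_BUILD_COST_USD,
        ROCKET_PRICE_USD_PER_LB,
        ELEVATOR_PRICE_USD_PER_LB,
    )
    # About 8.3 million pounds under these simplified assumptions.
    print(f"Break-even payload: {pounds:,.0f} lb")
```

If rocket prices keep falling, the saving per pound shrinks and the break-even payload grows, which is the economic risk the article returns to later.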

In addition to the economic advantages, a space elevator would also be cleaner than using rockets — there’d be no burning of fuel, no harmful greenhouse emissions — and the new transport system wouldn’t contribute to the problem of space junk to the same degree that expendable rockets do.

So, why don’t we have one yet?

Tether troubles

Edwards wrote in his report for NASA that all of the technology needed to build a space elevator already existed except the material needed to build the tether, which needs to be light but also strong enough to withstand all the huge forces acting upon it.

The good news, according to the report, was that the perfect material — ultra-strong, ultra-tiny “nanotubes” of carbon — would be available in just two years.

“[S]teel is not strong enough, neither is Kevlar, carbon fiber, spider silk, or any other material other than carbon nanotubes,” wrote Edwards. “Fortunately for us, carbon nanotube research is extremely hot right now, and it is progressing quickly to commercial production.”

Unfortunately, he misjudged how hard it would be to synthesize carbon nanotubes: to date, no one has been able to grow one longer than 21 inches (53 cm).

Further research into the material revealed that it tends to fray under extreme stress, too, meaning even if we could manufacture carbon nanotubes at the lengths needed, they’d be at risk of snapping, not only destroying the space elevator, but threatening lives on Earth.

Looking ahead

Carbon nanotubes might have been the early frontrunner as the tether material for space elevators, but there are other options, including graphene, an essentially two-dimensional form of carbon that is already easier to scale up than nanotubes (though still not easy).

Contrary to Edwards’ report, Johns Hopkins University researchers Sean Sun and Dan Popescu say Kevlar fibers could work — we would just need to constantly repair the tether, the same way the human body constantly repairs its tendons.

“Using sensors and artificially intelligent software, it would be possible to model the whole tether mathematically so as to predict when, where, and how the fibers would break,” the researchers wrote in Aeon in 2018.

“When they did, speedy robotic climbers patrolling up and down the tether would replace them, adjusting the rate of maintenance and repair as needed, mimicking the sensitivity of biological processes,” they continued.

Astronomers from the University of Cambridge and Columbia University also think Kevlar could work for a space elevator, if we built it from the moon rather than Earth.

They call their concept the Spaceline, and the idea is that a tether attached to the moon’s surface could extend toward Earth’s geostationary orbit, held taut by the pull of our planet’s gravity. We could then use rockets to deliver payloads — and potentially people — to solar-powered climber robots positioned at the end of this 200,000+ mile long tether. The bots could then travel up the line to the moon’s surface.

This wouldn’t eliminate the need for rockets to get into Earth’s orbit, but it would be a cheaper way to get to the moon. The forces acting on a lunar space elevator wouldn’t be as strong as those acting on one extending from Earth’s surface, either, according to the researchers, opening up more options for tether materials.

“[T]he necessary strength of the material is much lower than an Earth-based elevator — and thus it could be built from fibers that are already mass-produced … and relatively affordable,” they wrote in a paper shared on the preprint server arXiv.

Electrically powered climber capsules could go up and down the tether to deliver payloads to any Earth orbit. (Image: Adobe Stock)

Some Chinese researchers, meanwhile, aren’t giving up on the idea of using carbon nanotubes for a space elevator — in 2018, a team from Tsinghua University revealed that they’d developed nanotubes that they say are strong enough for a tether.

The researchers are still working on the issue of scaling up production, but in 2021, state-owned news outlet Xinhua released a video depicting an in-development concept, called “Sky Ladder,” that would consist of space elevators above Earth and the moon.

After riding up the Earth-based space elevator, a capsule would fly to a space station attached to the tether of the moon-based one. If the project could be pulled off — a huge if — China predicts Sky Ladder could cut the cost of sending people and goods to the moon by 96 percent.

The bottom line

In the more than 120 years since Tsiolkovsky looked at the Eiffel Tower and thought way bigger, tremendous progress has been made developing materials with the properties needed for a space elevator. At this point, it seems likely we could one day have a material that can be manufactured at the scale needed for a tether, but by the time that happens, the need for a space elevator may have evaporated.

Several aerospace companies are making progress with their own reusable rockets, and as those join the market with SpaceX, competition could cause launch prices to fall further.

California startup SpinLaunch, meanwhile, is developing a massive centrifuge to fling payloads into space, where much smaller rockets can propel them into orbit. If the company succeeds (another one of those big ifs), it says the system would slash the amount of fuel needed to reach orbit by 70 percent.

Even if SpinLaunch doesn’t get off the ground, several groups are developing environmentally friendly rocket fuels that produce far fewer (or no) harmful emissions. More work is needed to efficiently scale up their production, but overcoming that hurdle will likely be far easier than building a 22,000-mile (35,400-km) elevator to space.

This article originally appeared on Big Think, home of the brightest minds and biggest ideas of all time.

As Our AI Systems Get Better, So Must We

In order to build the future we want, we must also become ever better humans, explains the futurist Jamie Metzl in this opinion essay.

As the power and capability of our AI systems increase by the day, the essential question we now face is what constitutes peak human. If we stay where we are while the AI systems we are unleashing continually get better, they will meet and then exceed our capabilities in an ever-growing number of domains. But while some technology visionaries like Elon Musk call for us to slow down the development of AI systems to buy time, this approach alone will simply not work in our hyper-competitive world, particularly when the potential benefits of AI are so great and our frameworks for global governance are so weak. In order to build the future we want, we must also become ever better humans.

The list of activities we once saw as uniquely human where AIs have now surpassed us is long and growing. First, AI systems could beat our best chess players, then our best Go players, then our best champions of multi-player poker. They can see patterns far better than we can, generate medical and other hypotheses most human specialists miss, predict and map out new cellular structures, and even generate beautiful, and, yes, creative, art.

A recent paper by Microsoft researchers analyzing the significant leap in capabilities in OpenAI’s latest AI model, GPT-4, asserted that the algorithm can “solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting.” Calling this functionality “strikingly close to human-level performance,” the authors conclude it “could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system.”

The concept of AGI has been around for decades. In its common use, the term suggests a time when individual machines can do many different things at a human level, not just one thing like playing Go or analyzing radiological images. Debating when AGI might arrive, a favorite pastime of computer scientists for years, has now become outdated.

We already have AI algorithms and chatbots that can do lots of different things. Based on the generalist definition, in other words, AGI is essentially already here.

Unfettered by the evolved capacity and storage constraints of our brains, AI algorithms can access nearly all of the digitized cultural inheritance of humanity since the dawn of recorded history and have increasing access to growing pools of digitized biological data from across the spectrum of life.

With these ever-larger datasets, rapidly increasing computing and memory power, and new and better algorithms, our AI systems will keep getting better faster than most of us can today imagine. These capabilities have the potential to help us radically improve our healthcare, agriculture, and manufacturing, make our economies more productive and our development more sustainable, and do many important things better.

Soon, they will learn how to write their own code. Like human children, in other words, AI systems will grow up. But even that doesn’t mean our human goose is cooked.

Just like dolphins and dogs, these alternate forms of intelligence will be uniquely theirs, not a lesser or greater version of ours. There are lots of things AI systems can't do and will never be able to do because our AI algorithms, for better and for worse, will never be human. Our embodied human intelligence is its own thing.

Our human intelligence is uniquely ours based on the capacities we have developed in our 3.8-billion-year journey from single cell organisms to us. Our brains and bodies represent continuous adaptations on earlier models, which is why our skeletal systems look like those of lizards and our brains like most other mammals with some extra cerebral cortex mixed in. Human intelligence isn’t just some type of disembodied function but the inextricable manifestation of our evolved physical reality. It includes our sensory analytical skills and all of our animal instincts, intuitions, drives, and perceptions. Disembodied machine intelligence is something different than what we have evolved and possess.

Because of this, some linguists including Noam Chomsky have recently argued that AI systems will never be intelligent as long as they are just manipulating symbols and mathematical tokens without any inherent understanding. Nothing could be further from the truth. Anyone interacting with even first-generation AI chatbots quickly realizes that while these systems are far from perfect or omniscient and can sometimes be stupendously oblivious, they are surprisingly smart and versatile and will get more so… forever. We have little idea even how our own minds work, so judging AI systems based on their output is relatively close to how we evaluate ourselves.

Anyone not awed by the potential of these AI systems is missing the point. AI’s newfound capacities demand that we work urgently to establish norms, standards, and regulations at all levels from local to global to manage the very real risks. Pausing our development of AI systems now doesn’t make sense, however, even if it were possible, because we have no sufficient ways of uniformly enacting such a pause, no plan for how we would use the time, and no common framework for addressing global collective challenges like this.

But if all we feel is a passive awe for these new capabilities, we will also be missing the point.

Human evolution, biology, and cultural history are not just some kind of accidental legacy, disability, or parlor trick, but our inherent superpower. Our ancestors outcompeted rivals for billions of years to make us so well suited to the world we inhabit and helped build. Our social organization at scale has made it possible for us to forge civilizations of immense complexity, engineer biology and novel intelligence, and extend our reach to the stars. Our messy, embodied, intuitive, social human intelligence is roughly mimicable by AI systems but, by definition, never fully replicable by them.

Once we recognize that both AI systems and humans have unique superpowers, the essential question becomes what each of us can do better than the other and what humans and AIs can best do in active collaboration. We still don't know. The future of our species will depend upon our ability to safely, dynamically, and continually figure that out.

As we do, we'll learn that many of our ideas and actions are made up of parts, some of which will prove essentially human and some of which can be better achieved by AI systems. Those in every walk of work and life who most successfully identify the optimal contributions of humans, AIs, and the two together, and who build systems and workflows empowering humans to do human things, machines to do machine things, and humans and machines to work together in ways maximizing the respective strengths of each, will be the champions of the 21st century across all fields.

The dawn of the age of machine intelligence is upon us. It’s a quantum leap equivalent to the domestication of plants and animals, industrialization, electrification, and computing. Each of these revolutions forced us to rethink what it means to be human, how we live, and how we organize ourselves. The AI revolution will happen more suddenly than these earlier transformations but will follow the same general trajectory. Now is the time to aggressively prepare for what is fast heading our way, including by active public engagement, governance, and regulation.

AI systems will not replace us, but, like these earlier technology-driven revolutions, they will force us to become different humans as we co-evolve with our technology. We will never reach peak human in our ongoing evolutionary journey, but we’ve got to manage this transition wisely to build the type of future we’d like to inhabit.

Alongside our ascending AIs, we humans still have a lot of climbing to do.

Story by Big Think

We live in strange times, when the technology we depend on the most is also that which we fear the most. We celebrate cutting-edge achievements even as we recoil in fear at how they could be used to hurt us. From genetic engineering and AI to nuclear technology and nanobots, the list of awe-inspiring, fast-developing technologies is long.

However, this fear of the machine is not as new as it may seem. Technology has a longstanding alliance with power and the state. The dark side of human history can be told as a series of wars whose victors are often those with the most advanced technology. (There are exceptions, of course.) Science, and its technological offspring, follows the money.

This fear of the machine seems to be misplaced. The machine has no intent: only its maker does. The fear of the machine is, in essence, the fear we have of each other — of what we are capable of doing to one another.

How AI changes things

Sure, you would reply, but AI changes everything. With artificial intelligence, the machine itself will develop some sort of autonomy, however ill-defined. It will have a will of its own. And this will, if it reflects anything that seems human, will not be benevolent. With AI, the claim goes, the machine will somehow know what it must do to get rid of us. It will threaten us as a species.

Well, this fear is also not new. Mary Shelley wrote Frankenstein in 1818 to warn us of what science could do if it served the wrong calling. In the case of her novel, Dr. Frankenstein’s call was to win the battle against death — to reverse the course of nature. Granted, any cure of an illness interferes with the normal workings of nature, yet we are justly proud of having developed cures for our ailments, prolonging life and increasing its quality. Science can achieve nothing more noble. What messes things up is when the pursuit of good is confused with that of power. In this distorted scale, the more powerful the better. The ultimate goal is to be as powerful as gods — masters of time, of life and death.

Back to AI, there is no doubt the technology will help us tremendously. We will have better medical diagnostics, better traffic control, better bridge designs, and better pedagogical animations to teach in the classroom and virtually. But we will also have better winnings in the stock market, better war strategies, and better soldiers and remote ways of killing. This grants real power to those who control the best technologies. It increases the take of the winners of wars — those fought with weapons, and those fought with money.

A story as old as civilization

The question is how to move forward. This is where things get interesting and complicated. We hear over and over again that there is an urgent need for safeguards, for controls and legislation to deal with the AI revolution. Great. But if these machines are essentially functioning in a semi-black box of self-teaching neural nets, how exactly are we going to make safeguards that are sure to remain effective? How are we to ensure that the AI, with its unlimited ability to gather data, will not come up with new ways to bypass our safeguards, the same way that people break into safes?

The second question is that of global control. As I wrote before, overseeing new technology is complex. Should countries create a World Mind Organization that controls the technologies that develop AI? If so, how do we organize this planet-wide governing board? Who should be a part of its governing structure? What mechanisms will ensure that governments and private companies do not secretly break the rules, especially when to do so would put the most advanced weapons in the hands of the rule breakers? They will need those, after all, if other actors break the rules as well.

As before, the countries with the best scientists and engineers will have a great advantage. A new international détente will emerge in the mold of the nuclear détente of the Cold War. Again, we will fear destructive technology falling into the wrong hands. This can happen easily. AI machines will not need to be built at an industrial scale, as nuclear capabilities were, and AI-based terrorism will be a force to reckon with.

So here we are, afraid of our own technology all over again.

What is missing from this picture? It continues to illustrate the same destructive pattern of greed and power that has defined so much of our civilization. The failure it shows is moral, and only we can change it. We define civilization by the accumulation of wealth, and this worldview is killing us. The project of civilization we invented has become self-cannibalizing. As long as we do not see this, and we keep on following the same route we have trodden for the past 10,000 years, it will be very hard to legislate the technology to come and to ensure such legislation is followed. Unless, of course, AI helps us become better humans, perhaps by teaching us how stupid we have been for so long. This sounds far-fetched, given who this AI will be serving. But one can always hope.