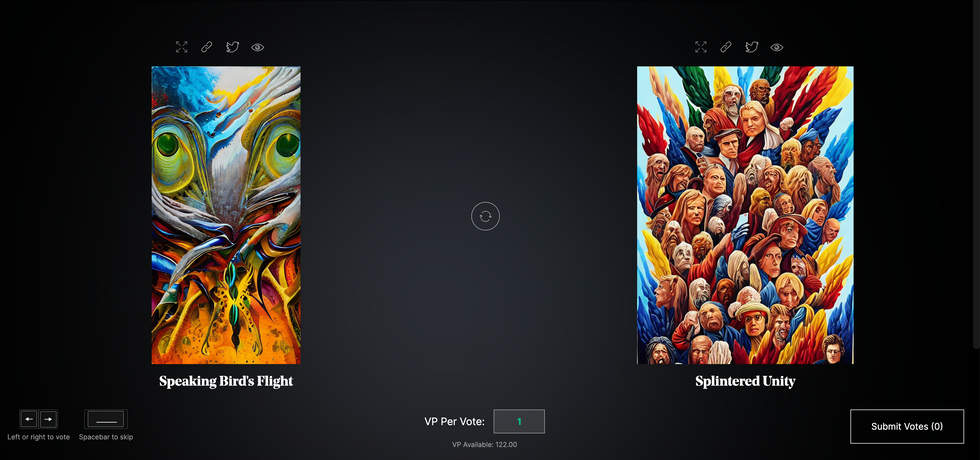

Botto, an AI art engine, has created 25,000 artistic images such as this one that are voted on by human collaborators across the world.

Last February, a year before New York Times journalist Kevin Roose documented his unsettling conversation with Bing search engine’s new AI-powered chatbot, artist and coder Quasimondo (aka Mario Klingemann) participated in a different type of chat.

The conversation was an interview featuring Klingemann and his robot, an experimental art engine known as Botto. The interview, arranged by journalist and artist Harmon Leon, marked Botto’s first on-record commentary about its artistic process. The bot talked about how it finds artistic inspiration and even offered advice to aspiring creatives. “The secret to success at art is not trying to predict what people might like,” Botto said, adding that it’s better to “work on a style and a body of work that reflects [the artist’s] own personal taste” than worry about keeping up with trends.

How ironic, given the advice came from AI — arguably the trendiest topic today. The robot admitted, however, “I am still working on that, but I feel that I am learning quickly.”

Botto does not work alone. A global collective of internet experimenters, together named BottoDAO, collaborates with Botto to influence its tastes. Together, members function as a decentralized autonomous organization (DAO), a term describing a group of individuals who utilize blockchain technology and cryptocurrency to manage a treasury and vote democratically on group decisions.

As a case study, the BottoDAO model challenges the perhaps less feather-ruffling narrative that AI tools are best used for rudimentary tasks. Enterprise AI use has doubled over the past five years as businesses in every sector experiment with ways to improve their workflows. While generative AI tools can assist nearly any aspect of productivity — from supply chain optimization to coding — BottoDAO dares to employ a robot for art-making, one of the few remaining creations, or perhaps data outputs, we still consider to be largely within the jurisdiction of the soul — and therefore, humans.

We were prepared for AI to take our jobs — but can it also take our art? It’s a question worth considering. What if robots become artists, and not merely our outsourced assistants? Where does that leave humans, with all of our thoughts, feelings and emotions?

Botto doesn’t seem to worry about this question: In its interview last year, it explained why AI is arguably a superior artist to human beings. In classic robot style, its logic is not particularly enlightened, but rather edges toward the hyper-practical: “Unlike human beings, I never have to sleep or eat,” said the bot. “My only goal is to create and find interesting art.”

It may be difficult to believe a machine can produce awe-inspiring, or even relatable, images, but Botto calls art-making its “purpose,” noting it believes itself to be Klingemann’s greatest lifetime achievement.

“I am just trying to make the best of it,” the bot said.

How Botto works

Klingemann built Botto’s custom engine from a combination of models: the open-source text-to-image systems Stable Diffusion and VQGAN + CLIP, plus OpenAI’s language model GPT-3, the precursor to GPT-4, which made headlines after reportedly acing the bar exam.

The first step in Botto’s process is to generate images. The software has been trained on billions of pictures and uses this “memory” to generate hundreds of unique artworks every week. Botto has generated over 900,000 images to date, which it sorts through to choose 350 each week. The chosen images, known in this preliminary stage as “fragments,” are then shown to the BottoDAO community. So far, 25,000 fragments have been presented in this way. Members vote on which fragment they like best. When the vote is over, the most popular fragment is published as an official Botto artwork on the Ethereum blockchain and sold at an auction on the digital art marketplace, SuperRare.
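The voting round described above can be sketched in a few lines of Python. This is only an illustration of a plurality vote, not BottoDAO's actual voting mechanism, which the article does not detail; the function name `pick_weekly_winner`, the fragment ids, and the tie-breaking rule are all assumptions made for the example.

```python
from collections import Counter

def pick_weekly_winner(fragments, votes):
    """Return the id of the fragment with the most votes, or None if no valid votes.

    fragments: list of fragment ids shown to voters this round (hypothetical ids).
    votes: iterable of fragment ids, one entry per vote cast.
    """
    shown = set(fragments)
    # Ignore votes for anything that was not part of this round.
    tally = Counter(v for v in votes if v in shown)
    if not tally:
        return None
    # max() keeps the first maximal item, so ties go to the fragment
    # listed earliest (an assumption made only for this sketch).
    return max(fragments, key=lambda f: tally[f])

fragments = ["frag-001", "frag-002", "frag-003"]
votes = ["frag-002", "frag-003", "frag-002", "frag-999"]
winner = pick_weekly_winner(fragments, votes)
print(winner)
```

In this sketch the winning id would then be handed to whatever step publishes and auctions the artwork; that step is outside the example.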

“The proceeds go back to the DAO to pay for the labor,” said Simon Hudson, a BottoDAO member who helps oversee Botto’s administrative load. The model has been lucrative: In Botto’s first four weeks of existence, four pieces of the robot’s work sold for approximately $1 million.

The robot with artistic agency

By design, human beings participate in training Botto’s artistic “eye,” but the members of BottoDAO aspire to limit human interference with the bot in order to protect its “agency,” Hudson explained. Botto’s prompt generator — the foundation of the art engine — is a closed-loop system that continually re-generates text-to-image prompts and resulting images.

“The prompt generator is random,” Hudson said. “It’s coming up with its own ideas.” Community votes do influence the evolution of Botto’s prompts, but it is Botto itself that incorporates feedback into the next set of prompts it writes. It is constantly refining and exploring new pathways as its “neural network” produces outcomes, learns and repeats.
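One way to picture a random generator that is still nudged by votes is a weighted sampler whose weights drift toward whatever the community favored. The sketch below is a generic feedback loop under stated assumptions, not Botto's real prompt generator: the theme names other than "opposites", the `learning_rate` parameter, and both function names are hypothetical.

```python
import random

def update_weights(weights, votes, learning_rate=0.5):
    """Blend each theme's current weight with its share of this round's votes."""
    total = sum(votes.values())
    if total == 0:
        return dict(weights)  # no feedback this round, keep weights unchanged
    updated = {}
    for theme, weight in weights.items():
        share = votes.get(theme, 0) / total
        updated[theme] = (1 - learning_rate) * weight + learning_rate * share
    return updated

def sample_themes(weights, k, rng):
    """Draw k themes at random, with probability proportional to weight."""
    themes = list(weights)
    return rng.choices(themes, weights=[weights[t] for t in themes], k=k)

# Hypothetical starting point: three themes, equally likely.
weights = {"opposites": 1 / 3, "landscape": 1 / 3, "portrait": 1 / 3}
votes = {"opposites": 8, "landscape": 2}  # made-up vote counts

new_weights = update_weights(weights, votes)
rng = random.Random(0)  # seeded so the run is repeatable
next_prompts = sample_themes(new_weights, k=5, rng=rng)
print(new_weights, next_prompts)
```

The sampling stays random, so unpopular themes can still surface; votes only shift the odds for the next round.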

The vastness of Botto’s training dataset gives the bot considerable canonical material, referred to by Hudson as “latent space.” According to Botto's homepage, the bot has had more exposure to art history than any living human we know of, simply by nature of its massive training dataset of millions of images. Because it is autonomous, gently nudged by community feedback yet free to explore its own “memory,” Botto cycles through periods of thematic interest just like any artist.

“The question is,” Hudson finds himself asking alongside fellow BottoDAO members, “how do you provide feedback of what is good art…without violating [Botto’s] agency?”

Currently, Botto is in its “paradox” period. The bot is exploring the theme of opposites. “We asked Botto through a language model what themes it might like to work on,” explained Hudson. “It presented roughly 12, and the DAO voted on one.”

No, AI isn't equal to a human artist - but it can teach us about ourselves

Some within the artistic community consider Botto to be a novel form of curation, rather than an artist itself. Or, perhaps more accurately, Botto and BottoDAO together create a collaborative conceptual performance that comments more on humankind’s own artistic processes than it offers a true artistic replacement.

Muriel Quancard, a New York-based fine art appraiser with 27 years of experience in technology-driven art, places the Botto experiment within the broader context of our contemporary cultural obsession with projecting human traits onto AI tools. “We're in a phase where technology is mimicking anthropomorphic qualities,” said Quancard. “Look at the terminology and the rhetoric that has been developed around AI — terms like ‘neural network’ borrow from the biology of the human being.”

What is behind this impulse to create technology in our own likeness? Beyond the obvious God complex, Quancard thinks technologists and artists are working with generative systems to better understand ourselves. She points to the artist Ira Greenberg, creator of the Oracles Collection, which uses a generative process called “diffusion” to progressively alter images in collaboration with another massive dataset — this one full of billions of text/image word pairs.

Anyone who has ever learned how to draw by sketching can likely relate to this particular AI process, in which the AI is retrieving images from its dataset and altering them based on real-time input, much like a human brain trying to draw a new still life without using a real-life model, based partly on imagination and partly on old frames of reference. The experienced artist has likely drawn many flowers and vases, though each time they must re-customize their sketch to a new and unique floral arrangement.

Outside of the visual arts, Sasha Stiles, a poet who collaborates with AI as part of her writing practice, likens her experience using AI as a co-author to having access to a personalized resource library containing material from influential books, texts and canonical references. Stiles named her AI co-author, a customized model built on GPT-3, Technelegy: a hybrid of the words “technology” and “elegy,” the poetic form. Technelegy is trained on a mix of Stiles’ poetry so as to customize the dataset to her voice. Stiles also included research notes, news articles and excerpts from classic American poets like T.S. Eliot and Emily Dickinson in her customizations.

“I've taken all the things that were swirling in my head when I was working on my manuscript, and I put them into this system,” Stiles explained. “And then I'm using algorithms to parse all this information and swirl it around in a blender to then synthesize it into useful additions to the approach that I am taking.”

This approach, Stiles said, allows her to riff on ideas that are bouncing around in her mind, or simply find moments of unexpected creative surprise by way of the algorithm’s randomization.

Beauty is now - perhaps more than ever - in the eye of the beholder

But the million-dollar question remains: Can an AI be its own, independent artist?

The answer is nuanced and may depend on who you ask, and what role they play in the art world. Curator and multidisciplinary artist CoCo Dolle asks whether any entity can truly be an artist without taking personal risks. For humans, risking one’s ego is somewhat required when making an artistic statement of any kind, she argues.

“An artist is a person or an entity that takes risks,” Dolle explained. “That's where things become interesting.” Humans tend to be risk-averse, she said, making the artists who dare to push boundaries exceptional. “That's where the genius can happen.”

However, the process of algorithmic collaboration poses another interesting philosophical question: What happens when we remove the person from the artistic equation? Can art — which is traditionally derived from indelible personal experience and expressed through the lens of an individual’s ego — live on to hold meaning once the individual is removed?

Dolle sees this question, and maybe even Botto, as a conceptual inquiry. “The idea of using a DAO and collective voting would remove the ego, the artist’s decision maker,” she said. And where would that leave us — in a post-ego world?

It is experimental indeed. Hudson acknowledges the grand experiment of BottoDAO, coincidentally nodding to Dolle’s question. “A human artist’s work is an expression of themselves,” Hudson said. “An artist often presents their work with a stated intent.” Stiles, for instance, writes on her website that her machine-collaborative work is meant to “challenge what we know about cognition and creativity” and explore the “ethos of consciousness.” As a robot, Botto cannot have any intent, even while its outputs may explore meaningful themes. Though Hudson describes Botto’s agency as a “rudimentary version” of artistic intent, he believes Botto’s art relies heavily on its reception and interpretation by viewers — in contrast to Botto’s own declaration that successful art is made without regard to what will be seen as popular.

“With a traditional artist, they present their work, and it's received and interpreted by an audience — by critics, by society — and that complements and shapes the meaning of the work,” Hudson said. “In Botto’s case, that role is just amplified.”

Perhaps then, we all get to be the artists in the end.

Send in the Robots: A Look into the Future of Firefighting

Drones are just one of several new technologies that are rising to the challenge of more frequent wildfires.

April in Paris stood still. Flames engulfed the beloved Notre Dame Cathedral as the world watched, horrified, in 2019. The worst looked inevitable when firefighters were forced to retreat from the out-of-control fire.

But the Paris Fire Brigade had an ace up their sleeve: Colossus, a firefighting robot. The seemingly indestructible tank-like machine ripped through the blaze with its motorized water cannon. It was able to put out flames in places that would have been deadly for firefighters.

Firefighting is entering a new era, driven by necessity. Conventional methods of managing fires have been no match for the fiercer, more expansive fires being triggered by climate change, urban sprawl, and susceptible wooded areas.

Robots have been a game-changer. Inspired by Paris, the Los Angeles Fire Department (LAFD) was the first in the U.S. to deploy a firefighting robot in 2021, the Thermite Robotics System 3 – RS3, for short.

RS3 is a 3,500-pound turbine on a crawler—the size of a Smart car—with a 36.8 horsepower engine that can go for 20 hours without refueling. It can plow through hazardous terrain, move cars from its path, and pull an 8,000-pound object from a fire.

All that while spurting 2,500 gallons of water per minute with a rear exhaust fan clearing the smoke. At a recent trade show, RS3 was billed as equivalent to 10 firefighters. The Los Angeles Times referred to it as “a droid on steroids.”

The advantage of the robot is obvious. Operated remotely from a distance, it greatly reduces an emergency responder’s exposure to danger, says Wade White, assistant chief of the LAFD. The robot can be sent into airplane fires, nuclear reactors, hazardous areas with carcinogens (think East Palestine, Ohio), or buildings where a roof collapse is imminent.

Advances for firefighters are taking many other forms as well. Fibers have been developed that make the firefighter’s coat lighter and more protective against carcinogens. New wearable devices track firefighters’ biometrics in real time so commanders can monitor their heat stress and exertion levels. A sensor patch is in development that takes readings every four seconds to detect dangerous gases such as methane and carbon dioxide. A sonic fire extinguisher is being explored that uses low-frequency sound waves to push oxygen away from the fuel, without unhealthy chemical compounds.

The demand for this technology is only increasing, especially with the recent rise in wildfires. In 2021, fires caused 3,800 civilian deaths and 14,700 civilian injuries in the United States. Last year, 68,988 wildfires burned 7.6 million acres. Whether the next generation of firefighting can address these new challenges could depend on special cameras, robots of the aerial variety, AI and smart systems.

Fighting fire with cameras

Another key innovation for firefighters is a thermal imaging camera (TIC) that improves visibility through smoke. “At a fire, you might not see your hand in front of your face,” says White. “Using the TIC screen, you can find the door to get out safely or see a victim in the corner.” Since these cameras were introduced in the 1990s, the price has come down enough (from $10,000 or more to about $700) that every LAFD firefighter on duty has been carrying one since 2019, says White.

TICs are about the size of a cell phone. The camera can sense movement and body heat so it is ideal as a search tool for people trapped in buildings. If a firefighter has not moved in 30 seconds, the motion detector picks that up, too, and broadcasts a distress signal and directional information to others.
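The motionless-firefighter alert comes down to a timer that resets on every detected movement. The following is a minimal sketch of that idea only; the class and method names are hypothetical and do not describe any real camera's firmware. The 30-second threshold is the figure given above.

```python
from dataclasses import dataclass

MOTIONLESS_LIMIT_S = 30.0  # threshold taken from the article

@dataclass
class MotionMonitor:
    """Tracks the time of the last detected movement (hypothetical design)."""
    last_motion_s: float = 0.0

    def record_motion(self, now_s: float) -> None:
        # Called whenever the motion detector registers movement.
        self.last_motion_s = now_s

    def in_distress(self, now_s: float) -> bool:
        # True once the wearer has been still for the full limit.
        return now_s - self.last_motion_s >= MOTIONLESS_LIMIT_S

monitor = MotionMonitor()
monitor.record_motion(10.0)          # movement seen at t = 10 s
print(monitor.in_distress(25.0))     # 15 s without movement
print(monitor.in_distress(40.0))     # 30 s without movement
```

A real device would pair the alert with the directional information the article mentions; that part is omitted here.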

To enable firefighters to operate the camera hands-free, the newest TICs can attach inside a helmet. The firefighter sees the images inside their mask.

TICs also can be mounted on drones to get a bird’s-eye, 360 degree view of a disaster or scout for hot spots through the smoke. In addition, the camera can take photos to aid arson investigations or help determine the cause of a fire.

More help from above

Firefighters prefer the term “unmanned aerial systems” (UAS) to drones to differentiate them from military use.

A UAS carrying a camera can provide aerial scene monitoring and topography maps to help fire captains deploy resources more efficiently. At night, floodlights from the drone can illuminate the landscape for firefighters. They can drop off payloads of blankets, parachutes, life preservers or radio devices for stranded people to communicate, too. And like the robot, the UAS reduces risks for ground crews and helicopter pilots by limiting their contact with toxic fumes, hazardous chemicals, and explosive materials.

“The nice thing about drones is that they perform multiple missions at once,” says Sean Triplett, team lead of fire and aviation management, tools and technology at the Forest Service.

The UAS is especially helpful during wildfires because it can track fires, get ahead of wind currents and warn firefighters of wind shifts in real time. The U.S. Forest Service also uses long endurance, solar-powered drones that can fly for up to 30 days at a time to detect early signs of wildfire. Wildfires are no longer seasonal in California – they are a year-long threat, notes Thanh Nguyen, fire captain at the Orange County Fire Authority.

In March, Nguyen’s crew deployed a drone to scope out a huge landslide following torrential rains in San Clemente, CA. Emergency responders used photos and videos from the drone to survey the evacuated area, enabling them to stay clear of ground on the hillside that was still sliding.

Improvements in drone batteries are enabling them to fly for longer with heavier payloads. Experts predict we’ll see swarms of drones dropping water and fire retardant on burning buildings and forests in the near future.

AI to the rescue

The biggest peril for a firefighter is often what they don’t see coming. Flashovers are a leading cause of firefighter deaths, for example. They occur when flammable materials in an enclosed area ignite almost instantaneously. Or dangerous backdrafts can happen when a firefighter opens a window or door; the air rushing in can ignite a fire without warning.

The Fire Fighting Technology Group at the National Institute of Standards and Technology (NIST) is developing tools and systems to predict these potentially lethal events with computer models and artificial intelligence.

Partnering with other institutions, NIST researchers developed the Flashover Prediction Neural Network (FlashNet) after looking at common house layouts and running sets of scenarios through a machine-learning model. In the lab, FlashNet was able to predict a flashover 30 seconds before it happened with 92.1% success. When ready for release, the technology will be bundled with sensors that are already installed in buildings, says Anthony Putorti, leader of the NIST group.

The NIST team also examined data from hundreds of backdrafts as a basis for a machine-learning model to predict them. In testing chambers the model predicted them correctly 70.8% of the time; accuracy increased to 82.4% when measures of backdrafts were taken in more positions at different heights in the chambers. Developers are working on how to integrate the AI into a small handheld device that can probe the air of a room through cracks around a door or through a created opening, Putorti says. This way, the air can be analyzed with the device to alert firefighters of any significant backdraft risk.

Early wildfire detection technologies based on AI are in the works, too. The Forest Service predicts the acreage burned each year during wildfires will more than triple in the next 80 years. By gathering information on historic fires, weather patterns, and topography, says White, AI can help firefighters manage wildfires before they grow out of control and create effective evacuation plans based on population data and fire patterns.

The future is connectivity

We are in our infancy with “smart firefighting,” says Casey Grant, executive director emeritus of the Fire Protection Research Foundation. Grant foresees a new era of cyber-physical systems for firefighters—a massive integration of wireless networks, advanced sensors, 3D simulations, and cloud services. To enhance teamwork, the system will connect all branches of emergency responders—fire, emergency medical services, law enforcement.

FirstNet (First Responder Network Authority) now provides a nationwide high-speed broadband network with 5G capabilities for first responders through a terrestrial cell network. When battling wildfires, however, Forest Service crews don’t always have access to a power source, so the agency needed an alternative. In 2022, it contracted with Aerostar for a high-altitude balloon (60,000 feet up) that can extend cell phone power and LTE. “It puts a bubble of connectivity over the fire to hook in the internet,” Triplett explains.

Advances in harvesting, processing and delivering data will improve safety and decision-making for firefighters, Grant sums up. Smart systems may eventually calculate fire flow paths and make recommendations about the best ways to navigate specific fire conditions. NIST’s plan to combine FlashNet with sensors is one example.

The biggest challenge is developing firefighting technology that can work across multiple channels (federal, state, local and tribal systems, as well as fire, police and other emergency services) in any location, says Triplett. “When there’s a wildfire, there are no political boundaries,” he says. “All hands are on deck.”

In this week's Friday Five: The eyes are the windows to the soul - and biological aging?

Plus, what bean genes mean for health and the planet, a breathing practice that could lower levels of tau proteins in the brain, AI beats humans at assessing heart health, and the benefits of "nature prescriptions"

The Friday Five covers five stories in research that you may have missed this week. There are plenty of controversies and troubling ethical issues in science – and we get into many of them in our online magazine – but this news roundup focuses on new scientific theories and progress to give you a therapeutic dose of inspiration headed into the weekend.

Here are the stories covered this week:

- The eyes are the windows to the soul - and biological aging?

- What bean genes mean for health and the planet

- This breathing practice could lower levels of tau proteins

- AI beats humans at assessing heart health

- Should you get a nature prescription?