The Algorithm Will See You Now

Artificial intelligence in medicine, still in an early phase, stands to transform how doctors and nurses spend their time.

There's a quiet revolution going on in medicine. It's driven by artificial intelligence, but paradoxically, new technology may put a more human face on healthcare.

Artificial intelligence is software that can process massive amounts of information and learn over time, arriving at decisions with striking accuracy and efficiency. It offers greater accuracy in diagnosis, exponentially faster genome sequencing, the mining of medical literature and patient records at breathtaking speed, a dramatic reduction in administrative bureaucracy, personalized medicine, and even the democratization of healthcare.

The algorithms that bring these advantages won't replace doctors. Rather, by taking over some of the most time-consuming tasks in healthcare, they will free providers to focus on personal interactions with patients: listening, empathizing, educating and generally putting the care back in healthcare. The relationship can then focus on the alleviation of suffering, both physical and emotional.

Challenges of Getting AI Up and Running

The AI revolution, still in its early phase in medicine, is already spurring some amazing advances, despite the fact that some experts say it has been overhyped. IBM's Watson Health program is a case in point. IBM capitalized on Watson's ability to process natural language by designing algorithms that devour data like medical articles and analyze images like MRIs and medical slides. The algorithms help diagnose diseases and recommend treatment strategies.

But Technology Review reported that a heavily hyped partnership with the MD Anderson Cancer Center in Houston fell apart in 2017 because of a lack of data in the proper format. The data existed, just not in a way that the voraciously data-hungry AI could use to train itself.

The hiccup certainly hasn't dampened the enthusiasm for medical AI among other tech giants, including Google and Apple, both of which have invested billions in their own healthcare projects. At this point, the main challenge is the need for algorithms to interpret a huge diversity of data mined from medical records. This can include everything from CT scans, MRIs, electrocardiograms, x-rays, and medical slides, to millions of pages of medical literature, physicians' notes, and patient histories. It can even include data from implantables and wearables such as the Apple Watch and blood sugar monitors.

None of this information is in anything resembling a standard format across and even within hospitals, clinics, and diagnostic centers. Once the algorithms are trained, however, they can crunch massive amounts of data at blinding speed, with an accuracy that matches and sometimes even exceeds that of highly experienced doctors.

Genome sequencing, for example, took years to accomplish as recently as the early 2000s. The Human Genome Project, the first sequencing of the human genome, was an international effort that took 13 years to complete. In April of this year, Rady Children's Institute for Genomic Medicine in San Diego used an AI-powered genome sequencing algorithm to diagnose rare genetic diseases in infants in about 20 hours, according to ScienceDaily.
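
For a rough sense of scale, the two figures above can be put side by side. The sketch below is only back-of-the-envelope arithmetic: it assumes an average year of 365.25 days, and the variable names are illustrative rather than drawn from either project. The comparison is also loose, since it sets the first-ever sequencing of a human genome against a diagnostic workflow.

```python
# Figures quoted in the article; the calendar constant is an assumed average.
HOURS_PER_YEAR = 365.25 * 24   # average year, including leap days

hgp_years = 13                 # Human Genome Project duration, per the article
rady_hours = 20                # approximate time to diagnosis, per the article

hgp_hours = hgp_years * HOURS_PER_YEAR
speedup = hgp_hours / rady_hours

print(f"Human Genome Project: about {hgp_hours:,.0f} hours")
print(f"Rough ratio of elapsed time: about {speedup:,.0f} to 1")
```

Under those assumptions, the elapsed time shrinks by a factor in the thousands.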

Dr. Stephen Kingsmore, the lead author of an article published in Science Translational Medicine, emphasized that even though the algorithm helped guide the treatment strategies of neonatal intensive care physicians, the doctor was still an indispensable link in the chain. "Some people call this artificial intelligence, we call it augmented intelligence," he says. "Patient care will always begin and end with the doctor."

One existing trend is helping to supply a great amount of valuable data to algorithms—the electronic health record. Initially blamed for exacerbating the already crushing workload of many physicians, the EHR is emerging as a boon for algorithms because it consolidates all of a patient's data in one record.

Examples of AI in Action Around the Globe

If you're a parent who has ever taken a child to the doctor with flulike symptoms, you know the anxiety of wondering if the symptoms signal something serious. Kang Zhang, M.D., Ph.D., the founding director of the Institute for Genomic Medicine at the University of California at San Diego, and colleagues developed an AI natural language processing model that used deep learning to analyze the EHRs of 1.3 million pediatric visits to a clinic in Guangzhou, China.

The AI identified common childhood diseases with about the same accuracy as human doctors, and it was even able to split the diagnoses into two categories—common conditions such as flu, and serious, life-threatening conditions like meningitis. Zhang has emphasized that the algorithm didn't replace the human doctor, but it did streamline the diagnostic process and could be used in a triage capacity when emergency room personnel need to prioritize the seriously ill over those suffering from common, less dangerous ailments.

AI's usefulness in healthcare ranges far and wide. In Uganda and several other African nations, AI is bringing modern diagnostics to remote villages that have no access to traditional technologies such as x-rays. The New York Times recently reported that there, doctors are using a pocket-sized, hand-held ultrasound machine that works in concert with a cell phone to image and diagnose everything from pneumonia (a common killer of children) to cancerous tumors.

The beauty of the highly portable, battery-powered device is that ultrasound images can be uploaded on computers so that physicians anywhere in the world can review them and weigh in with their advice. And the images are instantly incorporated into the patient's EHR.

Jonathan Rothberg, the founder of Butterfly Network, the Connecticut company that makes the device, told The New York Times that "Two thirds of the world's population gets no imaging at all. When you put something on a chip, the price goes down and you democratize it." The Butterfly ultrasound machine, which sells for $2,000, promises to be a game-changer in remote areas of Africa, South America, and Asia, as well as at the bedsides of patients in developed countries.

AI algorithms are rapidly emerging in healthcare across the U.S. and the world. China has become a major international player, set to surpass the U.S. this year in AI capital investment, the translation of AI research into marketable products, and even the number of often-cited research papers on AI. So far the U.S. is still the leader, but some experts describe the relationship between the U.S. and China as an AI cold war.

The U.S. Food and Drug Administration expanded its approval of medical algorithms from two in all of 2017 to about two per month throughout 2018. One of the first fields to be impacted is ophthalmology.

One algorithm, developed by the British AI company DeepMind (owned by Alphabet, the parent company of Google), instantly scans patients' retinas and is able to diagnose diabetic retinopathy without needing an ophthalmologist to interpret the scans. This means diabetics can get the test every year from their family physician without having to see a specialist. The Financial Times reported in March that the technology is now being used in clinics throughout Europe.

In Copenhagen, emergency service dispatchers are using a new voice-processing AI called Corti to analyze the conversations in emergency phone calls. The algorithm analyzes the verbal cues of callers, searches its huge database of medical information, and provides dispatchers with onscreen diagnostic information. Freddy Lippert, the CEO of EMS Copenhagen, notes that the algorithm has already saved lives by expediting accurate diagnoses in high-pressure situations where time is of the essence.

Researchers at the University of Nottingham in the UK have even developed a deep learning algorithm that predicts death more accurately than human clinicians. The algorithm incorporates data from a huge range of factors in a chronically ill population, including how many fruits and vegetables a patient eats on a daily basis. Dr. Stephen Weng, lead author of the study, published in PLOS ONE, said in a press release, "We found machine learning algorithms were significantly more accurate in predicting death than the standard prediction models developed by a human expert."

New digital technologies are allowing patients to participate in their healthcare as never before. A feature of the new Apple Watch is an app that detects cardiac arrhythmias and even produces an electrocardiogram if an abnormality is detected. The technology, approved by the FDA, is helping cardiologists monitor heart patients and design interventions for those who may be at higher risk of a cardiac event like a stroke.

If having an algorithm predict your death sends a shiver down your spine, consider that algorithms may keep you alive longer. In 2018, technology reporter Tristan Greene wrote for Medium that "…despite the unending deluge of panic-ridden articles declaring AI the path to apocalypse, we're now living in a world where algorithms save lives every day. The future of machine learning isn't sentient killer robots. It's longer human lives."

The Risks of AI Compiling Your Data

To be sure, the advent of AI-infused medical technology is not without its risks. One risk is that the use of AI wearables constantly monitoring our vital signs could turn us into a nation of hypochondriacs, racing to our doctors every time there's a blip in some vital sign. Such a development could stress an already overburdened system that suffers from, among other things, a shortage of doctors and nurses. Another risk has to do with the privacy protections on the massive repository of intimately personal information that AI will have on us.

In an article recently published in the Journal of the American Medical Association, Australian researcher Kit Huckvale and colleagues examined the handling of data by 36 smartphone apps that assisted people with either depression or smoking cessation, two areas that could lend themselves to stigmatization if they fell into the wrong hands.

Out of the 36 apps, 33 shared their data with third parties, despite the fact that just 25 of those apps had a privacy policy at all and out of those, only 23 stated that data would be shared with third parties. The recipients of all that data? It went almost exclusively to Facebook and Google, to be used for advertising and marketing purposes. But there's nothing to stop it from ending up in the hands of insurers, background databases, or any other entity.
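
The figures above are easier to weigh as proportions. The following is a minimal sketch that uses only the counts quoted in this article; the constant and function names are hypothetical. It relies on one simple observation: if no more than 23 apps told users their data would be shared, then at least 33 minus 23 of the apps that shared data did so without saying so.

```python
# Counts as quoted in the article above; names are illustrative only.
TOTAL_APPS = 36
SHARED_WITH_THIRD_PARTIES = 33
HAD_PRIVACY_POLICY = 25
POLICY_DISCLOSED_SHARING = 23


def percent(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


share_rate = percent(SHARED_WITH_THIRD_PARTIES, TOTAL_APPS)

# At most 23 apps disclosed sharing, so at least this many of the
# 33 sharing apps passed data along without a stated disclosure.
min_undisclosed_sharers = SHARED_WITH_THIRD_PARTIES - POLICY_DISCLOSED_SHARING

print(f"Apps sharing data with third parties: {share_rate}%")
print(f"Sharing apps with no stated disclosure: at least {min_undisclosed_sharers}")
```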

Even when data isn't voluntarily shared, any digital information can be hacked. EHRs and even wearable devices share the same vulnerability as any other digital record or device. Still, the promise of AI to radically improve efficiency and accuracy in healthcare is hard to ignore.

AI Can Help Restore Humanity to Medicine

Eric Topol, director of the Scripps Research Translational Institute and author of the new book Deep Medicine, says that AI gives doctors and nurses the most precious gift of all: time.

Topol welcomes his patients' use of the Apple Watch cardiac feature and is optimistic about the ways that AI is revolutionizing medicine. He says that the watch helps doctors monitor how well medications are working and has already helped to prevent strokes. But in addition to that, AI will help bring the humanity back to a profession that has become as cold and hard as a stainless steel dissection table.

"When I graduated from medical school in the 1970s," he says, "you had a really intimate relationship with your doctor." Over the decades, he has seen that relationship steadily erode as medical organizations demanded that doctors see more and more patients within ever-shrinking time windows.

In addition to that, EHRs have meant that doctors and nurses are getting buried in paperwork and administrative tasks. This is no doubt one reason why a recent study by the World Health Organization showed that worldwide, about 50 percent of doctors suffer from burnout. People who are utterly exhausted make more mistakes, and medical clinicians are no different from the rest of us. But medical mistakes carry unacceptably high stakes. According to its website, Johns Hopkins University recently announced that in the U.S. alone, 250,000 people die from medical mistakes each year.

"Doctors have no time to think, to communicate," says Topol. "We need to restore the mission in medicine." AI is giving doctors more time to devote to the thing that attracted them to medicine in the first place—connecting deeply with patients.

There is a real danger at this juncture, though, that administrators aware of the time-saving aspects of AI will simply push doctors to see more patients, read more tests, and embrace an even more crushing workload.

"We can't leave it to the administrators to just make things worse," says Topol. "Now is the time for doctors to advocate for a restoration of the human touch. We need to stand up for patients and for the patient-doctor relationship."

AI could indeed be a game changer, he says, but rather than squander the huge benefits of more time, "We need a new equation going forward."

A newly discovered brain cell may lead to better treatments for cognitive disorders

Swiss researchers have found a type of brain cell that appears to be a hybrid of the two other main types — and it could lead to new treatments for brain disorders.

Swiss researchers have discovered a third type of brain cell that appears to be a hybrid of the two other primary types — and it could lead to new treatments for many brain disorders.

The challenge: Most of the cells in the brain are either neurons or glial cells. While neurons use electrical and chemical signals to send messages to one another across small gaps called synapses, glial cells exist to support and protect neurons.

Astrocytes are a type of glial cell found near synapses. This close proximity to the place where brain signals are sent and received has led researchers to suspect that astrocytes might play an active role in the transmission of information inside the brain — a.k.a. “neurotransmission” — but no one has been able to prove the theory.

A new brain cell: Researchers at the Wyss Center for Bio and Neuroengineering and the University of Lausanne believe they’ve definitively proven that some astrocytes do actively participate in neurotransmission, making them a sort of hybrid of neurons and glial cells.

According to the researchers, this third type of brain cell, which they call a “glutamatergic astrocyte,” could offer a way to treat Alzheimer’s, Parkinson’s, and other disorders of the nervous system.

“Its discovery opens up immense research prospects,” said study co-director Andrea Volterra.

The study: Neurotransmission starts with a neuron releasing a chemical called a neurotransmitter, so the first thing the researchers did in their study was look at whether astrocytes can release the main neurotransmitter used by neurons: glutamate.

By analyzing astrocytes taken from the brains of mice, they discovered that certain astrocytes in the brain’s hippocampus did include the “molecular machinery” needed to excrete glutamate. They found evidence of the same machinery when they looked at datasets of human glial cells.

Finally, to demonstrate that these hybrid cells are actually playing a role in brain signaling, the researchers suppressed their ability to secrete glutamate in the brains of mice. This caused the rodents to experience memory problems.

But why? The researchers aren’t sure why the brain needs glutamatergic astrocytes when it already has neurons, but Volterra suspects the hybrid brain cells may help with the distribution of signals — a single astrocyte can be in contact with thousands of synapses.

“Often, we have neuronal information that needs to spread to larger ensembles, and neurons are not very good for the coordination of this,” researcher Ludovic Telley told New Scientist.

Looking ahead: More research is needed to see how the new brain cell functions in people, but the discovery that it plays a role in memory in mice suggests it might be a worthwhile target for Alzheimer’s disease treatments.

The researchers also found evidence during their study that the cell might play a role in brain circuits linked to seizures and voluntary movements, meaning it’s also a new lead in the hunt for better epilepsy and Parkinson’s treatments.

“Our next studies will explore the potential protective role of this type of cell against memory impairment in Alzheimer’s disease, as well as its role in other regions and pathologies than those explored here,” said Volterra.

Researchers claimed they built a breakthrough superconductor. Social media shot it down almost instantly.

In July, South Korean scientists posted a paper finding they had achieved superconductivity - a claim that was debunked within days.

Harsh Mathur was a graduate physics student at Yale University in late 1989 when faculty announced they had failed to replicate claims made by scientists at the University of Utah and the University of Southampton in England.

Such work is routine. Replicating or attempting to replicate the contraptions, calculations and conclusions crafted by colleagues is foundational to the scientific method. But in this instance, Yale’s findings were reported globally.

“I had a ringside view, and it was crazy,” recalls Mathur, now a professor of physics at Case Western Reserve University in Ohio.

Yale’s findings drew so much attention because initial experiments by Stanley Pons of Utah and Martin Fleischmann of Southampton led to a startling claim: They were able to fuse atoms at room temperature – a scientific El Dorado known as “cold fusion.”

Nuclear fusion powers the stars in the universe. However, star cores must be at least 23.4 million degrees Fahrenheit and under extraordinary pressure to achieve fusion. Pons and Fleischmann claimed they had created an almost limitless source of power achievable at any temperature.

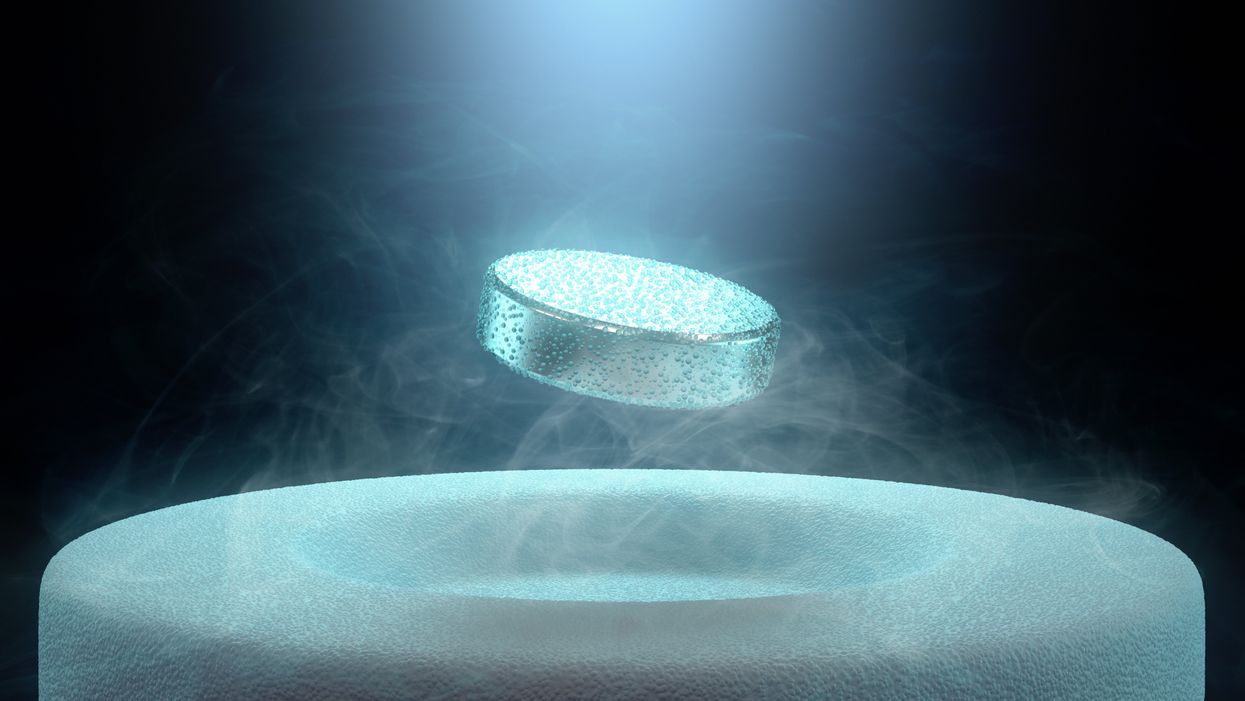

Like fusion, superconductivity can only be achieved in mostly impractical circumstances.

But about six months after they made their startling announcement, the pair’s findings were discredited by researchers at Yale and the California Institute of Technology. It was one of the first instances of a major scientific debunking covered by mass media.

Some scholars say the media attention for cold fusion stemmed partly from a dazzling announcement made three years prior in 1986: Scientists had created the first “high-temperature superconductor” – a material that could transmit electrical current with little or no resistance at temperatures well above those earlier superconductors required. It drew global headlines – and whetted the public’s appetite for announcements of scientific breakthroughs that could cause economic transformations.

But like fusion, superconductivity can only be achieved in mostly impractical circumstances: The material must be kept at temperatures of negative 100 degrees Fahrenheit or colder, or under pressures of around 150,000 pounds per square inch. Superconductivity that functions in something closer to a normal environment would cut energy costs dramatically while also opening infinite possibilities for computing, space travel and other applications.
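
Readers who think in metric units may find those figures easier to picture after conversion. The sketch below applies the standard Fahrenheit and psi conversion formulas to the numbers quoted above; the function names are illustrative, and the inputs are the article's round figures rather than measured thresholds.

```python
def fahrenheit_to_celsius(deg_f):
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (deg_f - 32) * 5 / 9


def fahrenheit_to_kelvin(deg_f):
    """Convert a temperature from degrees Fahrenheit to kelvins."""
    return fahrenheit_to_celsius(deg_f) + 273.15


PASCALS_PER_PSI = 6894.757  # standard conversion factor


def psi_to_gigapascals(psi):
    """Convert a pressure from pounds per square inch to gigapascals."""
    return psi * PASCALS_PER_PSI / 1e9


# Round figures quoted in the article.
temp_f = -100
pressure_psi = 150_000

print(f"{temp_f} F is about {fahrenheit_to_celsius(temp_f):.1f} C "
      f"or {fahrenheit_to_kelvin(temp_f):.1f} K")
print(f"{pressure_psi:,} psi is about {psi_to_gigapascals(pressure_psi):.2f} GPa")
```

The same helper functions apply to the fusion temperatures cited elsewhere in this piece.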

In July, a group of South Korean scientists posted material claiming they had created an iron crystalline substance called LK-99 that could achieve superconductivity at slightly above room temperature and at ambient pressure. The group partners with the Quantum Energy Research Centre, a privately-held enterprise in Seoul, and their claims drew global headlines.

Their work was also debunked. But in the age of the internet and social media, the process was compressed from half a year into days. And it did not require researchers at world-class universities.

One of the most compelling critiques came from Derrick VanGennep. Although he works in finance, he holds a Ph.D. in physics and held a postdoctoral position at Harvard. The South Korean researchers had posted a video of a nugget of LK-99 in what they claimed was the throes of the Meissner effect – an expulsion of the substance’s magnetic field that would cause it to levitate above a magnet. Unless Hollywood magic is involved, only superconducting material can hover in this manner.

That claim made VanGennep skeptical, particularly since LK-99’s levitation appeared unenthusiastic at best. In fact, a corner of the material still adhered to the magnet near its center. He thought the video demonstrated ferromagnetism – two magnets repulsing one another. He mixed powdered graphite with super glue, stuck iron filings to its surface and mimicked the behavior of LK-99 in his own video, which was posted alongside the researchers’ video.

VanGennep believes the boldness of the South Korean claim was what led to him and others in the scientific community questioning it so quickly.

“The swift replication attempts stemmed from the combination of the extreme claim, the fact that the synthesis for this material is very straightforward and fast, and the amount of attention that this story was getting on social media,” he says.

But practicing scientists were suspicious of the data as well. Michael Norman, director of the Argonne Quantum Institute at the Argonne National Laboratory just outside of Chicago, had doubts immediately.

“It wasn’t a very polished paper,” Norman says of the Korean scientists’ work. That opinion was reinforced, he adds, when it turned out the paper had been posted online by one of the researchers prior to seeking publication in a peer-reviewed journal. Although Norman and Mathur say that is routine with scientific research these days, Norman notes it was posted by one of the junior researchers over the doubts of two more senior scientists on the project.

Norman also raises doubts about the data reported. Among other issues, he observes that the samples created by the South Korean researchers contained traces of copper sulfide that could inadvertently amplify findings of conductivity.

The lack of the Meissner effect also caught Mathur’s attention. “Ferromagnets tend to be unstable when they levitate,” he says, adding that the video “just made me feel unconvinced. And it made me feel like they hadn't made a very good case for themselves.”

Will this saga hurt or even affect the careers of the South Korean researchers? Possibly not, if the previous fusion example is any indication. Despite being debunked, cold fusion claimants Pons and Fleischmann didn’t disappear. They moved their research to automaker Toyota’s IMRA laboratory in France, which along with the Japanese government spent tens of millions of dollars on their work before finally pulling the plug in 1998.

Fusion has since been achieved in laboratories, but because they cannot reproduce the density of a star’s core, the process requires excruciatingly high temperatures: about 160 million degrees Fahrenheit. A recently released Government Accountability Office report concludes practical fusion likely remains at least decades away.

However, like Pons and Fleischmann, the South Korean researchers are not going anywhere. They claim that LK-99’s Meissner effect is being obscured by the fact the substance is both ferromagnetic and diamagnetic. They have filed for a patent in their country. But for now, those claims remain chimerical.

In the meantime, opinion as to when a room temperature superconductor will be achieved is mixed. VanGennep – who studied the issue during his graduate and postgraduate work – puts the chance of creating such a superconductor by 2050 at perhaps 50-50. Mathur believes it could happen sooner, but adds that research on the topic has been going on for nearly a century, and that it has seen many plateaus.

“There's always this possibility that there's going to be something out there that we're going to discover unexpectedly,” Norman notes. The only certainty in this age of social media is that it will be put through the rigors of replication instantly.