The Shiny (and Potentially Dangerous) New Tool for Predicting Human Behavior

[Editor's Note: This essay is in response to our current Big Question, which we posed to experts with different perspectives: "How should DNA tests for intelligence be used, if at all, by parents and educators?"]

Imagine a world in which pregnant women could go to the doctor and obtain a simple, inexpensive genetic test of their unborn child that would allow them to predict how tall he or she would eventually be. The test might also tell them the child's risk for high blood pressure or heart disease.

Even more remarkable -- and more dangerous -- the test might predict how intelligent the child would be, or how far he or she could be expected to go in school. Or heading further out, it might predict whether he or she will be an alcoholic or a teetotaler, or straight or gay, or… you get the idea. Is this really possible? If it is, would it be a good idea? Answering these questions requires some background in a scientific field called behavior genetics.

Differences in human behavior -- intelligence, personality, mental illness, pretty much everything -- are related to genetic differences among people. Scientists have known this for 150 years, ever since Darwin's half-cousin Francis Galton first applied Shakespeare's phrase "Nature and Nurture" to the scientific investigation of human differences. We knew about the heritability of behavior before Mendel's laws of genetics were rediscovered at the turn of the twentieth century, and long before the structure of DNA was discovered in the 1950s. How could discoveries about genetics be made before a science of genetics even existed?

The answer is that scientists developed clever research designs that allowed them to make inferences about genetics in the absence of biological knowledge about DNA. The best-known is the twin study: identical twins are essentially clones, sharing 100 percent of their DNA, while fraternal twins are essentially ordinary siblings, sharing on average half. To the extent that identical twins are more similar for some trait than fraternal twins, one can infer that heredity is playing a role. Adoption studies are even more straightforward. Is the personality of an adopted child more like the biological parents she has never seen, or the adoptive parents who raised her?
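As a rough illustration of the twin-study logic, one textbook approximation (Falconer's formula, which this essay does not name) doubles the gap between the identical-twin and fraternal-twin correlations to estimate how much of the variation in a trait is associated with genetic differences. The sketch below assumes that simplified model; the function name and the correlation values are hypothetical, chosen only to make the arithmetic visible.

```python
def falconer_heritability(r_identical: float, r_fraternal: float) -> float:
    """Rough heritability estimate from twin correlations.

    Assumes the simplified textbook model (Falconer's formula):
    heritability is about twice the difference between the trait
    correlation in identical twins and the correlation in fraternal twins.
    """
    return 2.0 * (r_identical - r_fraternal)


# Hypothetical correlations, invented for illustration only.
estimate = falconer_heritability(r_identical=0.75, r_fraternal=0.5)
print(estimate)  # 0.5

# If identical twins are no more alike than fraternal twins,
# the estimate is zero: no evidence that heredity plays a role.
print(falconer_heritability(0.5, 0.5))  # 0.0
```

The estimate inherits every assumption of the twin design, for example that both kinds of twins share their environments to the same degree, so it is better read as a summary of similarity than as a description of how genes work.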

Twin and adoption studies were central to establishing beyond any reasonable doubt that genetic differences play a role in the development of differences in behavior, but they told us very little about how the genetics of behavior actually worked. When the human genome was finally sequenced in the early 2000s, and it became easier and cheaper to obtain actual DNA from large samples of people, scientists anticipated that we would soon find the genes for intelligence, mental illness, and all the other behaviors that were known to be "heritable" in a general way.

But to everyone's amazement, the genes weren't there. It turned out that there are thousands of genes related to any given behavior, so many that they can't be counted, and each one of them has such a tiny effect that it can't be tied to meaningful biological processes. The whole scientific enterprise of understanding the genetics of behavior seemed ready to collapse, until it was rescued -- sort of -- by a new method called polygenic scores, PGS for short. Polygenic scores abandon the old task of finding the genes for complex human behavior, replacing it with black-box prediction: can we use DNA not to understand, but to predict who is going to be intelligent or extraverted or mentally ill?

PGS are the shiny new toy of human genetics. From a technological standpoint they are truly amazing, and they are useful for some scientific applications that don't involve making decisions about individual people. We can obtain DNA from thousands of people, estimate the tiny relationships between individual bits of DNA and any outcome we want — height or weight or cardiac disease or IQ — and then add all those tiny effects together into a single bell-shaped score that can predict the outcome of interest. In theory, we could do this from the moment of conception.
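To make the "add all those tiny effects together" step concrete, here is a minimal sketch of a polygenic score as a weighted sum. It assumes each person's DNA is summarized as a count (0, 1, or 2) of a particular allele at each variant, and that the per-variant weights were estimated beforehand in a large sample. The variant names, the weights, and the `polygenic_score` function are all invented for illustration; real scores combine thousands of variants or more, each with a far smaller weight.

```python
from typing import Dict


def polygenic_score(allele_counts: Dict[str, int], weights: Dict[str, float]) -> float:
    """Add up per-variant effect estimates, each scaled by the allele count.

    allele_counts: variant name -> 0, 1, or 2 copies of the counted allele.
    weights: variant name -> estimated effect per copy (hypothetical here).
    Variants missing from allele_counts are treated as 0 copies.
    """
    return sum(weights[v] * allele_counts.get(v, 0) for v in weights)


# Hypothetical weights and genotype, invented for illustration only.
weights = {"variant_a": 0.5, "variant_b": -0.25, "variant_c": 0.125}
person = {"variant_a": 2, "variant_b": 1, "variant_c": 0}

score = polygenic_score(person, weights)
print(score)  # 0.5*2 + (-0.25)*1 + 0.125*0 = 0.75
```

Nothing in the sum says why a variant carries the weight it does, which is the sense in which the score is a black-box prediction.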

Polygenic scores for height already work pretty well. Physicians are debating whether the PGS for heart disease are robust enough to be used in the clinic. For some behavioral traits (the most data exist for educational attainment) they work well enough to be scientifically interesting, if not practically useful. For traits like personality or sexual orientation, the prediction is statistically significant but nowhere close to practically meaningful. No one knows how much better any of these predictions are likely to get.

Without a doubt, PGS are an amazing feat of genomic technology, but the task they accomplish is something scientists have been able to do for a long time, and in fact it is something that our grandparents could have done pretty well. PGS are basically a new way to predict a trait in an individual by using the same trait in the individual's parents — a way of observing that the acorn doesn't fall far from the tree.

The children of tall people tend to be tall. Children of excellent athletes are athletic; children of smart people are smart; children of people with heart disease are at risk themselves. Not every time, of course, but that is how imperfect prediction works: children of tall parents vary in their height like anyone else, but on average they are taller than the rest of us. Prediction from observing parents works better, and is far easier and cheaper, than anything we can do with DNA.

But wait a minute. Prediction from parents isn't strictly genetic. Smart parents not only pass on their genes to their kids, but they also raise them. Smart families are privileged in thousands of ways — they make more money and can send their kids to better schools. The same is true for PGS.

The ability of a genetic score to predict educational attainment depends not only on examining the relationship between certain genes and how far people go in school, but also on every personal and social characteristic that helps or hinders education: wealth, status, discrimination, you name it. The bottom line is that for any kind of prediction of human behavior, separation of genetic from environmental prediction is very difficult; ultimately it isn't possible.

This is a reminder that we really have no idea why either parents or PGS predict as well or as poorly as they do. It is easy to imagine that a PGS for educational attainment works because it is summarizing genes that code for efficient neurological development, bigger brains, and swifter problem solving, but we really don't know that. PGS could work because they are associated with being rich, or being motivated, or having light skin. It's the same for predicting from parents. We just don't know.

Still, experts are already discussing how to use PGS to make predictions for children, and even for embryos.

For example, maybe couples could fertilize multiple embryos in vitro, test their DNA, and select the one with the "best" PGS on some trait. This would be a bad idea for a lot of reasons. Such scores aren't effective enough to be very useful to parents, and to the extent they are effective, it is very difficult to know what other traits might be selected for when parents try to prioritize intelligence or attractiveness. People will no doubt try it anyway, and as a matter of reproductive freedom I can't think of any way to stop them. Fortunately, the practice probably won't have any great impact one way or another.

That brings us to the ethics of PGS, particularly in the schools. Imagine that when a child enrolls in a public school, an IQ test is given to her biological parents. Children with low-IQ parents are statistically more likely to have low IQs themselves, so they could be assigned to less demanding classrooms or vocational programs. Hopefully we agree that this would be unethical, but let's think through why.

First of all, it would be unethical because we don't know why the parents have low IQs, or why their IQs predict their children's. The parents could be from a marginalized ethnic group, recognizable by a skin color that is passed on genetically to their children, so discriminating based on a parent's IQ would just be a proxy for discriminating based on skin color. Such a system would be no more than a social scientific gloss on an old-fashioned program for perpetuating economic and cognitive privilege via the educational system.

Assigning children to classrooms based on genetic testing would be no different, although it would have the slight ethical advantage of being less effective. The PGS for educational attainment could reflect brain efficiency, but it could also depend on skin color, or economic advantage, or personality, or literally anything that is related in any way to economic success. Privileging kids with higher genetic scores would be no different than privileging children with smart parents. If schools really believe that a psychological trait like IQ is important for school placement, the sensible thing is to give the children an actual IQ test, not a genetic test.

IQ testing has its own issues, of course, but at least it involves making decisions about individuals based on their own observable characteristics, rather than on characteristics of their parents or their genome. If decisions must be made, if resources must be apportioned, people deserve to be judged on the basis of their own behavior, the content of their character. Since it can't be denied that people differ in all sorts of relevant ways, this is what it means for all people to be created equal.

Thanks to safety cautions from the COVID-19 pandemic, a strain of influenza has been completely eliminated.

If you were one of the millions who masked up, washed your hands thoroughly and socially distanced, pat yourself on the back—you may have helped change the course of human history.

Scientists say that thanks to these safety precautions, which were introduced in early 2020 as a way to stop transmission of the novel coronavirus that causes COVID-19, a strain of influenza has been completely eliminated. This marks the first time in human history that a virus has been wiped out through non-pharmaceutical interventions rather than through pharmaceutical ones such as vaccines.

The flu shot, explained

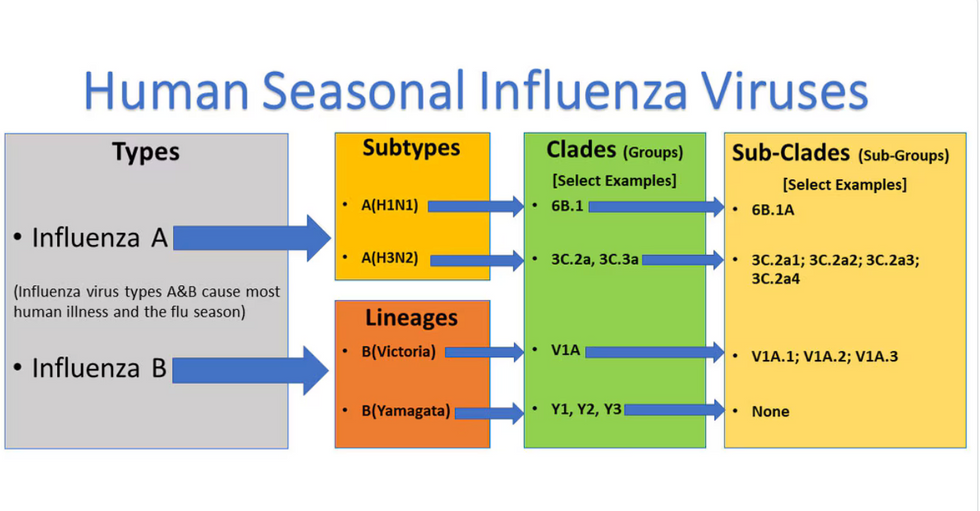

Influenza viruses type A and B are responsible for the majority of human illnesses and the flu season. (Image credit: Centers for Disease Control)

For more than a decade, flu shots have protected against two types of the influenza virus: type A and type B. While there are four different types of influenza in existence (A, B, C, and D), only types A, B, and C are capable of infecting humans, and only A and B cause the seasonal epidemics we know as flu season. In other words, if you catch the flu during flu season, you’re most likely sick with flu type A or B.

Flu vaccines contain inactivated—or dead—influenza virus. These inactivated viruses can’t cause sickness in humans, but when administered as part of a vaccine, they teach a person’s immune system to recognize and kill those viruses when they’re encountered in the wild.

Each spring, a panel of experts gives a recommendation to the US Food and Drug Administration on which strains of each flu type to include in that year’s flu vaccine, depending on what surveillance data says is circulating and what they believe is likely to cause the most illness during the upcoming flu season. For the past decade, Americans have had access to vaccines that provide protection against two strains of influenza A and two lineages of influenza B, known as the Victoria lineage and the Yamagata lineage. But this year, the seasonal flu shot won’t include the Yamagata lineage, because it is no longer circulating among humans.

How Yamagata disappeared

Flu surveillance data from the Global Initiative on Sharing All Influenza Data (GISAID) shows that the Yamagata lineage of flu type B has not been sequenced since April 2020. (Source: Nature)

Experts believe that the Yamagata lineage had already been in decline before the pandemic hit, likely because the strain was naturally less capable of infecting large numbers of people compared to the other strains. When the COVID-19 pandemic hit, the resulting safety precautions such as social distancing, isolating, hand-washing, and masking were enough to drive the virus into extinction completely.

Because the strain hasn’t been circulating since 2020, the FDA elected to remove the Yamagata strain from the seasonal flu vaccine. This will mark the first time since 2012 that the annual flu shot will be trivalent (three-component) rather than quadrivalent (four-component).

Should I still get the flu shot?

The flu shot will protect against fewer strains this year—but that doesn’t mean we should skip it. Influenza places a substantial health burden on the United States every year, responsible for hundreds of thousands of hospitalizations and tens of thousands of deaths. The flu shot has been shown to prevent millions of illnesses each year (more than six million during the 2022-2023 season). And while it’s still possible to catch the flu after getting the flu shot, studies show that people are far less likely to be hospitalized or die when they’re vaccinated.

Dropping the Yamagata lineage from the seasonal vaccine may have another, unexpected benefit: it could make production of the flu vaccine faster and enable manufacturers to make more doses, helping countries that have a flu vaccine shortage and potentially saving millions more lives.

After his grandmother’s dementia diagnosis, one man invented a snack to keep her healthy and hydrated.

Founder Lewis Hornby and his grandmother Pat, sampling Jelly Drops—an edible gummy containing water and life-saving electrolytes.

On a visit to his grandmother’s nursing home in 2016, college student Lewis Hornby made a shocking discovery: dehydration is a common (and dangerous) problem among seniors, especially those who have been diagnosed with dementia.

Hornby’s grandmother, Pat, had always had difficulty keeping up her water intake as she got older, a common issue with seniors. As we age, our body composition changes, and we naturally hold less water than younger adults or children, so it’s easier to become dehydrated quickly if those fluids aren’t replenished. What’s more, our thirst signals diminish naturally as we age as well—meaning our body is not as good as it once was in letting us know that we need to rehydrate. This often creates a perfect storm that commonly leads to dehydration. In Pat’s case, her dehydration was so severe she nearly died.

When Lewis Hornby visited his grandmother at her nursing home afterward, he learned that dehydration especially affects people with dementia, as they often don’t feel thirst cues at all, or may not recognize how to use cups correctly. But while dementia patients often don’t remember to drink water, it seemed to Hornby that they had less trouble remembering to eat, particularly candy.

Hornby wanted to create a solution for elderly people who struggled to keep their fluid intake up. He spent the next eighteen months researching and designing a solution and securing funding for his project. In 2019, Hornby won a sizable grant from the Alzheimer’s Society, a UK-based care and research charity for people with dementia and their caregivers. Together, through the charity’s Accelerator Program, they created a bite-sized, sugar-free, edible jelly drop that looked and tasted like candy. The candy, called Jelly Drops, contained 95% water and electrolytes, important minerals that are often lost during dehydration. The final product launched in 2020 and was an immediate success. The drops were able to provide extra hydration to the elderly, as well as help keep dementia patients safe, since dehydration commonly leads to confusion, hospitalization, and sometimes even death.

Not only did Jelly Drops quickly become a favorite snack among dementia patients in the UK, but they were able to provide an additional boost of hydration to hospital workers during the pandemic. In NHS coronavirus hospital wards, patients infected with the virus were regularly given Jelly Drops to keep their fluid levels normal—and staff members snacked on them as well, since long shifts and personal protective equipment (PPE) they were required to wear often left them feeling parched.

In April 2022, Jelly Drops launched in the United States. The company continues to donate 1% of its profits to help fund Alzheimer’s research.