To Make Science Engaging, We Need a Sesame Street for Adults

A new kind of television series could establish the baseline narratives for novel science like gene editing, quantum computing, or artificial intelligence.

This article is part of the magazine, "The Future of Science In America: The Election Issue," co-published by LeapsMag, the Aspen Institute Science & Society Program, and GOOD.

In the mid-1960s, a documentary producer in New York City wondered if the addictive jingles, clever visuals, slogans, and repetition of television ads—the ones that were captivating young children of the time—could be harnessed for good. Over the course of three months, she interviewed educators, psychologists, and artists, and the result was a bonanza of ideas.

Perhaps a new TV show could teach children letters and numbers in short animated sequences? Perhaps adults and children could read together with puppets providing comic relief and prompting interaction from the audience? And because it would be broadcast through a device already in almost every home, perhaps this show could reach across socioeconomic divides and close an early education gap?

Soon after Joan Ganz Cooney shared her landmark report, "The Potential Uses of Television in Preschool Education," in 1966, she was prototyping show ideas, attracting funding from The Carnegie Corporation, The Ford Foundation, and The Corporation for Public Broadcasting, and co-founding the Children's Television Workshop with psychologist Lloyd Morrisett. And then, on November 10, 1969, informal learning was transformed forever with the premiere of Sesame Street on public television.

For its first season, Sesame Street won three Emmy Awards and a Peabody Award. Its star, Big Bird, landed on the cover of Time Magazine, which called the show "TV's gift to children." Fifty years later, it's hard to imagine an approach to informal preschool learning that isn't Sesame Street.

And that approach can be boiled down to one word: Entertainment.

Despite decades of evidence from Sesame Street (one of the most studied television shows of all time) and more research from social science, psychology, and media communications, we haven't yet taken Ganz Cooney's concepts to heart in educating adults. Adults have news programs and documentaries and educational YouTube channels, but no Sesame Street. So why don't we make one? Here's how we can design a new kind of television to make science engaging and accessible for a public that is all too often intimidated by it.

We have to start from the realization that America is a nation of high-school graduates. By the end of high school, students have decided to abandon science because they think it's too difficult, and as a nation, we've made it acceptable for any one of us to say "I'm not good at science" and offload thinking to the ones who might be. So, is it surprising that so many Americans believe conspiracy theories? Consider the 25% who believe the release of COVID-19 was planned, the one in ten who believe the Moon landing was a hoax, or the 30–40% who think the condensation trails of planes are actually nefarious chemtrails. If we're meeting people where they are, the aim can't be to get the audience from an A to an A+, but from an F to a D, and without judgment of where they are starting from.

There's also a natural compulsion for a well-meaning educator to fill a literacy gap with a barrage of information, but this is what I call "factsplaining," and we know it doesn't work. And worse, it can backfire. In one study from 2014, parents were provided with factual information about vaccine safety, and it was the group that was already the most averse to vaccines that uniquely became even more averse.

Why? Our social identities and cognitive biases are stubborn gatekeepers when it comes to processing new information. We filter ideas through pre-existing beliefs—our values, our religions, our political ideologies. Incongruent ideas are rejected. Congruent ideas, no matter how absurd, are allowed through. We hear what we want to hear, and then our brains justify the input by creating narratives that preserve our identities. Even when we have all the facts, we can use them to support any worldview.

But social science has revealed many mechanisms for hijacking these processes through narrative storytelling, and this can form the foundation of a new kind of educational television.

As media creators, we can reject factsplaining and instead construct entertaining narratives that disrupt cognitive processes. Two-decade-old research tells us when people are immersed in entertaining fiction narratives, they loosen their defenses, opening a path for new information, editing attitudes, and inspiring new behavior. Where news about hot-button issues like climate change or vaccination might trigger resistance or a backfire effect, fiction can be crafted to be absorbing and, as a result, persuasive.

But the narratives can't be stuffed with information. They must be simplified. If this feels like the opposite of what an educator should be doing, it is possible to reduce the complexity of information, without oversimplification, through "exemplification," a framing device to tell the stories of individuals in specific circumstances that can speak to the greater issue without needing to explain it all. It's a technique you've seen used in biopics. The Discovery Channel true-crime miniseries Manhunt: Unabomber does many things well from a science storytelling perspective, including exemplifying the virtues of the scientific method through a character who argues for a new field of science, forensic linguistics, to catch one of the most notorious domestic terrorists in U.S. history.

We must also appeal to the audience's curiosity. We know curiosity is such a strong driver of human behavior that it can even counteract the biases put up by one's political ideology around subjects like climate change. If we treat science information like a product (and we should), advertising research tells us we can maximize curiosity through a Goldilocks effect. If the information is too complex, your show might as well be a PowerPoint presentation. If it's too simple, it's Sesame Street. There's a sweet spot for creating intrigue about new information when there's a moderate cognitive gap.

The science of "identification" tells us that the more the main character is endearing to a viewer, the more likely the viewer will adopt the character's worldview and journey of change. This insight further provides incentives to craft characters reflective of our audiences. If we accept our biases for what they are, we can understand why the messenger becomes more important than the message, because, without an appropriate messenger, the message becomes faint and ineffective. And research confirms that the stereotype-busting doctor-skeptic Dana Scully of The X-Files, a popular science-fiction series, was an inspiration for a generation of women who pursued science careers.

With these directions, we can start making a new kind of television. But is television itself still the right delivery medium? Americans do spend six hours per day—a quarter of their lives—watching video. And even with the rise of social media and apps, science-themed television shows remain popular, with four out of five adults reporting that they watch shows about science at least sometimes. CBS's The Big Bang Theory was the most-watched show on television in the 2017–2018 season, and Cartoon Network's Rick & Morty is the most popular comedy series among millennials. And medical and forensic dramas continue to be broadcast staples. So yes, it's as true today as it was in the 1980s when George Gerbner, the "cultivation theory" researcher who studied the long-term impacts of television images, wrote, "a single episode on primetime television can reach more people than all science and technology promotional efforts put together."

We know from cultivation theory that media images can shape our views of scientists. Quick, picture a scientist! Was it an old, white man with wild hair in a lab coat? If most Americans don't encounter research science firsthand, it's media that dictates how we perceive science and scientists. Characters like Sheldon Cooper and Rick Sanchez become the model. But we can correct that by representing professionals more accurately on-screen and writing characters more like Dana Scully.

Could new television series establish the baseline narratives for novel science like gene editing, quantum computing, or artificial intelligence? Or could new series counter the misinfodemics surrounding COVID-19 and vaccines through more compelling, corrective narratives? Social science has given us a blueprint suggesting they could. Binge-watching a show like the surreal NBC sitcom The Good Place doesn't replace a Ph.D. in philosophy, but its use of humor plants the seed of continued interest in a new subject. The goal of persuasive entertainment isn't to replace formal education, but it can inspire, shift attitudes, increase confidence in the knowledge of complex issues, and otherwise prime viewers for continued learning.

[Editor's Note: To read other articles in this special magazine issue, visit the beautifully designed e-reader version.]

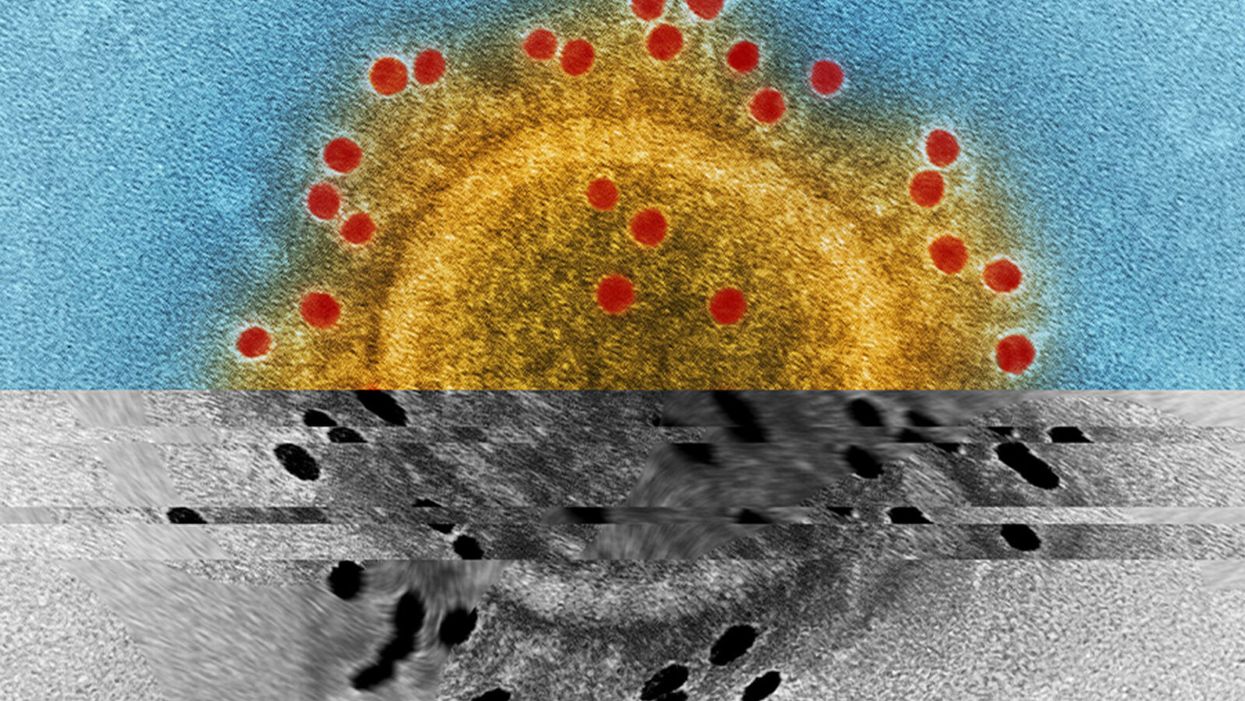

Pseudoscience Is Rampant: How Not to Fall for It

A metaphorical rendering of scientific truth gone awry.

Whom to believe?

The relentless and often unpredictable coronavirus (SARS-CoV-2) has, among its many quirky terrors, dredged up once again the issue that will not die: science versus pseudoscience.

The scientists, experts who would be the first to admit they are not infallible, are now in danger of being drowned out by the growing chorus of pseudoscientists, conspiracy theorists, and just plain troublemakers that seem to be as symptomatic of the virus as fever and weakness.

How is the average citizen to filter this cacophony of information and misinformation posing as science alongside real science? While all that noise makes it difficult to separate the real stuff from the fakes, there is at least one positive aspect to it all.

A famous aphorism by one Charles Caleb Colton, a popular 19th-century English cleric and writer, says that "imitation is the sincerest form of flattery."

The frauds and the paranoid conspiracy mongers who would perpetrate false science on a susceptible public are at least recognizing the value of science—they imitate it. They imitate the ways in which science works and make claims as if they were scientists, because even they recognize the power of a scientific approach. They are inadvertently showing us how much we value science. Unfortunately they are just shabby counterfeits.

Separating real science from pseudoscience is not a new problem. Philosophers, politicians, scientists, and others have been worrying about this perhaps since science as we know it, a science based entirely on experiment and not opinion, arrived in the 1600s. The original charter of the British Royal Society, the first organized scientific society, stated that at their formal meetings there would be no discussion of politics, religion, or perpetual motion machines.

The first two were excluded for the obvious purpose of keeping the peace. But the third is interesting because at that time perpetual motion machines were one of the main offerings of the imitators, the bogus scientists who were sure that you could find ways around the universal laws of energy and make a buck on it. The motto adopted by the society was, and remains, Nullius in verba, Latin for "take nobody's word for it." Kind of an early version of Missouri's venerable nickname, the "Show-Me State."

You might think that telling phony science from the real thing wouldn't be so difficult, but events, historical and current, tell a very different story, often with tragic outcomes. Just one terrible example is the estimated 350,000 additional HIV deaths in South Africa directly caused by the now-infamous conspiracy theories of the country's own elected president, no less (sound familiar?). It's surprisingly easy to dress up phony science as the real thing by simply adopting, or appearing to adopt, the trappings of science.

Thus, the anti-vaccine movement claims to be based on suspicion of authority, beginning with medical authority in this case, stemming from the fraudulent data published by the now-disgraced Andrew Wakefield, an English gastroenterologist. And it's true that much of science is based on suspicion of authority. Science got its start when the likes of Galileo and Copernicus claimed that the Church, the State, even Aristotle, could no longer be trusted as authoritative sources of knowledge.

But Galileo and those who followed him produced alternative explanations, and those alternatives were based on data that arose independently from many sources and generated a great deal of debate and, most importantly, could be tested by experiments that could prove them wrong. The anti-vaccine movement imitates science, still citing the discredited Wakefield report, but really offers nothing but suspicion—and that is paranoia, not science.

Similarly, there are those who try to cloak their nefarious motives in the trappings of science by claiming that they are taking the scientific posture of doubt. Science, after all, depends on doubt: every scientist doubts every finding they make. Every scientist knows that they can't possibly foresee all possible instances or situations in which they could be proven wrong, no matter how strong their data. Einstein was doubted for two decades, and cosmologists are still searching for experimental proofs of relativity. Science indeed progresses by doubt. In science, revision is a victory.

But the imitators merely use doubt to suggest that science is not dependable and should not be used for informing policy or altering our behavior. They claim to be taking the legitimate scientific stance of doubt. Of course, they don't doubt everything, only what is problematic for their individual enterprises. They don't doubt the value of blood pressure medicine to control their hypertension. But they should, because every medicine has side effects and we don't completely understand how blood pressure is regulated and whether there may not be still better ways of controlling it.

But we use the pills we have because the science is sound even when it is not completely settled. Ask a hypertensive oil executive who would like you to believe that climate science should be ignored because there are too many uncertainties in the data, if he is willing to forgo his blood pressure medicine—because it, too, has its share of uncertainties and unwanted side effects.

The apparent success of pseudoscience is not due to gullibility on the part of the public. The problem is that science is recognized as valuable and that the imitators are unfortunately good at what they do. They take a scientific pose to gain your confidence and then distort the facts to their own purposes. How does one learn to spot the con without getting a Ph.D. and spending years in a laboratory?

What can be done to make the distinction clearer? Several solutions have been tried, and seem to have failed. Radio and television shows about the latest scientific breakthroughs are a noble attempt to give the public a taste of good science, but they do nothing to help you distinguish that science from the pseudoscience being purveyed on the neighboring channel, with its "scientific investigations" of haunted houses.

Similarly, attempts to inculcate what are called "scientific habits of mind" are of little practical help. These habits of mind are not so easy to adopt. They invariably require some amount of statistics and probability and much of that is counterintuitive—one of the great values of science is to help us to counter our normal biases and expectations by showing that the actual measurements may not bear them out.

Additionally, there is math—no matter how much you try to hide it, much of the language of science is math (Galileo said that). And half the audience is gone with each equation (Stephen Hawking said that). It's hard to imagine a successful program of making a non-scientifically trained public interested in adopting the rigors of scientific habits of mind. Indeed, I suspect there are some people, artists for example, who would be rightfully suspicious of changing their thinking to being habitually scientific. Many scientists are frustrated by the public's inability to think like a scientist, but in fact it is neither easy nor always desirable to do so. And it is certainly not practical.

There is a more intuitive and simpler way to tell the difference between the real thing and the cheap knock-off. In fact, it is not so much intuitive as it is counterintuitive, so it takes a little bit of mental work. But the good thing is it works almost all the time by following a simple, if as I say, counterintuitive, rule.

True science, you see, is mostly concerned with the unknown and the uncertain. If someone claims to have the ultimate answer or that they know something for certain, the only thing for sure is that they are trying to fool you. Mystery and uncertainty may not strike you right off as desirable or strong traits, but that is precisely where one finds the creative solutions that science has historically arrived at. Yes, science accumulates factual knowledge, but it is at its best when it generates new and better questions. Uncertainty is not a place of worry, but of opportunity. Progress lives at the border of the unknown.

How much would it take to alter the public perception of science to appreciate unknowns and uncertainties along with facts and conclusions? Less than you might think. In fact, we make decisions based on uncertainty every day—what to wear in case of 60 percent chance of rain—so it should not be so difficult to extend that to science, in spite of what you were taught in school about all the hard facts in those giant textbooks.

You can believe science that says there is clear evidence that takes us this far… and then we have to speculate a bit and it could go one of two or three ways—or maybe even some way we don't see yet. But like your blood pressure medicine, the stuff we know is reliable even if incomplete. It will lower your blood pressure, no matter that better treatments with fewer side effects may await us in the future.

Unsettled science is not unsound science. The honesty and humility of someone who is willing to tell you that they don't have all the answers, but they do have some thoughtful questions to pursue, are easy to distinguish from the charlatans who have ready answers or claim that nothing should be done until we are an impossible 100-percent sure.

Imitation may be the sincerest form of flattery.

The problem, as we all know, is that flattery will get you nowhere.

[Editor's Note: This article was originally published on June 8th, 2020 as part of a standalone magazine called GOOD10: The Pandemic Issue. Produced as a partnership among LeapsMag, The Aspen Institute, and GOOD, the magazine is available for free online.]

Henrietta Lacks' Cells Enabled Medical Breakthroughs. Is It Time to Finally Retire Them?

Henrietta Lacks (1920–1951) unknowingly had her cells cultured and used in medical research.

For Victoria Tokarz, a third-year PhD student at the University of Toronto, experimenting with cells is just part of a day's work. Tokarz, 26, is studying to be a cell biologist and spends her time inside the lab manipulating muscle cells sourced from rodents to try to figure out how they respond to insulin. She hopes this research could someday lead to a breakthrough in our understanding of diabetes.

But in all her research, there is one cell culture that Tokarz refuses to touch. The culture is called HeLa, short for Henrietta Lacks, named after the 31-year-old tobacco farmer the cells were stolen from during a tumor biopsy she underwent in 1951.

"In my opinion, there's no question or experiment I can think of that validates stealing from and profiting off of a black woman's body," Tokarz says. "We're not talking about a reagent we created in a lab, a mixture of some chemicals. We're talking about a human being who suffered indescribably so we could profit off of her misfortune."

Lacks' suffering is something that, until recently, was not widely known. Born to a poor family in Roanoke, VA, Lacks was sent to live with her grandfather on the family tobacco farm at age four, shortly after the death of her mother. She gave birth to her first child at just fourteen, and two years later had another child with profound developmental disabilities. Lacks married her first cousin, David, in 1941 and the family moved to Maryland where they had three additional children.

But the real misfortune came in 1951, when Lacks told her cousins that she felt a hard "knot" in her womb. When Lacks went to Johns Hopkins Hospital to have the knot examined, doctors discovered that the hard lump Henrietta felt was a rapidly growing cervical tumor.

Before treating the tumor by inserting radium tubes into her vagina, in the hope of killing the cancer, Lacks' doctors clipped two tissue samples from her cervix without her knowledge or consent. While it's widely considered unethical today, taking tissue samples from patients in this way was commonplace at the time. The samples were sent to a cancer researcher at Johns Hopkins, and Lacks continued treatment unsuccessfully until she died a few months later of metastatic cancer.

Lacks' story was not over, however: When her tissue sample arrived at the lab of George Otto Gey, the Johns Hopkins cancer researcher, he noticed that the cancerous cells grew at a shocking pace. Unlike other cell cultures that would die within a day or two of arriving at the lab, Lacks' cells kept multiplying. They doubled every 24 hours, and to this day, have never stopped.

Scientists would later find out that this growth was due to an infection with human papillomavirus, or HPV, which is known for causing aggressive cancers. Lacks' cells became the world's first-ever "immortalized" human cell line, meaning that as long as certain environmental conditions are met, the cells can replicate indefinitely. Although scientists have cultivated other immortalized cell lines since then, HeLa cells remain a favorite among scientists due to their resilience, Tokarz says.

"People like to use HeLa cells because they're easy to use," Tokarz says. "They're easy to manipulate, because they're very hardy, and they allow for transfection, which means expressing a protein in a cell that's not normally there. Other cells, like endothelial cells, don't handle those manipulations well."

Once the doctors at Johns Hopkins discovered that Lacks' cells could replicate indefinitely, they started shipping them to labs around the world to promote medical research. As they were the only immortalized cell line available at the time, researchers used them for thousands of experiments, some of which resulted in life-saving treatments. Jonas Salk's polio vaccine, for example, was manufactured using HeLa cells. HeLa cell research was also used to develop a vaccine for HPV, and for the development of in vitro fertilization and gene mapping. Between 1951 and 2018, HeLa cells were cited in over 110,000 publications, according to a review from the National Institutes of Health.

But while some scientists like Tokarz are thankful for the advances brought about by HeLa cells, they still believe it's well past time to stop using them in research.

"Am I thankful we have a polio vaccine? Absolutely. Do I resent the way we came to have that vaccine? Absolutely," Tokarz says. "We could have still arrived at those same advances by treating her as the human being she is, not just a specimen."

Ethical considerations aside, HeLa is no longer the world's only available cell line – nor, Tokarz argues, are her cells the most suitable for every type of research. "The closer you can get to the physiology of the thing you're studying, the better," she says. "Now we have the ability to use primary cells, which are isolated from a person and put right into the culture dish, and those don't have the same mutations as cells that have been growing for 20 years. We didn't have the expertise to do that initially, but now we do."

Raphael Valdivia, a professor of molecular genetics and microbiology at Duke University School of Medicine, agrees that HeLa cells are no longer optimal for most research. "A lot of scientists are moving away from HeLa cells because they're so unstable," he says. "They mutate, they rearrange chromosomes to become adaptive, and different batches of cells evolve separately from each other. The HeLa cells in my lab are very different than the ones down the hall, and that means sometimes we can't replicate our results. We have to go back to an earlier batch of cells in the freezer and re-test."

Still, the idea of retiring the cells completely doesn't make sense, Valdivia says: "To some extent, you're beholden to previous research. You need to be able to confirm findings that happen in earlier studies, and to do that you need to use the same cell line that other researchers have used."

"The way in which the cells were taken – without patient consent – is completely inappropriate," says Yann Joly, associate professor at the Faculty of Medicine in Toronto and Research Director at the Centre of Genomics and Policy. "The question now becomes, what can we do about it now? What are our options?"

While scientists are not able to erase what was done to Henrietta Lacks, Joly argues that retiring her cells is also non-consensual, assuming – maybe incorrectly – what Henrietta would have wanted, without her input. Additionally, Joly points out that other immortalized human cell lines are fraught with what some people consider to be ethical concerns as well, such as the human embryonic kidney cell line, commonly referred to as HEK-293, that was derived from an aborted female fetus. "Just because you're using another kind of cell doesn't mean it's devoid of ethical issue," he says.

Seemingly, the one thing scientists can agree on is that Henrietta Lacks was mistreated by the medical community. But even so, retiring her cells from medical research is not an obvious solution. Scientists are now using HeLa cells to better understand how the novel coronavirus affects humans, and this knowledge will inform how researchers develop a COVID-19 vaccine.

"Ethics is not black and white, and sometimes there's no such thing as a straightforward ethical or unethical choice," Joly says. "If [ethics] were that easy, nobody would need to teach it."