Researchers Are Testing a New Stem Cell Therapy in the Hopes of Saving Millions from Blindness

NIH researchers in Kapil Bharti's lab work toward the development of induced pluripotent stem cells to treat dry age-related macular degeneration.

Of all the infirmities of old age, failing sight is among the cruelest. It can mean the end not only of independence, but of a whole spectrum of joys—from gazing at a sunset or a grandchild's face to reading a novel or watching TV.

The leading cause of vision loss in people over 55 is age-related macular degeneration, or AMD, which afflicts an estimated 11 million Americans. As photoreceptors in the macula (the central part of the retina) die off, patients experience increasingly severe blurring, dimming, distortions, and blank spots in one or both eyes.

The disorder comes in two varieties, "wet" and "dry," both driven by a complex interaction of genetic, environmental, and lifestyle factors. It begins when deposits of cellular debris accumulate beneath the retinal pigment epithelium (RPE)—a layer of cells that nourish and remove waste products from the photoreceptors above them. In wet AMD, this process triggers the growth of abnormal, leaky blood vessels that damage the photoreceptors. In dry AMD, which accounts for 80 to 90 percent of cases, RPE cells atrophy, causing photoreceptors to wither away. Wet AMD can be controlled in about a quarter of patients, usually by injections of medication into the eye. For dry AMD, no effective remedy exists.

Stem Cells: Promise and Perils

Over the past decade, stem cell therapy has been widely touted as a potential treatment for AMD. The idea is to augment a patient's ailing RPE cells with healthy ones grown in the lab. A few small clinical trials have shown promising results. In a study published in 2018, for example, a University of Southern California team cultivated RPE tissue from embryonic stem cells on a plastic matrix and transplanted it into the retinas of four patients with advanced dry AMD. Because the trial was designed to test safety rather than efficacy, lead researcher Amir Kashani told a reporter, "we didn't expect that replacing RPE cells would return a significant amount of vision." Yet acuity improved substantially in one recipient, and the others regained their lost ability to focus on an object.

Therapies based on embryonic stem cells, however, have two serious drawbacks: Using fetal cell lines raises ethical issues, and such treatments require the patient to take immunosuppressant drugs (which can cause health problems of their own) to prevent rejection. That's why some experts favor a different approach—one based on induced pluripotent stem cells (iPSCs). Such cells, first produced in 2006, are made by returning adult cells to an undifferentiated state, and then using chemicals to reprogram them as desired. Treatments grown from a patient's own tissues could sidestep both hurdles associated with embryonic cells.

At least hypothetically. Today, the only stem cell therapies approved by the U.S. Food and Drug Administration (FDA) are umbilical cord-derived products for various blood and immune disorders. Although scientists are probing the use of embryonic stem cells or iPSCs for conditions ranging from diabetes to Parkinson's disease, such applications remain experimental—or fraudulent, as a growing number of patients treated at unlicensed "stem cell clinics" have painfully learned. (Some have gone blind after receiving bogus AMD therapies at those facilities.)

Last December, researchers at the National Eye Institute in Bethesda, Maryland, began enrolling patients with dry AMD in the country's first clinical trial using tissue grown from the patients' own stem cells. Led by biologist Kapil Bharti, the team intends to implant custom-made RPE cells in 12 recipients. If the effort pans out, it could someday save the sight of countless oldsters.

That, however, is what's technically referred to as a very big "if."

The First Steps

Bharti's trial is not the first in the world to use patient-derived iPSCs to treat age-related macular degeneration. In 2013, Japanese researchers implanted such cells into the eyes of a 77-year-old woman with wet AMD; after a year, her vision had stabilized, and she no longer needed injections to keep abnormal blood vessels from forming. A second patient was scheduled for surgery—but the procedure was canceled after the lab-grown RPE cells showed signs of worrisome mutations. That incident illustrates one potential problem with using stem cells: Under some circumstances, the cells or the tissue they form could turn cancerous.

Bharti and his colleagues have gone to great lengths to avoid such outcomes. "Our process is significantly different," he told me in a phone interview. His team begins with patients' blood stem cells, which appear to be more genomically stable than the skin cells that the Japanese group used. After converting the blood cells to RPE stem cells, his team cultures them in a single layer on a biodegradable scaffold, which helps them grow in an orderly manner. "We think this material gives us a big advantage," Bharti says. The team uses a machine-learning algorithm to identify optimal cell structure and ensure quality control.

It takes about six months for a patch of iPSCs to become viable RPE cells. When they're ready, a surgeon uses a specially designed tool to insert the tiny structure into the retina. Within days, the scaffold melts away, enabling the transplanted RPE cells to integrate fully into their new environment. Bharti's team initially tested their method on rats and pigs with eye damage mimicking AMD. The study, published in January 2019 in Science Translational Medicine, found that at ten weeks, the implanted RPE cells continued to function normally and protected neighboring photoreceptors from further deterioration. No trace of mutagenesis appeared.

Encouraged by these results, Bharti began recruiting human subjects. The Phase 1 trial will likely run through 2022, followed by a larger Phase 2 trial that could last another two or three years. FDA approval would require an even larger Phase 3 trial, with a decision expected sometime between 2025 and 2028—that is, if nothing untoward happens before then. One unknown (among many) is whether implanted cells can thrive indefinitely under the biochemically hostile conditions of an eye with AMD.

"Most people don't have a sense of just how long it takes to get something like this to work, and how many failures—even disasters—there are along the way," says Marco Zarbin, professor and chair of Ophthalmology and visual science at Rutgers New Jersey Medical School and co-editor of the book Cell-Based Therapy for Degenerative Retinal Diseases. "The first kidney transplant was done in 1933. But the first successful kidney transplant was in 1954. That gives you a sense of the time frame. We're really taking the very first steps in this direction."

Looking Ahead

Even if Bharti's method proves safe and effective, there's the question of its practicality. "My sense is that using induced pluripotent stem cells to treat the patient from whom they're derived is a very expensive undertaking," Zarbin observes. "So you'd have to have a very dramatic clinical benefit to justify that cost."

Bharti concedes that the price of iPSC therapy is likely to be high, given that each "dose" is formulated for a single individual, requires months to manufacture, and must be administered via microsurgery. Still, he expects economies of scale and production to emerge with time. "We're working on automating several steps of the process," he explains. "When that kicks in, a technician will be able to make products for 10 or 20 people at once, so the cost will drop proportionately."

Meanwhile, other researchers are pressing ahead with therapies for AMD using embryonic stem cells, which could be mass-produced to treat any patient who needs them. But should that approach eventually win FDA approval, Bharti believes there will still be room for a technique that requires neither fetal cell lines nor immunosuppression.

And not only for eye ailments. "The knowledge and expertise we're gaining can be applied to many other iPSC-based therapies," says the scientist, who is currently consulting with several companies that are developing such treatments. "I'm hopeful that we can leverage these approaches for a wide range of applications, whether it's for vision or across the body."

In The Fake News Era, Are We Too Gullible? No, Says Cognitive Scientist

Cognitive scientist Hugo Mercier says the real challenge is not fighting fake news, but figuring out "how to make it easier for people who say correct things to convince people."

One of the oddest political hoaxes of recent times was Pizzagate, in which conspiracy theorists claimed that Hillary Clinton and her 2016 campaign chief ran a child sex ring from the basement of a Washington, DC, pizzeria.

Millions of believers spread the rumor on social media, abetted by Russian bots; one outraged netizen stormed the restaurant with an assault rifle and shot open what he took to be the dungeon door. (It actually led to a computer closet.) Pundits cited the imbroglio as evidence that Americans had lost the ability to tell fake news from the real thing, putting our democracy in peril.

Such fears, however, are nothing new. "For most of history, the concept of widespread credulity has been fundamental to our understanding of society," observes Hugo Mercier in Not Born Yesterday: The Science of Who We Trust and What We Believe (Princeton University Press, 2020). In the fifth century BCE, he points out, the historian Thucydides blamed Athens' defeat by Sparta on a demagogue who hoodwinked the public into supporting idiotic military strategies; Plato extended that argument to condemn democracy itself. Today, atheists and fundamentalists decry one another's gullibility, as do climate-change accepters and deniers. Leftists bemoan the masses' blind acceptance of the "dominant ideology," while conservatives accuse those who do revolt of being duped by cunning agitators.

What's changed, all sides agree, is the speed at which bamboozlement can propagate. In the digital age, it seems, a sucker is born every nanosecond.

The Case Against Credulity

Yet Mercier, a cognitive scientist at the Jean Nicod Institute in Paris, thinks we've got the problem backward. To fight disinformation more effectively, he suggests, humans need to stop believing in one thing above all: our own gullibility. "We don't credulously accept whatever we're told—even when those views are supported by the majority of the population, or by prestigious, charismatic individuals," he writes. "On the contrary, we are skilled at figuring out who to trust and what to believe, and, if anything, we're too hard rather than too easy to influence."

He bases those contentions on a growing body of research in neuropsychiatry, evolutionary psychology, and other fields. Humans, Mercier argues, are hardwired to balance openness with vigilance when assessing communicated information. To gauge a statement's accuracy, we instinctively test it from many angles, including: Does it jibe with what I already believe? Does the speaker share my interests? Has she demonstrated competence in this area? What's her reputation for trustworthiness? And, with more complex assertions: Does the argument make sense?

This process, Mercier says, enables us to learn much more from one another than do other animals, and to communicate in a far more complex way—key to our unparalleled adaptability. But it doesn't always save us from trusting liars or embracing demonstrably false beliefs. To better understand why, leapsmag spoke with the author.

How did you come to write Not Born Yesterday?

In 2010, I collaborated with the cognitive scientist Dan Sperber and some other colleagues on a paper called "Epistemic Vigilance," which laid out the argument that evolutionarily, it would make no sense for humans to be gullible. If you can be easily manipulated and influenced, you're going to be in major trouble. But as I talked to people, I kept encountering resistance. They'd tell me, "No, no, people are influenced by advertising, by political campaigns, by religious leaders." I started doing more research to see if I was wrong, and eventually I had enough to write a book.

With all the talk about "fake news" these days, the topic has gotten a lot more timely.

Yes. But on the whole, I'm skeptical that fake news matters very much. And all the energy we spend fighting it is energy not spent on other pursuits that may be better ways of improving our informational environment. The real challenge, I think, is not how to shut up people who say stupid things on the internet, but how to make it easier for people who say correct things to convince people.

You start the book with an anecdote about your encounter with a con artist several years ago, who scammed you out of 20 euros. Why did you choose that anecdote?

Although I'm arguing that people aren't generally gullible, I'm not saying we're completely impervious to attempts at tricking us. It's just that we're much better than we think at resisting manipulation. And while there's a risk of trusting someone who doesn't deserve to be trusted, there's also a risk of not trusting someone who could have been trusted. You miss out on someone who could help you, or from whom you might have learned something—including figuring out who to trust.

You argue that in humans, vigilance and open-mindedness evolved hand-in-hand, leading to a set of cognitive mechanisms you call "open vigilance."

There's a common view that people start from a state of being gullible and easy to influence, and get better at rejecting information as they become smarter and more sophisticated. But that's not what really happens. It's much harder to get apes than humans to do anything they don't want to do, for example. And research suggests that over evolutionary time, the better our species became at telling what we should and shouldn't listen to, the more open to influence we became. Even small children have ways to evaluate what people tell them.

The most basic is what I call "plausibility checking": if you tell them you're 200 years old, they're going to find that highly suspicious. Kids pay attention to competence; if someone is an expert in the relevant field, they'll trust her more. They're likelier to trust someone who's nice to them. My colleagues and I have found that by age 2 ½, children can distinguish between very strong and very weak arguments. Obviously, these skills keep developing throughout your life.

But you've found that even the most forceful leaders—and their propaganda machines—have a hard time changing people's minds.

Throughout history, there's been this fear of demagogues leading whole countries into terrible decisions. In reality, these leaders are mostly good at feeling the crowd and figuring out what people want to hear. They're not really influencing [the masses]; they're surfing on pre-existing public opinion. We know from a recent study, for instance, that if you match cities in which Hitler gave campaign speeches in the late '20s through early '30s with similar cities in which he didn't give campaign speeches, there was no difference in vote share for the Nazis. Nazi propaganda managed to make Germans who were already anti-Semitic more likely to express their anti-Semitism or act on it. But Germans who were not already anti-Semitic were completely inured to the propaganda.

So why, in totalitarian regimes, do people seem so devoted to the ruler?

It's not a very complex psychology. In these regimes, the slightest show of discontent can be punished by death, or by you and your whole family being sent to a labor camp. That doesn't mean propaganda has no effect, but you can explain people's obedience without it.

What about cult leaders and religious extremists? Their followers seem willing to believe anything.

Prophets and preachers can inspire the kind of fervor that leads people to suicidal acts or doomed crusades. But history shows that the audience's state of mind and material conditions matter more than the leader's powers of persuasion. Only when people are ready for extreme actions can a charismatic figure provide the spark that lights the fire.

Once a religion becomes ubiquitous, the limits of its persuasive powers become clear. Every anthropologist knows that in societies that are nominally dominated by orthodox belief systems—whether Christian or Muslim or anything else—most people share a view of God, or the spirit, that's closer to what you find in societies that lack such religions. In the Middle Ages, for instance, you have records of priests complaining of how unruly the people are—how they spend the whole Mass chatting or gossiping, or go on pilgrimages mostly because of all the prostitutes and wine-drinking. They continue pagan practices. They resist attempts to make them pay tithes. It's very far from our image of how much people really bought the dominant religion.

And what about all those wild rumors and conspiracy theories on social media? Don't those demonstrate widespread gullibility?

I think not, for two reasons. One is that most of these false beliefs tend to be held in a way that's not very deep. People may say Pizzagate is true, yet that belief doesn't really interact with the rest of their cognition or their behavior. If you really believe that children are being abused, then trying to free them is the moral and rational thing to do. But the only person who did that was the guy who took his assault weapon to the pizzeria. Most people just left one-star reviews of the restaurant.

The other reason is that most of these beliefs actually play some useful role for people. Before any ethnic massacre, for example, rumors circulate about atrocities having been committed by the targeted minority. But those beliefs aren't what's really driving the phenomenon. In the horrendous pogrom of Kishinev, Moldova, 100 years ago, you had these stories of blood libel—a child disappeared, typical stuff. And then what did the Christian inhabitants do? They raped the [Jewish] women, they pillaged the wine stores, they stole everything they could. They clearly wanted to get that stuff, and they made up something to justify it.

Where do skeptics like climate-change deniers and anti-vaxxers fit into the picture?

Most people in most countries accept that vaccination is good and that climate change is real and man-made. These ideas are deeply counter-intuitive, so the fact that scientists were able to get them across is quite fascinating. But the environment in which we live is vastly different from the one in which we evolved. There's a lot more information, which makes it harder to figure out who we can trust. The main effect is that we don't trust enough; we don't accept enough information. We also rely on shortcuts and heuristics—coarse cues of trustworthiness. There are people who abuse these cues. They may have a PhD or an MD, and they use those credentials to help them spread messages that are not true and not good. Mostly, they're affirming what people want to believe, but they may also be changing minds at the margins.

How can we improve people's ability to resist that kind of exploitation?

I wish I could tell you! That's literally my next project. Generally speaking, though, my advice is very vanilla. The mainstream media is extremely reliable. The scientific consensus is extremely reliable. If you trust those sources, you'll go wrong in a very few cases, but on the whole, they'll probably give you good results. Yet a lot of the problems that we attribute to people being stupid and irrational are not entirely their fault. If governments were less corrupt, if the pharmaceutical companies were irreproachable, these problems might not go away—but they would certainly be minimized.

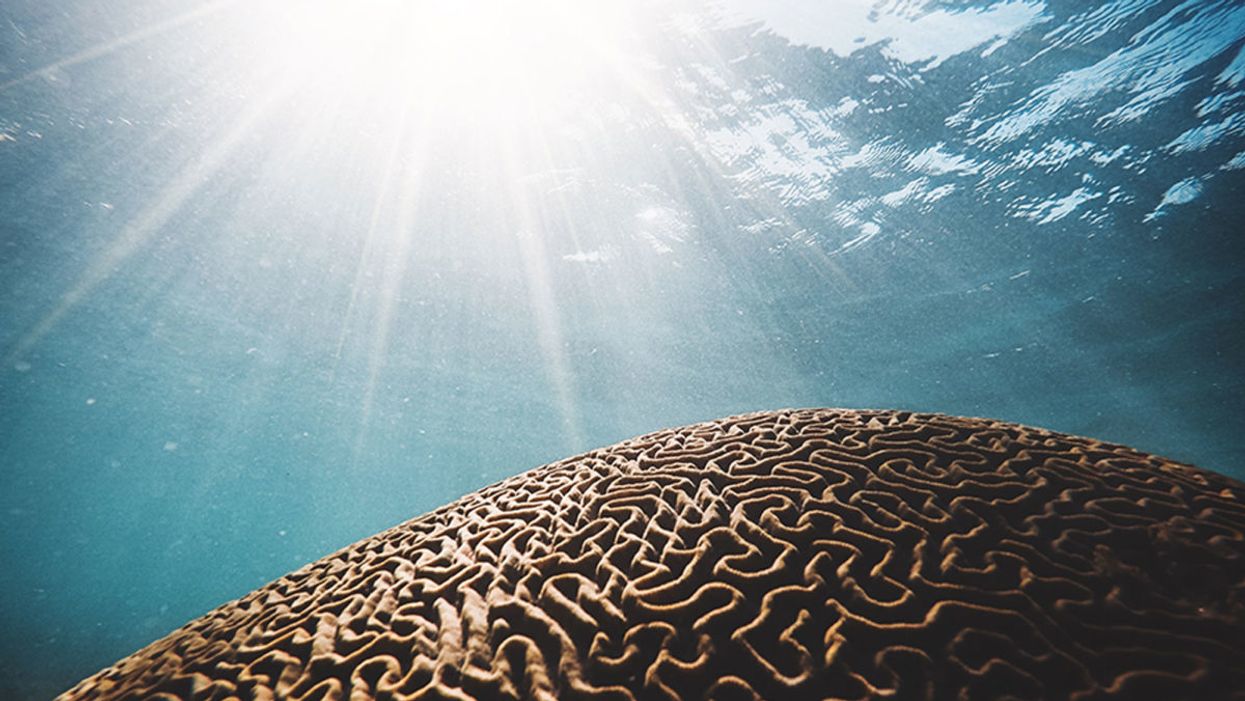

Biologists are Growing Mini-Brains. What If They Become Conscious?

Brain coral metaphorically represents the concept of growing organoids in a dish that may eventually attain consciousness, if scientists can first determine what that means.

Few images are more uncanny than that of a brain without a body, fully sentient but afloat in sterile isolation. Such specters have spooked the speculatively minded since the seventeenth century, when René Descartes declared, "I think, therefore I am."

In Meditations on First Philosophy (1641), the French penseur spins a chilling thought experiment: he imagines "having no hands or eyes, or flesh, or blood or senses," but being tricked by a demon into believing he has all these things, and a world to go with them. A disembodied brain itself becomes a demon in the classic young-adult novel A Wrinkle in Time (1962), using mind control to subjugate a planet called Camazotz. In the sci-fi blockbuster The Matrix (1999), most of humanity endures something like Descartes' nightmare, kept in womblike pods by their computer overlords, who fill the captives' brains with a synthesized reality while tapping their metabolic energy as a power source.

Since August 29, 2019, however, the prospect of a bodiless but functional brain has begun to seem far less fantastical. On that date, researchers at the University of California, San Diego published a study in the journal Cell Stem Cell, reporting the detection of brainwaves in cerebral organoids—pea-size "mini-brains" grown in the lab. Such organoids had emitted random electrical impulses in the past, but not these complex, synchronized oscillations. "There are some of my colleagues who say, 'No, these things will never be conscious,'" lead researcher Alysson Muotri, a Brazilian-born biologist, told The New York Times. "Now I'm not so sure."

Alysson Muotri has no qualms about his creations attaining consciousness as a side effect of advancing medical breakthroughs.

(Credit: ZELMAN STUDIOS)

Muotri's findings—and his avowed ambition to push them further—brought new urgency to simmering concerns over the implications of brain organoid research. "The closer we come to his goal," said Christof Koch, chief scientist and president of the Allen Brain Institute in Seattle, "the more likely we will get a brain that is capable of sentience and feeling pain, agony, and distress." At the annual meeting of the Society for Neuroscience, researchers from the Green Neuroscience Laboratory in San Diego called for a partial moratorium, warning that the field was "perilously close to crossing this ethical Rubicon and may have already done so."

Yet experts are far from a consensus on whether brain organoids can become conscious, whether that development would necessarily be dreadful—or even how to tell if it has occurred.

So how worried do we need to be?

***

An organoid is a miniaturized, simplified version of an organ, cultured from various types of stem cells. Scientists first learned to make them in the 1980s, and have since turned out mini-hearts, lungs, kidneys, intestines, thyroids, and retinas, among other wonders. These creations can be used for everything from observation of basic biological processes to testing the effects of gene variants, pathogens, or medications. They enable researchers to run experiments that might be less accurate using animal models and unethical or impractical using actual humans. And because organoids are three-dimensional, they can yield insights into structural, developmental, and other matters that an ordinary cell culture could never provide.

In 2006, Japanese biologist Shinya Yamanaka developed a mix of proteins that turned skin cells into "pluripotent" stem cells, which could subsequently be transformed into neurons, muscle cells, or blood cells. (He later won a Nobel Prize for his efforts.) Developmental biologist Madeline Lancaster, then a post-doctoral student at the Institute of Molecular Biotechnology in Vienna, adapted that technique to grow the first brain organoids in 2013. Other researchers soon followed suit, cultivating specialized mini-brains to study disorders ranging from microcephaly to schizophrenia.

Muotri, now a youthful 45-year-old, was among the boldest of these pioneers. His team revealed the process by which Zika virus causes brain damage, and showed that sofosbuvir, a drug previously approved for hepatitis C, protected organoids from infection. He persuaded NASA to fly his organoids to the International Space Station, where they're being used to trace the impact of microgravity on neurodevelopment. He grew brain organoids using cells implanted with Neanderthal genes, and found that their wiring differed from organoids with modern DNA.

Like the latter experiment, Muotri's brainwave breakthrough emerged from a longtime obsession with neuroarchaeology. "I wanted to figure out how the human brain became unique," he told me in a phone interview. "Compared to other species, we are very social. So I looked for conditions where the social brain doesn't function well, and that led me to autism." He began investigating how gene variants associated with severe forms of the disorder affected neural networks in brain organoids.

Tinkering with chemical cocktails, Muotri and his colleagues were able to keep their organoids alive far longer than earlier versions, and to culture more diverse types of brain cells. One team member, Priscilla Negraes, devised a way to measure the mini-brains' electrical activity, by planting them in a tray lined with electrodes. By four months, the researchers found to their astonishment, normal organoids (but not those with an autism gene) emitted bursts of synchronized firing, separated by 20-second silences. At nine months, the organoids were producing up to 300,000 spikes per minute, across a range of frequencies.

When the team used an artificial intelligence system to compare these patterns with EEGs of gestating fetuses, the program found them to be nearly identical at each stage of development. As many scientists noted when the news broke, that didn't mean the organoids were conscious. (Their chaotic bursts bore little resemblance to the orderly rhythms of waking adult brains.) But to some observers, it suggested that they might be approaching the borderline.

***

Shortly after Muotri's team published their findings, I attended a conference at UCSD on the ethical questions they raised. The scientist, in jeans and a sky-blue shirt, spoke rhapsodically of brain organoids' potential to solve scientific mysteries and lead to new medical treatments. He showed video of a spider-like robot connected to an organoid through a computer interface. The machine responded to different brainwave patterns by walking or stopping—the first stage, Muotri hoped, in teaching organoids to communicate with the outside world. He described his plans to develop organoids with multiple brain regions, and to hook them up to retinal organoids so they could "see." He shared his vision for "brain farms," which would grow organoids en masse for drug development or tissue transplants.

Muotri holds a spider-like robot that can connect to an organoid through a computer interface.

(Credit: ROLAND LIZARONDO/KPBS)

Yet Muotri also stressed the current limitations of the technology. His organoids contain approximately 2 million neurons, compared to about 200 million in a rat's brain and 86 billion in an adult human's. They consist only of a cerebral cortex, and lack many of a real brain's cell types. Because researchers haven't yet found a way to give organoids blood vessels, moreover, nutrients can't penetrate their inner recesses—a severe constraint on their growth.

Another panelist strongly downplayed the imminence of any Rubicon. Patricia Churchland, an eminent philosopher of neuroscience, cited research suggesting that in mammals, networked connections between the cortex and the thalamus are a minimum requirement for consciousness. "It may be a blessing that you don't have the enabling conditions," she said, "because then you don't have the ethical issues."

Christof Koch, for his part, sounded much less apprehensive than the Times had made him seem. He noted that science lacks a definition of consciousness, beyond an organism's sense of its own existence—"the fact that it feels like something to be you or me." As to the competing notions of how the phenomenon arises, he explained, he prefers one known as Integrated Information Theory, developed by neuroscientist Giulio Tononi. IIT considers consciousness to be a quality intrinsic to systems that reach a certain level of complexity, integration, and causal power (the ability for present actions to determine future states). By that standard, Koch doubted that brain organoids had stepped over the threshold.

One way to tell, he said, might be to use the "zap and zip" test invented by Tononi and his colleague Marcello Massimini in the early 2000s to determine whether patients are conscious in the medical sense. This technique zaps the brain with a pulse of magnetic energy, using a coil held to the scalp. As loops of neural impulses cascade through the cerebral circuitry, an EEG records the firing patterns. In a waking brain, the feedback is highly complex—neither totally predictable nor totally random. In other states, such as sleep, coma, or anesthesia, the rhythms are simpler. Applying an algorithm commonly used for computer "zip" files, the researchers devised a scale that allowed them to correctly diagnose most patients who were minimally conscious or in a vegetative state.
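The "zip" half of the idea can be illustrated with a toy sketch: a compression routine shrinks a simple, repetitive rhythm to almost nothing, while a pattern with no structure resists compression. The snippet below is only an illustration under stated assumptions. It uses Python's standard zlib module on made-up 0/1 sequences; the function name `compressed_ratio` and both test signals are hypothetical, and the clinical measure works on recorded brain responses with additional processing steps not shown here.

```python
import random
import zlib


def compressed_ratio(bits: list[int]) -> float:
    """Return compressed size divided by raw size for a 0/1 sequence."""
    raw = bytes(bits)  # one byte per sample, each byte is 0 or 1
    return len(zlib.compress(raw, 9)) / len(raw)


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 4096

# Hypothetical stand-ins for firing patterns, not real recordings.
periodic = [(i // 8) % 2 for i in range(n)]    # simple, repetitive rhythm
noisy = [rng.randint(0, 1) for _ in range(n)]  # no structure at all

ratio_periodic = compressed_ratio(periodic)
ratio_noisy = compressed_ratio(noisy)

print(f"periodic rhythm compresses to {ratio_periodic:.3f} of its size")
print(f"random pattern compresses to {ratio_noisy:.3f} of its size")
```

A lower ratio means the pattern is more predictable. Compressibility alone would rate pure noise as the most complex signal, which is why this sketch captures only one ingredient of the approach described above.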

If scientists could find a way to apply "zap and zip" to brain organoids, Koch ventured, it should be possible to rank their degree of awareness on a similar scale. And if it turned out that an organoid was conscious, he added, our ethical calculations should strive to minimize suffering, and avoid it where possible—just as we now do, or ought to, with animal subjects. (Muotri, I later learned, was already contemplating sensors that would signal when organoids were likely in distress.)

During the question-and-answer period, an audience member pressed Churchland about how her views might change if the "enabling conditions" for consciousness in brain organoids were to arise. "My feeling is, we'll answer that when we get there," she said. "That's an unsatisfying answer, but it's because I don't know. Maybe they're totally happy hanging out in a dish! Maybe that's the way to be."

***

Muotri himself admits to no qualms about his creations attaining consciousness, whether sooner or later. "I think we should try to replicate the model as close as possible to the human brain," he told me after the conference. "And if that involves having a human consciousness, we should go in that direction." Still, he said, if strong evidence of sentience does arise, "we should pause and discuss among ourselves what to do."

Churchland figures it will be at least a decade before anyone reaches the crossroads. "That's partly because the thalamus has a very complex architecture," she said. It might be possible to mimic that architecture in the lab, she added, "but I tend to think it's not going to be a piece of cake."

If anything worries Churchland about brain organoids, in fact, it's that Muotri's visionary claims for their potential could set off a backlash among those who find them unacceptably spooky. "Alysson has done brilliant work, and he's wonderfully charismatic and charming," she said. "But then there's that guy back there who doesn't think it's exciting; he thinks you're the Devil incarnate. You're playing into the hands of people who are going to shut you down."

Koch, however, is more willing to indulge Muotri's dreams. "Ten years ago," he said, "nobody would have believed you can take a stem cell and get an entire retina out of it. It's absolutely frigging amazing. So who am I to say the same thing can't be true for the thalamus or the cortex? The field is moving so rapidly, you blink your eyes and another advance has occurred."

The point, he went on, is not to build a Cartesian thought experiment—or a Matrix-style dystopia—but to vanquish some of humankind's most terrifying foes. "You know, my dad passed away of Parkinson's. I had a twin daughter; she passed away of sudden death syndrome. One of my best friends killed herself; she was schizophrenic. We want to eliminate all these terrible things, and that requires experimentation. We just have to go into it with open eyes."