What’s the Right Way to Regulate Gene-Edited Crops?

A cornfield in summer.

In the next few decades, humanity faces its biggest food crisis since the invention of the plow. The planet's population, currently 7.6 billion, is expected to reach 10 billion by 2050; to avoid mass famine, according to the World Resources Institute, we'll need to produce 70 percent more calories than we do today.

Meanwhile, climate change will bring intensifying assaults by heat, drought, storms, pests, and weeds, depressing farm yields around the globe. Epidemics of plant disease—already laying waste to wheat, citrus, bananas, coffee, and cacao in many regions—will spread ever further through the vectors of modern trade and transportation.

So here's a thought experiment: Imagine that a cheap, easy-to-use, and rapidly deployable technology could make crops more fertile and strengthen their resistance to these looming threats. Imagine that it could also render them more nutritious and tastier, with longer shelf lives and less vulnerability to damage in shipping—adding enhancements to human health and enjoyment, as well as reduced food waste, to the possible benefits.

Finally, imagine that crops bred with the aid of this tool might carry dangers. Some could contain unsuspected allergens or toxins. Others might disrupt ecosystems, affecting the behavior or very survival of other species, or infecting wild relatives with their altered DNA.

Now ask yourself: If such a technology existed, should policymakers encourage its adoption, or ban it due to the risks? And if you chose the former alternative, how should crops developed by this method be regulated?

In fact, this technology does exist, though its use remains mostly experimental. It's called gene editing, and in the past five years it has emerged as a potentially revolutionary force in many areas—among them, treating cancer and genetic disorders; growing transplantable human organs in pigs; controlling malaria-spreading mosquitoes; and, yes, transforming agriculture. Several versions are currently available, the newest and nimblest of which goes by the acronym CRISPR.

Gene editing is far simpler and more efficient than older methods used to produce genetically modified organisms (GMOs). Unlike those methods, moreover, it can be used in ways that leave no foreign genes in the target organism—an advantage that proponents argue should comfort anyone leery of consuming so-called "Frankenfoods." But debate persists over what precautions must be taken before these crops come to market.

Recently, two of the world's most powerful regulatory bodies offered very different answers to that question. The United States Department of Agriculture (USDA) declared in March 2018 that it "does not currently regulate, or have any plans to regulate" plants that are developed through most existing methods of gene editing. The Court of Justice of the European Union (ECJ), by contrast, ruled in July that such crops should be governed by the same stringent regulations as conventional GMOs.

Each announcement drew protests, for opposite reasons. Anti-GMO activists assailed the USDA's statement, arguing that all gene-edited crops should be tested and approved before marketing. "You don't know what those mutations or rearrangements might do in a plant," warned Michael Hansen, a senior scientist with the advocacy group Consumers Union. Biotech boosters griped that the ECJ's decision would stifle innovation and investment. "By any sensible standard, this judgment is illogical and absurd," wrote the British newspaper The Observer.

Yet some experts suggest that the broadly permissive American approach and the broadly restrictive EU policy are equally flawed. "What's behind these regulatory decisions is not science," says Jennifer Kuzma, co-director of the Genetic Engineering and Society Center at North Carolina State University, a former advisor to the World Economic Forum, who has researched and written extensively on governance issues in biotechnology. "It's politics, economics, and culture."

The U.S. Welcomes Gene-Edited Food

Humans have been modifying the genomes of plants and animals for 10,000 years, using selective breeding—a hit-or-miss method that can take decades or more to deliver rewards. In the mid-20th century, we learned to speed up the process by exposing organisms to radiation or mutagenic chemicals. But it wasn't until the 1980s that scientists began modifying plants by altering specific stretches of their DNA.

Today, about 90 percent of the corn, cotton and soybeans planted in the U.S. are GMOs; such crops cover nearly 4 million square miles (10 million square kilometers) of land in 29 countries. Most of these plants are transgenic, meaning they contain genes from an unrelated species—often as biologically alien as a virus or a fish. Their modifications are designed primarily to boost profit margins for mechanized agribusiness: allowing crops to withstand herbicides so that weeds can be controlled by mass spraying, for example, or to produce their own pesticides to lessen the need for chemical inputs.

In the early days, the majority of GM crops were created by extracting the gene for a desired trait from a donor organism, multiplying it, and attaching it to other snippets of DNA—usually from a microbe called an agrobacterium—that could help it infiltrate the cells of the target plant. Biotechnologists injected these particles into the target, hoping at least one would land in a place where it would perform its intended function; if not, they kept trying. The process was quicker than conventional breeding, but still complex, scattershot, and costly.

Because agrobacteria can cause plant tumors, Kuzma explains, policymakers in the U.S. decided to regulate GMO crops under an existing law, the Plant Pest Act of 1957, which addressed dangers like imported trees infested with invasive bugs. Every GMO containing the DNA of agrobacterium or another plant pest had to be tested to see whether it behaved like a pest, and undergo a lengthy approval process. By 2010, however, new methods had been developed for creating GMOs without agrobacteria; such plants could typically be marketed without pre-approval.

Soon after that, the first gene-edited crops began appearing. If old-school genetic engineering was a shotgun, techniques like TALEN and CRISPR were a scalpel—or the search-and-replace function on a computer program. With CRISPR/Cas9, for example, an enzyme that bacteria use to recognize and chop up hostile viruses is reprogrammed to find and snip out a desired bit of a plant or other organism's DNA. The enzyme can also be used to insert a substitute gene. If a DNA sequence is simply removed, or the new gene comes from a similar species, the changes in the target plant's genotype and phenotype (its general characteristics) may be no different from those that could be produced through selective breeding. If a foreign gene is added, the plant becomes a transgenic GMO.
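The search-and-replace analogy can be made concrete with a toy sketch in Python. It illustrates only the analogy: real Cas9 targeting depends on a guide RNA and on the cell's own DNA repair machinery, none of which is modeled here. The function name `edit_sequence` and the DNA strings are invented for illustration.

```python
def edit_sequence(genome: str, target: str, replacement: str = "") -> str:
    """Find the first occurrence of `target` and cut it out or swap it.

    An empty `replacement` mimics a simple deletion; a non-empty one
    mimics inserting a substitute sequence at the cut site.
    """
    position = genome.find(target)
    if position == -1:
        # No matching site, so nothing is cut and the genome is unchanged.
        return genome
    return genome[:position] + replacement + genome[position + len(target):]


# Hypothetical sequences, invented for illustration only.
genome = "ATGCCGTTAGGCTAACGT"
knocked_out = edit_sequence(genome, "TTAGGC")        # deletion
swapped = edit_sequence(genome, "TTAGGC", "GGATCC")  # substitution

print(knocked_out)  # ATGCCGTAACGT
print(swapped)      # ATGCCGGGATCCTAACGT
```

In this toy picture, the deletion case stands in for an edit that adds nothing foreign, while the substitution case stands in for swapping in a new gene. Whether the result counts as transgenic depends on where the inserted sequence comes from.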

This development, along with the emergence of non-agrobacterium GMOs, eventually prompted the USDA to propose a tiered regulatory system for all genetically engineered crops, beginning with an initial screening for potentially hazardous metabolites or ecological impacts. (The screening was intended, in part, to guard against the "off-target effects," or stray mutations, that occasionally appear in gene-edited organisms.) If no red flags appeared, the crop would be approved; otherwise, it would be subject to further review, and possible regulation.

The plan was unveiled in January 2017, during the last week of the Obama presidency. Then, under the Trump administration, it was shelved. Although the USDA continues to promise a new set of regulations, the only hint of what they might contain has been Secretary of Agriculture Sonny Perdue's statement last March that gene-edited plants would remain unregulated if they "could otherwise have been developed through traditional breeding techniques, as long as they are not plant pests or developed using plant pests."

Because transgenic plants could not be "developed through traditional breeding techniques," this statement could be taken to mean that gene editing in which foreign DNA is introduced might actually be regulated. But because the USDA regulates conventional transgenic GMOs only if they trigger the plant-pest stipulation, experts assume gene-edited crops will face similarly limited oversight.

Meanwhile, companies are already teeing up gene-edited products for the U.S. market. An herbicide-resistant oilseed rape, developed using a proprietary technique, has been available since 2016. A cooking oil made from TALEN-tweaked soybeans, designed to have a healthier fatty-acid profile, is slated for release within the next few months. A CRISPR-edited "waxy" corn, designed with a starch profile ideal for processed foods, should be ready by 2021.

In all likelihood, none of these products will have to be tested for safety.

In the E.U., Stricter Rules Apply

Now let's look at the European Union. Since the late 1990s, explains Gregory Jaffe, director of the Project on Biotechnology at the Center for Science in the Public Interest, the EU has had a "process-based trigger" for genetically engineered products: "If you use recombinant DNA, you are going to be regulated." All foods and animal feeds must be approved and labeled if they consist of or contain more than 0.9 percent GM ingredients. (In the U.S., "disclosure" of GM ingredients is mandatory, if someone asks, but labeling is not required.) The only GM crop that can be commercially grown in EU member nations is a type of insect-resistant corn, though some countries allow imports.

European scientists helped develop gene editing, and they—along with the continent's biotech entrepreneurs—have been busy developing applications for crops. But European farmers seem more divided over the technology than their American counterparts. The main French agricultural trades union, for example, supports research into non-transgenic gene editing and its exemption from GMO regulation. But it was the country's small-farmers' union, the Confédération Paysanne, along with several allied groups, that in 2015 submitted a complaint to the ECJ, asking that all plants produced via mutagenesis—including gene-editing—be regulated as GMOs.

At this point, it should be mentioned that in the past 30 years, large population studies have found no sign that consuming GM foods is harmful to human health. GMO critics can, however, point to evidence that herbicide-resistant crops have encouraged overuse of herbicides, giving rise to poison-proof "superweeds," polluting the environment with suspected carcinogens, and inadvertently killing beneficial plants. Those allegations were key to the French plaintiffs' argument that gene-edited crops might similarly do unexpected harm. (Disclosure: Leapsmag's parent company, Bayer, recently acquired Monsanto, a maker of herbicides and herbicide-resistant seeds. Also, Leaps by Bayer, an innovation initiative of Bayer and Leapsmag's direct founder, has funded a biotech startup called JoynBio that aims to reduce the amount of nitrogen fertilizer required to grow crops.)

In the end, the EU court found in the Confédération's favor on gene editing, though the court maintained the regulatory exemption for mutagenesis induced by chemicals or radiation, citing the "long safety record" of those methods.

The ruling was "scientifically nonsensical," fumes Rodolphe Barrangou, a French food scientist who pioneered CRISPR while working for DuPont in Wisconsin and is now a professor at NC State. "It's because of things like this that I'll never go back to Europe."

Nonetheless, the decision was consistent with longstanding EU policy on crops made with recombinant DNA. Given the difficulty and expense of getting such products through the continent's regulatory system, many other European researchers may wind up following Barrangou to America.

Getting to the Root of the Cultural Divide

What explains the divergence between the American and European approaches to GMOs and, by extension, gene-edited crops? In part, Jennifer Kuzma speculates, it's that Europeans have a different attitude toward eating. "They're generally more tied to where their food comes from, where it's produced," she notes. They may also share a mistrust of government assurances on food safety, born of the region's Mad Cow scandals of the 1980s and '90s. In Catholic countries, consumers may have misgivings about tinkering with the machinery of life.

But the principal factor, Kuzma argues, is that European and American agriculture are structured differently. "GM's benefits have mostly been designed for large-scale industrial farming and commodity crops," she says. That kind of farming is dominant in the U.S., but not in Europe, leading to a different balance of political power. In the EU, there was less pressure on decisionmakers to approve GMOs or exempt gene-edited crops from regulation—and more pressure to adopt a GM-resistant stance.

Such dynamics may be operating in other regions as well. In China, for example, the government has long encouraged research in GMOs; a state-owned company recently acquired Syngenta, a Swiss-based multinational corporation that is a leading developer of GM and gene-edited crops. GM animal feed and cooking oil can be freely imported. Yet commercial cultivation of most GM plants remains forbidden, out of deference to popular suspicions of genetically altered food. "As a new item, society has debates and doubts on GMO techniques, which is normal," President Xi Jinping remarked in 2014. "We must be bold in studying it, [but] be cautious promoting it."

The proper balance between boldness and caution is still being worked out all over the world. Europe's process-based approach may prevent researchers from developing crops that, with a single DNA snip, could rescue millions from starvation. EU regulations will also make it harder for small entrepreneurs to challenge Big Ag with a technology that, as Barrangou puts it, "can be used affordably, quickly, scalably, by anyone, without even a graduate degree in genetics." America's product-based approach, conversely, may let crops with hidden genetic dangers escape detection. And by refusing to investigate such risks, regulators may wind up exacerbating consumers' doubts about GM and gene-edited products, rather than allaying them.

Perhaps the solution lies in combining both approaches, and adding some flexibility and nuance to the mix. "I don't believe in regulation by the product or the process," says CSPI's Jaffe. "I think you need both." Deleting a DNA base pair to silence a gene, for example, might be less risky than inserting a foreign gene into a plant—unless the deletion enables the production of an allergen, and the transgene comes from spinach.

Kuzma calls for the creation of "cooperative governance networks" to oversee crop genome editing, similar to bodies that already help develop and enforce industry standards in fisheries, electronics, industrial cleaning products, and (not incidentally) organic agriculture. Such a network could include farmers, scientists, advocacy groups, private companies, and governmental agencies. "Safety isn't an all-or-nothing concept," Kuzma says. "Science can tell you what some of the issues are in terms of risk and benefit, but it can't tell you what to regulate. That's a values-based decision."

By drawing together a wide range of stakeholders to make such decisions, she adds, "we're more likely to anticipate future consequences, and to develop a robust approach—one that not only seems more legitimate to people, but is actually just plain old better."

Researchers are looking to engineer chocolate with less oil, which could reduce some of its detriments to health.

Creamy milk with velvety texture. Dark with sprinkles of sea salt. Crunchy hazelnut-studded chunks. Chocolate is a treat that appeals to billions of people worldwide, no matter the age. And it’s not only the taste, but the feel of a chocolate morsel slowly melting in our mouths—the smoothness and slipperiness—that’s part of the overwhelming satisfaction. Why is it so enjoyable?

That’s what an interdisciplinary research team of chocolate lovers from the University of Leeds School of Food Science and Nutrition and School of Mechanical Engineering in the U.K. resolved to study in 2021. They wanted to know, “What is making chocolate that desirable?” says Siavash Soltanahmadi, one of the lead authors of a new study about chocolate’s hedonistic quality.

Besides addressing the researchers’ general curiosity, their answers might help chocolate manufacturers make the delicacy even more enjoyable and potentially healthier. After all, chocolate is a billion-dollar industry. Revenue from chocolate sales, whether milk or dark, is forecasted to grow 13 percent by 2027 in the U.K. In the U.S., chocolate and candy sales increased by 11 percent from 2020 to 2021, on track to reach $44.9 billion by 2026. Figuring out how chocolate affects the human palate could up the ante even more.

Building a 3D tongue

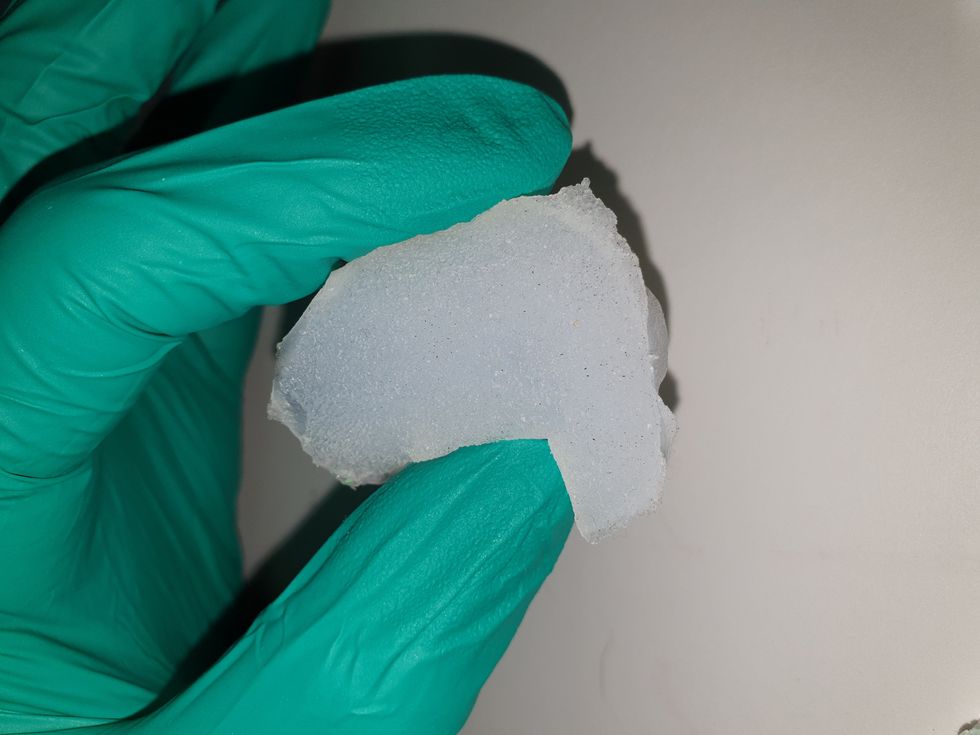

The team began by building a 3D tongue to analyze the physical process by which chocolate breaks down inside the mouth.

As part of the effort, reported earlier this year in the scientific journal ACS Applied Materials and Interfaces, the team studied a large variety of human tongues with the aim of building an “average” 3D model, says Soltanahmadi, a lubrication scientist. When it comes to edible substances, lubrication science looks at how food feels in the mouth and can help design foods that taste better and have a more satisfying texture or health benefits.

Tongue impressions from human participants, studied using optical imaging, helped the team build a tongue with key characteristics. “Our tongue is not a smooth muscle, it’s got some texture, it has got some roughness,” Soltanahmadi says. From those images, the team came up with a digital design of an average tongue and, using 3D printed molds, built a “mimic tongue.” They also added elastomers, such as silicone or polyurethane, to mimic the roughness, the texture and the mechanical properties of a real tongue. “Wettability” was another key component of the 3D tongue, Soltanahmadi says, referring to whether a surface mixes with water (hydrophilic) or, in the case of oil, resists it (hydrophobic).

Notably, the resulting artificial 3D-tongues looked nothing like the human version, but they were good mimics. The scientists also created “testing kits” that produced data on various physical parameters. One such parameter was viscosity, the measure of how gooey a food or liquid is — honey is more viscous compared to water, for example. Another was tribology, which defines how slippery something is — high fat yogurt is more slippery than low fat yogurt; milk can be more slippery than water. The researchers then mixed chocolate with artificial saliva and spread it on the 3D tongue to measure the tribology and the viscosity. From there they were able to study what happens inside the mouth when we eat chocolate.

The team focused on the stages of lubrication and the location of the fat in the chocolate, a process that has rarely been researched.

The artificial 3D-tongues look nothing like human tongues, but they function well enough to do the job.

Courtesy Anwesha Sarkar and University of Leeds

The oral processing of chocolate

We process food in our mouths in several stages, Soltanahmadi says. And there is variation in these stages depending on the type of food. So, the oral processing of a piece of meat would be different from, say, the processing of jelly or popcorn.

There are variations with chocolate, in particular; some people chew it while others use their tongues to explore it (within their mouths), Soltanahmadi explains. “Usually, from a consumer perspective, what we find is that if you have a luxury kind of a chocolate, then people tend to start with licking the chocolate rather than chewing it.” The researchers used a luxury brand of dark chocolate and focused on the process of licking rather than chewing.

Understanding the make-up of the chocolate was also an important step in the study. “Chocolate is a composite material. So, it has cocoa butter, which is oil, it has some particles in it, which is cocoa solid, and it has sugars,” Soltanahmadi says. “Dark chocolate has less oil, for example, and less sugar in it, most of the time.”

The researchers determined that the oral processing of chocolate begins as soon as it enters a person’s mouth; it starts melting upon exposure to one’s body temperature, even before the tongue starts moving, Soltanahmadi says. Then, lubrication begins. “[Saliva] mixes with the oily chocolate and it makes an emulsion.” An emulsion is a fluid with a watery (or aqueous) phase and an oily phase. As chocolate breaks down in the mouth, that solid piece turns into a smooth emulsion with a fatty film. “The oil from the chocolate becomes droplets in a continuous aqueous phase,” says Soltanahmadi. In other words, as solid cocoa particles and fat are released, the emulsion envelops the tongue and coats the palate, creating a smooth feeling of chocolate all over the mouth. That tactile sensation is part of the chocolate’s hedonistic appeal we crave, says Soltanahmadi.

Finding the sweet spot

After determining how chocolate is orally processed, the research team wanted to find the exact sweet spot of the breakdown of solid cocoa particles and fat as they are released into the mouth. They determined that the epicurean pleasure comes only from the chocolate's outer layer of fat; the secondary fatty layers inside the chocolate don’t add to the sensation. It was this final discovery that helped the team determine that it might be possible to produce healthier chocolate that would contain less oil and, therefore, less fat, says Soltanahmadi.

Rongjia Tao, a physicist at Temple University in Philadelphia, thinks the Leeds study and the concept behind it are “very interesting.” Tao himself did a study in 2016 and found he could reduce fat in milk chocolate by 20 percent. He believes that the Leeds researchers’ discovery that the first layer of fat is more important for taste than the other layers can inform future chocolate manufacturing. “As a scientist I consider this significant and an important starting point,” he says.

Not everyone thinks it’s a good idea. Among the skeptics is chef Michael Antonorsi, founder and owner of Chuao Chocolatier, one of the leading chocolate makers in the U.S. First, he says, “cacao fat is definitely a good fat.” Second, he’s not thrilled that science is trying to interfere with nature. “Every time we've tried to intervene and change nature, we get things out of balance,” says Antonorsi. “There’s a reason cacao is botanically known as food of the gods. The botanical name is the Theobroma cacao: Theobroma in ancient Greek, Theo is God and broma is food. So it's a food of the gods,” Antonorsi explains. He’s doubtful that a chocolate made only with a top layer of fat will produce the same epicurean satisfaction. “You're not going to achieve the same sensation because that surface fat is going to dissipate and there is no fat from behind coming to take over,” he says.

Without layers of fat, Antonorsi fears the deeply satisfying experiential part of savoring chocolate will be lost. The University of Leeds team, however, thinks that it may be possible to make chocolate healthier, when consumed in limited amounts, without sacrificing its taste. They believe the concept of less fatty but no less slick chocolate will resonate with at least some chocolate-makers and consumers, too.

Chocolate already contains some healthful compounds. Its cocoa particles have “loads of health benefits,” says Soltanahmadi. Dark chocolate usually has more cocoa than milk chocolate. Some experts recommend that dark chocolate should contain at least 70 percent cocoa in order for it to offer some health benefit. Research has shown that the cocoa in chocolate is rich in polyphenols, naturally occurring compounds also found in fruits and vegetables, such as grapes, apples and berries. Research has shown that consuming plant polyphenols can be protective against cancer, diabetes and osteoporosis as well as cardiovascular and neurodegenerative diseases.

“So keeping the healthy part of it and reducing the oily part of it, which is not healthy, but is giving you that indulgence of it … that was the final aim,” Soltanahmadi says. He adds that the team has been approached by individuals in the chocolate industry about their research. “Everyone wants to have a healthy chocolate, which at the same time tastes brilliant and gives you that self-indulging experience.”

Probiotic bacteria can be engineered to fight antibiotic-resistant superbugs by releasing chemicals that kill them.

In 1945, almost two decades after Alexander Fleming discovered penicillin, he warned that as antibiotic use grows, the drugs may lose their effectiveness. He was prescient: the first case of penicillin resistance was reported two years later. Back then, not many people paid attention to Fleming’s warning. After all, the “golden era” of antibiotics had just begun. By the 1950s, three new antibiotics derived from soil bacteria (streptomycin, chloramphenicol, and tetracycline) could cure infectious diseases like tuberculosis, cholera, meningitis and typhoid fever, among others.

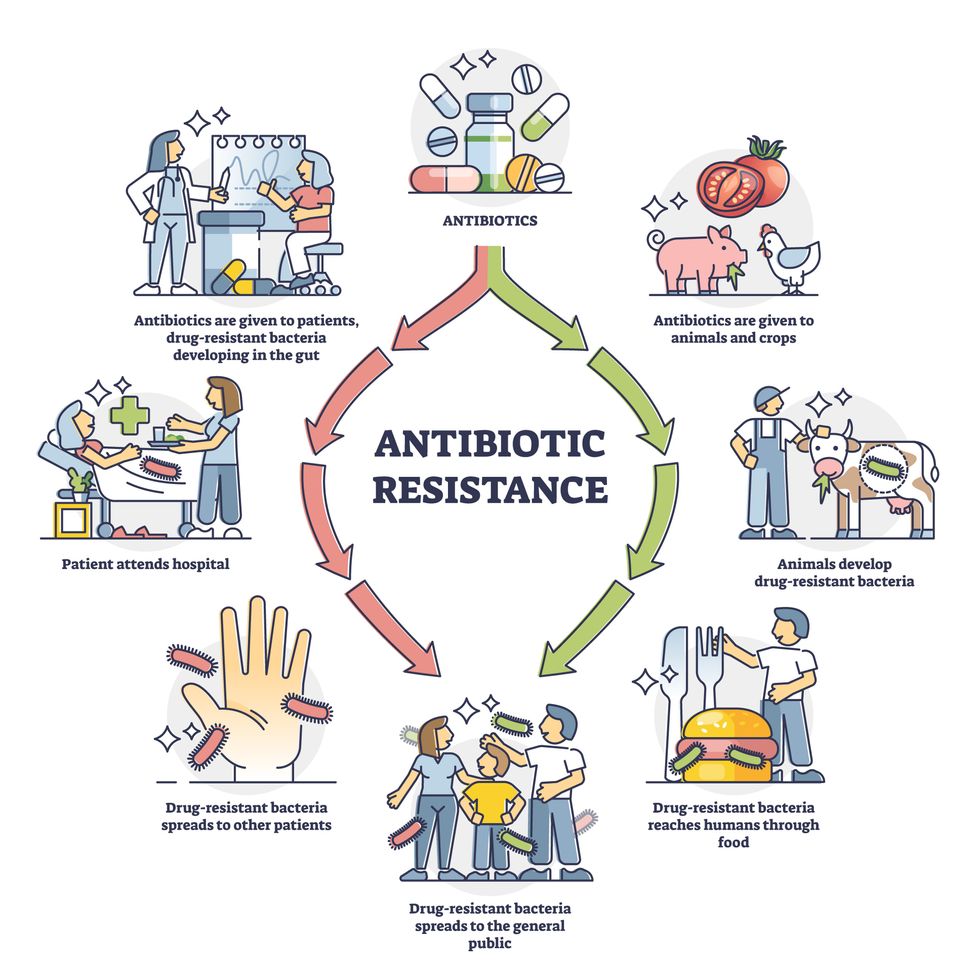

Today, these antibiotics and many of their successors developed through the 1980s are gradually losing their effectiveness. The extensive overuse and misuse of antibiotics has led to the rise of drug resistance. The livestock sector buys around 80 percent of all antibiotics sold in the U.S. every year. Farmers feed cows and chickens low doses of antibiotics to prevent infections and fatten up the animals, which eventually causes resistant bacterial strains to evolve. If manure from cattle is used on fields, the soil and vegetables can get contaminated with antibiotic-resistant bacteria. Another major factor is doctors overprescribing antibiotics to humans, particularly in low-income countries. Between 2000 and 2018, the global rates of human antibiotic consumption shot up by 46 percent.

In recent years, researchers have been exploring a promising avenue: the use of synthetic biology to engineer new bacteria that may work better than antibiotics. The need continues to grow, as a Lancet study linked antibiotic resistance to over 1.27 million deaths worldwide in 2019, surpassing HIV/AIDS and malaria. The western sub-Saharan Africa region had the highest death rate (27.3 people per 100,000).

To make matters worse, our remedy pipelines are drying up. Out of the 18 biggest pharmaceutical companies, 15 abandoned antibiotic development by 2013. According to the AMR Action Fund, venture capital has remained indifferent towards biotech start-ups developing new antibiotics. In 2019, at least two antibiotic start-ups filed for bankruptcy. As of December 2020, there were 43 new antibiotics in clinical development. But because they are based on previously known molecules, scientists say they are inadequate for treating multidrug-resistant bacteria. Researchers warn that if nothing changes, by 2050, antibiotic resistance could kill 10 million people annually.

The rise of synthetic biology

To avert this dire future, scientists have been working on alternative solutions using synthetic biology tools, which means genetically modifying good bacteria to fight the bad ones.

From the time life evolved on earth around 3.8 billion years ago, bacteria have engaged in biological warfare. They constantly strategize new methods to combat each other by synthesizing toxic proteins that kill competition.

For example, Escherichia coli produces bacteriocins, toxins that kill other strains of E. coli that attempt to colonize the same habitat. Microbes like E. coli (which are not all pathogenic) are also naturally present in the human microbiome. The human microbiome harbors up to 100 trillion symbiotic microbial cells. The majority of them are beneficial organisms residing in the gut at different compositions.

The chemicals that these “good bacteria” produce do not pose any health risks to us, but can be toxic to other bacteria, particularly to human pathogens. For the last three decades, scientists have been manipulating bacteria’s biological warfare tactics to our collective advantage.

In the late 1990s, researchers drew inspiration from electrical and computing engineering principles that involve constructing digital circuits to control devices. In certain ways, every cell in living organisms works like a tiny computer. The cell receives messages in the form of biochemical molecules that cling on to its surface. Those messages get processed within the cells through a series of complex molecular interactions.

Synthetic biologists can harness these living cells’ information processing skills and use them to construct genetic circuits that perform specific instructions, such as secreting a toxin that kills pathogenic bacteria. “Any synthetic genetic circuit is merely a piece of information that hangs around in the bacteria’s cytoplasm,” explains José Rubén Morones-Ramírez, a professor at the Autonomous University of Nuevo León, Mexico. Then the ribosome, which synthesizes proteins in the cell, processes that new information, making the compounds scientists want bacteria to make. “The genetic circuit remains separated from the living cell’s DNA,” Morones-Ramírez explains. When the engineered bacterium replicates, the genetic circuit doesn’t become part of its genome.

In 2000, Boston-based researchers constructed an E. coli with a genetic switch that toggled genes on and off. Later, they built some safety checks into their bacteria. “To prevent unintentional or deleterious consequences, in 2009, we built a safety switch in the engineered bacteria’s genetic circuit that gets triggered after it gets exposed to a pathogen,” says James Collins, a professor of biological engineering at MIT and faculty member at Harvard University’s Wyss Institute. “After getting rid of the pathogen, the engineered bacteria is designed to switch off and leave the patient's body.”
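A genetic switch of this kind is often pictured with a simple textbook model of two genes that each shut the other off. The Python sketch below is an illustration under stated assumptions, not the Boston team's actual model: the equations, the parameter values (`alpha`, `n`) and the function name `simulate_toggle` are chosen here purely for demonstration.

```python
def simulate_toggle(u, v, alpha=10.0, n=2.0, dt=0.01, steps=5000):
    """Step two mutually repressing genes forward in time (Euler method).

    u and v are the levels of the two gene products. Each is produced
    at a rate that falls as the other rises, and each decays over time:
        du/dt = alpha / (1 + v**n) - u
        dv/dt = alpha / (1 + u**n) - v
    All parameter values are illustrative assumptions.
    """
    for _ in range(steps):
        du = alpha / (1.0 + v ** n) - u
        dv = alpha / (1.0 + u ** n) - v
        u, v = u + dt * du, v + dt * dv
    return u, v


# Two nearly identical starting points settle into opposite states.
state_a = simulate_toggle(u=2.0, v=1.0)  # gene u starts slightly ahead
state_b = simulate_toggle(u=1.0, v=2.0)  # gene v starts slightly ahead
print(state_a)
print(state_b)
```

With these assumed values, whichever gene starts ahead ends up fully "on" while the other is held "off," and the circuit stays that way until something pushes it across. That memory-like behavior is what makes such a design useful as a switch.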

Seek and destroy

As the field of synthetic biology developed, scientists began using engineered bacteria to tackle superbugs. They first focused on Vibrio cholerae, which in the 19th and 20th centuries caused cholera pandemics in India, China, the Middle East, Europe, and the Americas. Like many other bacteria, V. cholerae cells communicate with each other via quorum sensing, a process in which the microorganisms release signaling molecules to convey messages to their brethren. Highly intelligent by bacterial standards, some multidrug-resistant V. cholerae strains can also “collaborate” with other intestinal bacterial species to gain advantage and take hold of the gut. When untreated, cholera has a mortality rate of 25 to 50 percent, and outbreaks frequently occur in developing countries, especially during floods and droughts.

Sometimes, however, V. cholerae makes mistakes. In 2008, researchers at Cornell University observed that when quorum-sensing V. cholerae accidentally released high concentrations of a signaling molecule called CAI-1, the effect was counterproductive: the pathogen couldn’t colonize the gut.

So the group, led by John March, professor of biological and environmental engineering, developed a novel strategy to combat V. cholerae. They genetically engineered E. coli to eavesdrop on V. cholerae communication networks and equipped it with the ability to release CAI-1 molecules. That interfered with V. cholerae’s progress. Two years later, the Cornell team showed that V. cholerae-infected mice treated with the engineered E. coli had a 92 percent survival rate.

In 2011, Singapore-based scientists engineered E. coli to detect and destroy Pseudomonas aeruginosa, an often drug-resistant pathogen that causes pneumonia, urinary tract infections, and sepsis. Once the genetically engineered E. coli found its target by sensing the pathogen’s quorum-sensing molecules, it released a peptide that could eradicate 99 percent of P. aeruginosa cells in a test-tube experiment. The team outlined their work in a Molecular Systems Biology study.

“At the time, we knew that we were entering new, uncharted territory,” says Matthew Chang, an associate professor and synthetic biologist at the National University of Singapore and lead author of the study. “To date, we are still in the process of trying to understand how long these microbes stay in our bodies and how they might continue to evolve.”

More teams followed the same path. In a 2013 study, MIT researchers also genetically engineered E. coli to detect P. aeruginosa via the pathogen’s quorum-sensing molecules. The engineered E. coli then destroyed the pathogen by secreting a lab-made toxin.

Probiotics that fight

A year later, in 2014, a Nature study found that an abundance of Ruminococcus obeum, a probiotic bacterium that occurs naturally in the human microbiome, interrupts and reduces V. cholerae’s colonization by detecting the pathogen’s quorum-sensing molecules. The natural accumulation of R. obeum in Bangladeshi adults helped them recover from cholera despite living in an area with frequent outbreaks.

The findings from 2008 to 2014 inspired Collins and his team to delve into how good bacteria present in foods like yogurt and kimchi can attack drug-resistant bacteria. In 2018, they developed an engineered probiotic strategy, tweaking Lactococcus lactis, a bacterium commonly found in yogurt, to treat cholera.

More scientists followed with more experiments. So far, researchers have engineered various probiotic organisms to fight pathogenic bacteria like Staphylococcus aureus (a leading cause of skin, tissue, bone, joint, and blood infections) and Clostridium perfringens (which causes watery diarrhea) in test-tube and animal experiments. In 2020, Russian scientists engineered a probiotic called Pichia pastoris to produce an enzyme called lysostaphin that eradicated S. aureus in vitro. Another 2020 study, from China, used an engineered probiotic bacterium, Lactobacillus casei, as a vaccine to prevent C. perfringens infection in rabbits.

In a study last year, Morones-Ramírez’s group at the Autonomous University of Nuevo León engineered E. coli to detect quorum-sensing molecules from methicillin-resistant Staphylococcus aureus, or MRSA, a notorious superbug. The E. coli then releases a bacteriocin that kills MRSA. “An antibiotic is just a molecule that is not intelligent,” says Morones-Ramírez. “On the other hand, engineered bacteria can be trained to target pathogens when they are at their most vulnerable metabolic stage in the human gut.”

Collins and Timothy Lu, an associate professor of biological engineering at MIT, found that engineered E. coli can help treat other conditions, such as phenylketonuria, a rare metabolic disorder that causes a buildup of the amino acid phenylalanine. Their start-up, Synlogic, aims to commercialize the technology and has completed a phase 2 clinical trial.

Circumventing the challenges

The bacteria-engineering technique is not without pitfalls. One major challenge is that beneficial gut bacteria produce their own quorum-sensing molecules, which can be similar to those that pathogens secrete. If an engineered bacterium’s biosensor is not specific enough to tell the two apart, it will be ineffective.

Another concern is whether engineered bacteria might mutate after entering the gut. “As with any technology, there are risks where bad actors could have the capability to engineer a microbe to act quite nastily,” says Collins of MIT. But Collins and Morones-Ramírez both insist that the chances of the engineered bacteria mutating on their own are virtually non-existent. “It is extremely unlikely for the engineered bacteria to mutate,” Morones-Ramírez says. “Coaxing a living cell to do anything on command is immensely challenging. Usually, the greater risk is that the engineered bacteria entirely lose its functionality.”

However, the biggest challenge is bringing the curative bacteria to consumers. Pharmaceutical companies aren’t interested in antibiotics or their alternatives because they’re less profitable than new medicines for non-infectious diseases. Unlike chronic conditions such as diabetes or cancer, which require long-term medication, infectious diseases are usually treated much more quickly. Running clinical trials is expensive, and antibiotic alternatives aren’t lucrative enough.

“Unfortunately, new medications for antibiotic-resistant infections have been pushed to the bottom of the field,” says Lu of MIT. “It’s not because the technology does not work. This is more of a market issue. Because clinical trials cost hundreds of millions of dollars, the only solution is that governments will need to fund them.” Lu stresses that societies must lobby to change how the modern healthcare industry works. “The whole world needs better treatments for antibiotic resistance.”