What’s the Right Way to Regulate Gene-Edited Crops?

A cornfield in summer.

In the next few decades, humanity faces its biggest food crisis since the invention of the plow. The planet's population, currently 7.6 billion, is expected to reach 10 billion by 2050; to avoid mass famine, according to the World Resources Institute, we'll need to produce 70 percent more calories than we do today.

Meanwhile, climate change will bring intensifying assaults by heat, drought, storms, pests, and weeds, depressing farm yields around the globe. Epidemics of plant disease—already laying waste to wheat, citrus, bananas, coffee, and cacao in many regions—will spread ever further through the vectors of modern trade and transportation.

So here's a thought experiment: Imagine that a cheap, easy-to-use, and rapidly deployable technology could make crops more fertile and strengthen their resistance to these looming threats. Imagine that it could also render them more nutritious and tastier, with longer shelf lives and less vulnerability to damage in shipping—adding enhancements to human health and enjoyment, as well as reduced food waste, to the possible benefits.

Finally, imagine that crops bred with the aid of this tool might carry dangers. Some could contain unsuspected allergens or toxins. Others might disrupt ecosystems, affecting the behavior or very survival of other species, or infecting wild relatives with their altered DNA.

Now ask yourself: If such a technology existed, should policymakers encourage its adoption, or ban it due to the risks? And if you chose the former alternative, how should crops developed by this method be regulated?

In fact, this technology does exist, though its use remains mostly experimental. It's called gene editing, and in the past five years it has emerged as a potentially revolutionary force in many areas—among them, treating cancer and genetic disorders; growing transplantable human organs in pigs; controlling malaria-spreading mosquitoes; and, yes, transforming agriculture. Several versions are currently available, the newest and nimblest of which goes by the acronym CRISPR.

Gene editing is far simpler and more efficient than older methods used to produce genetically modified organisms (GMOs). Unlike those methods, moreover, it can be used in ways that leave no foreign genes in the target organism—an advantage that proponents argue should comfort anyone leery of consuming so-called "Frankenfoods." But debate persists over what precautions must be taken before these crops come to market.

Recently, two of the world's most powerful regulatory bodies offered very different answers to that question. The United States Department of Agriculture (USDA) declared in March 2018 that it "does not currently regulate, or have any plans to regulate" plants that are developed through most existing methods of gene editing. The Court of Justice of the European Union (ECJ), by contrast, ruled in July that such crops should be governed by the same stringent regulations as conventional GMOs.

Each announcement drew protests, for opposite reasons. Anti-GMO activists assailed the USDA's statement, arguing that all gene-edited crops should be tested and approved before marketing. "You don't know what those mutations or rearrangements might do in a plant," warned Michael Hansen, a senior scientist with the advocacy group Consumers Union. Biotech boosters griped that the ECJ's decision would stifle innovation and investment. "By any sensible standard, this judgment is illogical and absurd," wrote the British newspaper The Observer.

Yet some experts suggest that the broadly permissive American approach and the broadly restrictive EU policy are equally flawed. "What's behind these regulatory decisions is not science," says Jennifer Kuzma, co-director of the Genetic Engineering and Society Center at North Carolina State University and a former advisor to the World Economic Forum, who has researched and written extensively on governance issues in biotechnology. "It's politics, economics, and culture."

The U.S. Welcomes Gene-Edited Food

Humans have been modifying the genomes of plants and animals for 10,000 years, using selective breeding—a hit-or-miss method that can take decades or more to deliver rewards. In the mid-20th century, we learned to speed up the process by exposing organisms to radiation or mutagenic chemicals. But it wasn't until the 1980s that scientists began modifying plants by altering specific stretches of their DNA.

Today, about 90 percent of the corn, cotton and soybeans planted in the U.S. are GMOs; such crops cover nearly 4 million square miles (10 million square kilometers) of land in 29 countries. Most of these plants are transgenic, meaning they contain genes from an unrelated species—often as biologically alien as a virus or a fish. Their modifications are designed primarily to boost profit margins for mechanized agribusiness: allowing crops to withstand herbicides so that weeds can be controlled by mass spraying, for example, or to produce their own pesticides to lessen the need for chemical inputs.

In the early days, the majority of GM crops were created by extracting the gene for a desired trait from a donor organism, multiplying it, and attaching it to other snippets of DNA—usually from a microbe called an agrobacterium—that could help it infiltrate the cells of the target plant. Biotechnologists injected these particles into the target, hoping at least one would land in a place where it would perform its intended function; if not, they kept trying. The process was quicker than conventional breeding, but still complex, scattershot, and costly.

Because agrobacteria can cause plant tumors, Kuzma explains, policymakers in the U.S. decided to regulate GMO crops under an existing law, the Plant Pest Act of 1957, which addressed dangers like imported trees infested with invasive bugs. Every GMO containing the DNA of agrobacterium or another plant pest had to be tested to see whether it behaved like a pest, and undergo a lengthy approval process. By 2010, however, new methods had been developed for creating GMOs without agrobacteria; such plants could typically be marketed without pre-approval.

Soon after that, the first gene-edited crops began appearing. If old-school genetic engineering was a shotgun, techniques like TALEN and CRISPR were a scalpel—or the search-and-replace function on a computer program. With CRISPR/Cas9, for example, an enzyme that bacteria use to recognize and chop up hostile viruses is reprogrammed to find and snip out a desired bit of a plant or other organism's DNA. The enzyme can also be used to insert a substitute gene. If a DNA sequence is simply removed, or the new gene comes from a similar species, the changes in the target plant's genotype and phenotype (its general characteristics) may be no different from those that could be produced through selective breeding. If a foreign gene is added, the plant becomes a transgenic GMO.

This development, along with the emergence of non-agrobacterium GMOs, eventually prompted the USDA to propose a tiered regulatory system for all genetically engineered crops, beginning with an initial screening for potentially hazardous metabolites or ecological impacts. (The screening was intended, in part, to guard against the "off-target effects," or stray mutations, that occasionally appear in gene-edited organisms.) If no red flags appeared, the crop would be approved; otherwise, it would be subject to further review, and possible regulation.

The plan was unveiled in January 2017, during the last week of the Obama presidency. Then, under the Trump administration, it was shelved. Although the USDA continues to promise a new set of regulations, the only hint of what they might contain has been Secretary of Agriculture Sonny Perdue's statement last March that gene-edited plants would remain unregulated if they "could otherwise have been developed through traditional breeding techniques, as long as they are not plant pests or developed using plant pests."

Because transgenic plants could not be "developed through traditional breeding techniques," this statement could be taken to mean that gene editing in which foreign DNA is introduced might actually be regulated. But because the USDA regulates conventional transgenic GMOs only if they trigger the plant-pest stipulation, experts assume gene-edited crops will face similarly limited oversight.

Meanwhile, companies are already teeing up gene-edited products for the U.S. market. An herbicide-resistant oilseed rape, developed using a proprietary technique, has been available since 2016. A cooking oil made from TALEN-tweaked soybeans, designed to have a healthier fatty-acid profile, is slated for release within the next few months. A CRISPR-edited "waxy" corn, designed with a starch profile ideal for processed foods, should be ready by 2021.

In all likelihood, none of these products will have to be tested for safety.

In the E.U., Stricter Rules Apply

Now let's look at the European Union. Since the late 1990s, explains Gregory Jaffe, director of the Project on Biotechnology at the Center for Science in the Public Interest, the EU has had a "process-based trigger" for genetically engineered products: "If you use recombinant DNA, you are going to be regulated." All foods and animal feeds must be approved and labeled if they consist of or contain more than 0.9 percent GM ingredients. (In the U.S., "disclosure" of GM ingredients is mandatory, if someone asks, but labeling is not required.) The only GM crop that can be commercially grown in EU member nations is a type of insect-resistant corn, though some countries allow imports.

European scientists helped develop gene editing, and they, along with the continent's biotech entrepreneurs, have been busy developing applications for crops. But European farmers seem more divided over the technology than their American counterparts. The main French agricultural trades union, for example, supports research into non-transgenic gene editing and its exemption from GMO regulation. But it was the country's small-farmers' union, the Confédération Paysanne, along with several allied groups, that in 2015 submitted a complaint to the ECJ, asking that all plants produced via mutagenesis, including gene editing, be regulated as GMOs.

At this point, it should be mentioned that in the past 30 years, large population studies have found no sign that consuming GM foods is harmful to human health. GMO critics can, however, point to evidence that herbicide-resistant crops have encouraged overuse of herbicides, giving rise to poison-proof "superweeds," polluting the environment with suspected carcinogens, and inadvertently killing beneficial plants. Those allegations were key to the French plaintiffs' argument that gene-edited crops might similarly do unexpected harm. (Disclosure: Leapsmag's parent company, Bayer, recently acquired Monsanto, a maker of herbicides and herbicide-resistant seeds. Also, Leaps by Bayer, an innovation initiative of Bayer and Leapsmag's direct founder, has funded a biotech startup called JoynBio that aims to reduce the amount of nitrogen fertilizer required to grow crops.)

In the end, the EU court found in the Confédération's favor on gene editing, though the court maintained the regulatory exemption for mutagenesis induced by chemicals or radiation, citing the "long safety record" of those methods.

The ruling was "scientifically nonsensical," fumes Rodolphe Barrangou, a French food scientist who pioneered CRISPR while working for DuPont in Wisconsin and is now a professor at NC State. "It's because of things like this that I'll never go back to Europe."

Nonetheless, the decision was consistent with longstanding EU policy on crops made with recombinant DNA. Given the difficulty and expense of getting such products through the continent's regulatory system, many other European researchers may wind up following Barrangou to America.

Getting to the Root of the Cultural Divide

What explains the divergence between the American and European approaches to GMOs and, by extension, gene-edited crops? In part, Jennifer Kuzma speculates, it's that Europeans have a different attitude toward eating. "They're generally more tied to where their food comes from, where it's produced," she notes. They may also share a mistrust of government assurances on food safety, born of the region's Mad Cow scandals of the 1980s and '90s. In Catholic countries, consumers may have misgivings about tinkering with the machinery of life.

But the principal factor, Kuzma argues, is that European and American agriculture are structured differently. "GM's benefits have mostly been designed for large-scale industrial farming and commodity crops," she says. That kind of farming is dominant in the U.S., but not in Europe, leading to a different balance of political power. In the EU, there was less pressure on decisionmakers to approve GMOs or exempt gene-edited crops from regulation—and more pressure to adopt a GM-resistant stance.

Such dynamics may be operating in other regions as well. In China, for example, the government has long encouraged research in GMOs; a state-owned company recently acquired Syngenta, a Swiss-based multinational corporation that is a leading developer of GM and gene-edited crops. GM animal feed and cooking oil can be freely imported. Yet commercial cultivation of most GM plants remains forbidden, out of deference to popular suspicions of genetically altered food. "As a new item, society has debates and doubts on GMO techniques, which is normal," President Xi Jinping remarked in 2014. "We must be bold in studying it, [but] be cautious promoting it."

The proper balance between boldness and caution is still being worked out all over the world. Europe's process-based approach may prevent researchers from developing crops that, with a single DNA snip, could rescue millions from starvation. EU regulations will also make it harder for small entrepreneurs to challenge Big Ag with a technology that, as Barrangou puts it, "can be used affordably, quickly, scalably, by anyone, without even a graduate degree in genetics." America's product-based approach, conversely, may let crops with hidden genetic dangers escape detection. And by refusing to investigate such risks, regulators may wind up exacerbating consumers' doubts about GM and gene-edited products, rather than allaying them.

"Science...can't tell you what to regulate. That's a values-based decision."

Perhaps the solution lies in combining both approaches, and adding some flexibility and nuance to the mix. "I don't believe in regulation by the product or the process," says CSPI's Jaffe. "I think you need both." Deleting a DNA base pair to silence a gene, for example, might be less risky than inserting a foreign gene into a plant—unless the deletion enables the production of an allergen, and the transgene comes from spinach.

Kuzma calls for the creation of "cooperative governance networks" to oversee crop genome editing, similar to bodies that already help develop and enforce industry standards in fisheries, electronics, industrial cleaning products, and (not incidentally) organic agriculture. Such a network could include farmers, scientists, advocacy groups, private companies, and governmental agencies. "Safety isn't an all-or-nothing concept," Kuzma says. "Science can tell you what some of the issues are in terms of risk and benefit, but it can't tell you what to regulate. That's a values-based decision."

By drawing together a wide range of stakeholders to make such decisions, she adds, "we're more likely to anticipate future consequences, and to develop a robust approach—one that not only seems more legitimate to people, but is actually just plain old better."

Beyond Henrietta Lacks: How the Law Has Denied Every American Ownership Rights to Their Own Cells

A 2017 portrait of Henrietta Lacks.

The common perception is that Henrietta Lacks was a victim of poverty and racism when in 1951 doctors took samples of her cervical cancer without her knowledge or permission and turned them into the world's first immortalized cell line, which they called HeLa. The cell line became a workhorse of biomedical research and facilitated the creation of medical treatments and cures worth untold billions of dollars. Neither Lacks nor her family ever received a penny of those riches.

But racism and poverty are not to blame for Lacks' exploitation; the reality is even worse. In fact all patients, then and now, regardless of social or economic status, have absolutely no right to cells that are taken from their bodies. Some have called this biological slavery.

How We Got Here

The case that established this legal precedent is Moore v. Regents of the University of California.

John Moore was diagnosed with hairy-cell leukemia in 1976 and his spleen was removed as part of standard treatment at the UCLA Medical Center. On initial examination his physician, David W. Golde, had discovered some unusual qualities to Moore's cells and made plans prior to the surgery to have the tissue saved for research rather than discarded as waste. That research began almost immediately.

"On both sides of the case, legal experts and cultural observers cautioned that ownership of a human body was the first step on the slippery slope to 'bioslavery.'"

Even after Moore moved to Seattle, Golde kept bringing him back to Los Angeles to collect additional samples of blood and tissue, saying it was part of his treatment. When Moore asked if the work could be done in Seattle, he was told no. Golde's charade went so far that he claimed to have found a low-income subsidy to cover Moore's flights and a ritzy hotel for his return visits to Los Angeles, while in fact paying for them out of his own pocket.

Moore became suspicious when he was asked to sign new consent forms giving up all rights to his biological samples, and he hired an attorney to look into the matter. It turned out that Golde had been lying to his patient all along; he had been collecting samples unnecessary to Moore's treatment and had turned them into a cell line that he and UCLA had patented, and for which they had already collected millions of dollars in compensation. The market for the cell lines was estimated at $3 billion by 1990.

Moore felt he had been taken advantage of and filed suit to claim a share of the money that had been made off of his body. "On both sides of the case, legal experts and cultural observers cautioned that ownership of a human body was the first step on the slippery slope to 'bioslavery,'" wrote Priscilla Wald, a professor at Duke University whose career has focused on issues of medicine and culture. "Moore could be viewed as asking to commodify his own body part or be seen as the victim of the theft of his most private and inalienable information."

The case bounced around different levels of the court system with conflicting verdicts for nearly six years until the California Supreme Court ruled on July 9, 1990, that Moore had no legal rights to cells and tissue once they were removed from his body.

The court made a utilitarian argument that the cells had no value until scientists manipulated them in the lab. It also reasoned that it would be too burdensome for researchers to track individual donations and subsequent cell lines to assure that they had been ethically gathered and used; doing so would impinge on the free sharing of materials between scientists, slow research, and harm the public good that arose from such research.

"In effect, what Moore is asking us to do is impose a tort duty on scientists to investigate the consensual pedigree of each human cell sample used in research," the majority wrote. In other words, researchers don't need to ask any questions about the materials they are using.

One member of the court did not see it that way. In his dissent, Stanley Mosk raised the specter of slavery that "arises wherever scientists or industrialists claim, as defendants have here, the right to appropriate and exploit a patient's tissue for their sole economic benefit—the right, in other words, to freely mine or harvest valuable physical properties of the patient's body. … This is particularly true when, as here, the parties are not in equal bargaining positions."

Mosk also cited the appeals court decision that the majority overturned: "If this science has become for profit, then we fail to see any justification for excluding the patient from participation in those profits."

But the majority bought the arguments that Golde, UCLA, and the nascent biotechnology industry in California had made in amici briefs filed throughout the legal proceedings. The road was now cleared for them to develop products worth billions without having to worry about or share with the persons who provided the raw materials upon which their research was based.

Critical Views

Biomedical research requires a continuous and ever-growing supply of human materials for the foundation of its ongoing work. If an increasing number of patients come to feel as John Moore did, that the system is ripping them off, then they become much less likely to consent to use of their materials in future research.

Some legal and ethical scholars say that donors should be able to limit the types of research allowed for their tissues and researchers should be monitored to assure compliance with those agreements. For example, today it is commonplace for companies to certify that their clothing is not made by child labor, that their coffee is grown under fair trade conditions, and that food labeled kosher is properly handled. Should we ask any less of our pharmaceuticals than that the donors whose cells made such products possible have been treated honestly and fairly, and share in the financial bounty that comes from such drugs?

Protection of individual rights is a hallmark of the American legal system, says Lisa Ikemoto, a law professor at the University of California, Davis. "Putting the needs of a generalized public over the interests of a few often rests on devaluation of the humanity of the few," she writes in a reimagined version of the Moore decision that upholds Moore's property claims to his excised cells. The commentary is in a chapter of a forthcoming book in the Feminist Judgment series, where authors may only use legal precedent in effect at the time of the original decision.

"Why is the law willing to confer property rights upon some while denying the same rights to others?" asks Radhika Rao, a professor at the University of California, Hastings College of the Law. "The researchers who invest intellectual capital and the companies and universities that invest financial capital are permitted to reap profits from human research, so why not those who provide the human capital in the form of their own bodies?" It might be seen as a kind of sweat equity, where cash-strapped patients make a valuable in-kind contribution to the enterprise.

The Moore court also made a big deal about inhibiting the free exchange of samples between scientists. Such free exchange has become much less common in the more than three decades since the decision was handed down. Ironically, this decision, along with other laws and regulations, has since strengthened the power of patents in biomedicine and, by doing so, has increased secrecy and limited sharing.

"Although the research community theoretically endorses the sharing of research, in reality sharing is commonly compromised by the aggressive pursuit and defense of patents and by the use of licensing fees that hinder collaboration and development," Robert D. Truog, a Harvard Medical School ethicist, and colleagues wrote in 2012 in the journal Science. "We believe that measures are required to ensure that patients not bear all of the altruistic burden of promoting medical research."

Additionally, the increased complexity of research and the need for exacting standardization of materials have given rise to an industry that supplies certified chemical reagents, cell lines, and whole animals bred to have specific genetic traits to meet research needs. This has been more efficient for research and has helped to ensure that results from one lab can be reproduced in another.

The Court's rationale of fostering collaboration and free exchange of materials between researchers also has been undercut by the changing structure of that research. Big pharma has shrunk the size of its own research labs and over the last decade has worked out cooperative agreements with major research universities where the companies contribute to the research budget and in return have first dibs on any findings (and sometimes a share of patent rights) that come out of those university labs. These arrangements have had a chilling effect on the exchange of materials between universities.

Perhaps tracking cell line donors and use restrictions on those donations might have been burdensome to researchers when Moore was being litigated. Some labs probably still kept their cell line records on 3x5 index cards, computers were primarily expensive room-size behemoths with limited capacity, the internet barely existed, and there was no cloud storage.

But that was the dawn of a new technological age and standards have changed. Now cell lines are kept in state-of-the-art sub-zero storage units, tagged with the source, type of tissue, date gathered, and often other information. Adding a few more data fields and contacting the donor if and when appropriate does not seem likely to disrupt the research process, as the court asserted it would.

Forging the Future

"U.S. universities are awarded almost 3,000 patents each year. They earn more than $2 billion each year from patent royalties. Sharing a modest portion of these profits is a novel method for creating a greater sense of fairness in research relationships that we think is worth exploring," wrote Mark Yarborough, a bioethicist at the University of California Davis Medical School, and colleagues. That was penned nearly a decade ago and those numbers have only grown.

The Michigan BioTrust for Health might serve as a useful model in tackling some of these issues. Dried blood spots have been collected from all newborns for half a century to be tested for certain genetic diseases, but controversy arose when the huge archive of dried spots was used for other research projects. As a result, the state created a nonprofit organization to in essence become a biobank and manage access to these spots only for specific purposes, and also to share any revenue that might arise from that research.

"If there can be no property in a whole living person, does it stand to reason that there can be no property in any part of a living person? If there were, can it be said that this could equate to some sort of 'biological slavery'?" Irish ethicist Asim A. Sheikh wrote several years ago. "Any amount of effort spent pondering the issue of 'ownership' in human biological materials with existing law leaves more questions than answers."

Perhaps the biggest question will arise when (not if, but when) it becomes possible to clone a human being. Would a human clone be a legal person or the property of those who created it? Current legal precedent points to it being the latter.

Today, October 4, is the 70th anniversary of Henrietta Lacks' death from cancer. Over those decades her immortalized cells have helped make possible miraculous advances in medicine and have had a role in generating billions of dollars in profits. Surviving family members have spoken many times about seeking a share of those profits in the name of social justice; they intend to file lawsuits today. Such cases will succeed or fail on their own merits. But regardless of their specific outcomes, one can hope that they spark a larger public discussion of the role of patients in the biomedical research enterprise and lead to establishing a legal and financial claim for their contributions toward the next generation of biomedical research.

Is a Successful HIV Vaccine Finally on the Horizon?

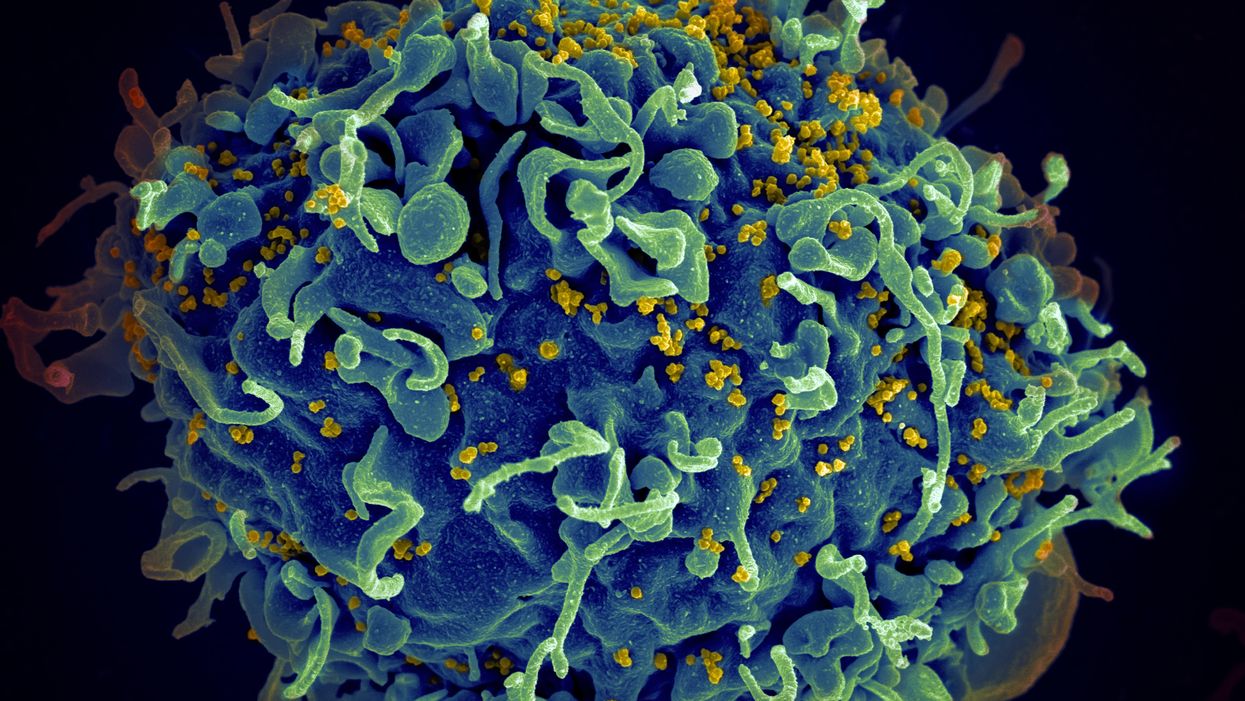

HIV (yellow) infecting a human cell.

Few vaccine efforts have been as complicated, or as filled with false starts and crushed hopes, as the development of an HIV vaccine.

While antivirals help HIV-positive patients live longer and reduce viral transmission to virtually nil, these medications must be taken for life, and preventative medications like pre-exposure prophylaxis, known as PrEP, need to be taken every day to be effective. Vaccines, even if they need boosters, would make prevention much easier.

In August, Moderna began human trials for two HIV vaccine candidates based on messenger RNA.

As they have with the Covid-19 pandemic, mRNA vaccines could change the game. The technology could be applied to gene editing therapy, cancer, and other infectious diseases, and even to a universal influenza vaccine.

In the past, three other mRNA vaccines completed phase-2 trials without success. But the easily customizable platforms mean the vaccines can be tweaked to better target HIV as researchers learn more.

Ever since HIV was discovered as the virus causing AIDS, researchers have been searching for a vaccine. But the decades-long journey has so far been fruitless; while some vaccine candidates showed promise in early trials, none of them has worked well in later-stage clinical trials.

There are two main reasons for this: HIV evolves incredibly quickly, and the structure of the virus makes it very difficult to neutralize with antibodies.

"We in HIV medicine have been desperate to find a vaccine that has effectiveness, but this goal has been elusive so far."

"You know the panic that goes on when a new coronavirus variant surfaces?" asked John Moore, professor of microbiology and immunology at Weill Cornell Medicine who has researched HIV vaccines for 25 years. "With HIV, that kind of variation [happens] pretty much every day in everybody who's infected. It's just orders of magnitude more variable a virus."

Vaccines like these usually work by imitating the outer layer of a virus to teach cells how to recognize and fight off the real thing before it enters the cell. "If you can prevent landing, you can essentially keep the virus out of the cell," said Larry Corey, the former president and director of the Fred Hutchinson Cancer Research Center who helped run a recent trial of a Johnson & Johnson HIV vaccine candidate, which failed its first efficacy trial.

Like the coronavirus, HIV also has a spike protein with a receptor-binding domain—what Moore calls "the notorious RBD"—that could be neutralized with antibodies. But while that target sticks out like a sore thumb in a virus like SARS-CoV-2, in HIV it's buried under a dense shield. That's not the only target for neutralizing the virus, but all of the targets evolve rapidly and are difficult to reach.

"We understand these targets. We know where they are. But it's still proving incredibly difficult to raise antibodies against them by vaccination," Moore said.

In fact, mRNA vaccines for HIV have been under development for years. The Covid vaccines were built on decades of that research. But it's not as simple as building on this momentum, because of how much more complicated HIV is than SARS-CoV-2, researchers said.

"They haven't succeeded because they were not designed appropriately and haven't been able to induce what is necessary for them to induce," Moore said. "The mRNA technology will enable you to produce a lot of antibodies to the HIV envelope, but if they're the wrong antibodies that doesn't solve the problem."

Part of the problem is that the HIV vaccines have to perform better than our own immune systems. Many vaccines are created by imitating how our bodies overcome an infection, but that doesn't happen with HIV. Once you have the virus, you can't fight it off on your own.

"The human immune system actually does not know how to innately cure HIV," Corey said. "We needed to improve upon the human immune system to make it quicker… with Covid. But we have to actually be better than the human immune system" with HIV.

But in the past few years, there have been impressive leaps in understanding how an HIV vaccine might work. Scientists have known for decades that neutralizing antibodies are key for a vaccine. But in 2010 or so, they were able to mimic the HIV spike and understand how antibodies need to disable the virus. "It helps us understand the nature of the problem, but doesn't instantly solve the problem," Moore said. "Without neutralizing antibodies, you don't have a chance."

Because the vaccines need to induce broadly neutralizing antibodies, and because it's very difficult to neutralize the highly variable HIV, any vaccine will likely be a series of shots that teach the immune system to be on the lookout for a variety of potential attacks.

"Each dose is going to have to have a different purpose," Corey said. "And we hope by the end of the third or fourth dose, we will achieve the level of neutralization that we want."

That's not ideal, because each individual component has to be made and tested—and four shots make the vaccine harder to administer.

"You wouldn't even be going down that route, if there was a better alternative," Moore said. "But there isn't a better alternative."

The mRNA platform is exciting because it is easily customizable, which is especially important in fighting against a shapeshifting, complicated virus. And the mRNA platform has shown itself, in the Covid pandemic, to be safe and quick to make. Effective Covid vaccines were comparatively easy to develop, since the coronavirus is easier to battle than HIV. But companies like Moderna are capitalizing on their success to launch other mRNA therapeutics and vaccines, including the HIV trial.

"You can make the vaccine in two months, three months, in a research lab, and not a year—and the cost of that is really less," Corey said. "It gives us a chance to try many more options, if we've got a good response."

In a trial on macaque monkeys, the Moderna vaccine reduced the chances of infection by 85 percent. "The mRNA platform represents a very promising approach for the development of an HIV vaccine in the future," said Dr. Peng Zhang, who is helping lead the trial at the National Institute of Allergy and Infectious Diseases.

Moderna's trial in humans represents "a very exciting possibility for the prevention of HIV infection," Dr. Monica Gandhi, director of the UCSF-Gladstone Center for AIDS Research, said in an email. "We in HIV medicine have been desperate to find a vaccine that has effectiveness, but this goal has been elusive so far."

If a successful HIV vaccine is developed, the series of shots could include an mRNA shot that primes the immune system, followed by protein subunits that generate the necessary antibodies, Moore said.

"I think it's the only thing that's worth doing," he said. "Without something complicated like that, you have no chance of inducing broadly neutralizing antibodies."

"I can't guarantee you that's going to work," Moore added. "It may completely fail. But at least it's got some science behind it."