Who’s Responsible If a Scientist’s Work Is Used for Harm?

A face-off in medical ethics.

Are scientists morally responsible for the uses of their work? To some extent, yes. Scientists are responsible for both the uses that they intend with their work and for some of the uses they don't intend. This is because scientists bear the same moral responsibilities that we all bear, and we are all responsible for the ends we intend to help bring about and for some (but not all) of those we don't.

It should be obvious that the intended outcomes of our work are within our sphere of moral responsibility. If a scientist intends to help alleviate hunger (by, for example, breeding new drought-resistant crop strains), and they succeed in that goal, they are morally responsible for that success, and we would praise them accordingly. If a scientist intends to produce a new weapon of mass destruction (by, for example, developing a lethal strain of a virus), and they are unfortunately successful, they are morally responsible for that as well, and we would blame them accordingly. Intention matters a great deal, and we are most praised or blamed for what we intend to accomplish with our work.

But we are responsible for more than just the intended outcomes of our choices. We are also responsible for unintended but readily foreseeable uses of our work. This is in part because we are all responsible for thinking not just about what we intend, but also what else might follow from our chosen course of action. In cases where severe and egregious harms are plausible, we should act in ways that strive to prevent such outcomes. To not think about plausible unintended effects is to be negligent -- and to recognize, but do nothing about, such effects is to be reckless. To be negligent or reckless is to be morally irresponsible, and thus blameworthy. Each of us should think beyond what we intend to do, reflecting carefully on what our course of action could entail, and adjusting our choices accordingly.

It is this area, of unintended but readily foreseeable (and plausible) impacts, that often creates the most difficulty for scientists. Many scientists can become so focused on their work (which is often demanding) and so focused on achieving their intended goals, that they fail to stop and think about other possible implications.

Debates over "dual-use" research exemplify these concerns, where harmful potential uses of research might mean the work should not be pursued, or the full publication of results should be curtailed. When researchers perform gain-of-function research, pushing viruses to become more transmissible or more deadly, it is clear how dangerous such work could be in the wrong hands. In these cases, it is not enough to simply claim that such uses were not intended and that it is someone else's job to ensure that the materials remain secure. We know securing infectious materials can be error-prone (recall events at the CDC and the FDA).

Further, securing viral strains does nothing to secure the knowledge that could allow for reproducing the viral strain (particularly when the methodologies and/or genetic sequences are published after the fact, as was the case for H5N1 and horsepox). It is, in fact, the researcher's moral responsibility to be concerned not just about the biosafety controls in their own labs, but also which projects should be pursued (Will the gain in knowledge be worth the possible downsides?) and which results should be published (Will a result make it easier for a malicious actor to deploy a new bioweapon?).

We have not yet had (to my knowledge) a use of gain-of-function research to harm people. If that does happen, those who actually released the virus on the public will be most blameworthy; intentions do matter. But the scientists who developed the knowledge deployed by the malicious actors may also be held blameworthy, especially if the malicious use was easy to foresee, even if it was not pleasant to think about.

In some areas of research, scientists are already worrying about the unintended possible downsides of their work. Scientists investigating gene drives have thought beyond the immediate desired benefits of their work (e.g. reducing invasive species populations) and considered the possible spread of gene drives to untargeted populations. Modeling the impacts of such possibilities has led some researchers to pull back from particular deployment possibilities. It is precisely such thinking through both the intended and unintended possible outcomes that is needed for responsible work.

The world has gotten too small and too vulnerable for scientists to act as though they are not responsible for the uses of their work, intended or not. They must seek to ensure that, as the recent AAAS Statement on Scientific Freedom and Responsibility demands, their work is done "in the interest of humanity." This requires thinking beyond one's intentions, potentially drawing on the expertise of others, sometimes from other disciplines, to help explore implications. Such thinking does not guarantee good outcomes, but it will ensure that we are doing the best we can, and that is what being morally responsible is all about.

Thanks to safety cautions from the COVID-19 pandemic, a strain of influenza has been completely eliminated.

If you were one of the millions who masked up, washed your hands thoroughly and socially distanced, pat yourself on the back—you may have helped change the course of human history.

Scientists say that thanks to these safety precautions, which were introduced in early 2020 as a way to stop transmission of the virus that causes COVID-19, a strain of influenza has been completely eliminated. This marks the first time in human history that a virus has been wiped out through non-pharmaceutical interventions rather than pharmaceutical ones, such as vaccines.

The flu shot, explained

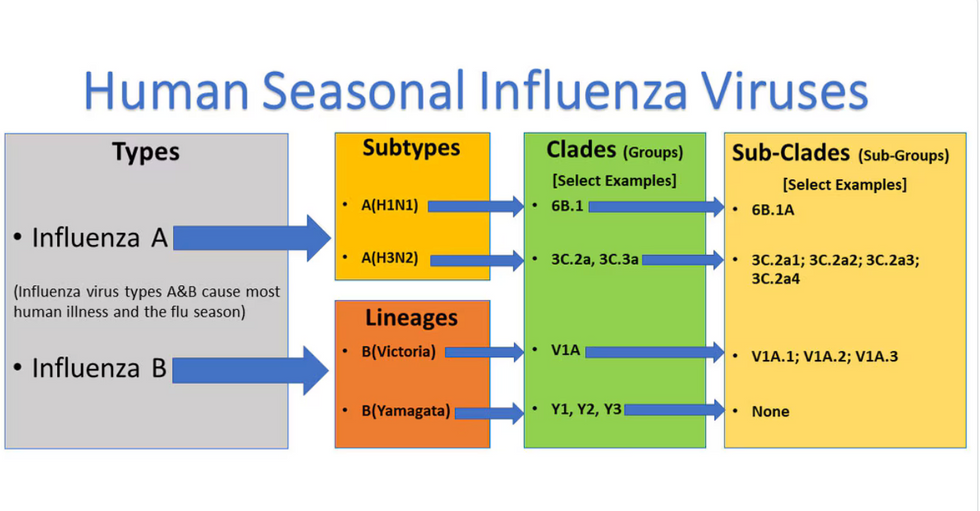

Influenza viruses type A and B are responsible for the majority of human illnesses and the flu season.

Centers for Disease Control

For more than a decade, flu shots have protected against two types of the influenza virus: type A and type B. While there are four different types of influenza in existence (A, B, C, and D), only types A, B, and C are capable of infecting humans, and only A and B cause seasonal epidemics. In other words, if you catch the flu during flu season, you’re most likely sick with flu type A or B.

Flu vaccines contain inactivated—or dead—influenza virus. These inactivated viruses can’t cause sickness in humans, but when administered as part of a vaccine, they teach a person’s immune system to recognize and kill those viruses when they’re encountered in the wild.

Each spring, a panel of experts gives a recommendation to the US Food and Drug Administration on which strains of each flu type to include in that year’s flu vaccine, depending on what surveillance data says is circulating and what they believe is likely to cause the most illness during the upcoming flu season. For the past decade, Americans have had access to vaccines that provide protection against two strains of influenza A and two lineages of influenza B, known as the Victoria lineage and the Yamagata lineage. But this year, the seasonal flu shot won’t include the Yamagata lineage, because it is no longer circulating among humans.

How Yamagata Disappeared

Flu surveillance data from the Global Initiative on Sharing All Influenza Data (GISAID) shows that the Yamagata lineage of flu type B has not been sequenced since April 2020.

Nature

Experts believe that the Yamagata lineage had already been in decline before the pandemic hit, likely because the strain was naturally less capable of infecting large numbers of people compared to the other strains. When the COVID-19 pandemic hit, the resulting safety precautions such as social distancing, isolating, hand-washing, and masking were enough to drive the virus into extinction completely.

Because the strain hasn’t been circulating since 2020, the FDA elected to remove the Yamagata strain from the seasonal flu vaccine. This will mark the first time since 2012 that the annual flu shot will be trivalent (three-component) rather than quadrivalent (four-component).

Should I still get the flu shot?

The flu shot will protect against fewer strains this year—but that doesn’t mean we should skip it. Influenza places a substantial health burden on the United States every year, responsible for hundreds of thousands of hospitalizations and tens of thousands of deaths. The flu shot has been shown to prevent millions of illnesses each year (more than six million during the 2022-2023 season). And while it’s still possible to catch the flu after getting the flu shot, studies show that people are far less likely to be hospitalized or die when they’re vaccinated.

Another unexpected benefit of dropping the Yamagata strain from the seasonal vaccine? It could make production of the flu vaccine faster and enable manufacturers to make more vaccines, helping countries that have a flu vaccine shortage and potentially saving millions more lives.

After his grandmother’s dementia diagnosis, one man invented a snack to keep her healthy and hydrated.

Founder Lewis Hornby and his grandmother Pat, sampling Jelly Drops—an edible gummy containing water and life-saving electrolytes.

On a visit to his grandmother’s nursing home in 2016, college student Lewis Hornby made a shocking discovery: Dehydration is a common (and dangerous) problem among seniors, especially those who are diagnosed with dementia.

Hornby’s grandmother, Pat, had always had difficulty keeping up her water intake as she got older, a common issue with seniors. As we age, our body composition changes, and we naturally hold less water than younger adults or children, so it’s easier to become dehydrated quickly if those fluids aren’t replenished. What’s more, our thirst signals diminish naturally as we age as well—meaning our body is not as good as it once was in letting us know that we need to rehydrate. This often creates a perfect storm that commonly leads to dehydration. In Pat’s case, her dehydration was so severe she nearly died.

When Lewis Hornby visited his grandmother at her nursing home afterward, he learned that dehydration especially affects people with dementia, as they often don’t feel thirst cues at all, or may not recognize how to use cups correctly. But while dementia patients often don’t remember to drink water, it seemed to Hornby that they had less trouble remembering to eat, particularly candy.

Hornby wanted to create a solution for elderly people who struggled to keep their fluid intake up. He spent the next eighteen months researching and designing a solution and securing funding for his project. In 2019, Hornby won a sizable grant from the Alzheimer’s Society, a UK-based care and research charity for people with dementia and their caregivers. Together, through the charity’s Accelerator Program, they created a bite-sized, sugar-free, edible jelly drop that looked and tasted like candy. The candy, called Jelly Drops, contained 95% water and electrolytes, important minerals that are often lost during dehydration. The final product launched in 2020 and was an immediate success. The drops were able to provide extra hydration to the elderly, as well as help keep dementia patients safe, since dehydration commonly leads to confusion, hospitalization, and sometimes even death.

Not only did Jelly Drops quickly become a favorite snack among dementia patients in the UK, but they were able to provide an additional boost of hydration to hospital workers during the pandemic. In NHS coronavirus hospital wards, patients infected with the virus were regularly given Jelly Drops to keep their fluid levels normal—and staff members snacked on them as well, since long shifts and personal protective equipment (PPE) they were required to wear often left them feeling parched.

In April 2022, Jelly Drops launched in the United States. The company continues to donate 1% of its profits to help fund Alzheimer’s research.