Massive benefits of AI come with environmental and human costs. Can AI itself be part of the solution?

Generative AI has a large carbon footprint and other drawbacks. But AI can help mitigate its own harms—by plowing through mountains of data on extreme weather and human displacement.

The recent explosion of generative artificial intelligence tools like ChatGPT and Dall-E enabled anyone with internet access to harness AI’s power for enhanced productivity, creativity, and problem-solving. With their ever-improving capabilities and expanding user base, these tools proved useful across disciplines, from the creative to the scientific.

But beneath the technological wonders of human-like conversation and creative expression lies a dirty secret: an alarming environmental and human cost. AI has an immense carbon footprint. Systems like ChatGPT take months to train in high-powered data centers, which demand huge amounts of electricity, much of it still generated from fossil fuels, as well as water for cooling. “One of the reasons why OpenAI needs investments [to the tune of] $10 billion from Microsoft is because they need to pay for all of that computation,” says Kentaro Toyama, a computer scientist at the University of Michigan. There’s also an ecological toll from mining the rare minerals required for hardware and infrastructure. This environmental exploitation pollutes land, triggers natural disasters and causes large-scale human displacement. Finally, for the data labeling needed to train and correct AI algorithms, the Big Data industry relies on cheap and exploitative labor, often from the Global South.

Generative AI tools are based on large language models (LLMs), the most well-known being the various versions of GPT. LLMs can perform natural language processing tasks, including translating, summarizing and answering questions. They are built on artificial neural networks, a machine learning technique; networks with many layers form the basis of what is called deep learning. Inspired by the human brain, neural networks are made of millions of artificial neurons. “The basic principles of neural networks were known even in the 1950s and 1960s,” Toyama says, “but it’s only now, with the tremendous amount of compute power that we have, as well as huge amounts of data, that it’s become possible to train generative AI models.”

In recent months, much attention has gone to the transformative benefits of these technologies. But it’s important to consider that these remarkable advances may come at a price.

AI’s carbon footprint

In its latest annual report, 2023 Landscape: Confronting Tech Power, the AI Now Institute, an independent policy research institute focused on the concentration of power in the tech industry, says: “The constant push for scale in artificial intelligence has led Big Tech firms to develop hugely energy-intensive computational models that optimize for ‘accuracy’—through increasingly large datasets and computationally intensive model training—over more efficient and sustainable alternatives.”

Though there aren’t any official figures about the power consumption or emissions from data centers, experts estimate that they use one percent of global electricity, more than many individual countries do. In 2019, Emma Strubell, then a graduate researcher at the University of Massachusetts Amherst, estimated that training a single LLM resulted in over 280,000 kg of CO2 emissions, the equivalent of driving almost 1.2 million km in a gas-powered car. A couple of years later, David Patterson, a computer scientist at the University of California, Berkeley, and his colleagues estimated GPT-3’s carbon footprint at over 550,000 kg of CO2. In 2022, the tech company Hugging Face estimated the carbon footprint of its own language model, BLOOM, at 25,000 kg of CO2 emissions. (BLOOM’s footprint is lower because Hugging Face uses renewable energy, but it doubled when other life-cycle processes like hardware manufacturing and use were added.)

Fortunately, although data centers are growing in size and number, their energy demand and emissions have not risen proportionately, thanks to renewable energy sources and energy-efficient hardware.

But emissions don’t tell the full story.

AI’s hidden human cost

“If historical colonialism annexed territories, their resources, and the bodies that worked on them, data colonialism’s power grab is both simpler and deeper: the capture and control of human life itself through appropriating the data that can be extracted from it for profit.” So write Nick Couldry and Ulises Mejias, authors of the book The Costs of Connection.

Technologies we use daily inexorably gather our data. “Human experience, potentially every layer and aspect of it, is becoming the target of profitable extraction,” Couldry and Mejias say. This feeds data capitalism, the economic model built on the extraction and commodification of data. While we are dispossessed of our data, Big Tech commodifies it for its own benefit. The result is a consolidation of power structures that reinforce existing race, gender, class and other inequalities.

“The political economy around tech and tech companies, and the development in advances in AI contribute to massive displacement and pollution, and significantly changes the built environment,” says technologist and activist Yeshi Milner, who founded Data for Black Lives (D4BL) to create measurable change in Black people’s lives using data. The energy requirements, hardware manufacture and the cheap human labor behind AI systems disproportionately affect marginalized communities.

AI’s recent explosive growth has driven up demand for manual, behind-the-scenes tasks, creating an industry that Mary Gray and Siddharth Suri call “ghost work” in their book of the same name. This invisible workforce behind the “magic” of AI is overworked and underpaid, and very often based in the Global South. For example, workers in Kenya who made less than $2 an hour were behind the process that trained ChatGPT to properly talk about violence, hate speech and sexual abuse. And, according to an article in Analytics India Magazine, in some cases these workers may not have been paid at all, which would amount to wage theft. An exposé by the Washington Post describes “digital sweatshops” in the Philippines, where thousands of workers experience low wages, delayed payments and wage theft by Remotasks, a platform owned by Scale AI, a $7 billion American startup. Rights groups and labor researchers have flagged Scale AI as a company that flouts basic labor standards for workers abroad.

Milner says a parallel can be drawn with chattel slavery, the most significant economic event that continues to shape the modern world, to reveal the business structures that allow for the massive exploitation of people. Back then, people got chocolate, sugar and cotton; today, they get generative AI tools. “What’s invisible through distance, because [tech companies] also control what we see, is the massive exploitation,” Milner says.

“At Data for Black Lives, we are less concerned with whether AI will become human…[W]e’re more concerned with the growing power of AI to decide who’s human and who’s not,” Milner says. As a decision-making force, AI becomes a “justifying factor for policies, practices, rules that not just reinforce, but are currently turning the clock back generations years on people’s civil and human rights.”

Nuria Oliver, a computer scientist and co-founder and vice-president of the European Laboratory for Learning and Intelligent Systems (ELLIS), says that instead of focusing on the hypothetical existential risks of today’s AI, we should talk about its real, tangible risks.

“Because AI is a transverse discipline that you can apply to any field [from education, journalism, medicine, to transportation and energy], it has a transformative power…and an exponential impact,” she says.

AI’s accountability

“At the core of what we were arguing about data capitalism [is] a call to action to abolish Big Data,” says Milner. “Not to abolish data itself, but the power structures that concentrate [its] power in the hands of very few actors.”

A comprehensive AI Act currently being negotiated in the European Parliament aims to rein in Big Tech. It would rate AI tools according to the harm they can cause to people, while remaining as technology-neutral as possible. It would also set standards for safe, transparent, traceable, non-discriminatory and environmentally friendly AI systems, overseen by people rather than by automation. The rules would further require transparency about the content used to train generative AI, particularly copyrighted material, and disclosure that content is AI-generated. “This European regulation is setting the example for other regions and countries in the world,” Oliver says. But, she adds, such transparency is hard to achieve.

Google, for example, recently updated its privacy policy to say that publicly available information on the internet may be used as training data for its AI models. “Obviously, technology companies have to respond to their economic interests, so their decisions are not necessarily going to be the best for society and for the environment,” Oliver says. “And that’s why we need strong research institutions and civil society institutions to push for actions.” ELLIS also advocates for data centers to be built in locations where the energy can be produced sustainably.

Ironically, AI plays an important role in mitigating its own harms—by plowing through mountains of data about weather changes, extreme weather events and human displacement. “The only way to make sense of this data is using machine learning methods,” Oliver says.

Milner believes that the best way to expose AI-caused systemic inequalities is through people’s stories. “In these last five years, so much of our work [at D4BL] has been creating new datasets, new data tools, bringing the data to life. To show the harms but also to continue to reclaim it as a tool for social change and for political change.” Whether that change happens, she adds, depends on whose hands the data is in.

When graduating from college this month, many North American engineering students will take a special pledge with a history dating back to 1925.

This spring, just like any other year, thousands of young North American engineers will graduate from their respective colleges ready to start erecting buildings, assembling machinery, and programming software, among other things. But before they take on these complex and important tasks, many of them will recite a special vow stating their ethical obligations to society, not unlike the physicians who take their Hippocratic Oath, affirming their ethos toward the patients they would treat. At the end of the ceremony, the engineers receive an iron ring, as a reminder of their promise to the millions of people their work will serve.

The ceremony isn’t just another graduation formality. As a profession, engineering has ethical weight. Moreover, engineering mistakes can be even more deadly than medical ones. A doctor’s error may cost a patient their life. But an engineering blunder may bring down a plane or crumble a building, resulting in many more fatalities. When larger projects—such as fracking, deep-sea mining or building nuclear reactors—malfunction and backfire, they can cause global disasters, afflicting millions. A vow that reminds an engineer that their work directly affects humankind and their planet is no less important than a medical oath that summons one to do no harm.

The tradition of taking an engineering oath began over a century ago in Canada. In 1922, Herbert E.T. Haultain, professor of mining engineering at the University of Toronto, presented the idea at the annual meeting of the Engineering Institute of Canada. Seven past presidents of that body were in attendance; they heard Haultain’s speech and accepted his suggestion to form a committee to create an honor oath. They later formed the nonprofit Corporation of the Seven Wardens, which would oversee the ritual. In 1923, with the encouragement of the Seven Wardens, Haultain wrote to poet and writer Rudyard Kipling, asking him to develop a professional oath for engineers. “We are a tribe—a very important tribe within the community,” Haultain said in the letter, “but we are lacking in tribal spirit, or perhaps I should say, in manifestation of tribal spirit. Also, we are inarticulate. Can you help us?”

While Kipling is most famous now for “The Jungle Book” and perhaps his poem “Gunga Din,” he had also written a short story about engineers, “The Bridge Builders.” His poem “The Sons of Martha” can be read as a celebration of engineers:

It is their care in all the ages to take the buffet and cushion the shock.

It is their care that the gear engages; it is their care that the switches lock.

It is their care that the wheels run truly; it is their care to embark and entrain,

Tally, transport, and deliver duly the Sons of Mary by land and main.

Kipling accepted the request and wrote the Ritual of the Calling of an Engineer, which he sent to Haultain a month later. In his reply, he said he preferred the word “Obligation” to “Oath.” He wrote the Obligation in Old English lettering with old-fashioned capitalization. Kipling’s Obligation binds engineers upon their “Honor and Cold Iron” to not “suffer or pass, or be privy to the passing of, Bad Workmanship or Faulty Material,” and pardon is asked “in the presence of my betters and my equals in my Calling” for the engineer’s “assured failures and derelictions.” The hope is that when one is tempted to shoddy work by weakness or weariness, the memory of the Obligation “and the company before whom it was entered into, may return to me to aid, comfort, and restrain.”

Using the Obligation, the Seven Wardens created an induction ceremony, which seeks to unify the profession and recognize engineering’s ethics, including responsibility to the public and the need to make the best decisions possible. The induction ceremony included recitation of Kipling’s “Obligation” and incorporated an anvil, a hammer, an iron chain, and an iron ring. The inductee engineers sat inside an area marked off by the iron chain, with their more senior colleagues outside that area. At the start of the ritual, the leader beat out S-S-T in Morse code with the hammer and anvil, the letters standing for Steel, Stone, and Time. A more experienced and previously obligated engineer placed the ring on the small finger of the inductee engineer’s working hand. As per Kipling, the ring’s rough, faceted texture symbolized “the young engineer’s mind” and the difficulties engineers face in mastering their discipline.

The first induction ceremony took place on April 25, 1925, in Montreal to obligate two of the Seven Wardens, along with four graduates from the University of Toronto class of 1893. On May 1 of that year, 14 more engineers were obligated at the University of Toronto. Since then, most Canadian professional engineers have gone through the same ritual in their local chapters, called Kipling camps, which are associated with various Canadian universities.

Henry Petroski, a professor of civil engineering and history at Duke University, notes in his book “To Forgive Design: Understanding Failure” that Kipling’s poem “The Sons of Martha” is often read as part of the ritual. Sometimes, however, inductees read Kipling’s “Hymn of Breaking Strain” instead, which graphically depicts the disastrous outcomes of engineering mistakes. The first stanza of that poem reads:

The careful text-books measure

(Let all who build beware!)

The load, the shock, the pressure

Material can bear.

So, when the buckled girder

Lets down the grinding span,

The blame of loss, or murder,

Is laid upon the man.

Not on the Stuff—the Man!

As if to underscore these concepts, a persistent myth holds that the original iron rings were made from the beams or bolts of the Quebec Bridge, which failed twice during construction. The bridge spans the St. Lawrence River upriver from Quebec City, and its 1,800-foot main span made it, at the time of its construction, the world’s longest cantilever bridge. Due to engineering errors and poor oversight, the bridge’s own weight exceeded its carrying capacity. Moreover, when the bridge’s beams began to warp under stress, engineers downplayed the danger, saying the beams had probably been warped before they were installed. On August 29, 1907, the bridge collapsed, killing 75 of the 86 workers on it. A second collapse occurred in 1916 when lifting equipment failed, killing 13 more workers.

The ring myth, however, cannot be true. The bridge was built of steel, not wrought iron, so the original iron rings could not have come from it. Today the rings are made of stainless steel, because iron corrodes and stains engineers’ fingers black.

On August 14, 2018, the Morandi Bridge over the Polcevera River in Genoa, Italy, collapsed from structural failure, killing 43 people.

The Seven Wardens decided to restrict the ritual to engineers trained in Canada. They copyrighted the Obligation in Canada and the United States in 1935. Although the ritual is not a requirement for professional licensing, just as the Hippocratic Oath is not part of medical licensing, it remains a long-standing tradition.

The American Obligation of the Engineer has its own creation story, albeit a very different one. The Order of the Engineer (OOE) was established in the United States in 1970, during the era of anti-war protests, the Apollo missions and the first Earth Day. On May 4, 1970, the National Guard shot into a crowd of protesters at Kent State University, killing four people. The two authors of the American obligation, Cleveland State University (CSU) engineering professor John Janssen and his wife, Susan, reflected these historical events in the oath they wrote. Their version of the oath binds engineers to “practice integrity and fair dealing.” It also notes that their “skill carries with it the obligation to serve humanity by making the best use of the Earth’s precious wealth.” As Petroski explains in his book, “campus antiwar protestors around the country tended to view engineers as complicit in weapons proliferation [which] prompted some [CSU] engineering student leaders to look for a means of asserting some more positive values.”

Kip A. Wedel, associate professor of history and politics at Bethel College, wrote in his book, “The Obligation: A History of the Order of the Engineer,” that the ceremony was not a direct response to the Kent State shootings—it was already scheduled when the shootings happened. Yet, engineering students found the ceremony a positive action they could take in contrast to the overall turmoil. The first American ritual took place on June 4, 1970, at CSU. In total, 170 students, faculty members, and practicing engineers took the obligation. This established CSU as the first Link of the Order, as the OOE designates its local chapters. For their first ceremony, the CSU students fabricated smooth, unfaceted rings from stainless steel pipe. Later they were replaced by factory-made rings. According to Paula Ostaff, OOE’s Executive Director, about 20,000 eligible students and alumni obligate themselves yearly.

Societies hope that every engineer is imbued with a strong ethical sense and that their pledges are never far from mind. For some, the rings they wear serve as a daily reminder: every document they sign off on is touched by a physical token of their commitment.

These ethical and responsible engineering practices are especially salient today, when one in three American bridges needs repair or replacement, some have already collapsed, and engineers are working on projects related to the bipartisan infrastructure bill President Biden signed into law in 2021. Canada has committed $33 billion to its Investing in Canada Infrastructure Program. At the heart of these grand projects are many thousands of professional engineers, collectively working millions of hours. The professional vows they took aim to ensure that the homes, bridges and airplanes they build will work as expected.

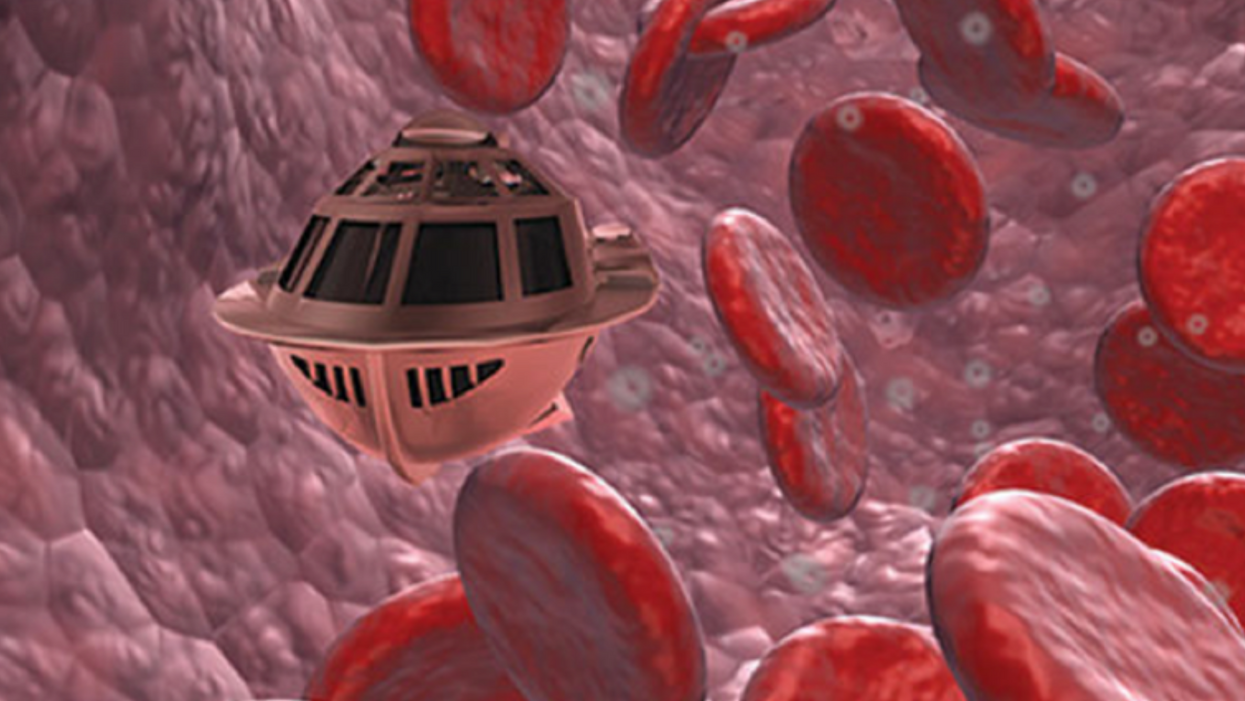

A movie still from the 1966 film "Fantastic Voyage"

In the 1966 movie "Fantastic Voyage," actress Raquel Welch and her crewmates were shrunk, along with their submarine, to the size of a cell in order to eliminate a blood clot in a scientist's brain. Now, 55 years later, the scenario is becoming closer to reality.

California-based startup Bionaut Labs has developed a nanobot about the size of a grain of rice that's designed to transport medication to the exact location in the body where it's needed. If you think about it, the conventional way to deliver medicine makes little sense: A painkiller affects the entire body instead of just the arm that's hurting, and chemotherapy is flushed through all the veins instead of precisely targeting the tumor.

"Chemotherapy is delivered systemically," Bionaut founder and CEO Michael Shpigelmacher says. "Often only a small percentage arrives at the location where it is actually needed."

But what if it were possible to send a tiny robot through the body to attack a tumor or deliver a drug at exactly the right location?

Several startups and academic institutes worldwide are working to develop such a solution, but Bionaut Labs seems the furthest along in advancing its invention. "You can think of the Bionaut as a tiny screw that moves through the veins as if steered by an invisible screwdriver until it arrives at the tumor," Shpigelmacher explains. Via Zoom, he shares the screen of an X-ray machine in his Culver City lab to demonstrate how the half-transparent, yellowish device winds its way along the spine in the body. The nanobot contains a tiny but powerful magnet. The "invisible screwdriver" is an external magnetic field that rotates that magnet inside the device and gets it to move and change direction.

The current model has a diameter of less than a millimeter. Shpigelmacher's engineers could build the miniature vehicle even smaller, but the current size has the advantage of being big enough to see with the naked eye. It can also deliver more medicine than a tinier version could. In the Zoom demonstration, the microrobot is injected into the spine, not unlike an epidural, and pulled along the spine by an external magnet until the Bionaut reaches the brainstem. Depending on which organ it needs to reach, it could be inserted elsewhere, for instance through a catheter.

Imagine moving a screw through a steak with a magnet — that's essentially how the device works. But of course, the Bionaut is considerably different from an ordinary screw: "At the right location, we give a magnetic signal, and it unloads its medicine package," Shpigelmacher says.

To start, Bionaut Labs wants to use its device to treat Parkinson's disease and brain stem gliomas, a type of cancer that largely affects children and teenagers. About 300 to 400 young people a year are diagnosed with this type of tumor. Radiation and brain surgery risk damaging sensitive brain tissue, and chemotherapy often doesn't work. Most children with these tumors live less than 18 months. A nanobot delivering targeted chemotherapy could be a game changer. "These patients really don't have any other hope," Shpigelmacher says.

Of course, the main challenge in developing such a device is guaranteeing that it's safe. Because the tissue is so sensitive, any mistake could have disastrous results. In recent years, Bionaut has tested its technology in dozens of healthy sheep and pigs with no major adverse effects. Sheep make a good stand-in for humans because their brains and spines are similar to ours.

The Bionaut device is about the size of a grain of rice.

"As the Bionaut moves through brain tissue, it creates a transient track that heals within a few weeks," Shpigelmacher says. The company is hoping to be the first to test a nanobot in humans. In December 2022, it announced that a recent round of funding drew $43.2 million, for a total of $63.2 million, enabling more research and, if all goes smoothly, human clinical trials by early next year.

Once the technique has been perfected, further applications could include addressing other kinds of brain disorders that are considered incurable now, such as Alzheimer's or Huntington's disease. "Microrobots could serve as a bridgehead, opening the gateway to the brain and facilitating precise access of deep brain structure – either to deliver medication, take cell samples or stimulate specific brain regions," Shpigelmacher says.

Robot-assisted hybrid surgery with artificial intelligence is already used in state-of-the-art surgery centers, and many medical experts believe that nanorobotics will be the instrument of the future. In 2016, three scientists were awarded the Nobel Prize in Chemistry for developing "the world's smallest machines," including nano "elevators" and minuscule motors. Since then, research has progressed to the point where practical devices are moving closer to real-world use.

Bionaut's technology was initially developed by a research team led by Peer Fischer, head of the independent Micro Nano and Molecular Systems Lab at the Max Planck Institute for Intelligent Systems in Stuttgart, Germany. Fischer is considered a pioneer in the research of nano systems, which he began at Harvard University more than a decade ago. He and his team are advising Bionaut Labs and have licensed their technology to the company.

"The hope is that we can develop a vehicle to transport medication deep into the body," says Max Planck scientist Tian Qiu, who leads the cooperation with Bionaut Labs. He agrees with Shpigelmacher that the Bionaut's size is perfect for transporting medication loads and is researching potential applications for even smaller nanorobots, especially in the eye, where the tissue is extremely sensitive. "Nanorobots can sneak through very fine tissue without causing damage."

In "Fantastic Voyage," Raquel Welch's adventures inside the body of a dissident scientist take her through his veins into his brain, but her shrunken submarine is attacked by antibodies; she has to flee along the nerves into the scientist's eye, where she escapes to freedom on a teardrop. In reality, the exit in the lab is much more mundane: the Bionaut simply leaves the body through the same port where it entered. But apart from the dramatization, "Fantastic Voyage" was almost prophetic, or, as Shpigelmacher says, "Science fiction becomes science reality."

This article was first published by Leaps.org on April 12, 2021.