Don’t fear AI, fear power-hungry humans

Story by Big Think

We live in strange times, when the technology we depend on the most is also that which we fear the most. We celebrate cutting-edge achievements even as we recoil in fear at how they could be used to hurt us. From genetic engineering and AI to nuclear technology and nanobots, the list of awe-inspiring, fast-developing technologies is long.

However, this fear of the machine is not as new as it may seem. Technology has a longstanding alliance with power and the state. The dark side of human history can be told as a series of wars whose victors are often those with the most advanced technology. (There are exceptions, of course.) Science, and its technological offspring, follows the money.

This fear of the machine seems to be misplaced. The machine has no intent: only its maker does. The fear of the machine is, in essence, the fear we have of each other — of what we are capable of doing to one another.

How AI changes things

Sure, you would reply, but AI changes everything. With artificial intelligence, the machine itself will develop some sort of autonomy, however ill-defined. It will have a will of its own. And this will, if it reflects anything that seems human, will not be benevolent. With AI, the claim goes, the machine will somehow know what it must do to get rid of us. It will threaten us as a species.

Well, this fear is also not new. Mary Shelley wrote Frankenstein in 1818 to warn us of what science could do if it served the wrong calling. In the case of her novel, Dr. Frankenstein’s call was to win the battle against death — to reverse the course of nature. Granted, any cure of an illness interferes with the normal workings of nature, yet we are justly proud of having developed cures for our ailments, prolonging life and increasing its quality. Science can achieve nothing more noble. What messes things up is when the pursuit of good is confused with that of power. In this distorted scale, the more powerful the better. The ultimate goal is to be as powerful as gods — masters of time, of life and death.

Back to AI, there is no doubt the technology will help us tremendously. We will have better medical diagnostics, better traffic control, better bridge designs, and better pedagogical animations to teach in the classroom and virtually. But we will also have better winnings in the stock market, better war strategies, and better soldiers and remote ways of killing. This grants real power to those who control the best technologies. It increases the take of the winners of wars — those fought with weapons, and those fought with money.

A story as old as civilization

The question is how to move forward. This is where things get interesting and complicated. We hear over and over again that there is an urgent need for safeguards, for controls and legislation to deal with the AI revolution. Great. But if these machines are essentially functioning in a semi-black box of self-teaching neural nets, how exactly are we going to make safeguards that are sure to remain effective? How are we to ensure that the AI, with its unlimited ability to gather data, will not come up with new ways to bypass our safeguards, the same way that people break into safes?

The second question is that of global control. As I wrote before, overseeing new technology is complex. Should countries create a World Mind Organization that controls the technologies that develop AI? If so, how do we organize this planet-wide governing board? Who should be a part of its governing structure? What mechanisms will ensure that governments and private companies do not secretly break the rules, especially when to do so would put the most advanced weapons in the hands of the rule breakers? They will need those, after all, if other actors break the rules as well.

As before, the countries with the best scientists and engineers will have a great advantage. A new international détente will emerge in the mold of the nuclear détente of the Cold War. Again, we will fear destructive technology falling into the wrong hands. This can happen easily. AI machines will not need to be built at an industrial scale, as nuclear capabilities were, and AI-based terrorism will be a force to reckon with.

So here we are, afraid of our own technology all over again.

What is missing from this picture? It continues to illustrate the same destructive pattern of greed and power that has defined so much of our civilization. The failure it shows is moral, and only we can change it. We define civilization by the accumulation of wealth, and this worldview is killing us. The project of civilization we invented has become self-cannibalizing. As long as we do not see this, and we keep on following the same route we have trodden for the past 10,000 years, it will be very hard to legislate the technology to come and to ensure such legislation is followed. Unless, of course, AI helps us become better humans, perhaps by teaching us how stupid we have been for so long. This sounds far-fetched, given who this AI will be serving. But one can always hope.

This article originally appeared on Big Think, home of the brightest minds and biggest ideas of all time.

How Excessive Regulation Helped Ignite COVID-19's Rampant Spread

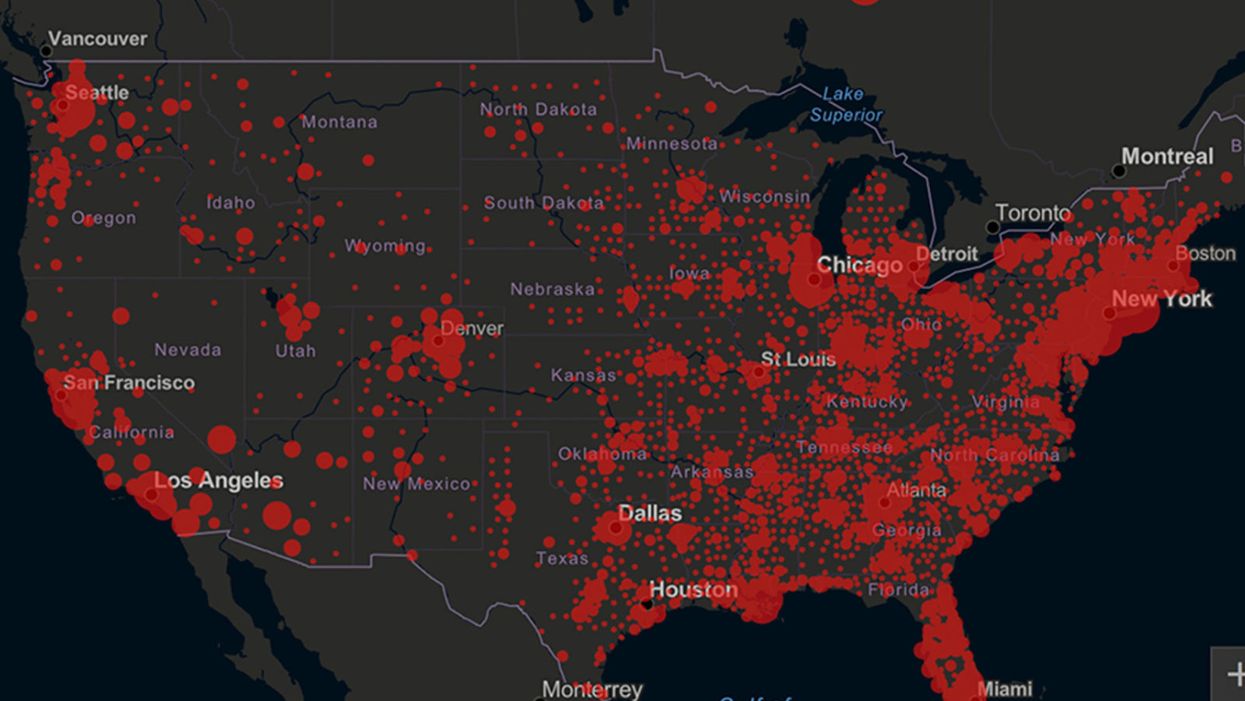

Screenshot of an interactive map of coronavirus cases across the United States, current as of 1:45 p.m. Pacific time on Tuesday, March 31st. Full map accessible at https://coronavirus.jhu.edu/map.html

When historians of the future look back at the 2020 pandemic, the heroic work of Helen Y. Chu, a flu researcher at the University of Washington, will be worthy of recognition.

In late January, Chu was testing nasal swabs for the Seattle Flu Study to monitor influenza spread when she learned of the first case of COVID-19 in Washington state. She deemed it a pressing public health matter to document if and how the illness was spreading locally, so that early containment efforts could succeed. So she sought regulatory approval to adapt the Flu Study to test for the coronavirus, but the federal government denied the request because the original project was funded to study only influenza.

Aware of the urgency, Chu's team bravely defied the order and conducted the testing anyway. Soon they identified a local case in a teenager without any travel history, followed by others. Still, the government tried to shutter their efforts until the outbreak grew dangerous enough to command attention.

Needless testing delays, prompted by excessive regulatory interference, eliminated any chance of curbing the pandemic in its initial stages. Even after Chu went out on a limb to sound alarms, a heavy-handed bureaucracy crushed the nation's ability to roll out early and widespread testing across the country. The Centers for Disease Control and Prevention infamously blundered its own test, while also impeding state and private labs from coming on board, fueling a massive shortage.

The long holdup created "a backlog of testing that needed to be done," says Amesh Adalja, an infectious disease specialist who is a senior scholar at the Johns Hopkins University Center for Health Security.

In a public health crisis, "the ideal situation" would allow the government's test to be "supplanted by private laboratories" without such "a lag in that transition," Adalja says. Only after the eventual release of CDC's test could private industry "begin in earnest" to develop its own versions under the Food and Drug Administration's emergency use authorization.

In a statement, CDC acknowledged that "this process has not gone as smoothly as we would have liked, but there is currently no backlog for testing at CDC."

Now, universities and corporations are in a race against time, playing catch-up as the virus continues its relentless spread, also afflicting many health care workers on the front lines.

Hospitals are attempting to add the novel coronavirus to the testing panel of their existing diagnostic machines, which would reduce the results processing time from 48 hours to as little as four hours. Meanwhile, at least four companies announced plans to deliver at-home collection tests to help meet the demand – before a startling injunction by the FDA halted their plans.

Everlywell, an Austin, Texas-based digital health company, had been set to launch online sales of at-home collection kits directly to consumers last week. Scaling up in a matter of days to an initial supply of 30,000 tests, Everlywell collaborated with multiple laboratories where consumers could ship their nasal swab samples overnight, projecting capacity to screen a quarter-million individuals on a weekly basis, says Frank Ong, chief medical and scientific officer.

Secure digital results would have been available online within 48 hours of a sample's arrival at the lab, as well as a telehealth consultation with an independent, board-certified doctor if someone tested positive, for an inclusive $135 cost. The test has a less than 3 percent false-negative rate, Ong says, and in the event of an inadequate self-swab, the lab would not report a conclusive finding. "Home-testing accessibility," he says, "is key to preventing further spread of the COVID-19 pandemic."

But on March 20, the FDA announced restrictions on home collection tests due to concerns about accuracy. The agency did note "the public health value in expanding the availability of COVID-19 testing through safe and accurate tests that may include home collection," while adding that "we are actively working with test developers in this space."

After the restrictions were announced, Everlywell decided to allocate its initial supply of COVID-19 collection kits to hospitals, clinics, nursing homes, and other qualifying health care companies that can commit to no-cost screening of frontline workers and high-risk symptomatic patients. For now, no consumers can order a home-collection test.

Currently, the U.S. has ramped up to testing an estimated 100,000 people a day, according to Stat News. But 150,000 or more Americans should be tested every day, says Ashish Jha, professor and director of the Harvard Global Health Institute. Due to the dearth of tests, many sick people who suspect they are infected still cannot get confirmation unless they need to be hospitalized.

To give a concrete sense of how far behind we are in testing, consider Palm Beach County, Fla. The state's only drive-thru test center just opened there, requiring an appointment. The center aims to test 750 people per day, but more than 330,000 people have already called to try to book a slot.

"This is such a rapidly moving infection that losing a few days is bad, and losing a couple of weeks is terrible," says Jha, a practicing general internist. "Losing two months is close to disastrous, and that's what we did."

At this point, it will take a long time to fully ramp up. "We are blindfolded," he adds, "and I'd like to take the blindfolds off so we can fight this battle with our eyes wide open."

Better late than never: Yesterday, FDA Commissioner Stephen Hahn said in a statement that the agency has worked with more than 230 test developers and has approved 20 tests since January. An especially notable one was authorized last Friday – 67 days since the country's first known case in Washington state. It's a rapid point-of-care test from medical-device firm Abbott that provides positive results in five minutes and negative results in 13 minutes. Abbott will send 50,000 tests a day to urgent care settings. The first tests are expected to ship tomorrow.

Your Privacy vs. the Public's Health: High-Tech Tracking to Fight COVID-19 Evokes Orwell

Governments around the world are using technology to track their citizens to contain COVID-19.

The COVID-19 pandemic has placed public health and personal privacy on a collision course, as smartphone technology has completely rewritten the book on contact tracing.

The gold standard – patient interviews and detective work – had been in place for more than a century. It's been all but replaced by GPS data in smartphones, which allows contact tracing to occur not only virtually in real time, but with vastly more precision.

China has gone the furthest in using such tech to monitor and prevent the spread of the coronavirus. It developed an app called Health Code to determine which of its citizens are infected or at risk of becoming infected. It has assigned each individual a color code – red, yellow or green – and restricts their movement depending on their assignment. It has also leveraged its millions of public video cameras in conjunction with facial recognition tech to identify people in public who are not wearing masks.

It's not surprising that an autocratic regime like China would adopt such measures, but democracies such as Israel have taken a similar path. The national security agency Shin Bet this week began analyzing all personal cellphone data under emergency measures approved by the government. It texts individuals when it determines they have been in contact with someone who had the coronavirus. In Spain and China, police have sent drones aloft to search for people violating stay-at-home orders. Commands to disperse can be issued through audio systems built into the aircraft. In the U.S., efforts are underway to lift federal restrictions on drones so that police can use them to prevent people from gathering.

The chief executive of a drone manufacturer in the U.S. aptly summed up the situation in an interview with the Financial Times: "It seems a little Orwellian, but this could save lives."

Epidemics and how they're surveilled often pose thorny dilemmas, according to Craig Klugman, a bioethicist and professor of health sciences at DePaul University in Chicago. "There's always a moral issue to contact tracing," he said, adding that the issue doesn't change by nation, only in the way it's resolved.

In China, there's little to no expectation for privacy, so their decision to take the most extreme measures makes sense to Klugman. "In China, the community comes first. In the U.S., individual rights come first," he said.

As the U.S. has scrambled to develop testing kits to identify potential patients and to manufacture ventilators to treat them, individual rights have received little scrutiny. However, that could change in the coming weeks.

The American approach is also leaning toward using smartphone apps, but in a way that may preserve the privacy of users. Researchers at MIT have released a prototype known as Private Kit: Safe Paths. Patients diagnosed with the coronavirus can use the app to disclose their location trail for the prior 28 days to other users without releasing their specific identity. They also have the option of sharing the data with public health officials. But such an app would only be effective if there is a significant number of users.

Singapore is offering a similar app to its citizens known as TraceTogether, which uses both GPS and Bluetooth pings among users to trace potential encounters. It's being offered on a voluntary basis.

The Electronic Frontier Foundation, the leading nonprofit organization defending civil liberties in the digital world, said it is monitoring how these apps are developed and deployed. "Governments around the world are demanding new dragnet location surveillance powers to contain the COVID-19 outbreak," it said in a statement. "But before the public allows their governments to implement such systems, governments must explain to the public how these systems would be effective in stopping the spread of COVID-19. There's no questioning the need for far-reaching public health measures to meet this urgent challenge, but those measures must be scientifically rigorous, and based on the expertise of public health professionals."

Andrew Geronimo, director of the intellectual property venture clinic at the Case Western Reserve University School of Law, said that the U.S. government is currently in talks with Facebook, Google and other tech companies about using deidentified location data from smartphones to better monitor the progress of the outbreak. He was hesitant to endorse such a step.

"These companies may say that all of this data is anonymized," he said, "but studies have shown that it is difficult to fully anonymize data sets that contain so much information about us."

Beyond the technical issues, social attitudes may mount another challenge. Epic events such as 9/11 tend to loosen vigilance toward protecting privacy, according to Klugman and Geronimo. And as more people are sickened and hospitalized in the U.S. with COVID-19, Klugman believes more Americans will be willing to allow themselves to be tracked. "If that happens, there needs to be a time limitation," he said.

However, even if time limits are put in place, Geronimo believes such tracking would lead to an even greater rollback of privacy during the next crisis.

"Once certain privacy barriers have been breached, it can be difficult to roll them back again," he warned. "And the prior incidents could always be used as a precedent – or as proof of concept."