AI and you: Is the promise of personalized nutrition apps worth the hype?

Personalized nutrition apps could provide valuable data to people trying to eat healthier, though more research must be done to show effectiveness.

As a type 2 diabetic, Michael Snyder has long been interested in how blood sugar levels vary from one person to another in response to the same food, and whether a more personalized approach to nutrition could help tackle the rapidly rising rates of diabetes and obesity in much of the western world.

Eight years ago, Snyder, who directs the Center for Genomics and Personalized Medicine at Stanford University, decided to put his theories to the test. In the 2000s continuous glucose monitoring, or CGM, had begun to revolutionize the lives of diabetics, both type 1 and type 2. Using spherical sensors which sit on the upper arm or abdomen – with tiny wires that pierce the skin – the technology allowed patients to gain real-time updates on their blood sugar levels, transmitted directly to their phone.

It gave Snyder an idea for his research at Stanford. Applying the same technology to a group of apparently healthy people, and looking for ‘spikes’ or sudden surges in blood sugar known as hyperglycemia, could provide a means of observing how their bodies reacted to an array of foods.

“We discovered that different foods spike people differently,” he says. “Some people spike to pasta, others to bread, others to bananas, and so on. It’s very personalized and our feeling was that building programs around these devices could be extremely powerful for better managing people’s glucose.”
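To make the idea of a food-specific "spike" concrete, here is a minimal sketch in Python. It is not the method used by either research group: the readings, the person and food labels, and the 140 mg/dL cut-off are all assumptions chosen purely for illustration.

```python
# Hypothetical CGM readings in mg/dL, sampled at regular intervals after a meal.
# Every number below is invented for illustration only.
SPIKE_THRESHOLD_MG_DL = 140  # assumed cut-off, not taken from the article


def peak_rise(readings):
    """Return the largest rise above the first (pre-meal) reading."""
    baseline = readings[0]
    return max(readings) - baseline


def spikes(readings, threshold=SPIKE_THRESHOLD_MG_DL):
    """Return True if any reading exceeds the threshold."""
    return any(value > threshold for value in readings)


# Two hypothetical people eating the same two foods.
responses = {
    ("person_a", "pasta"): [90, 120, 155, 130, 100],
    ("person_a", "banana"): [92, 105, 110, 100, 95],
    ("person_b", "pasta"): [88, 100, 112, 104, 92],
    ("person_b", "banana"): [91, 125, 150, 128, 99],
}

for (person, food), readings in responses.items():
    label = "spike" if spikes(readings) else "no spike"
    print(person, food, label, f"(peak rise {peak_rise(readings)} mg/dL)")
```

In this invented data set, one person spikes after pasta but not banana, and the other shows the reverse, which is the kind of individual pattern Snyder describes.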

Unbeknown to Snyder at the time, thousands of miles away, a group of Israeli scientists at the Weizmann Institute of Science were doing exactly the same experiments. In 2015, they published a landmark paper which used CGM to track the blood sugar levels of 800 people over several days, showing that the biological response to identical foods can vary wildly. Like Snyder, they theorized that giving people a greater understanding of their own glucose responses, so they spend more time in the normal range, may reduce the prevalence of type 2 diabetes.

“At the moment 33 percent of the U.S. population is pre-diabetic, and 70 percent of those pre-diabetics will become diabetic,” says Snyder. “Those numbers are going up, so it’s pretty clear we need to do something about it.”

Fast forward to 2022, and both teams have converted their ideas into subscription-based dietary apps which use artificial intelligence to offer data-informed nutritional and lifestyle recommendations. Snyder’s spinoff, January AI, combines CGM information with heart rate, sleep, and activity data to advise on foods to avoid and the best times to exercise. DayTwo, a start-up which draws on the findings of the Weizmann Institute of Science, obtains microbiome information by sequencing stool samples, and combines this with blood glucose data to rate ‘good’ and ‘bad’ foods for a particular person.

“CGMs can be used to devise personalized diets,” says Eran Elinav, an immunology professor and microbiota researcher at the Weizmann Institute of Science in addition to serving as a scientific consultant for DayTwo. “However, this process can be cumbersome. Therefore, in our lab we created an algorithm, based on data acquired from a big cohort of people, which can accurately predict post-meal glucose responses on a personal basis.”

The commercial potential of such apps is clear. DayTwo, which markets its product to corporate employers and health insurers rather than individual consumers, recently raised $37 million in funding. But the underlying science continues to generate intriguing findings.

Last year, Elinav and colleagues published a study on 225 individuals with pre-diabetes which found that they achieved better blood sugar control when they followed a personalized diet based on DayTwo’s recommendations, compared to a Mediterranean diet. The journal Cell just released a new paper from Snyder’s group which shows that different types of fibre benefit people in different ways.

“The idea is you hear different fibres are good for you,” says Snyder. “But if you look at fibres they’re all over the map—it’s like saying all animals are the same. The responses are very individual. For a lot of people [a type of fibre called] arabinoxylan clearly reduced cholesterol while the fibre inulin had no effect. But in some people, it was the complete opposite.”

Eight years ago, Stanford's Michael Snyder began studying how continuous glucose monitors could be used by patients to gain real-time updates on their blood sugar levels, transmitted directly to their phone.

The Snyder Lab, Stanford Medicine

Because of studies like these, interest in precision nutrition approaches has exploded in recent years. In January, the National Institutes of Health announced that it is spending $170 million on a five-year, multi-center initiative to develop algorithms that draw on a whole range of data sources, from blood sugar to sleep, exercise, stress, microbiome and even genomic information, to help predict which diets are most suitable for a particular individual.

“There's so many different factors which influence what you put into your mouth but also what happens to different types of nutrients and how that ultimately affects your health, which means you can’t have a one-size-fits-all set of nutritional guidelines for everyone,” says Bruce Y. Lee, professor of health policy and management at the City University of New York Graduate School of Public Health.

With the falling costs of genomic sequencing, other precision nutrition clinical trials are choosing to look at whether our genomes alone can yield key information about what our diets should look like, an emerging field of research known as nutrigenomics.

The ASPIRE-DNA clinical trial at Imperial College London is aiming to see whether particular genetic variants can be used to classify individuals into two groups, those who are more glucose sensitive to fat and those who are more sensitive to carbohydrates. By following a tailored diet based on these sensitivities, the trial aims to see whether it can prevent people with pre-diabetes from developing the disease.

But while much hope is riding on these trials, even precision nutrition advocates caution that the field remains in the very earliest of stages. Lars-Oliver Klotz, professor of nutrigenomics at Friedrich-Schiller-University in Jena, Germany, says that while the overall goal is to identify means of avoiding nutrition-related diseases, genomic data alone is unlikely to be sufficient to prevent obesity and type 2 diabetes.

“Genome data is rather simple to acquire these days as sequencing techniques have dramatically advanced in recent years,” he says. “However, the predictive value of just genome sequencing is too low in the case of obesity and prediabetes.”

Others say that while genomic data can yield useful information in terms of how different people metabolize different types of fat and specific nutrients such as B vitamins, there is a need for more research before it can be utilized in an algorithm for making dietary recommendations.

“I think it’s a little early,” says Eileen Gibney, a professor at University College Dublin. “We’ve identified a limited number of gene-nutrient interactions so far, but we need more randomized control trials of people with different genetic profiles on the same diet, to see whether they respond differently, and if that can be explained by their genetic differences.”

Some start-ups have already come unstuck for promising too much, or pushing recommendations which are not based on scientifically rigorous trials. The world of precision nutrition apps was dubbed a ‘Wild West’ by some commentators after the founders of uBiome, a start-up which offered nutritional recommendations based on information obtained from sequencing stool samples, were charged with fraud last year. The weight-loss app Noom, which was valued at $3.7 billion in May 2021, has been criticized on Twitter by a number of users who claimed that its recommendations led them to develop eating disorders.

With precision nutrition apps marketing their technology at healthy individuals, question marks have also been raised about the value which can be gained through non-diabetics monitoring their blood sugar through CGM. While some small studies have found that wearing a CGM can make overweight or obese individuals more motivated to exercise, there is still a lack of conclusive evidence showing that this translates to improved health.

However, independent researchers remain intrigued by the technology, and say that the wealth of data generated through such apps could be used to help further stratify the different types of people who become at risk of developing type 2 diabetes.

“CGM not only enables a longer sampling time for capturing glucose levels, but will also capture lifestyle factors,” says Robert Wagner, a diabetes researcher at University Hospital Düsseldorf. “It is probable that it can be used to identify many clusters of prediabetic metabolism and predict the risk of diabetes and its complications, but maybe also specific cardiometabolic risk constellations. However, we still don’t know which forms of diabetes can be prevented by such approaches and how feasible and long-lasting such self-feedback dietary modifications are.”

Snyder himself has now been wearing a CGM for eight years, and he credits the insights it provides with helping him to manage his own diabetes. “My CGM still gives me novel insights into what foods and behaviors affect my glucose levels,” he says.

He is now looking to run clinical trials with his group at Stanford to see whether following a precision nutrition approach based on CGM and microbiome data, combined with other health information, can be used to reverse signs of pre-diabetes. If it proves successful, January AI may look to incorporate microbiome data in future.

“Ultimately, what I want to do is be able to take people’s poop samples, maybe a blood draw, and say, ‘Alright, based on these parameters, this is what I think is going to spike you,’ and then have a CGM to test that out,” he says. “Getting very predictive about this, so right from the get-go, you can have people better manage their health and then use the glucose monitor to help follow that.”

DNA- and RNA-based electronic implants may revolutionize healthcare

The test tubes contain tiny DNA/enzyme-based circuits, which comprise TRUMPET, a new type of electronic device, smaller than a cell.

Implantable electronic devices can significantly improve patients’ quality of life. A pacemaker can encourage the heart to beat more regularly. A neural implant, usually placed at the back of the skull, can help brain function and encourage higher neural activity. Current research on neural implants finds them helpful to patients with Parkinson’s disease, vision loss, hearing loss, and other nerve damage problems. Several of these implants have already been approved by the FDA for human use, and others, such as Elon Musk’s Neuralink, have been cleared for human clinical trials.

Yet, pacemakers, neural implants, and other such electronic devices are not without problems. They need a constant supply of electricity, which is limited by batteries that have to be replaced. They also cause scarring. “The problem with doing this with electronics is that scar tissue forms,” explains Kate Adamala, an assistant professor of cell biology at the University of Minnesota Twin Cities. “Anytime you have something hard interacting with something soft [like muscle, skin, or tissue], the soft thing will scar. That's why there are no long-term neural implants right now.” To overcome these challenges, scientists are turning to biocomputing processes that use organic materials like DNA and RNA. Other promised benefits include “diagnostics and possibly therapeutic action, operating as nanorobots in living organisms,” writes Evgeny Katz, a professor of bioelectronics at Clarkson University, in his book DNA- And RNA-Based Computing Systems.

Adamala’s research focuses on developing such biocomputing systems using DNA, RNA, proteins, and lipids. Using these molecules in the biocomputing systems allows the latter to be biocompatible with the human body, resulting in a natural healing process. In a recent Nature Communications study, Adamala and her team created a new biocomputing platform called TRUMPET (Transcriptional RNA Universal Multi-Purpose GatE PlaTform) which acts like a DNA-powered computer chip. “These biological systems can heal if you design them correctly,” adds Adamala. “So you can imagine a computer that will eventually heal itself.”

The basics of biocomputing

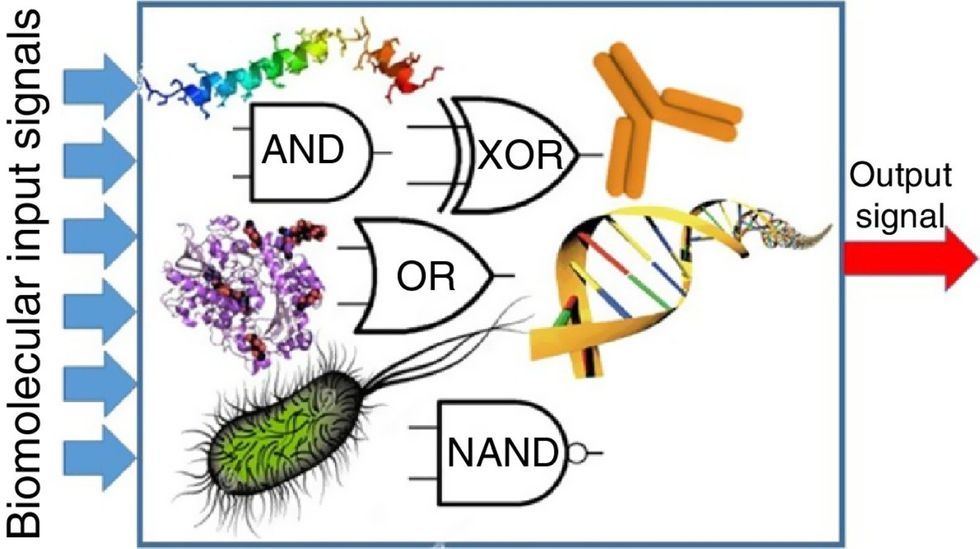

Biocomputing and regular computing have many similarities. Like regular computing, biocomputing works by running information through a series of gates, usually logic gates. A logic gate works as a fork in the road for an electronic circuit: depending on its inputs, the signal will travel one way or another, giving one of two outputs. An example is the AND gate, which has two inputs (A and B) and two possible outputs. If both A and B are 1, the AND gate output will be 1. If only one of A and B is 1, the output will be 0. If both A and B are 0, the output will also be 0. While a computer receives these inputs in binary code or "bits," such as a 0 or 1, biocomputing uses DNA strands as inputs, whether double or single-stranded, and often uses fluorescent RNA as an output.
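For readers who prefer to see the AND gate spelled out, the short Python sketch below prints its full truth table. The function name `and_gate` is simply an illustrative choice.

```python
def and_gate(a: int, b: int) -> int:
    """Return 1 only when both inputs are 1, otherwise 0."""
    return 1 if (a == 1 and b == 1) else 0


# Print every combination of the two binary inputs and the resulting output.
for a in (0, 1):
    for b in (0, 1):
        print(f"A={a} B={b} -> output {and_gate(a, b)}")
```

Only the last row, where both A and B are 1, produces an output of 1.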

If the DNA is double-stranded, the system “digests” the DNA or destroys it, which results in non-fluorescence or “0” output. Conversely, if the DNA is single-stranded, it won’t be digested and instead will be copied by several enzymes in the biocomputing system, resulting in fluorescent RNA or a “1” output. The output of this type of binary system can also be something other than the presence or absence of fluorescence. For example, a “1” output might be the production of the hormone insulin, while a “0” may be that no insulin is produced. “This kind of synergy between biology and computation is the essence of biocomputing,” says Stephanie Forrest, a professor and the director of the Biodesign Center for Biocomputing, Security and Society at Arizona State University.
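The strand-based rule described here can be modelled in a few lines of Python. This is only a toy model of the input-to-output mapping, not a simulation of the chemistry; the names `Strand`, `strand_gate` and `describe_output` are hypothetical and chosen for illustration.

```python
from enum import Enum


class Strand(Enum):
    """The two forms in which the DNA input can arrive at the gate."""
    SINGLE = "single-stranded"
    DOUBLE = "double-stranded"


def strand_gate(dna: Strand) -> int:
    """Toy model of the gate: double-stranded DNA is digested (0),
    single-stranded DNA is copied into fluorescent RNA (1)."""
    return 1 if dna is Strand.SINGLE else 0


def describe_output(bit: int,
                    on: str = "fluorescent RNA produced",
                    off: str = "no fluorescence") -> str:
    """Translate the binary output into whatever the system is built to do."""
    return on if bit == 1 else off


for dna in Strand:
    bit = strand_gate(dna)
    print(dna.value, "->", bit, "->", describe_output(bit))

# The same binary output could be wired to a different action, such as insulin.
print(describe_output(strand_gate(Strand.SINGLE),
                      on="insulin produced", off="no insulin produced"))
```

Keeping the gate logic separate from the description of the output mirrors the point in the text: the 1 or 0 stays the same, while what it triggers can change.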

Biocomputing circuits are made of DNA, RNA, proteins and even bacteria.

Evgeny Katz

The TRUMPET’s promise

Depending on whether the biocomputing system is placed directly inside a cell within the human body, or run in a test tube, different environmental factors play a role. When an output is produced inside a cell, the cell's natural processes can amplify this output (for example, a specific protein or DNA strand), creating a strong signal. However, these cells can also be very leaky. “You want the cells to do the thing you ask them to do before they finish whatever their business is, which is to grow, replicate, metabolize,” Adamala explains. “However, often the gate may be triggered without the right inputs, creating a false positive signal. So that's why natural logic gates are often leaky.” While biocomputing outside a cell in a test tube can allow for tighter control over the logic gates, the outputs or signals cannot be amplified by a cell and are less potent.

TRUMPET, which is smaller than a cell, taps into both cellular and non-cellular biocomputing benefits. “At its core, it is a nonliving logic gate system,” Adamala states, “It's a DNA-based logic gate system. But because we use enzymes, and the readout is enzymatic [where an enzyme replicates the fluorescent RNA], we end up with signal amplification." This readout means that the output from the TRUMPET system, a fluorescent RNA strand, can be replicated by nearby enzymes in the platform, making the light signal stronger. "So it combines the best of both worlds,” Adamala adds.

The TRUMPET biocomputing process is relatively straightforward. “If the DNA [input] shows up as single-stranded, it will not be digested [by the logic gate], and you get this nice fluorescent output as the RNA is made from the single-stranded DNA, and that's a 1,” Adamala explains. “And if the DNA input is double-stranded, it gets digested by the enzymes in the logic gate, and there is no RNA created from the DNA, so there is no fluorescence, and the output is 0.” In the story's lead image above, a tube that is "lit" with a purple color represents a binary 1 signal for computing, and a tube that is "off" represents a 0.

While still in the research stage, TRUMPET and other biocomputing systems promise significant benefits to personalized healthcare and medicine. These organic-based systems could detect cancer cells or low insulin levels inside a patient’s body. The study’s lead author and graduate student Judee Sharon is already beginning to research TRUMPET's potential for earlier cancer diagnosis. Because the inputs for TRUMPET are single or double-stranded DNA, any mutated or cancerous DNA could theoretically be detected by the platform through the biocomputing process, which could allow cancer and other diseases to be caught earlier.

Adamala sees TRUMPET not only as a detection system but also as a potential cancer drug delivery system. “Ideally, you would like the drug only to turn on when it senses the presence of a cancer cell. And that's how we use the logic gates, which work in response to inputs like cancerous DNA. Then the output can be the production of a small molecule or the release of a small molecule that can then go and kill what needs killing, in this case, a cancer cell. So we would like to develop applications that use this technology to control the logic gate response of a drug’s delivery to a cell.”

Although platforms like TRUMPET are making progress, a lot more work must be done before they can be used commercially. “The process of translating mechanisms and architecture from biology to computing and vice versa is still an art rather than a science,” says Forrest. “It requires deep computer science and biology knowledge,” she adds. “Some people have compared interdisciplinary science to fusion restaurants—not all combinations are successful, but when they are, the results are remarkable.”

Crickets are low in fat, high in protein, and can be farmed sustainably. They are also crunchy.

In today’s podcast episode, Leaps.org Deputy Editor Lina Zeldovich speaks about the health and ecological benefits of farming crickets for human consumption with Bicky Nguyen, who joins Lina from Vietnam. Bicky and her business partner Nam Dang operate an insect farm named CricketOne. Motivated by the idea of sustainable and healthy protein production, they started their unconventional endeavor a few years ago, despite numerous naysayers who didn’t believe that humans would ever consider munching on bugs.

Yet, making creepy crawlers part of our diet offers many health and planetary advantages. Food production needs to match the rise in global population, estimated to reach 10 billion by 2050. One challenge is that some of our current practices are inefficient, polluting and wasteful. According to nonprofit EarthSave.org, it takes 2,500 gallons of water, 12 pounds of grain, 35 pounds of topsoil and the energy equivalent of one gallon of gasoline to produce one pound of feedlot beef, although exact statistics vary between sources.

Meanwhile, insects are easy to grow, high in protein and low in fat. When roasted with salt, they make crunchy snacks. When chopped up, they transform into delicious pâtés, says Bicky, who invents her own cricket recipes and serves them at industry and public events. Maybe that’s why some research predicts that the edible insect market may grow to almost $10 billion by 2030. Tune in for a delectable chat on this alternative and sustainable protein.

Further reading:

More info on Bicky Nguyen

https://yseali.fulbright.edu.vn/en/faculty/bicky-n...

The environmental footprint of beef production

https://www.earthsave.org/environment.htm

https://www.watercalculator.org/news/articles/beef-king-big-water-footprints/

https://www.frontiersin.org/articles/10.3389/fsufs.2019.00005/full

https://ourworldindata.org/carbon-footprint-food-methane

Insect farming as a source of sustainable protein

https://www.insectgourmet.com/insect-farming-growing-bugs-for-protein/

https://www.sciencedirect.com/topics/agricultural-and-biological-sciences/insect-farming

Cricket flour is taking the world by storm

https://www.cricketflours.com/

https://talk-commerce.com/blog/what-brands-use-cricket-flour-and-why/

Lina Zeldovich has written about science, medicine and technology for Popular Science, Smithsonian, National Geographic, Scientific American, Reader’s Digest, the New York Times and other major national and international publications. A Columbia J-School alumna, she has won several awards for her stories, including the ASJA Crisis Coverage Award for Covid reporting, and has been a contributing editor at Nautilus Magazine. In 2021, Zeldovich released her first book, The Other Dark Matter, published by the University of Chicago Press, about the science and business of turning waste into wealth and health. You can find her on http://linazeldovich.com/ and @linazeldovich.