Meet the Scientists on the Frontlines of Protecting Humanity from a Man-Made Pathogen

From left: Jean Peccoud, Randall Murch, and Neeraj Rao.

Jean Peccoud wasn't expecting an email from the FBI. He definitely wasn't expecting the agency to invite him to a meeting. "My reaction was, 'What did I do wrong to be on the FBI watch list?'" he recalls.


He didn't know what the feds could possibly want from him. "I was mostly scared at this point," he says. "I was deeply disturbed by the whole thing."

But he decided to go anyway, and when he traveled to San Francisco for the 2008 gathering, the reason for the e-vite became clear: The FBI was reaching out to researchers like him, scientists interested in synthetic biology, in anticipation of the potential nefarious uses of this technology. "The whole purpose of the meeting was, 'Let's start talking to each other before we actually need to talk to each other,'" says Peccoud, now a professor of chemical and biological engineering at Colorado State University. "'And let's make sure next time you get an email from the FBI, you don't freak out.'"

Synthetic biology—which Peccoud defines as "the application of engineering methods to biological systems"—holds great power, and with that (as always) comes great responsibility. When you can synthesize genetic material in a lab, you can create new ways of diagnosing and treating people, and even new food ingredients. But you can also "print" the genetic sequence of a virus or virulent bacterium.

And while it's not easy, it's also not as hard as it could be, in part because dangerous sequences have publicly available blueprints. You can use those blueprints for white-hat research (which is, indeed, why the open blueprints exist), or you can do the same for a black-hat attack. You could synthesize a dangerous pathogen's code on purpose, or you could unwittingly do so because someone tampered with your digital instructions. Ordering synthetic genes for viral sequences, says Peccoud, would likely be more difficult today than it was a decade ago.

"There is more awareness of the industry, and they are taking this more seriously," he says. "There is no specific regulation, though."

Trying to lock down the interconnected machines that enable synthetic biology, secure its lab processes, and keep dangerous pathogens out of the hands of bad actors is part of a relatively new field: cyberbiosecurity, whose name Peccoud and colleagues introduced in a 2018 paper.

Biological threats feel especially acute right now, during the ongoing pandemic. COVID-19 is a natural pathogen, not one engineered in a lab. But future outbreaks could start from a bug nature didn't build, if the wrong people get ahold of the right genetic sequences, and put them in the right sequence. Securing the equipment and processes that make synthetic biology possible, so that doesn't happen, is part of why the field of cyberbiosecurity was born.

The Origin Story

It is perhaps no coincidence that the FBI pinged Peccoud when it did: soon after a journalist ordered a sequence of smallpox DNA and wrote, for The Guardian, about how easy it was. "That was not good press for anybody," says Peccoud. Previously, in 2002, the Pentagon had funded SUNY Stony Brook researchers to try something similar: They ordered bits of polio DNA piecemeal and, over the course of three years, strung them together.

Although many years have passed since those early gotchas, the current patchwork of regulations still wouldn't necessarily prevent someone from pulling similar tricks now, and the technological systems that synthetic biology runs on are more intertwined — and so perhaps more hackable — than ever. Researchers like Peccoud are working to bring awareness to those potential problems, to promote accountability, and to provide early-detection tools that would catch the whiff of a rotten act before it became one.

Peccoud notes that if someone wants to get access to a specific pathogen, it is probably easier to collect it from the environment or take it from a biodefense lab than to whip it up synthetically. "However, people could use genetic databases to design a system that combines different genes in a way that would make them dangerous together without each of the components being dangerous on its own," he says. "This would be much more difficult to detect."

After his meeting with the FBI, Peccoud grew more interested in these sorts of security questions. So he was paying attention when, in 2010, the Department of Health and Human Services — now helping manage the response to COVID-19 — created guidance for how to screen synthetic biology orders, to make sure suppliers didn't accidentally send bad actors the sequences that make up bad genomes.

Guidance is nice, Peccoud thought, but it's just words. He wanted to turn those words into action: into a computer program. "I didn't know if it was something you can run on a desktop or if you need a supercomputer to run it," he says. So, one summer, he tasked a team of student researchers with poring over the sentences and turning them into scripts. "I let the FBI know," he says, having both learned his lesson and wanting to get in on the game.

Peccoud later joined forces with Randall Murch, a former FBI agent and current Virginia Tech professor, and a team of colleagues from both Virginia Tech and the University of Nebraska-Lincoln, on a prototype project for the Department of Defense. They went into a lab at the University of Nebraska-Lincoln and assessed all its cyberbio-vulnerabilities. The lab develops and produces prototype vaccines, therapeutics, and prophylactic components: exactly the kind of place that you always, and especially right now, want to keep secure.

The team found dozens of Achilles' heels, and put them in a private report. Not long after that project, the two and their colleagues wrote the paper that first used the term "cyberbiosecurity." A second paper, led by Murch, came out five months later and provided a proposed definition and more comprehensive perspective on cyberbiosecurity. But although it's now a buzzword, it's the definition, not the jargon, that matters. "Frankly, I don't really care if they call it cyberbiosecurity," says Murch. Call it what you want: Just pay attention to its tenets.

A Database of Scary Sequences

Peccoud and Murch, of course, aren't the only ones working to screen sequences and secure devices. At the nonprofit Battelle Memorial Institute in Columbus, Ohio, for instance, scientists are working on solutions that balance the openness inherent to science and the closure that can stop bad stuff. "There's a challenge there that you want to enable research but you want to make sure that what people are ordering is safe," says the organization's Neeraj Rao.

Rao can't talk about the work Battelle does for the spy agency IARPA, the Intelligence Advanced Research Projects Activity, on a project called Fun GCAT, which aims to use computational tools to deep-screen gene-sequence orders to see if they pose a threat. He can, though, talk about a twin-type internal project: ThreatSEQ (pronounced, of course, "threat seek").

The project started when "a government customer" (as usual, no one will say which) asked Battelle to curate a list of dangerous toxins and pathogens, and their genetic sequences. The researchers even started tagging sequences according to their function — like whether a particular sequence is involved in a germ's virulence or toxicity. That helps if someone is trying to use synthetic biology not to gin up a yawn-inducing old bug but to engineer a totally new one. "How do you essentially predict what the function of a novel sequence is?" says Rao. You look at what other, similar bits of code do.

"We were creating wiki of all these nasty things," says Rao. As they were working, they realized that DNA manufacturers could potentially scan in sequences that people ordered, run them against the database, and see if anything scary matched up. Kind of like that plagiarism software your college professors used.

Battelle began offering its screening capability as ThreatSEQ. Customers (currently including Twist Bioscience) submit their sequences and get a report back, and the manufacturers make the final decision about whether to fulfill a flagged order: whether, in the analogy, to give an F for plagiarism. After all, legitimate researchers do legitimately need to have DNA from legitimately bad organisms.
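The plagiarism-checker analogy can be sketched in a few lines of Python. This is only an illustration of the general idea, not ThreatSEQ's actual method, which the article does not describe. It assumes a simple approach: break an order into overlapping windows of k bases and report which entries in a database of flagged sequences share any window with it. The database entries, their names, and the window size are all hypothetical placeholders.

```python
def kmers(seq, k):
    """Return the set of all overlapping length-k windows in a sequence."""
    seq = seq.upper()
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def screen_order(order, flagged, k=12):
    """Map each flagged entry name to the number of k-mers it shares with the order.

    Only entries with at least one shared k-mer are reported.
    """
    order_kmers = kmers(order, k)
    hits = {}
    for name, seq in flagged.items():
        shared = len(order_kmers & kmers(seq, k))
        if shared:
            hits[name] = shared
    return hits


# Hypothetical database: made-up placeholder strings, not real pathogen sequences.
FLAGGED = {
    "placeholder_toxin_A": "ATGGCTAGCTAGGATCCGATTACAGGCTTAACG",
    "placeholder_virulence_B": "TTGACCGGTAACGTTAGCCGATAGGCTAACCGT",
}

clean_order = "ACGTACGTACGTACGTACGTACGTACGTACGT"
# An order that embeds a 20-base stretch copied from placeholder_toxin_A.
suspect_order = "CCCCCCCC" + FLAGGED["placeholder_toxin_A"][5:25] + "GGGGGGGG"

print(screen_order(clean_order, FLAGGED))    # no entries flagged
print(screen_order(suspect_order, FLAGGED))  # reports placeholder_toxin_A
```

In a sketch like this, a hit only flags an order for a closer look. Whether a flagged order is a problem depends on who is asking.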

"Maybe it's the CDC," says Rao. "If things check out, oftentimes [the manufacturers] will fulfill the order." If it's your aggrieved uncle seeking the virulent pathogen, maybe not. But ultimately, no one is stopping the manufacturers from doing so.

Beyond that kind of tampering, though, cyberbiosecurity also includes keeping a lockdown on the machines that make the genetic sequences. "Somebody now doesn't need physical access to infrastructure to tamper with it," says Rao. So it needs the same cyber protections as other internet-connected devices.

Scientists are also now using DNA to store data — encoding information in its bases, rather than into a hard drive. To download the data, you sequence the DNA and read it back into a computer. But if you think like a bad guy, you'd realize that a bad guy could then, for instance, insert a computer virus into the genetic code, and when the researcher went to nab her data, her desktop would crash or infect the others on the network.

Something like that actually happened in 2017 at the USENIX Security Symposium, an annual computer security conference: Researchers from the University of Washington encoded malware into DNA, and when a gene sequencer read the DNA, the resulting data corrupted the sequencer's software, then the computer that controlled it.
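To make the idea of storing data in DNA concrete, here is a minimal Python sketch. It assumes the simplest possible mapping of two bits per base (00 to A, 01 to C, 10 to G, 11 to T). That mapping is an illustrative assumption, not the scheme used by any particular lab; practical systems add error correction and avoid problematic base patterns.

```python
BASES = "ACGT"  # assumed mapping: 00 -> A, 01 -> C, 10 -> G, 11 -> T


def encode(data: bytes) -> str:
    """Encode bytes as a DNA string, two bits per base, most significant bits first."""
    out = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            out.append(BASES[(byte >> shift) & 0b11])
    return "".join(out)


def decode(dna: str) -> bytes:
    """Invert encode(): read four bases at a time back into one byte."""
    if len(dna) % 4:
        raise ValueError("length must be a multiple of four bases")
    out = bytearray()
    for i in range(0, len(dna), 4):
        byte = 0
        for base in dna[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)  # raises ValueError on a non-ACGT base
        out.append(byte)
    return bytes(out)


message = b"hi"
strand = encode(message)
print(strand)
print(decode(strand))
```

Because `decode()` hands back whatever bytes the strand carries, any software that processes a sequencer's readout is effectively handling untrusted input.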

"This vulnerability could be just the opening an adversary needs to compromise an organization's systems," Inspirion Biosciences' J. Craig Reed and Nicolas Dunaway wrote in a paper for Frontiers in Bioengineering and Biotechnology, included in an e-book that Murch edited called Mapping the Cyberbiosecurity Enterprise.

Where We Go From Here

So what to do about all this? That's hard to say, in part because we don't know how big a current problem any of it poses. As noted in Mapping the Cyberbiosecurity Enterprise, "Information about private sector infrastructure vulnerabilities or data breaches is protected from public release by the Protected Critical Infrastructure Information (PCII) Program," if the privateers share the information with the government. "Government sector vulnerabilities or data breaches," meanwhile, "are rarely shared with the public."

The regulations that could rein in problems aren't as robust as many would like them to be, and much good behavior is technically voluntary — although guidelines and best practices do exist from organizations like the International Gene Synthesis Consortium and the National Institute of Standards and Technology.

Rao thinks it would be smart if grant-giving agencies like the National Institutes of Health and the National Science Foundation required any scientists who took their money to work with manufacturing companies that screen sequences. But he also still thinks we're on our way to being ahead of the curve, in terms of preventing print-your-own bioproblems: "What I think is encouraging right now is the fact that we're even having this discussion," says Rao.

Peccoud, for his part, has worked to keep such conversations going, including by doing training for the FBI and planning a workshop for students in which they imagine and work to guard against the malicious use of their research. But actually, Peccoud believes that human error, flawed lab processes, and mislabeled samples might be bigger threats than the outside ones. "Way too often, I think that people think of security as, 'Oh, there is a bad guy going after me,' and the main thing you should be worried about is yourself and errors," he says.

Murch thinks we're only at the beginning of understanding where our weak points are, and how many times they've been bruised. Decreasing those contusions, though, won't just take more secure systems. "The answer won't be technical only," he says. It'll be social, political, policy-related, and economic — a cultural revolution all its own.

New elevators could lift up our access to space

A space elevator would be cheaper and cleaner than using rockets

Story by Big Think

When people first started exploring space in the 1960s, it cost upwards of $80,000 (adjusted for inflation) to put a single pound of payload into low-Earth orbit.

A major reason for this high cost was the need to build a new, expensive rocket for every launch. That really started to change when SpaceX began making cheap, reusable rockets, and today, the company is ferrying customer payloads to LEO at a price of just $1,300 per pound.

This is making space accessible to scientists, startups, and tourists who never could have afforded it previously, but the cheapest way to reach orbit might not be a rocket at all — it could be an elevator.

The space elevator

The seeds for a space elevator were first planted by Russian scientist Konstantin Tsiolkovsky in 1895, who, after visiting the 1,000-foot (305 m) Eiffel Tower, published a paper theorizing about the construction of a structure 22,000 miles (35,400 km) high.

This would provide access to geostationary orbit, an altitude where objects appear to remain fixed above Earth’s surface, but Tsiolkovsky conceded that no material could support the weight of such a tower.
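The 22,000-mile figure can be checked with a short calculation: a geostationary orbit is the circular orbit whose period matches one rotation of Earth. The Python sketch below applies Kepler's third law using standard textbook values for Earth's gravitational parameter, sidereal day, and equatorial radius. These constants are not from the article.

```python
import math

GM_EARTH = 3.986004418e14   # m^3/s^2, Earth's standard gravitational parameter
SIDEREAL_DAY = 86164.0905   # s, one rotation of Earth relative to the stars
EARTH_RADIUS = 6.378137e6   # m, equatorial radius


def geostationary_altitude_m():
    """Altitude above the equator of a circular orbit with a one-sidereal-day period.

    Kepler's third law gives T^2 = 4*pi^2 * r^3 / GM,
    so r = (GM * T^2 / (4*pi^2)) ** (1/3), measured from Earth's center.
    """
    r = (GM_EARTH * SIDEREAL_DAY ** 2 / (4 * math.pi ** 2)) ** (1 / 3)
    return r - EARTH_RADIUS


alt_km = geostationary_altitude_m() / 1000
print(f"{alt_km:,.0f} km, or {alt_km / 1.609344:,.0f} miles")
```

The result lands near 35,800 km, a little over 22,000 miles, consistent with the rounded figure above.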

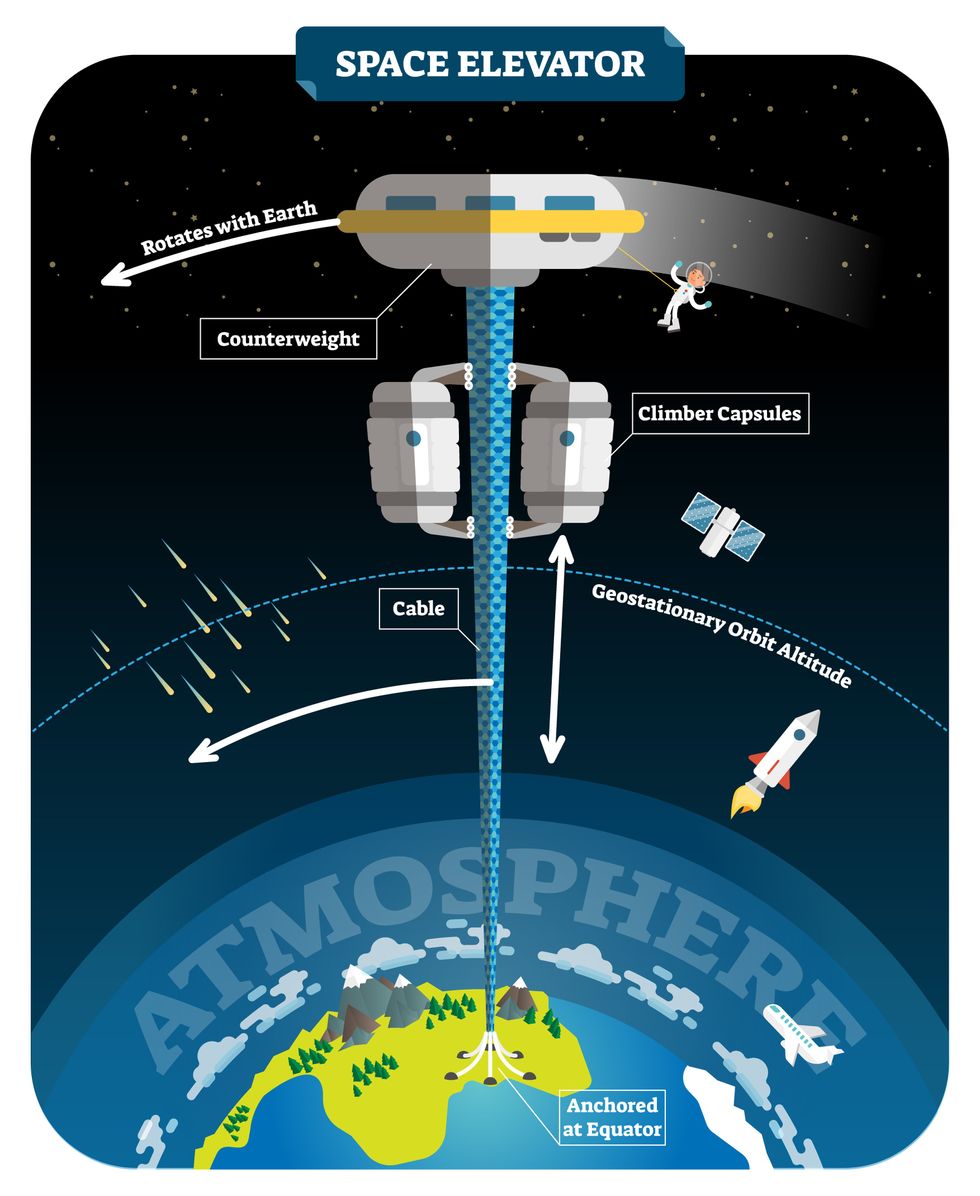


In 1959, soon after Sputnik, Russian engineer Yuri N. Artsutanov proposed a way around this issue: instead of building a space elevator from the ground up, start at the top. More specifically, he suggested placing a satellite in geostationary orbit and dropping a tether from it down to Earth’s equator. As the tether descended, the satellite would ascend. Once attached to Earth’s surface, the tether would be kept taut, thanks to a combination of gravitational and centrifugal forces.

We could then send electrically powered “climber” vehicles up and down the tether to deliver payloads to any Earth orbit. According to physicist Bradley Edwards, who researched the concept for NASA about 20 years ago, it’d cost $10 billion and take 15 years to build a space elevator, but once operational, the cost of sending a payload to any Earth orbit could be as low as $100 per pound.

“Once you reduce the cost to almost a Fed-Ex kind of level, it opens the doors to lots of people, lots of countries, and lots of companies to get involved in space,” Edwards told Space.com in 2005.

In addition to the economic advantages, a space elevator would also be cleaner than using rockets — there’d be no burning of fuel, no harmful greenhouse emissions — and the new transport system wouldn’t contribute to the problem of space junk to the same degree that expendable rockets do.

So, why don’t we have one yet?

Tether troubles

Edwards wrote in his report for NASA that all of the technology needed to build a space elevator already existed except the material needed to build the tether, which needs to be light but also strong enough to withstand all the huge forces acting upon it.

The good news, according to the report, was that the perfect material — ultra-strong, ultra-tiny “nanotubes” of carbon — would be available in just two years.

“[S]teel is not strong enough, neither is Kevlar, carbon fiber, spider silk, or any other material other than carbon nanotubes,” wrote Edwards. “Fortunately for us, carbon nanotube research is extremely hot right now, and it is progressing quickly to commercial production.”

Unfortunately, he misjudged how hard it would be to synthesize carbon nanotubes: to date, no one has been able to grow one longer than 21 inches (53 cm).

Further research into the material revealed that it tends to fray under extreme stress, too, meaning even if we could manufacture carbon nanotubes at the lengths needed, they’d be at risk of snapping, not only destroying the space elevator, but threatening lives on Earth.

Looking ahead

Carbon nanotubes might have been the early frontrunner as the tether material for space elevators, but there are other options, including graphene, an essentially two-dimensional form of carbon that is already easier to scale up than nanotubes (though still not easy).

Contrary to Edwards’ report, Johns Hopkins University researchers Sean Sun and Dan Popescu say Kevlar fibers could work — we would just need to constantly repair the tether, the same way the human body constantly repairs its tendons.

“Using sensors and artificially intelligent software, it would be possible to model the whole tether mathematically so as to predict when, where, and how the fibers would break,” the researchers wrote in Aeon in 2018.

“When they did, speedy robotic climbers patrolling up and down the tether would replace them, adjusting the rate of maintenance and repair as needed, mimicking the sensitivity of biological processes,” they continued.

Astronomers from the University of Cambridge and Columbia University also think Kevlar could work for a space elevator, if we built it from the moon rather than Earth.

They call their concept the Spaceline, and the idea is that a tether attached to the moon’s surface could extend toward Earth’s geostationary orbit, held taut by the pull of our planet’s gravity. We could then use rockets to deliver payloads — and potentially people — to solar-powered climber robots positioned at the end of this 200,000+ mile long tether. The bots could then travel up the line to the moon’s surface.

This wouldn’t eliminate the need for rockets to get into Earth’s orbit, but it would be a cheaper way to get to the moon. The forces acting on a lunar space elevator wouldn’t be as strong as those acting on one extending from Earth’s surface, either, according to the researchers, opening up more options for tether materials.

“[T]he necessary strength of the material is much lower than an Earth-based elevator — and thus it could be built from fibers that are already mass-produced … and relatively affordable,” they wrote in a paper shared on the preprint server arXiv.


Electrically powered climber capsules could go up and down the tether to deliver payloads to any Earth orbit.

Adobe Stock

Some Chinese researchers, meanwhile, aren’t giving up on the idea of using carbon nanotubes for a space elevator — in 2018, a team from Tsinghua University revealed that they’d developed nanotubes that they say are strong enough for a tether.

The researchers are still working on the issue of scaling up production, but in 2021, state-owned news outlet Xinhua released a video depicting an in-development concept, called “Sky Ladder,” that would consist of space elevators above Earth and the moon.

After riding up the Earth-based space elevator, a capsule would fly to a space station attached to the tether of the moon-based one. If the project could be pulled off — a huge if — China predicts Sky Ladder could cut the cost of sending people and goods to the moon by 96 percent.

The bottom line

In the more than 120 years since Tsiolkovsky looked at the Eiffel Tower and thought way bigger, tremendous progress has been made developing materials with the properties needed for a space elevator. At this point, it seems likely we could one day have a material that can be manufactured at the scale needed for a tether, but by the time that happens, the need for a space elevator may have evaporated.

Several aerospace companies are making progress with their own reusable rockets, and as those join the market with SpaceX, competition could cause launch prices to fall further.

California startup SpinLaunch, meanwhile, is developing a massive centrifuge to fling payloads into space, where much smaller rockets can propel them into orbit. If the company succeeds (another one of those big ifs), it says the system would slash the amount of fuel needed to reach orbit by 70 percent.

Even if SpinLaunch doesn’t get off the ground, several groups are developing environmentally friendly rocket fuels that produce far fewer (or no) harmful emissions. More work is needed to efficiently scale up their production, but overcoming that hurdle will likely be far easier than building a 22,000-mile (35,400-km) elevator to space.

This article originally appeared on Big Think, home of the brightest minds and biggest ideas of all time.

New tech aims to make the ocean healthier for marine life

An overabundance of dissolved carbon dioxide poses a threat to marine life. A new system detects elevated levels of the greenhouse gas and mitigates them on the spot.

A defunct drydock basin arched by a rusting 19th-century steel bridge seems an incongruous place to conduct state-of-the-art climate science. But this placid and protected sliver of water connecting Brooklyn’s Navy Yard to the East River was just right for Garrett Boudinot to float a small dock topped with water carbon-sensing gear. And while his system right now looks like a trio of plastic boxes wired up together, it aims to mitigate the growing ocean acidification problem, caused by an overabundance of dissolved carbon dioxide.

Boudinot, a biogeochemist and founder of a carbon-management startup called Vycarb, is honing his method for measuring CO2 levels in water, as well as (at least temporarily) correcting their negative effects. It’s a challenge that’s been occupying numerous climate scientists as the ocean heats up, and as states like New York recognize that reducing emissions won’t be enough to reach their climate goals; they’ll have to figure out how to remove carbon, too.

To date, though, methods for measuring CO2 in water at scale have been either intensely expensive, requiring fancy sensors that pump CO2 through membranes; or prohibitively complicated, involving a series of lab-based analyses. And that’s led to a bottleneck in efforts to remove carbon as well.

But recently, Boudinot cracked part of the code for measurement and mitigation, at least on a small scale. While the rest of the industry sorts out larger intricacies like getting ocean carbon markets up and running and driving carbon removal at billion-ton scale in centralized infrastructure, his decentralized method could have important, more immediate implications.

Specifically, for shellfish hatcheries, which grow seafood for human consumption and for coastal restoration projects. Some of these incubators for oysters and clams and scallops are already feeling the negative effects of excess carbon in water, and Vycarb’s tech could improve outcomes for the larval- and juvenile-stage mollusks they’re raising. “We’re learning from these folks about what their needs are, so that we’re developing our system as a solution that’s relevant,” Boudinot says.


Ocean waters naturally absorb CO2 gas from the atmosphere. When CO2 accumulates faster than nature can dissipate it, it reacts with H2O molecules, forming carbonic acid, H2CO3, which makes the water column more acidic. On the West Coast, acidification occurs when deep, carbon dioxide-rich waters upwell onto the coast. This can wreak havoc on developing shellfish, inhibiting their shells from growing and leading to mass die-offs; this happened, disastrously, at Pacific Northwest oyster hatcheries in 2007.

This type of acidification will eventually come for the East Coast, too, says Ryan Wallace, assistant professor and graduate director of environmental studies and sciences at Long Island’s Adelphi University, who studies acidification. But at the moment, East Coast acidification has other sources: agricultural runoff, usually in the form of nitrogen, and human and animal waste entering coastal areas. These excess nutrient loads cause algae to grow, which isn’t a problem in and of itself, Wallace says; but when algae die, they’re consumed by bacteria, whose respiration in turn bumps up CO2 levels in water.

“Unfortunately, this is occurring at the bottom [of the water column], where shellfish organisms live and grow,” Wallace says. Acidification on the East Coast is minutely localized, occurring closest to where nutrients are being released, as well as seasonally; at least one local shellfish farm, on Fishers Island in the Long Island Sound, has contended with its effects.

The second Vycarb pilot, ready to be installed at the East Hampton shellfish hatchery.

Courtesy of Vycarb

Besides CO2, ocean water contains two other forms of dissolved carbon, carbonate (CO3^2-) and bicarbonate (HCO3^-), at all times, at differing levels. At low pH (acidic), CO2 prevails; at medium pH, HCO3 is the dominant form; at higher pH, CO3 dominates. Boudinot’s invention is the first real-time measurement for all three, he says. From the dock at the Navy Yard, his pilot system uses carefully calibrated but low-cost sensors to gauge the water’s pH and its corresponding levels of CO2. When it detects elevated levels of the greenhouse gas, the system mitigates it on the spot. It does this by adding a bicarbonate powder that’s a byproduct of agricultural limestone mining in nearby Pennsylvania. Because the bicarbonate powder is alkaline, it increases the water pH and reduces the acidity. “We drive a chemical reaction to increase the pH to convert greenhouse gas- and acid-causing CO2 into bicarbonate, which is HCO3,” Boudinot says. “And HCO3 is what shellfish and fish and lots of marine life prefers over CO2.”
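The way pH shifts the balance among the three forms of dissolved carbon follows from standard equilibrium chemistry, and a rough Python sketch can show it. This is not Vycarb's method, which the article does not detail. The two equilibrium constants below are assumed, rounded values typical of seawater; real values vary with temperature and salinity.

```python
PK1 = 5.9   # assumed: CO2(aq) <-> bicarbonate, approximate value for seawater
PK2 = 8.9   # assumed: bicarbonate <-> carbonate, approximate value for seawater


def carbon_fractions(ph, pk1=PK1, pk2=PK2):
    """Return equilibrium fractions (CO2, bicarbonate, carbonate) of dissolved inorganic carbon."""
    h = 10.0 ** -ph        # hydrogen ion concentration
    k1 = 10.0 ** -pk1
    k2 = 10.0 ** -pk2
    denom = h * h + k1 * h + k1 * k2
    return h * h / denom, k1 * h / denom, k1 * k2 / denom


for ph in (4.0, 7.5, 8.1, 10.5):
    co2, hco3, co3 = carbon_fractions(ph)
    print(f"pH {ph:4.1f}: CO2 {co2:6.1%}  bicarbonate {hco3:6.1%}  carbonate {co3:6.1%}")
```

Under these assumed constants, raising the pH moves carbon out of the CO2 form and into bicarbonate, which is the shift that adding an alkaline powder is meant to drive.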

This de-acidifying “buffering” is something shellfish operations already do to water, usually by adding soda ash (Na2CO3), which is also alkaline. Some hatcheries add soda ash constantly, just in case; some wait till acidification causes significant problems. Detecting acidification generally takes time and effort from an already busy shellfish farmer. “We’re out there daily, taking a look at the pH and figuring out how much we need to dose it,” explains John “Barley” Dunne, director of the East Hampton Shellfish Hatchery on Long Island. “If this is an automatic system…that would be much less labor intensive, one less thing to monitor when we have so many other things we need to monitor.”

Across the Sound at the hatchery he runs, Dunne annually produces 30 million hard clams, 6 million oysters, and “if we’re lucky, some years we get a million bay scallops,” he says. These mollusks are destined for restoration projects around the town of East Hampton, where they’ll create habitat, filter water, and protect the coastline from sea level rise and storm surge. So far, Dunne’s hatchery has largely escaped the ill effects of acidification, although his bay scallops are having a finicky year and he’s checking to see if acidification might be part of the problem. But “I think it's important to have these solutions ready-at-hand for when the time comes,” he says. That’s why he’s hosting a second, 70-liter Vycarb pilot starting this summer on a dock adjacent to his East Hampton operation; it will amp up to a 50,000-liter system in a few months.


Boudinot hopes this new pilot will act as a proof of concept for hatcheries up and down the East Coast. The area from Maine to Nova Scotia is experiencing the worst of Atlantic acidification, due in part to increased Arctic meltwater combining with Gulf of St. Lawrence freshwater; that decreases saturation of calcium carbonate, making the water more acidic. Boudinot says his system should work to adjust low pH regardless of the cause or locale. The East Hampton system will eventually test and buffer-as-necessary the water that Dunne pumps from the Sound into 100-gallon land-based tanks where larvae grow for two weeks before being transferred to an in-Sound nursery to plump up.

Dunne says this could have positive effects — not only on his hatchery but on wild shellfish populations, too, reducing at least one stressor their larvae experience (others include increasing water temperatures and decreased oxygen levels). “If it can buffer water over a large area, absolutely this will [benefit] natural spawns,” he says.

No one believes the Vycarb model — even if it proves capable of functioning at much greater scale — is the sole solution to acidification in the ocean. Wallace says new water treatment plants in New York City, which reduce nitrogen released into coastal waters, are an important part of the equation. And “certainly, some green infrastructure would help,” says Boudinot, like restoring coastal and tidal wetlands to help filter nutrient runoff.

In the meantime, Boudinot continues to collect data in advance of amping up his own operations. Still unknown is the effect of releasing huge amounts of alkalinity into the ocean. Boudinot says a pH of 9 or higher can be too harsh for marine life, plus it can also trigger a release of CO2 from the water back into the atmosphere. For a third pilot, on Governors Island in New York Harbor, Vycarb will install yet another system from which Boudinot’s team will frequently sample to analyze some of those and other impacts. “Let's really make sure that we know what the results are,” he says. “Let's have data to show, because in this carbon world, things behave very differently out in the real world versus on paper.”