How a Deadly Fire Gave Birth to Modern Medicine

The Cocoanut Grove fire in Boston in 1942 tragically claimed 490 lives, but was the catalyst for several important medical advances.

On the evening of November 28, 1942, more than 1,000 revelers from the Boston College-Holy Cross football game jammed into the Cocoanut Grove, Boston's oldest nightclub. When a spark from faulty wiring accidentally ignited an artificial palm tree, the packed nightspot, which was designed to accommodate only about 500 people, was quickly engulfed in flames. In the ensuing panic, hundreds of people were trapped inside, with most exit doors locked. Bodies piled up by the only open entrance, jamming the exits, and 490 people ultimately died in the worst fire in the country in forty years.

"People couldn't get out," says Dr. Kenneth Marshall, a retired plastic surgeon in Boston and president of the Cocoanut Grove Memorial Committee. "It was a tragedy of mammoth proportions."

Within half an hour of the start of the blaze, the Red Cross mobilized more than five hundred volunteers in what one newspaper called a "Rehearsal for Possible Blitz." The mayor of Boston imposed martial law. More than 300 victims, many of whom subsequently died, were taken to Boston City Hospital in one hour, averaging one victim every eleven seconds, while Massachusetts General Hospital admitted 114 victims in two hours. In the hospitals, 220 victims clung precariously to life, in agonizing pain from massive burns, their bodies ravaged by infection.

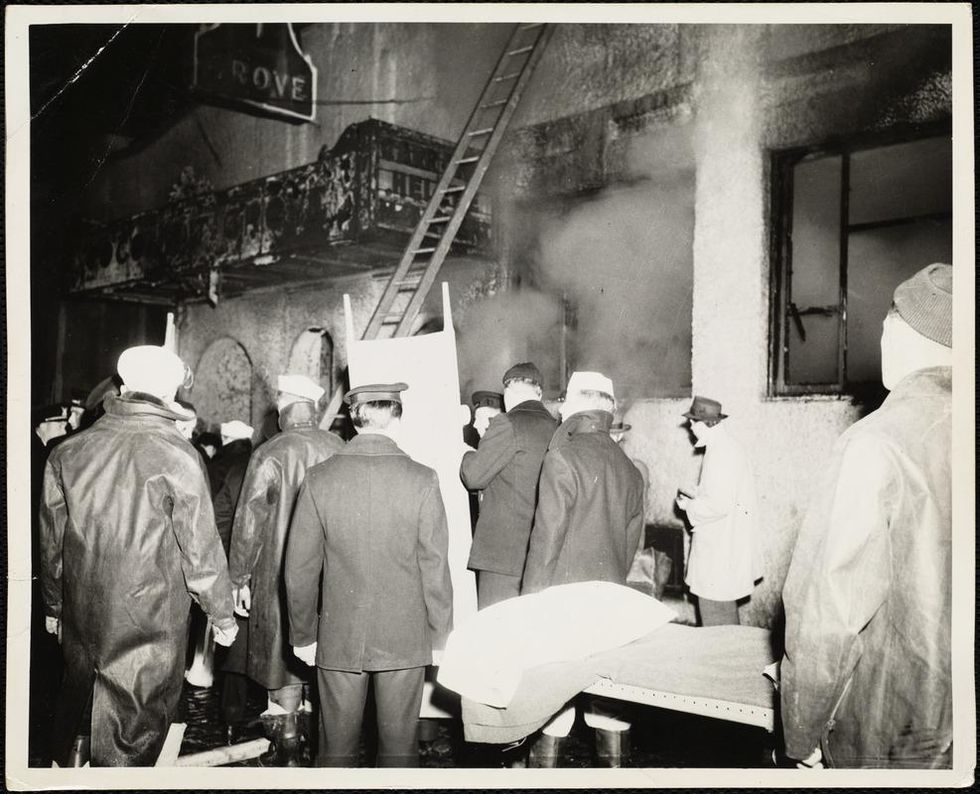

The scene of the fire.

Boston Public Library

Tragic Losses Prompted Revolutionary Leaps

But there is a silver lining: this horrific disaster prompted dramatic changes in safety regulations to prevent another catastrophe of this magnitude and led to the development of medical techniques that eventually saved millions of lives. It transformed burn care treatment and the use of plasma on burn victims, but most importantly, it introduced to the public a new wonder drug that revolutionized medicine, midwifed the birth of the modern pharmaceutical industry, and nearly doubled life expectancy, from 48 years at the turn of the 20th century to 78 years in the post-World War II years.

The devastating grief of the survivors also led to the first published study of post-traumatic stress disorder by pioneering psychiatrist Alexandra Adler, daughter of famed Viennese psychoanalyst Alfred Adler, who was a student of Freud. Dr. Adler studied the anxiety and depression that followed this catastrophe, according to the New York Times, and "later applied her findings to the treatment of World War II veterans."

Dr. Ken Marshall is intimately familiar with the lingering psychological trauma of enduring such a disaster. His mother, an Irish immigrant and a nurse in the surgical wards at Boston City Hospital, was on duty that cold Thanksgiving weekend night, and didn't come home for four days. "For years afterward, she'd wake up screaming in the middle of the night," recalls Dr. Marshall, who was four years old at the time. "Seeing all those bodies lined up in neat rows across the City Hospital's parking lot, still in their evening clothes. It was always on her mind and memories of the horrors plagued her for the rest of her life."

The sheer magnitude of casualties prompted overwhelmed physicians to try experimental new procedures that were later successfully used to treat thousands of battlefield casualties. Instead of cutting off blisters and using dyes and tannic acid to treat burned tissues, which can harden the skin, they applied gauze coated with petroleum jelly. Doctors also refined the formula for using plasma (the fluid portion of blood, and a medical technology that was just four years old) to replenish bodily liquids that evaporated because of the loss of the protective covering of skin.

"The initial insult with burns is a loss of fluids and patients can die of shock," says Dr. Ken Marshall. "The scientific progress that was made by the two institutions revolutionized fluid management and topical management of burn care forever."

Still, they could not halt the staph infections that kill most burn victims—which prompted the first civilian use of a miracle elixir that was being secretly developed in government-sponsored labs and that ultimately ushered in a new age in therapeutics. Military officials quickly realized this disaster could provide an excellent natural laboratory to test the effectiveness of this drug and see if it could be used to treat the acute traumas of combat in this unfortunate civilian approximation of battlefield conditions. At the time, the very existence of this wondrous medicine—penicillin—was a closely guarded military secret.

From Forgotten Lab Experiment to Wonder Drug

In 1928, Alexander Fleming discovered the curative powers of penicillin, which promised to eradicate infectious pathogens that killed millions every year. But the road to mass-producing enough of the highly unstable mold was littered with seemingly insurmountable obstacles, and it remained a forgotten laboratory curiosity for over a decade. Fleming never gave up, however, and penicillin's eventual rescue from obscurity was a landmark in scientific history.

In 1940, a group at Oxford University, funded in part by the Rockefeller Foundation, isolated enough penicillin to test it on twenty-five mice, which had been infected with lethal doses of streptococci. Its therapeutic effects were miraculous—the untreated mice died within hours, while the treated ones played merrily in their cages, undisturbed. Subsequent tests on a handful of patients, who were brought back from the brink of death, confirmed that penicillin was indeed a wonder drug. But Britain was then being ravaged by the German Luftwaffe during the Blitz, and there were simply no resources to devote to penicillin during the Nazi onslaught.

In June of 1941, two of the Oxford researchers, Howard Florey and Ernst Chain, embarked on a clandestine mission to enlist American aid. Samples of the temperamental mold were stored in their coats. By October, the Roosevelt Administration had recruited four companies—Merck, Squibb, Pfizer and Lederle—to team up in a massive, top-secret development program. Merck, which had more experience with fermentation procedures, swiftly pulled away from the pack and every milligram they produced was zealously hoarded.

After the nightclub fire, the government ordered Merck to dispatch to Boston whatever supplies of penicillin they could spare and to refine any crude penicillin broth brewing in Merck's fermentation vats. After working in round-the-clock relays over the course of three days, on the evening of December 1st, 1942, a refrigerated truck containing thirty-two liters of injectable penicillin left Merck's Rahway, New Jersey plant. It was accompanied by a convoy of police escorts through four states before arriving in the pre-dawn hours at Massachusetts General Hospital. Dozens of people were rescued from near-certain death in the first public demonstration of the powers of the antibiotic, and the existence of penicillin could no longer be kept secret from inquisitive reporters and an exultant public. The next day, the Boston Globe called it "priceless" and Time magazine dubbed it a "wonder drug."

Within fourteen months, penicillin production escalated exponentially, churning out enough to save the lives of thousands of soldiers, including many from the Normandy invasion. And in October 1945, just weeks after the Japanese surrender ended World War II, Alexander Fleming, Howard Florey and Ernst Chain were awarded the Nobel Prize in medicine. But penicillin didn't just save lives—it helped build some of the most innovative medical and scientific companies in history, including Merck, Pfizer, Glaxo and Sandoz.

"Every war has given us a new medical advance," concludes Marshall. "And penicillin was the great scientific advance of World War II."

Podcast: The future of brain health with Percy Griffin

Percy Griffin, director of scientific engagement for the Alzheimer’s Association, joins Leaps.org to discuss the present and future of the fight against dementia.

Today's guest is Percy Griffin, director of scientific engagement for the Alzheimer’s Association, a nonprofit that’s focused on speeding up research, finding better ways to detect Alzheimer’s earlier and other approaches for reducing risk. Percy has a doctorate in molecular cell biology from Washington University and has led important research on Alzheimer’s; you can find the link to his full bio in the show notes below.

Our topic for this conversation is the present and future of the fight against dementia. Billions of dollars have been spent by the National Institutes of Health and biotechs to research new treatments for Alzheimer's and other forms of dementia, but so far there's been little to show for it. Last year, Aduhelm became the first drug to be approved by the FDA for Alzheimer’s in 20 years, but it's received a raft of bad publicity, with red flags about its effectiveness, side effects and cost.

Meanwhile, 6.5 million Americans have Alzheimer's, and this number could increase to 13 million in 2050. Listen to this conversation if you’re concerned about your own brain health, that of family members getting older, or if you’re just concerned about the future of this country with experts predicting the number of people over 65 will increase dramatically in the very near future.

4:40 - We talk about the parts of Percy’s life that led him to concentrate on working in this important area.

6:20 - He defines Alzheimer's and dementia, and discusses the key elements of communicating science.

10:20 - Percy explains why the Alzheimer’s Association has been supportive of Aduhelm, even as others have been critical.

17:58 - We talk about therapeutics under development, which ones to be excited about, and how they could be tailored to a person's own biology.

24:25 - Percy discusses funding and tradeoffs between investing more money into Alzheimer’s research compared to other intractable diseases like cancer, and new opportunities to accelerate progress, such as ARPA-H, President Biden’s proposed agency to speed up health breakthroughs.

27:24 - We talk about the social determinants of brain health. What are the pros/cons of continuing to spend massive sums of money to develop new drugs like Aduhelm versus refocusing on expanding policies to address social determinants - like better education, nutritious food and safe drinking water - that have enabled some groups more than others to enjoy improved cognition late in life?

34:18 - Percy describes his top lifestyle recommendations for protecting your mind.

37:33 - Is napping bad for the brain?

39:39 - Circadian rhythm and Alzheimer's.

42:34 - What tests can people take to check their brain health today, and which biomarkers are we making progress on?

47:25 - Percy highlights important programs run by the Alzheimer’s Association to support advances.

Show links:

** After this episode was recorded, the Centers for Medicare and Medicaid Services affirmed its decision from last June to limit coverage of Aduhelm.

- Percy Griffin's bio: https://www.alz.org/manh/events/alztalks/upcoming-...

- The Alzheimer's Association's Part the Cloud program: https://alz.org/partthecloud/about-us.asp

- The paradox of dementia rates decreasing: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7455342/

- The argument for focusing more resources on improving institutions and social processes for brain health: https://www.statnews.com/2021/09/23/the-brain-heal...

- Recent research on napping: https://www.ocregister.com/2022/03/25/alzheimers-s...

- The Alzheimer's Association helpline: https://www.alz.org/help-support/resources/helpline

- ALZConnected, a free online community for people affected by dementia: https://www.alzconnected.org/

- TrialMatch for people with dementia and healthy volunteers to find clinical trials for Alzheimer's and other dementia: https://www.alz.org/alzheimers-dementia/research_p...

Employers can create a culture of “Excellence From Anywhere” to reduce the risk of inequality among office-centric, hybrid, and fully remote employees.

COVID-19 prompted numerous companies to reconsider their approach to the future of work. Many leaders felt reluctant about maintaining hybrid and remote work options after vaccines became widely available. Yet the emergence of dangerous COVID variants such as Omicron has shown the folly of this mindset.

To mitigate the risks of new variants and other public health threats, and to satisfy the large majority of employees who, in multiple surveys, express a strong desire for a flexible hybrid or fully remote schedule, leaders are increasingly accepting that hybrid and remote options represent the future of work. No wonder that a February 2022 survey by the Federal Reserve Bank of Richmond showed that more and more firms are offering hybrid and fully remote work options. The firms expect to have more remote workers next year and more geographically distributed workers.

Although hybrid and remote work mitigates public health risks, it poses another set of health concerns relevant to employee wellbeing, due to the threat of proximity bias. This term refers to the negative impact on work culture from the prospect of inequality among office-centric, hybrid, and fully remote employees.

The difference in time spent in the office leads to concerns ranging from decreased career mobility for those who spend less facetime with their supervisor to resentment building up against the staff who have the most flexibility in where to work. In fact, a January 2022 survey by the company Slack of over 10,000 knowledge workers and their leaders shows that proximity bias is the top concern about hybrid and remote work, expressed by 41% of executives.

Addressing this problem requires using best practices based on cognitive science for creating a culture of “Excellence From Anywhere.” This solution is based on guidance that I developed for leaders at 17 pioneering organizations for a company culture fit for the future of work.

Protect from proximity bias via the "Excellence From Anywhere" strategy

So why haven’t firms addressed the obvious problem of proximity bias? Any reasonable external observer could predict the issues arising from differences of time spent in the office.

Unfortunately, leaders often fail to see the clear threat in front of their noses. You might have heard of black swans: low-probability, high-impact threats. Well, the opposite kind of threat is called a gray rhino: an obvious danger that we fail to see because of our mental blindspots. The scientific name for these blindspots is cognitive biases, which cause leaders to resist best practices in transitioning to a hybrid-first model.

Leaders can address this by focusing on a shared culture of “Excellence From Anywhere.” This term refers to a flexible organizational culture that takes into account the nature of an employee's work and promotes evaluating employees based on task completion, allowing remote work whenever possible.

Addressing Resentments Due to Proximity Bias

The “Excellence From Anywhere” strategy addresses concerns about treatment of remote workers by focusing on deliverables, regardless of where you work. Doing so also involves adopting best practices for hybrid and remote collaboration and innovation.

By valuing deliverables, collaboration, and innovation within a shared work culture of “Excellence From Anywhere,” you can keep your employees focused on what they deliver rather than on where they work. The core idea is to get all of your workforce to pull together to achieve business outcomes: the location doesn’t matter.

This work culture addresses concerns about fairness by reframing the conversation to focus on accomplishing shared goals, rather than the method of doing so. After all, no one wants their colleagues to have to commute out of spite.

This technique appeals to the tribal aspect of our brains. We are evolutionarily adapted to living in small tribal groups of 50-150 people. Spending different amounts of time in the office splits apart the work tribe into different tribes. However, cultivating a shared focus on business outcomes helps mitigate such divisions and create a greater sense of unity, alleviating frustrations and resentments. Doing so helps improve employee emotional wellbeing and facilitates good collaboration.

Solving the facetime concerns of proximity bias

But what about facetime with the boss? Addressing this problem requires shifting from the traditional, high-stakes, large-scale quarterly or even annual performance evaluations to much more frequent, low-stakes, brief evaluations, held weekly or biweekly through one-on-one in-person or videoconference check-ins.

Supervisees agree with their supervisor on three to five weekly or biweekly performance goals. Then, 72 hours before their check-in meeting, they send their boss a brief report, under a page, covering how they did on these goals, what challenges they faced and how they overcame them, a quantitative self-evaluation, and proposed goals for next week. Twenty-four hours before the meeting, the supervisor replies with a paragraph giving their initial impressions of the report.

At the one-on-one, the supervisor reinforces positive aspects of performance and coaches the supervisee on how to solve challenges better, agrees on or revises the goals for next time, and affirms or revises the performance evaluation. That performance evaluation gets fed into a constant performance and promotion review system, which can replace or complement a more thorough annual evaluation.

This type of brief and frequent performance evaluation meeting ensures that the employee’s work is integrated with efforts by the supervisor’s other employees, thereby ensuring more unity in achieving business outcomes. It also mitigates concerns about facetime, since all get at least some personalized attention from their team leader. But more importantly, it addresses the underlying concerns about career mobility by giving all staff a clear indication of where they stand at all times. After all, it’s hard to tell how much any employee should worry about not being able to chat by the watercooler with their boss: knowing exactly where they stand is the key concern for employees, and they can take proactive action if they see their standing suffer.

Such best practices help integrate employees into a work culture fit for the future of work while fostering good relationships with managers. Research shows supervisor-supervisee relationships are the most critical ones for employee wellbeing, engagement, and retention.

Conclusion

You don’t have to be the CEO to implement these techniques. Lower-level leaders of small rank-and-file teams can implement these shifts within their own teams, adapting their culture and performance evaluations. And if you are a staff member rather than a leader, send this article to your supervisor and other employees at your company: start a conversation about the benefits of addressing proximity bias using such research-based best practices.