Researchers Are Testing a New Stem Cell Therapy in the Hopes of Saving Millions from Blindness

NIH researchers in Kapil Bharti's lab work toward the development of induced pluripotent stem cells to treat dry age-related macular degeneration.

Of all the infirmities of old age, failing sight is among the cruelest. It can mean the end not only of independence, but of a whole spectrum of joys—from gazing at a sunset or a grandchild's face to reading a novel or watching TV.

The leading cause of vision loss in people over 55 is age-related macular degeneration, or AMD, which afflicts an estimated 11 million Americans. As photoreceptors in the macula (the central part of the retina) die off, patients experience increasingly severe blurring, dimming, distortions, and blank spots in one or both eyes.

The disorder comes in two varieties, "wet" and "dry," both driven by a complex interaction of genetic, environmental, and lifestyle factors. It begins when deposits of cellular debris accumulate beneath the retinal pigment epithelium (RPE)—a layer of cells that nourish and remove waste products from the photoreceptors above them. In wet AMD, this process triggers the growth of abnormal, leaky blood vessels that damage the photoreceptors. In dry AMD, which accounts for 80 to 90 percent of cases, RPE cells atrophy, causing photoreceptors to wither away. Wet AMD can be controlled in about a quarter of patients, usually by injections of medication into the eye. For dry AMD, no effective remedy exists.

Stem Cells: Promise and Perils

Over the past decade, stem cell therapy has been widely touted as a potential treatment for AMD. The idea is to augment a patient's ailing RPE cells with healthy ones grown in the lab. A few small clinical trials have shown promising results. In a study published in 2018, for example, a University of Southern California team cultivated RPE tissue from embryonic stem cells on a plastic matrix and transplanted it into the retinas of four patients with advanced dry AMD. Because the trial was designed to test safety rather than efficacy, lead researcher Amir Kashani told a reporter, "we didn't expect that replacing RPE cells would return a significant amount of vision." Yet acuity improved substantially in one recipient, and the others regained their lost ability to focus on an object.

Therapies based on embryonic stem cells, however, have two serious drawbacks: Using fetal cell lines raises ethical issues, and such treatments require the patient to take immunosuppressant drugs (which can cause health problems of their own) to prevent rejection. That's why some experts favor a different approach—one based on induced pluripotent stem cells (iPSCs). Such cells, first produced in 2006, are made by returning adult cells to an undifferentiated state, and then using chemicals to reprogram them as desired. Treatments grown from a patient's own tissues could sidestep both hurdles associated with embryonic cells.

At least hypothetically. Today, the only stem cell therapies approved by the U.S. Food and Drug Administration (FDA) are umbilical cord-derived products for various blood and immune disorders. Although scientists are probing the use of embryonic stem cells or iPSCs for conditions ranging from diabetes to Parkinson's disease, such applications remain experimental—or fraudulent, as a growing number of patients treated at unlicensed "stem cell clinics" have painfully learned. (Some have gone blind after receiving bogus AMD therapies at those facilities.)

Last December, researchers at the National Eye Institute in Bethesda, Maryland, began enrolling patients with dry AMD in the country's first clinical trial using tissue grown from the patients' own stem cells. Led by biologist Kapil Bharti, the team intends to implant custom-made RPE cells in 12 recipients. If the effort pans out, it could someday save the sight of countless oldsters.

That, however, is what's technically referred to as a very big "if."

The First Steps

Bharti's trial is not the first in the world to use patient-derived iPSCs to treat age-related macular degeneration. In 2013, Japanese researchers implanted such cells into the eyes of a 77-year-old woman with wet AMD; after a year, her vision had stabilized, and she no longer needed injections to keep abnormal blood vessels from forming. A second patient was scheduled for surgery—but the procedure was canceled after the lab-grown RPE cells showed signs of worrisome mutations. That incident illustrates one potential problem with using stem cells: Under some circumstances, the cells or the tissue they form could turn cancerous.

Bharti and his colleagues have gone to great lengths to avoid such outcomes. "Our process is significantly different," he told me in a phone interview. His team begins with patients' blood stem cells, which appear to be more genomically stable than the skin cells that the Japanese group used. After converting the blood cells to RPE stem cells, his team cultures them in a single layer on a biodegradable scaffold, which helps them grow in an orderly manner. "We think this material gives us a big advantage," Bharti says. The team uses a machine-learning algorithm to identify optimal cell structure and ensure quality control.

It takes about six months for a patch of iPSCs to become viable RPE cells. When they're ready, a surgeon uses a specially designed tool to insert the tiny structure into the retina. Within days, the scaffold melts away, enabling the transplanted RPE cells to integrate fully into their new environment. Bharti's team initially tested their method on rats and pigs with eye damage mimicking AMD. The study, published in January 2019 in Science Translational Medicine, found that at ten weeks, the implanted RPE cells continued to function normally and protected neighboring photoreceptors from further deterioration. No trace of mutagenesis appeared.

Encouraged by these results, Bharti began recruiting human subjects. The Phase 1 trial will likely run through 2022, followed by a larger Phase 2 trial that could last another two or three years. FDA approval would require an even larger Phase 3 trial, with a decision expected sometime between 2025 and 2028—that is, if nothing untoward happens before then. One unknown (among many) is whether implanted cells can thrive indefinitely under the biochemically hostile conditions of an eye with AMD.

"Most people don't have a sense of just how long it takes to get something like this to work, and how many failures, even disasters, there are along the way," says Marco Zarbin, professor and chair of ophthalmology and visual science at Rutgers New Jersey Medical School and co-editor of the book Cell-Based Therapy for Degenerative Retinal Diseases. "The first kidney transplant was done in 1933. But the first successful kidney transplant was in 1954. That gives you a sense of the time frame. We're really taking the very first steps in this direction."

Looking Ahead

Even if Bharti's method proves safe and effective, there's the question of its practicality. "My sense is that using induced pluripotent stem cells to treat the patient from whom they're derived is a very expensive undertaking," Zarbin observes. "So you'd have to have a very dramatic clinical benefit to justify that cost."

Bharti concedes that the price of iPSC therapy is likely to be high, given that each "dose" is formulated for a single individual, requires months to manufacture, and must be administered via microsurgery. Still, he expects economies of scale and production to emerge with time. "We're working on automating several steps of the process," he explains. "When that kicks in, a technician will be able to make products for 10 or 20 people at once, so the cost will drop proportionately."

Meanwhile, other researchers are pressing ahead with therapies for AMD using embryonic stem cells, which could be mass-produced to treat any patient who needs them. But should that approach eventually win FDA approval, Bharti believes there will still be room for a technique that requires neither fetal cell lines nor immunosuppression.

And not only for eye ailments. "The knowledge and expertise we're gaining can be applied to many other iPSC-based therapies," says the scientist, who is currently consulting with several companies that are developing such treatments. "I'm hopeful that we can leverage these approaches for a wide range of applications, whether it's for vision or across the body."

Beyond Henrietta Lacks: How the Law Has Denied Every American Ownership Rights to Their Own Cells

A 2017 portrait of Henrietta Lacks.

The common perception is that Henrietta Lacks was a victim of poverty and racism when in 1951 doctors took samples of her cervical cancer without her knowledge or permission and turned them into the world's first immortalized cell line, which they called HeLa. The cell line became a workhorse of biomedical research and facilitated the creation of medical treatments and cures worth untold billions of dollars. Neither Lacks nor her family ever received a penny of those riches.

But racism and poverty are not to blame for Lacks' exploitation; the reality is even worse. In fact, all patients, then and now, regardless of social or economic status, have absolutely no right to cells that are taken from their bodies. Some have called this biological slavery.

How We Got Here

The case that established this legal precedent is Moore v. Regents of the University of California.

John Moore was diagnosed with hairy-cell leukemia in 1976 and his spleen was removed as part of standard treatment at the UCLA Medical Center. On initial examination his physician, David W. Golde, had discovered some unusual qualities to Moore's cells and made plans prior to the surgery to have the tissue saved for research rather than discarded as waste. That research began almost immediately.

Even after Moore moved to Seattle, Golde kept bringing him back to Los Angeles to collect additional samples of blood and tissue, saying it was part of his treatment. When Moore asked if the work could be done in Seattle, he was told no. To get Moore to keep returning, Golde's charade went so far as to claim he had found a low-income subsidy to cover Moore's flights and a stay in a ritzy hotel, when in fact Golde was paying for them out of his own pocket.

Moore became suspicious when he was asked to sign new consent forms giving up all rights to his biological samples, and he hired an attorney to look into the matter. It turned out that Golde had been lying to his patient all along; he had been collecting samples unnecessary to Moore's treatment and had turned them into a cell line that he and UCLA had patented and from which they had already collected millions of dollars. The market for the cell lines was estimated at $3 billion by 1990.

Moore felt he had been taken advantage of and filed suit to claim a share of the money that had been made off of his body. "On both sides of the case, legal experts and cultural observers cautioned that ownership of a human body was the first step on the slippery slope to 'bioslavery,'" wrote Priscilla Wald, a professor at Duke University whose career has focused on issues of medicine and culture. "Moore could be viewed as asking to commodify his own body part or be seen as the victim of the theft of his most private and inalienable information."

The case bounced around different levels of the court system with conflicting verdicts for nearly six years until the California Supreme Court ruled on July 9, 1990, that Moore had no legal rights to cells and tissue once they were removed from his body.

The court made a utilitarian argument that the cells had no value until scientists manipulated them in the lab. And it would be too burdensome for researchers to track individual donations and subsequent cell lines to assure that they had been ethically gathered and used. It would impinge on the free sharing of materials between scientists, slow research, and harm the public good that arose from such research.

"In effect, what Moore is asking us to do is impose a tort duty on scientists to investigate the consensual pedigree of each human cell sample used in research," the majority wrote. In other words, researchers don't need to ask any questions about the materials they are using.

One member of the court did not see it that way. In his dissent, Stanley Mosk raised the specter of slavery that "arises wherever scientists or industrialists claim, as defendants have here, the right to appropriate and exploit a patient's tissue for their sole economic benefit—the right, in other words, to freely mine or harvest valuable physical properties of the patient's body. … This is particularly true when, as here, the parties are not in equal bargaining positions."

Mosk also cited the appeals court decision that the majority overturned: "If this science has become for profit, then we fail to see any justification for excluding the patient from participation in those profits."

But the majority bought the arguments that Golde, UCLA, and the nascent biotechnology industry in California had made in amici briefs filed throughout the legal proceedings. The road was now cleared for them to develop products worth billions without having to worry about or share with the persons who provided the raw materials upon which their research was based.

Critical Views

Biomedical research requires a continuous and ever-growing supply of human materials for the foundation of its ongoing work. If an increasing number of patients come to feel as John Moore did, that the system is ripping them off, then they become much less likely to consent to use of their materials in future research.

Some legal and ethical scholars say that donors should be able to limit the types of research allowed for their tissues and that researchers should be monitored to assure compliance with those agreements. For example, today it is commonplace for companies to certify that their clothing is not made by child labor, that their coffee is grown under fair trade conditions, and that food labeled kosher is properly handled. Should we ask any less of our pharmaceuticals than that the donors whose cells made such products possible have been treated honestly and fairly, and share in the financial bounty that comes from such drugs?

Protection of individual rights is a hallmark of the American legal system, says Lisa Ikemoto, a law professor at the University of California Davis. "Putting the needs of a generalized public over the interests of a few often rests on devaluation of the humanity of the few," she writes in a reimagined version of the Moore decision that upholds Moore's property claims to his excised cells. The commentary is in a chapter of a forthcoming book in the Feminist Judgment series, where authors may only use legal precedent in effect at the time of the original decision.

"Why is the law willing to confer property rights upon some while denying the same rights to others?" asks Radhika Rao, a professor at the University of California, Hastings College of the Law. "The researchers who invest intellectual capital and the companies and universities that invest financial capital are permitted to reap profits from human research, so why not those who provide the human capital in the form of their own bodies?" It might be seen as a kind of sweat equity, where cash-strapped patients make a valuable in-kind contribution to the enterprise.

The Moore court also made a big deal about inhibiting the free exchange of samples between scientists. That has become much less the situation over the more than three decades since the decision was handed down. Ironically, this decision, along with other laws and regulations, has since strengthened the power of patents in biomedicine and by doing so has increased secrecy and limited sharing.

"Although the research community theoretically endorses the sharing of research, in reality sharing is commonly compromised by the aggressive pursuit and defense of patents and by the use of licensing fees that hinder collaboration and development," Robert D. Truog, Harvard Medical School ethicist and colleagues wrote in 2012 in the journal Science. "We believe that measures are required to ensure that patients not bear all of the altruistic burden of promoting medical research."

Additionally, the increased complexity of research and the need for exacting standardization of materials has given rise to an industry that supplies certified chemical reagents, cell lines, and whole animals bred to have specific genetic traits to meet research needs. This has been more efficient for research and has helped to ensure that results from one lab can be reproduced in another.

The court's rationale of fostering collaboration and free exchange of materials between researchers also has been undercut by the changing structure of that research. Big pharma has shrunk the size of its own research labs and over the last decade has worked out cooperative agreements with major research universities where the companies contribute to the research budget and in return have first dibs on any findings (and sometimes a share of patent rights) that come out of those university labs. These agreements have had a chilling effect on the exchange of materials between universities.

Perhaps tracking cell line donors and use restrictions on those donations might have been burdensome to researchers when Moore was being litigated. Some labs probably still kept their cell line records on 3x5 index cards, computers were primarily expensive room-size behemoths with limited capacity, the internet barely existed, and there was no cloud storage.

But that was the dawn of a new technological age and standards have changed. Now cell lines are kept in state-of-the-art subzero storage units, tagged with the source, type of tissue, date gathered, and often other information. Adding a few more data fields and contacting the donor if and when appropriate does not seem likely to disrupt the research process, as the court asserted it would.

Forging the Future

"U.S. universities are awarded almost 3,000 patents each year. They earn more than $2 billion each year from patent royalties. Sharing a modest portion of these profits is a novel method for creating a greater sense of fairness in research relationships that we think is worth exploring," wrote Mark Yarborough, a bioethicist at the University of California Davis Medical School, and colleagues. That was penned nearly a decade ago and those numbers have only grown.

The Michigan BioTrust for Health might serve as a useful model in tackling some of these issues. Dried blood spots have been collected from all newborns for half a century to be tested for certain genetic diseases, but controversy arose when the huge archive of dried spots was used for other research projects. As a result, the state created a nonprofit organization to in essence become a biobank and manage access to these spots only for specific purposes, and also to share any revenue that might arise from that research.

"If there can be no property in a whole living person, does it stand to reason that there can be no property in any part of a living person? If there were, can it be said that this could equate to some sort of 'biological slavery'?" Irish ethicist Asim A. Sheikh wrote several years ago. "Any amount of effort spent pondering the issue of 'ownership' in human biological materials with existing law leaves more questions than answers."

Perhaps the biggest question will arise when -- not if but when -- it becomes possible to clone a human being. Would a human clone be a legal person or the property of those who created it? Current legal precedent points to it being the latter.

Today, October 4, is the 70th anniversary of Henrietta Lacks' death from cancer. Over those decades her immortalized cells have helped make possible miraculous advances in medicine and have had a role in generating billions of dollars in profits. Surviving family members have spoken many times about seeking a share of those profits in the name of social justice; they intend to file lawsuits today. Such cases will succeed or fail on their own merits. But regardless of their specific outcomes, one can hope that they spark a larger public discussion of the role of patients in the biomedical research enterprise and lead to establishing a legal and financial claim for their contributions toward the next generation of biomedical research.

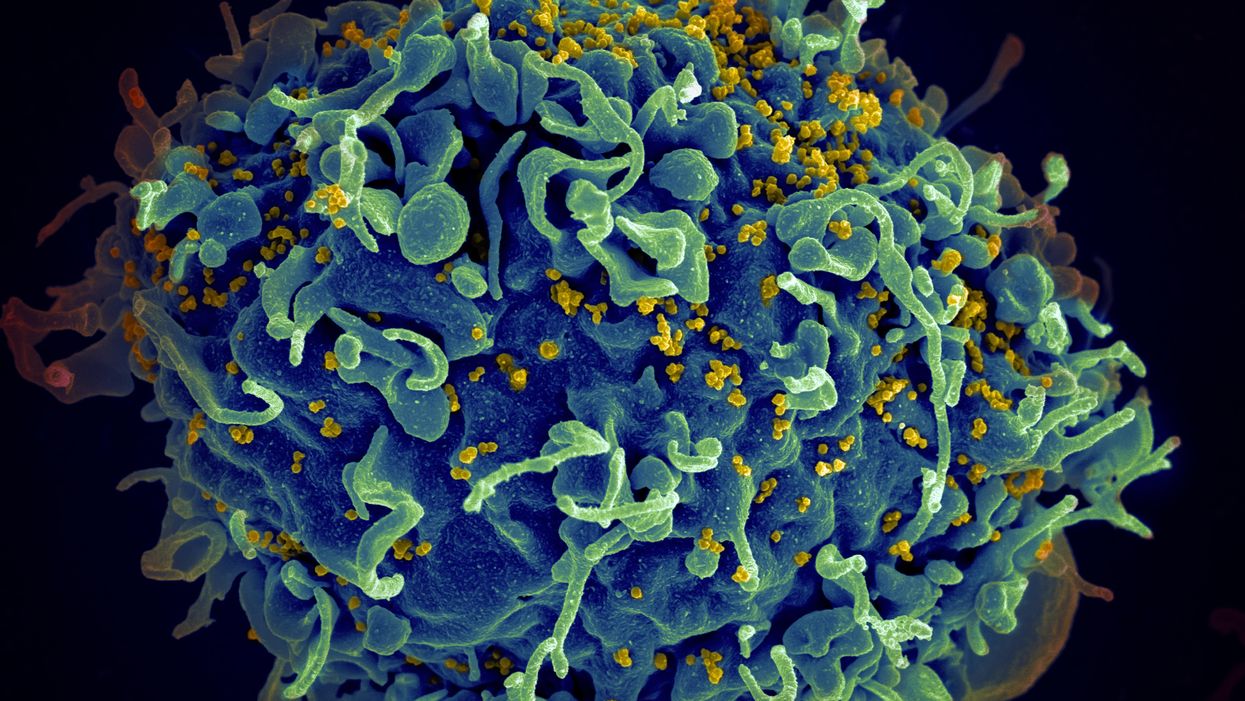

Is a Successful HIV Vaccine Finally on the Horizon?

HIV (yellow) infecting a human cell.

Few vaccines have been as complicated—and filled with false starts and crushed hopes—as the development of an HIV vaccine.

While antivirals help HIV-positive patients live longer and reduce viral transmission to virtually nil, these medications must be taken for life, and preventative medications like pre-exposure prophylaxis, known as PrEP, need to be taken every day to be effective. Vaccines, even if they need boosters, would make prevention much easier.

In August, Moderna began human trials for two HIV vaccine candidates based on messenger RNA.

As they have with the Covid-19 pandemic, mRNA vaccines could change the game. The technology could be applied to gene editing therapy, cancer, and other infectious diseases, and even to a universal influenza vaccine.

In the past, three other mRNA vaccines completed Phase 2 trials without success. But the easily customizable platforms mean the vaccines can be tweaked to better target HIV as researchers learn more.

Ever since HIV was discovered as the virus causing AIDS, researchers have been searching for a vaccine. But the decades-long journey has so far been fruitless; while some vaccine candidates showed promise in early trials, none of them has worked well in later-stage clinical trials.

There are two main reasons for this: HIV evolves incredibly quickly, and the structure of the virus makes it very difficult to neutralize with antibodies.

"You know the panic that goes on when a new coronavirus variant surfaces?" asked John Moore, professor of microbiology and immunology at Weill Cornell Medicine who has researched HIV vaccines for 25 years. "With HIV, that kind of variation [happens] pretty much every day in everybody who's infected. It's just orders of magnitude more variable a virus."

Vaccines like these usually work by imitating the outer layer of a virus to teach cells how to recognize and fight off the real thing before it enters the cell. "If you can prevent landing, you can essentially keep the virus out of the cell," said Larry Corey, the former president and director of the Fred Hutchinson Cancer Research Center, who helped run a recent trial of a Johnson & Johnson HIV vaccine candidate that failed its first efficacy trial.

Like the coronavirus, HIV also has a spike protein with a receptor-binding domain—what Moore calls "the notorious RBD"—that could be neutralized with antibodies. But while that target sticks out like a sore thumb in a virus like SARS-CoV-2, in HIV it's buried under a dense shield. That's not the only target for neutralizing the virus, but all of the targets evolve rapidly and are difficult to reach.

"We understand these targets. We know where they are. But it's still proving incredibly difficult to raise antibodies against them by vaccination," Moore said.

In fact, mRNA vaccines for HIV have been under development for years. The Covid vaccines were built on decades of that research. But it's not as simple as building on this momentum, because of how much more complicated HIV is than SARS-CoV-2, researchers said.

"They haven't succeeded because they were not designed appropriately and haven't been able to induce what is necessary for them to induce," Moore said. "The mRNA technology will enable you to produce a lot of antibodies to the HIV envelope, but if they're the wrong antibodies that doesn't solve the problem."

Part of the problem is that the HIV vaccines have to perform better than our own immune systems. Many vaccines are created by imitating how our bodies overcome an infection, but that doesn't happen with HIV. Once you have the virus, you can't fight it off on your own.

"The human immune system actually does not know how to innately cure HIV," Corey said. "We needed to improve upon the human immune system to make it quicker… with Covid. But we have to actually be better than the human immune system" with HIV.

But in the past few years, there have been impressive leaps in understanding how an HIV vaccine might work. Scientists have known for decades that neutralizing antibodies are key for a vaccine. But in 2010 or so, they were able to mimic the HIV spike and understand how antibodies need to disable the virus. "It helps us understand the nature of the problem, but doesn't instantly solve the problem," Moore said. "Without neutralizing antibodies, you don't have a chance."

Because the vaccines need to induce broadly neutralizing antibodies, and because it's very difficult to neutralize the highly variable HIV, any vaccine will likely be a series of shots that teach the immune system to be on the lookout for a variety of potential attacks.

"Each dose is going to have to have a different purpose," Corey said. "And we hope by the end of the third or fourth dose, we will achieve the level of neutralization that we want."

That's not ideal, because each individual component has to be made and tested—and four shots make the vaccine harder to administer.

"You wouldn't even be going down that route, if there was a better alternative," Moore said. "But there isn't a better alternative."

The mRNA platform is exciting because it is easily customizable, which is especially important in fighting against a shapeshifting, complicated virus. And the mRNA platform has shown itself, in the Covid pandemic, to be safe and quick to make. Effective Covid vaccines were comparatively easy to develop, since the coronavirus is easier to battle than HIV. But companies like Moderna are capitalizing on their success to launch other mRNA therapeutics and vaccines, including the HIV trial.

"You can make the vaccine in two months, three months, in a research lab, and not a year—and the cost of that is really less," Corey said. "It gives us a chance to try many more options, if we've got a good response."

In a trial on macaque monkeys, the Moderna vaccine reduced the chances of infection by 85 percent. "The mRNA platform represents a very promising approach for the development of an HIV vaccine in the future," said Dr. Peng Zhang, who is helping lead the trial at the National Institute of Allergy and Infectious Diseases.

Moderna's trial in humans represents "a very exciting possibility for the prevention of HIV infection," Dr. Monica Gandhi, director of the UCSF-Gladstone Center for AIDS Research, said in an email. "We in HIV medicine have been desperate to find a vaccine that has effectiveness, but this goal has been elusive so far."

If a successful HIV vaccine is developed, the series of shots could include an mRNA shot that primes the immune system, followed by protein subunits that generate the necessary antibodies, Moore said.

"I think it's the only thing that's worth doing," he said. "Without something complicated like that, you have no chance of inducing broadly neutralizing antibodies."

"I can't guarantee you that's going to work," Moore added. "It may completely fail. But at least it's got some science behind it."