Researchers Behaving Badly: Known Frauds Are "the Tip of the Iceberg"

Disgraced stem cell researcher and celebrity surgeon Paolo Macchiarini.

Last week, the whistleblowers in the Paolo Macchiarini affair at Sweden's Karolinska Institutet went on the record here to detail the retaliation they suffered for trying to expose a star surgeon's appalling research misconduct.

The whistleblowers had discovered that in six published papers, Macchiarini falsified data, lied about the condition of patients and circumvented ethical approvals. As a result, multiple patients suffered and died. But Karolinska turned a blind eye for years.

Scientific fraud of the type committed by Macchiarini is rare, but studies suggest that it's on the rise. Just this week, for example, Retraction Watch and STAT together broke the news that a Harvard Medical School cardiologist and stem cell researcher, Piero Anversa, falsified data in a whopping 31 papers, which now have to be retracted. Anversa had claimed that he could regenerate heart muscle by injecting bone marrow cells into damaged hearts, a result that no one has been able to replicate.

A 2009 study published in PLOS ONE, a journal of the Public Library of Science (PLOS), found that about two percent of scientists admitted to committing fabrication, falsification or plagiarism in their work. That's a small number, but up to one third of scientists admit to committing "questionable research practices" that fall into a gray area between rigorous accuracy and outright fraud.

These dubious practices may include misrepresentations, research bias, and inaccurate interpretations of data. One common questionable research practice entails formulating a hypothesis after the research is done in order to claim a successful premise. Another highly questionable practice that can shape research is ghost-authoring by representatives of the pharmaceutical industry and other for-profit fields. Still another is gifting co-authorship to unqualified but powerful individuals who can advance one's career. Such practices can unfairly bolster a scientist's reputation and increase the likelihood of getting the work published.

The above percentages represent what scientists admit to doing themselves; when they evaluate the practices of their colleagues, the numbers jump dramatically. In a 2012 study published in the Journal of Research in Medical Sciences, researchers estimated that 14 percent of other scientists commit serious misconduct, while up to 72 percent engage in questionable practices. While these are only estimates, the problem is clearly not one of just a few bad apples.

In the PLOS study, author Daniele Fanelli writes that increasing evidence suggests the known frauds are "just the 'tip of the iceberg,' and that many cases are never discovered" because fraud is extremely hard to detect.

In addition, it's likely that most cases of scientific misconduct go unreported because of the high price of whistleblowing. The whistleblowers in the Macchiarini case showed extraordinary persistence in their multi-year campaign to stop his deadly trachea implants, while suffering serious damage to their careers. Such heroic efforts to unmask fraud are probably rare.

To make matters worse, there are numerous players in the scientific world who may be complicit in either committing misconduct or covering it up. These include not only primary researchers but co-authors, institutional executives, journal editors, and industry leaders. Essentially everyone wants to be associated with big breakthroughs, and they may overlook scientifically shaky foundations when a major advance is claimed.

Another part of the problem is that it's rare for students in science and medicine to receive an education in ethics. And studies have shown that older, more experienced and possibly jaded researchers are more likely to fudge results than their younger, more idealistic colleagues.

So, given the steep price that individuals and institutions pay for scientific misconduct, what compels them to go down that road in the first place? According to the JRMS study, individuals face intense pressures to publish and to attract grant money in order to secure teaching positions at universities. Once they have acquired positions, the pressure is on to keep the grants and publishing credits coming in order to obtain tenure, be appointed to positions on boards, and recruit flocks of graduate students to assist in research. And not to be underestimated is the human ego.

Paolo Macchiarini is an especially vivid example of a scientist seeking not only fortune, but fame. He liberally (and falsely) claimed powerful politicians and celebrities, even the Pope, as patients or admirers. He may be an extreme example, but we live in an age of celebrity scientists who bring huge amounts of grant money and high prestige to the institutions that employ them.

The media plays a significant role in both glorifying stars and unmasking frauds. In the Macchiarini scandal, the media first lifted him up, as in NBC's laudatory documentary, "A Leap of Faith," which painted him as a kind of miracle-worker, and then brought him down, as in the January 2016 documentary, "The Experiments," which chronicled the agonizing death of one of his patients.

Institutions can also play a crucial role in scientific fraud by putting more emphasis on the number and frequency of papers published than on their quality. The whole course of a scientist's career is profoundly affected by a metric called the h-index, which combines how many papers a researcher has published with how often those papers are cited by other researchers: a scientist has an h-index of h if h of his or her papers have each been cited at least h times. Raising one's h-index becomes an overriding goal, sometimes eclipsing the kind of patient, time-consuming research that leads to true breakthroughs based on reliable results.
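For readers curious about the arithmetic behind the metric, here is a minimal Python sketch of the standard h-index definition (the largest number h such that h papers have each been cited at least h times). The function name `h_index` and the citation counts are hypothetical, chosen only for illustration; real databases differ in which citations they count.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that at least h papers have >= h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    # The paper at position `rank` still counts toward h only if it
    # has been cited at least `rank` times.
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical researcher with six papers.
print(h_index([10, 8, 5, 4, 3, 0]))  # → 4
```

In this sketch, adding three more papers cited once each leaves the score at 4; the index rises only when enough papers accumulate enough citations, which is why a steady stream of well-cited publications becomes the goal.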

Universities also create a high-pressure environment that encourages scientists to cut corners. They, too, place a heavy emphasis on attracting large monetary grants and accruing fame and prestige. This can lead them, just as it led Karolinska, to protect a star scientist's sloppy or questionable research. According to Dr. Andrew Rosenberg, who is director of the Center for Science and Democracy at the U.S.-based Union of Concerned Scientists, "Karolinska defended its investment in an individual as opposed to the long-term health of the institution. People were dying, and they should have outsourced the investigation from the very beginning."

Having institutions investigate their own practices is a conflict of interest from the get-go, says Rosenberg.

Scientists, universities, and research institutions are also not immune to fads. "Hot" subjects attract grant money and confer prestige, incentivizing scientists to shift their research priorities in a direction that garners more grants. This can mean neglecting the scientist's true area of expertise and interests in favor of a subject that's more likely to attract grant money. In Macchiarini's case, he was allegedly at the forefront of the currently sexy field of regenerative medicine -- a field in which Karolinska was making a huge investment.

The relative scarcity of resources intensifies the already significant pressure on scientists. They may want to publish results rapidly, since they face many competitors for limited grant money, academic positions, students, and influence. The scarcity means that a great many researchers will fail while only a few succeed. Once again, the temptation may be to rush research and to show it in the most positive light possible, even if it means fudging or exaggerating results.

Intense competition can have a perverse effect on researchers, according to a 2007 study in the journal Science and Engineering Ethics. Not only does it place undue pressure on scientists to succeed, it frequently leads to the withholding of information from colleagues, which undermines a system in which new discoveries build on the previous work of others. Researchers may feel compelled to withhold their results because of the pressure to be the first to publish. The study's authors propose that more investment in basic research from governments could alleviate some of these competitive pressures.

Scientific journals, although they play a part in publishing flawed science, can't be expected to investigate cases of suspected fraud, says the German science blogger Leonid Schneider. Schneider's writings helped to expose the Macchiarini affair.

"They just basically wait for someone to retract problematic papers," he says.

He also notes that, while American scientists can go to the Office of Research Integrity to report misconduct, whistleblowers in Europe have no external authority to whom they can appeal to investigate cases of fraud.

"They have to go to their employer, who has a vested interest in covering up cases of misconduct," he says.

Science is increasingly international. Major studies can include collaborators from several different countries, and Schneider suggests there should be an international body, accessible to all researchers, that would investigate suspected fraud.

Ultimately, says Rosenberg, the scientific system must incorporate trust. "You trust co-authors when you write a paper, and peer reviewers at journals trust that scientists at research institutions like Karolinska are acting with integrity."

Without trust, the whole system falls apart. It's the trust of the public, an elusive asset once it has been betrayed, that science depends upon for its very existence. Scientific research is overwhelmingly financed by tax dollars, and the need for the goodwill of the public is more than an abstraction.

The Macchiarini affair raises a profound question of trust and responsibility: Should multiple co-authors be held responsible for a lead author's misconduct?

Karolinska apparently believes so. When the institution at last owned up to the scandal, it vindictively found Karl-Henrik Grinnemo, one of the whistleblowers, guilty of scientific misconduct as well. It also designated two other whistleblowers as "blameworthy" for their roles as co-authors of the papers on which Macchiarini was the lead author.

As a result, the whistleblowers' reputations and employment prospects have become collateral damage. Accusations of research misconduct can be a career killer. Research grants dry up, employment opportunities evaporate, publishing becomes next to impossible, and collaborators vanish into thin air.

Grinnemo contends that co-authors should only be responsible for their discrete contributions, not for the data supplied by others.

"Different aspects of a paper are highly specialized," he says, "and that's why you have multiple authors. You cannot go through every single bit of data because you don't understand all the parts of the article."

This is especially true in multidisciplinary, translational research, where there are sometimes 20 or more authors. "You have to trust co-authors, and if you find something wrong you have to notify all co-authors. But you couldn't go through everything or it would take years to publish an article," says Grinnemo.

Though the pressures facing scientists are very real, the problem of misconduct is not inevitable. Along with increased support from governments and industry, a change in academic culture that emphasizes quality over quantity of published studies could help encourage meritorious research.

But beyond that, trust will always play a role when numerous specialists unite to achieve a common goal: the accumulation of knowledge that will promote human health, wealth, and well-being.

[Correction: An earlier version of this story mistakenly credited The New York Times with breaking the news of the Anversa retractions, rather than Retraction Watch and STAT, which jointly published the exclusive on October 14th. The piece in the Times ran on October 15th. We regret the error.]

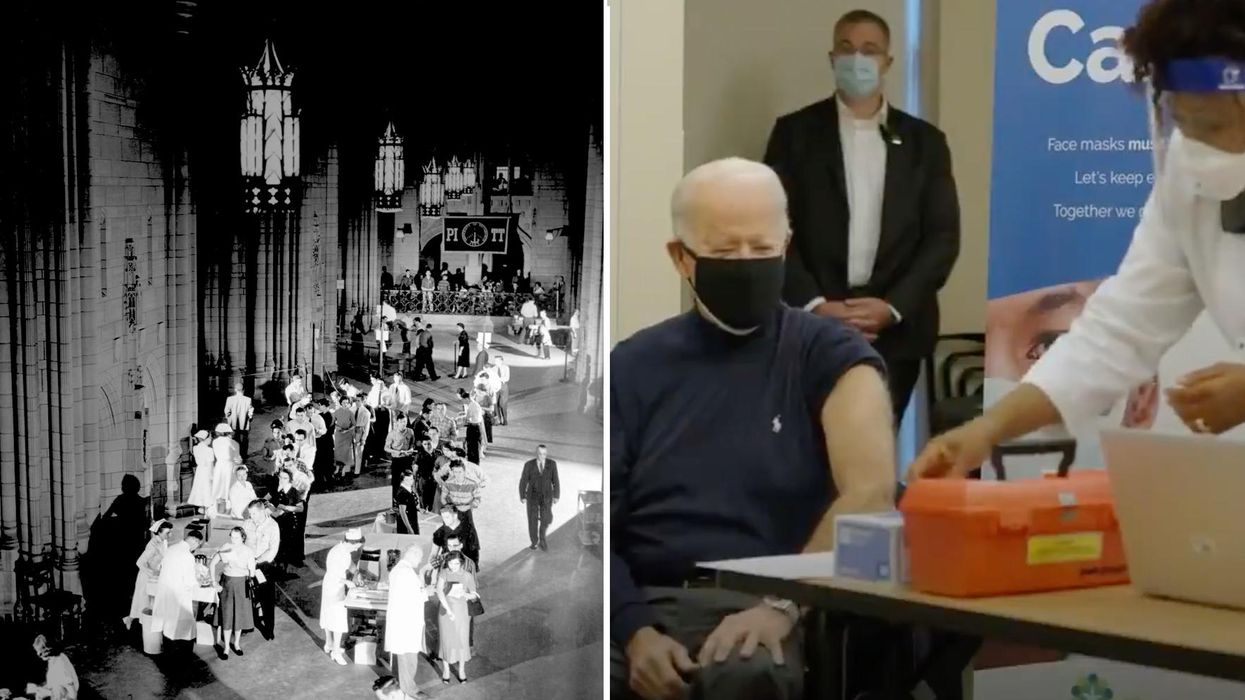

On left, people excitedly line up for Salk's polio vaccine in 1957; on right, Joe Biden gets one of the COVID vaccines on December 21, 2020.

On the morning of April 12, 1955, newsrooms across the United States inked headlines onto newsprint: the Salk polio vaccine was "safe, effective, and potent." This was long-awaited news. Americans had limped through decades of fear, unaware of what caused polio or how to cure it, faced with the disease's terrifying, visible power to paralyze and kill, particularly children.

The announcement of the polio vaccine was celebrated with noisy jubilation: church bells rang, factory whistles sounded, people wept in the streets. Within weeks, mass inoculation began as the nation put its faith in a vaccine that would end polio.

Today, most of us are blissfully ignorant of child polio deaths, making it easier to believe that we have not personally benefited from the development of vaccines. According to Dr. Steven Pinker, cognitive psychologist and author of the bestselling book Enlightenment Now, we've become blasé to the gifts of science. "The default expectation is not that disease is part of life and science is a godsend, but that health is the default, and any disease is some outrage," he says.

We're now in the early stages of another vaccine rollout, one we hope will end the ravages of the COVID-19 pandemic. And yet, the Pfizer, Moderna, and AstraZeneca vaccines are met with far greater hesitancy and skepticism than the polio vaccine was in the 1950s.

In 2021, concerns over the speed and safety of vaccine development and technology plague this heroic global effort, but the roots of vaccine hesitancy run far deeper. Vaccine hesitancy has always existed in the U.S., even in the polio era, motivated in part by fears around "living virus" in a bad batch of vaccines produced by Cutter Laboratories in 1955. But in the last half century, we've witnessed seismic cultural shifts—loss of public trust, a rise in misinformation, heightened racial and socioeconomic inequality, and political polarization have all intensified vaccine-related fears and resistance. Making sense of how we got here may help us understand how to move forward.

The Rise and Fall of Public Trust

When the polio vaccine was released in 1955, "we were nearing an all-time high point in public trust," says Matt Baum, Harvard Kennedy School professor and lead author of several reports measuring public trust and vaccine confidence. Baum explains that the U.S. was experiencing a post-war boom following the Allied triumph in WWII, a popular Roosevelt presidency, and the rapid innovation that elevated the country to an international superpower.

The 1950s witnessed the emergence of nuclear technology, a space program, and unprecedented medical breakthroughs, adds Emily Brunson, Texas State University anthropologist and co-chair of the Working Group on Readying Populations for COVID-19 Vaccine. "Antibiotics were a game changer," she states. Where people had once spent a month sick with pneumonia, they suddenly had access to pills that sped their recovery.

During this period, science seemed to hold all the answers; people embraced the idea that we could "come to know the world with an absolute truth," Brunson explains. Doctors were portrayed as unquestioned gods, so Americans were primed to trust experts who told them the polio vaccine was safe.

That blind acceptance eroded in the 1960s and 70s as people came to understand that science can be inherently political. "Getting to an absolute truth works out great for white men, but these things affect people socially in radically different ways," Brunson says. As the culture began questioning the white, patriarchal biases of science, doctors lost their god-like status and experts were pushed off their pedestals. This trend continues with greater intensity today, as President Trump has led a campaign against experts and waged a war on science that began long before the pandemic.

The Shift in How We Consume Information

In the 1950s, the media created an informational consensus. The fundamental ideas the public consumed about the state of the world were unified. "People argued about the best solutions, but didn't fundamentally disagree on the factual baseline," says Baum. Indeed, the messaging around the polio vaccine was centralized and consistent, led by President Roosevelt's successful March of Dimes crusade. People of lower socioeconomic status with limited access to this information were less likely to have confidence in the vaccine, but most people consumed media that assured them of the vaccine's safety and mobilized them to receive it.

Today, the information we consume is no longer centralized; in fact, just the opposite. "When you take that away, it's hard for people to know what to trust and what not to trust," Baum explains. We've witnessed an increase in polarization and in the technology that makes it easier to give people what they want to hear, reinforcing the human tendencies to vilify the other side and to cling to our preexisting ideas. When information is engineered to further an agenda, each choice and risk calculation made while navigating the COVID-19 pandemic is deeply politicized.

This polarization maps onto a rise in socioeconomic inequality and economic uncertainty. These factors, associated with a sense of lost control, prime people to embrace misinformation, explains Baum, especially when the situation is difficult to comprehend. "The beauty of conspiratorial thinking is that it provides answers to all these questions," he says. Today's insidious fragmentation of news media accelerates the circulation of mis- and disinformation, reaching more people faster, regardless of veracity or motivation. In the case of vaccines, skepticism around their origin, safety, and motivation is intensified.

Alongside the rise in polarization, Pinker says "the emotional tone of the news has gone downward since the 1940s, and journalists consider it a professional responsibility to cover the negative." Relentless focus on everything that goes wrong further erodes public trust and paints a picture of the world getting worse. "Life saved is not a news story," says Pinker, but perhaps it should be, he continues. "If people were more aware of how much better life was generally, they might be more receptive to improvements that will continue to make life better. These improvements don't happen by themselves."

The Future Depends on Vaccine Confidence

So far, the U.S. has been unable to mitigate the catastrophic effects of the pandemic through social distancing, testing, and contact tracing. President Trump has downplayed the effects and threat of the virus, censored experts and scientists, given up on containing the spread, and mobilized his base to protest masks. The Trump Administration failed to devise a national plan, so our national plan has defaulted to hoping for the "miracle" of a vaccine. And they are "something of a miracle," Pinker says, describing vaccines as "the most benevolent invention in the history of our species." In record-breaking time, three vaccines have arrived. But their impact will be weakened unless we achieve mass vaccination. As Brunson notes, "The technology isn't the fix; it's people taking the technology."

Significant challenges remain, including facilitating widespread access and supporting on-the-ground efforts to allay concerns and build trust with specific populations that have historic reasons for distrust, says Brunson. Baum predicts continuing delays, as well as deaths from unrelated causes that will nonetheless be linked to the vaccine.

Still, there's every reason for hope. The new administration "has its eyes wide open to these challenges. These are the kind of problems that are amenable to policy solutions if we have the will," Baum says. He forecasts widespread vaccination by late summer and a bounce back from the economic damage, a "Good News Story" that will bolster vaccine acceptance in the future. And Pinker reminds us that science, medicine, and public health have greatly extended our lives in the last few decades, a trend that can only continue if we're willing to roll up our sleeves.

Scientists Are Working to Develop a Clever Nasal Spray That Tricks the Coronavirus Out of the Body

Biochemist Longxing Cao is working with colleagues at the University of Washington on promising research to disable infectious coronavirus in a person's nose.

Imagine this scenario: you get an annoying cough and a bit of a fever. When you wake up the next morning you lose your sense of taste and smell. That sounds familiar, so you head to a doctor's office for a Covid test, which comes back positive.

Your next step? An anti-Covid nasal spray, of course: a "trickster drug" that will clear the once-dangerous and deadly virus out of the body. The drug works by tricking the coronavirus with decoy receptors that appear to be just like those on the surface of our own cells. The virus latches onto the drug's molecules "thinking" it is breaking into human cells, but instead it is flushed out of your system before it can cause any serious damage.

This may sound like science fiction, but several research groups are already working on such trickster coronavirus drugs, with some candidates close to clinical trials and possibly even becoming available late this year. The teams began working on them when the pandemic arrived, and continued in lockdown.

When the pandemic first hit and the state of California issued a lockdown order on March 16, postdoctoral researchers Anum and Jeff Glasgow found themselves stuck at home with nothing to do. The two scientists, who study bioengineering, felt that they were well equipped to research molecular ways of disabling the coronavirus's cell-penetrating spike protein, but they could no longer come to their labs at the University of California San Francisco.

"We were upset that no one put us in the game," says Anum Glasgow. "We have a lot of experience between us doing these types of projects so we wanted to contribute." But they still had computers, so they began modeling potential virus-disabling proteins in silico using Robetta, software for designing and modeling protein structures that is developed and maintained by University of Washington biochemist David Baker and his lab.

The SARS-CoV-2 virus that causes Covid-19 uses its surface spike protein to bind to a specific receptor on human cells called ACE2. Unfortunately for humans, the spike protein's molecular shape fits the ACE2 receptor like a well-cut key, making it very successful at breaking into our cells. But if one could design a molecular ACE2 mimic to "trick" the virus into latching onto it instead, the virus would no longer be able to enter cells. Scientists call such mimics receptor traps, inhibitors, or blockers. "It would block the adhesive part of the virus that binds to human cells," explains Jim Wells, professor of pharmaceutical chemistry at UCSF, whose lab took part in designing the ACE2-receptor mimic, working with the Glasgows and other colleagues.

The idea of disabling infectious or inflammatory agents by tricking them into binding to their targets' molecular look-alikes is something scientists have tried with other diseases. The anti-inflammatory drugs commonly used to treat autoimmune conditions, including rheumatoid arthritis, Crohn's disease and ulcerative colitis, rely on conceptually similar molecular mechanisms. Called TNF blockers, these drugs block the activity of tumor necrosis factor (TNF), an inflammatory cytokine, one of the molecules that promote inflammation. "One of the biggest selling drugs in the world is a receptor trap," says Jeff Glasgow. "It acts as a receptor decoy. There's a TNF receptor that traps the cytokine."

In the recent past, scientists also pondered a similar look-alike approach to treating urinary tract infections, which are often caused by a pathogenic strain of Escherichia coli. An E. coli bacterium resembles a squid with protruding filaments equipped with proteins that can change their shape to form hooks, used to hang onto specific sugar molecules called ligands, which are present on the surface of the epithelial cells lining the urinary tract.

A recent study found that a sugar-like compound that's structurally similar to that ligand can play a similar trick on E. coli. When administered in sufficient amounts, the compound lures the bacteria into hooking onto it instead, and they are then excreted from the body in urine. The "trickster" method has also been tried against HIV, but it wasn't very effective because HIV has a high mutation rate and multiple ways of entering human cells.

But the coronavirus spike protein's shape is more stable. And while it has a strong affinity for the ACE2 receptors, its natural binding to these receptors isn't perfect, which allowed the UCSF researchers to design a mimic with a better grip. "We saw some imperfections in that lock and key and we created something better," says Wells. "We made a 10 times tighter adhesive." The team demonstrated that their traps neutralized SARS-CoV-2 in lab experiments and published their study in the Proceedings of the National Academy of Sciences.

Baker, who is the director of the Institute for Protein Design at the University of Washington, was also devising ACE2 look-alikes with his team. Unlike the UCSF team, however, they didn't improve on the existing virus-receptor lock and key; instead, they designed their mimics from scratch. Using Robetta, they digitally modeled over two million proteins, zeroed in on over 100,000 potential candidates and identified a handful with strong promise for blocking SARS-CoV-2, testing them against the virus in human cells. Their design of the miniprotein inhibitors was published in the journal Science.

Biochemist David Baker, pictured in his lab at the University of Washington.

UW

The concept of the ACE2 receptor mimics is somewhat similar to antibody plasma, but better, the teams explain. Antibodies don't always coat all of the virus's spike proteins and sometimes don't bind perfectly. By contrast, the ACE2 mimics compete directly with the virus's entry mechanism. ACE2 mimics are also easier and cheaper to make, researchers say.

Antibodies, which are long protein chains, must be grown inside mammalian cells, which is a slow and costly process. As drugs, antibody cocktails must be kept refrigerated. In contrast, proteins that mimic ACE2 receptors are smaller and can be produced by bacteria easily and inexpensively. Designed to specification, these proteins don't need refrigeration and are easy to store. "We designed them to be very stable," says Baker. "Our computation design tries to come up with the stable proteins that have the desired functions."

That stability may allow the team to create an inhaled drug rather than an intravenous one, which would be another advantage over antibody plasma, which is given via an IV. The team envisions people spraying the miniprotein solution into their noses, creating a protective coating that would disable the inhaled virus. "The infection starts from your respiratory system, from your nose," explains Longxing Cao, a co-author of the study, so a nasal spray would be a natural way to administer the drug. "So that you can have it like a layer, similar to a mask."

As the virus evolves, new variants are arising. But the teams think that their ACE2 protein mimics should work on the new variants too for several reasons. "Since the new SARS-CoV-2 variants still use ACE2 for their cell entry, they will likely still be susceptible to ACE2-based traps," Glasgow says.

Cao explains that their approach should also work because most of the mutations happen outside the ACE2 binding region. Plus, the team is building multiple binders that can bind to an array of coronavirus variants. "Our binder can still bind with most of the variants and we are trying to make one protein that could inhibit all the future escape variants," he says.

Baker and Cao hope that their miniproteins may be available to patients later this year. But beyond getting the medicine out to patients, this approach will allow researchers to test computer-modeled mimics end to end with unprecedented speed. That would give humans a leg up in future pandemics or zoonotic disease outbreaks, which are an increasingly pressing threat due to human activity and climate change.

"That's what we are focused on right now—understanding what we have learned from this pandemic to improve our design methods," says Baker. "So that we should be able to obtain binders like these very quickly when a new pandemic threat is identified."

Lina Zeldovich has written about science, medicine and technology for Popular Science, Smithsonian, National Geographic, Scientific American, Reader’s Digest, the New York Times and other major national and international publications. A Columbia J-School alumna, she has won several awards for her stories, including the ASJA Crisis Coverage Award for Covid reporting, and has been a contributing editor at Nautilus Magazine. In 2021, Zeldovich released her first book, The Other Dark Matter, published by the University of Chicago Press, about the science and business of turning waste into wealth and health. You can find her on http://linazeldovich.com/ and @linazeldovich.