Should Your Employer Have Access to Your Fitbit Data?

A woman using a wearable device to track her fitness activities.

The modern world has become more dependent on technology than ever. We want to accomplish as much as possible with minimal human effort. And increasingly, we want our technology to go wherever we go.

At work, we are leveraging the immense computing power of tablet computers. To supplement social interaction, we have turned to smartphones and social media. Lately, another novel and exciting technology is on the rise: wearable devices that track our personal data, like the Fitbit and the Apple Watch. Interest in and demand for these devices are soaring. CCS Insight, an organization that studies developments in digital markets, has reported that the market for wearables will be worth $25 billion by next year. By 2020, it is estimated that a staggering 411 million smart wearable devices will be sold.

Although wearables include smartwatches, fitness bands, and VR/AR headsets, devices that monitor and track health data are gaining the most traction. Apple has announced the release of Apple Health Records, a new feature for its iOS operating system that will allow users to view and store medical records on their smart devices. Hospitals such as NYU Langone have started to use this feature on Apple Watch to send push notifications to ER doctors for vital lab results, so that they can review and respond immediately. Previously, Google partnered with Novartis to develop a smart contact lens that can monitor blood glucose levels in diabetic patients, although the idea has been in limbo.

As these examples illustrate, wearable devices present unique opportunities to address some of the most intractable problems in modern healthcare. At the same time, these devices operate by collecting massive amounts of personal information on unsuspecting users and pose unique ethical challenges regarding informed consent, user privacy, and health data security. If there is a lesson from the recent Facebook debacle, it is that big data applications, even those using anonymized data, are not immune to malicious third-party data-miners.

On consent: do users of wearable devices really know what they are getting into? There is very little evidence to support the claim that consent obtained on signing up can be considered 'informed.' A few months ago, researchers from Australia published an interesting study that surveyed users of wearable devices that monitor and track health data. The survey reported that users were "highly concerned" regarding issues of privacy and considered informed consent "very important" when asked about data sharing with third parties (for advertising or data analysis).

However, users were not aware of how privacy and informed consent were related. In essence, while they seemed to understand the abstract importance of privacy, they were unaware that clicking on the "I agree" dialog box entailed giving up control of their personal health information. This is not surprising, given that most user agreements for online applications or wearable devices are written in lengthy legalese.

Privacy of health data is another unexamined ethical question. Although wearable devices have traditionally been used for promotion of healthy lifestyles (through fitness tracking) and ease of use (such as the call and message features on Apple Watch), increasing interest is coming from corporations. Tractica, a market research firm that studies trends in wearable devices, reports that corporate consumers will account for 17 percent of the market share in wearable devices by 2020 (current market share stands at 1 percent). This is because wearable devices, loaded with several sensors, can track workers' physical activity, stress levels, sleep, and health information, providing unique insights to employers. Companies could theoretically use this information to motivate desired behavior, throwing a modern wrench into the concept of work/life balance.

Since paying for employees' healthcare tends to be one of the largest expenses for employers, using wearable devices is seen as something that can boost the bottom line, while enhancing productivity. Even if one considers it reasonable to devise policies that promote productivity, we have yet to determine ethical frameworks that can prevent discrimination against those who may not be able-bodied, and to determine how much control employers ought to exert over the lifestyle of employees.

To be clear, wearable smart devices can address unique challenges in healthcare and elsewhere, but the focus needs to shift toward the user's needs. Data collection practices should also reflect this shift.

Privacy needs to be incorporated by design and not as an afterthought. If we were to read privacy policies properly, it could take some 180 to 300 hours per year per person. This needs to change. Privacy and consent policies ought to be in clear, simple language. If using your device means ultimately sharing your data with doctors, food manufacturers, insurers, companies, dating apps, or whoever might want access to it, then you should know that loud and clear.

The recent implementation of the European Union's General Data Protection Regulation (GDPR) is also a move in the right direction. These protections include firm guidelines for consent, and an ability to withdraw consent; a right to access data, and to know what is being done with users' collected data; inherent privacy protections; notifications of security breaches; and strict penalties for companies that do not comply. For wearable devices in healthcare, collaborations with frontline providers would also reveal which areas can benefit from integrating wearable technology for maximum clinical benefit.

If current trends are any indication, wearable devices will play a central role in our future lives. In fact, the next generation of wearables will be implanted under our skin. This future is already visible when looking at the worrying rise in biohacking – or grinding, or cybernetic enhancement – where people attempt to enhance the physical capabilities of their bodies with do-it-yourself cybernetic devices (using hacker ethics to justify the practice).

Already, a company in Wisconsin called Three Square Market has become the first U.S. employer to provide rice-grain-sized radio-frequency identification (RFID) chips implanted under the skin between the thumb and forefinger of their employees. The company stated that these RFID chips (also available as wearable rings or bracelets) can be used to log in to computers, open doors, or use the copy machines.

Humans have always used technology to push the boundaries of what we can do. But in our pursuit of advancement, we must not erode fundamental rights to privacy and security, and not infringe on the rights of the vulnerable and marginalized. The rise of powerful wearables will also necessitate a global discussion on moral questions such as: what are the boundaries for artificially enhancing the human body, and is hacking our bodies ethically acceptable? We should think long and hard before we answer.

Thanks to safety precautions from the COVID-19 pandemic, a strain of influenza has been completely eliminated.

If you were one of the millions who masked up, washed your hands thoroughly and socially distanced, pat yourself on the back—you may have helped change the course of human history.

Scientists say that thanks to these safety precautions, which were introduced in early 2020 as a way to stop transmission of the novel COVID-19 virus, a strain of influenza has been completely eliminated. This marks the first time in human history that a virus has been wiped out through non-pharmaceutical interventions rather than pharmaceutical ones, such as vaccines.

The flu shot, explained

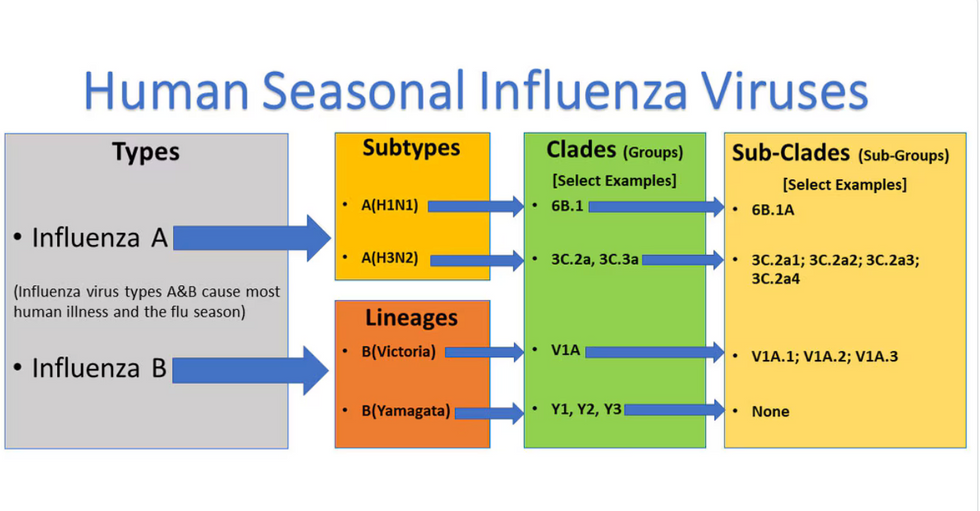

Influenza viruses type A and B are responsible for the majority of human illnesses and the flu season.

Centers for Disease Control

For more than a decade, flu shots have protected against two types of the influenza virus: type A and type B. While there are four different types of influenza virus in existence (A, B, C, and D), only types A, B, and C are capable of infecting humans, and only A and B cause seasonal epidemics. In other words, if you catch the flu during flu season, you’re most likely sick with flu type A or B.

Flu vaccines contain inactivated—or dead—influenza virus. These inactivated viruses can’t cause sickness in humans, but when administered as part of a vaccine, they teach a person’s immune system to recognize and kill those viruses when they’re encountered in the wild.

Each spring, a panel of experts gives a recommendation to the US Food and Drug Administration on which strains of each flu type to include in that year’s flu vaccine, depending on what surveillance data says is circulating and what they believe is likely to cause the most illness during the upcoming flu season. For the past decade, Americans have had access to vaccines that provide protection against two strains of influenza A and two lineages of influenza B, known as the Victoria lineage and the Yamagata lineage. But this year, the seasonal flu shot won’t include the Yamagata lineage, because it is no longer circulating among humans.

How Yamagata Disappeared

Flu surveillance data from the Global Initiative on Sharing All Influenza Data (GISAID) shows that the Yamagata lineage of flu type B has not been sequenced since April 2020.

Nature

Experts believe that the Yamagata lineage had already been in decline before the pandemic hit, likely because the strain was naturally less capable of infecting large numbers of people compared to the other strains. When the COVID-19 pandemic hit, the resulting safety precautions such as social distancing, isolating, hand-washing, and masking were enough to drive the virus into extinction completely.

Because the strain hasn’t been circulating since 2020, the FDA elected to remove the Yamagata strain from the seasonal flu vaccine. This will mark the first time since 2012 that the annual flu shot will be trivalent (three-component) rather than quadrivalent (four-component).

Should I still get the flu shot?

The flu shot will protect against fewer strains this year—but that doesn’t mean we should skip it. Influenza places a substantial health burden on the United States every year, responsible for hundreds of thousands of hospitalizations and tens of thousands of deaths. The flu shot has been shown to prevent millions of illnesses each year (more than six million during the 2022-2023 season). And while it’s still possible to catch the flu after getting the flu shot, studies show that people are far less likely to be hospitalized or die when they’re vaccinated.

Another unexpected benefit of dropping the Yamagata strain from the seasonal vaccine? It may make production of the flu vaccine faster and enable manufacturers to make more vaccines, helping countries that have a flu vaccine shortage and potentially saving millions more lives.

After his grandmother’s dementia diagnosis, one man invented a snack to keep her healthy and hydrated.

Founder Lewis Hornby and his grandmother Pat, sampling Jelly Drops—an edible gummy containing water and life-saving electrolytes.

On a visit to his grandmother’s nursing home in 2016, college student Lewis Hornby made a shocking discovery: Dehydration is a common (and dangerous) problem among seniors, especially those who are diagnosed with dementia.

Hornby’s grandmother, Pat, had found it increasingly difficult to keep up her water intake as she got older, a common issue with seniors. As we age, our body composition changes, and we naturally hold less water than younger adults or children, so it’s easier to become dehydrated quickly if those fluids aren’t replenished. What’s more, our thirst signals naturally diminish as we age, meaning our bodies are not as good as they once were at letting us know that we need to rehydrate. Together, these changes create a perfect storm that commonly leads to dehydration. In Pat’s case, her dehydration was so severe she nearly died.

When Lewis Hornby visited his grandmother at her nursing home afterward, he learned that dehydration especially affects people with dementia, as they often don’t feel thirst cues at all, or may not recognize how to use cups correctly. But while dementia patients often don’t remember to drink water, it seemed to Hornby that they had less trouble remembering to eat, particularly candy.

Hornby wanted to create a solution for elderly people who struggled to keep their fluid intake up. He spent the next eighteen months researching and designing his idea and securing funding for the project. In 2019, Hornby won a sizable grant from the Alzheimer’s Society, a UK-based care and research charity for people with dementia and their caregivers. Together, through the charity’s Accelerator Program, they created a bite-sized, sugar-free, edible jelly drop that looked and tasted like candy. The candy, called Jelly Drops, contained 95% water and electrolytes, important minerals that are often lost during dehydration. The final product launched in 2020 and was an immediate success. The drops were able to provide extra hydration to the elderly, as well as help keep dementia patients safe, since dehydration commonly leads to confusion, hospitalization, and sometimes even death.

Not only did Jelly Drops quickly become a favorite snack among dementia patients in the UK, but they also provided an additional boost of hydration to hospital workers during the pandemic. In NHS coronavirus hospital wards, patients infected with the virus were regularly given Jelly Drops to keep their fluid levels normal, and staff members snacked on them as well, since long shifts and the personal protective equipment (PPE) they were required to wear often left them feeling parched.

In April 2022, Jelly Drops launched in the United States. The company continues to donate 1% of its profits to help fund Alzheimer’s research.