Trading syphilis for malaria: How doctors treated one deadly disease by infecting patients with another

In the 1920s, doctors induced a high fever in patients - so-called "fever therapy" - as a way to help them recover from syphilis, though it involved ethical problems.

If you had lived one hundred years ago, syphilis – a bacterial infection spread by sexual contact – would likely have been one of your worst nightmares. Even though syphilis still exists, it can now be detected early and cured quickly with a course of antibiotics. Back then, however, before antibiotics and without an easy way to detect the disease, syphilis was very often a death sentence.

To understand how feared syphilis once was, it’s important to understand exactly what it does if it’s allowed to progress: the infections start off as small, painless sores or even a single sore near the vagina, penis, anus, or mouth. The sores disappear around three to six weeks after the initial infection – but untreated, syphilis moves into a secondary stage, often presenting as a mild rash in various areas of the body (such as the palms of a person’s hands) or through other minor symptoms. The disease progresses from there, often quietly and without noticeable symptoms, sometimes for decades before it reaches its final stages, where it can cause blindness, organ damage, and even dementia. Research indicates, in fact, that as much as 10 percent of psychiatric admissions in the early 20th century were due to dementia caused by syphilis, also known as neurosyphilis.

Like any bacterial disease, syphilis can affect kids, too. Though it’s spread primarily through sexual contact, it can also be transmitted from mother to child during birth, causing lifelong disability.

The poet-physician Adalbert Bettman, who wrote fictionalized poems based on his experiences as a doctor in the 1930s, described the effect syphilis could have on an infant in his poem Daniel Healy:

I always got away clean

when I went out

With the boys.

The night before

I was married

I went out,—But was not so fortunate;

And I infected

My bride.

When little Daniel

Was born

His eyes discharged;

And I dared not tell

That because

I had seen too much

Little Daniel sees not at all

Given the horrors of untreated syphilis, it’s maybe not surprising that people would go to extremes to try and treat it. One of the earliest remedies for syphilis, dating back to 15th century Naples, was using mercury – either rubbing it on the skin where blisters appeared, or breathing it in as a vapor. (Not surprisingly, many people who underwent this type of “treatment” died of mercury poisoning.)

Other primitive treatments included using tinctures made of a flowering plant called guaiacum, as well as inducing “sweat baths” to eliminate the syphilitic toxins. In 1910, an arsenic-based drug called Salvarsan hit the market and was hailed as a “magic bullet” for its ability to target and destroy the syphilis-causing bacteria without harming the patient. However, while Salvarsan was effective in treating early-stage syphilis, it was largely ineffective by the time the infection progressed beyond the second stage. Tens of thousands of people each year continued to die of syphilis or were otherwise shipped off to psychiatric wards due to neurosyphilis.

It was in one of these psychiatric units in the early 20th century that Dr. Julius Wagner-Jauregg got the idea for a potential cure.

Wagner-Jauregg was an Austrian-born physician trained in “experimental pathology” at the University of Vienna. He started his medical career conducting lab experiments on animals and then moved on to work at different psychiatric clinics in Vienna, despite having no training in psychiatry or neurology.

Wagner-Jauregg’s work was controversial to say the least. At the time, medicine – particularly psychiatric medicine – did not have anywhere near the same rigorous ethical standards that doctors, researchers, and other scientists are bound to today. Wagner-Jauregg would devise wild theories about the cause of his patients’ psychiatric ailments and then perform experimental procedures in an attempt to cure them. (As just one example, Wagner-Jauregg would sterilize his adolescent male patients, thinking “excessive masturbation” was the cause of their schizophrenia.)

But sometimes these wild theories paid off. In 1883, during his residency, Wagner-Jauregg noted that a female patient with mental illness who had contracted a skin infection and suffered a high fever experienced a sudden (and seemingly miraculous) remission from her psychosis symptoms after the fever had cleared. Wagner-Jauregg theorized that inducing a high fever in his patients with neurosyphilis could help them recover as well.

Eventually, Wagner-Jauregg was able to put his theory to the test. Around 1890, Wagner-Jauregg got his hands on something called tuberculin, a therapeutic treatment created by the German microbiologist Robert Koch in order to cure tuberculosis. Tuberculin would later turn out to be completely ineffective for treating tuberculosis, often creating severe immune responses in patients – but for a short time, Wagner-Jauregg had some success in using tuberculin to help his dementia patients. Giving his patients tuberculin resulted in a high fever – and after completing the treatment, Wagner-Jauregg reported that his patients’ dementia was completely halted. The success was short-lived, however: Wagner-Jauregg eventually had to discontinue tuberculin as a treatment, as it began to be considered too toxic.

By 1917, Wagner-Jauregg’s theory about syphilis and fevers was becoming more credible – and one day a new opportunity presented itself when a wounded soldier, stricken with malaria and a related fever, was accidentally admitted to his psychiatric unit.

What Wagner-Jauregg did next was ethically deplorable by any standard: Before he allowed the soldier any quinine (the standard treatment for malaria at the time), Wagner-Jauregg took a small sample of the soldier’s blood and inoculated three syphilis patients with the sample, rubbing the blood on their open syphilitic blisters.

It’s unclear how well the malaria treatment worked for those three specific patients – but Wagner-Jauregg’s records show that in the span of one year, he inoculated a total of nine patients with malaria, for the sole purpose of inducing fevers, and six of them made a full recovery. Wagner-Jauregg’s treatment was so successful, in fact, that one of his inoculated patients, an actor who was unable to work due to his dementia, was eventually able to find work again and return to the stage. Two additional patients – a military officer and a clerk – recovered from their once-terminal illnesses and returned to their former careers as well.

When his findings were published in 1918, Wagner-Jauregg’s so-called “fever therapy” swept the globe. The treatment was hailed as a breakthrough – but it still had risks. Malaria itself had a mortality rate of about 15 percent at the time. Many people considered that to be a gamble worth taking, compared to dying a painful, protracted death from syphilis.

Malaria could also be effectively treated much of the time with quinine, whereas other fever-causing illnesses were not so easily treated. Triggering a fever by way of malaria specifically, therefore, became the standard of care.

Tens of thousands of people with syphilitic dementia would go on to be treated with fever therapy until the early 1940s, when a combination of Salvarsan and penicillin caused syphilis infections to decline. Eventually, neurosyphilis became rare, and then nearly unheard of.

Despite his contributions to medicine, it’s important to note that Wagner-Jauregg was most definitely not a person to idolize. In fact, he was an outspoken anti-Semite and proponent of eugenics, arguing that Jews were more prone to mental illness and that people who were mentally ill should be forcibly sterilized. (Wagner-Jauregg later became a Nazi sympathizer during Hitler’s rise to power even though, bizarrely, his first wife was Jewish.) Another problem was that his fever therapy involved experimental treatments on many who, due to their cognitive issues, could not give informed consent.

Lack of consent was also a fundamental problem with the syphilis study at Tuskegee, appalling research that began just 14 years after Wagner-Jauregg published his “fever therapy” findings.

Still, despite his outrageous views, Wagner-Jauregg was awarded the Nobel Prize in Physiology or Medicine in 1927 – and despite some egregious human rights abuses, the miraculous “fever therapy” was partly responsible for taming one of the deadliest plagues in human history.

COVID Vaccines Put Anti-Science Activists to Shame

On a rain-soaked day, thousands marched on Washington, D.C. to fight for science funding and scientific analysis in politics.

It turns out that, despite the destruction and heartbreak caused by the COVID pandemic, there is a silver lining: Scientists from academia, government, and industry worked together and, using the tools of biotechnology, created multiple vaccines that surely will put an end to the worst of the pandemic sometime in 2021. In short, they proved that science works, particularly that which comes from industry. Though politicians and the public love to hate Big Ag and Big Pharma, everybody comes begging for help when the going gets tough.

The change in public attitude is tangible. A headline in the Financial Times declared, "Covid vaccines offer Big Pharma a chance of rehabilitation." In its analysis, the FT says that the pharmaceutical industry is widely reviled because of the high prices it charges for its drugs, among other things, but the speed with which the industry developed COVID vaccines may allow for its reputation to be refurbished.

The Media's Role in Promoting Anti-Biotech Activism

Of course, the media is partly to blame for the pharmaceutical industry's dismal reputation in the first place because of journalists' penchant for oversimplifying complicated stories and pinning blame on an easy scapegoat. While the pharmaceutical industry is far from angelic and places a hefty price tag on its products in the U.S., what often goes unmentioned is the fact that high drug prices are the result of multiple factors, including lack of competition (even among generic drugs), foreign price controls that allow citizens of other countries to "freeload" off of American consumers, and a deliberately opaque drug supply chain (that involves not only profit-maximizing pharmaceutical manufacturers but "middlemen" like distributors). But why delve into such nuance when it's easier to point to villains like Martin Shkreli?

Big Ag has been subjected to identical mistreatment by the media, with outlets such as the New York Times among the biggest offenders. One article it published compared pesticides to "Nazi-made sarin gas," and another spread misinformation about a high-profile biotech scientist. The website Undark, whose stated mission is "true journalistic coverage of the sciences," once published an opinion piece written by a person who works for an anti-GMO organization and another criticizing Monsanto for its reasonable efforts to defend itself from disinformation. These aren't cherry-picked examples. Overall, the media clearly has taken sides: Science is great, unless it's science from industry.

Now, the very same media – which has portrayed the pharmaceutical and biotech industries in the worst possible light, often for political or ideological reasons – is wondering why so many Americans are reluctant to get a COVID vaccine. Perhaps their reportage has something to do with it.

Tech Strikes Back

For years, the agricultural, pharmaceutical, and biotech industries fought back, but to no avail. GMOs are feared, pharma is hated, and biotech is misunderstood. Regulatory red tape abounds. But that may all be about to change, not because of a clever PR campaign, but thanks to the successful coronavirus vaccines produced by the pharma/biotech industry.

All of the major vaccines were created using biotechnology, broadly defined as the use of living systems and organisms to develop products intended to improve human life or the planet. The Pfizer/BioNTech and Moderna vaccines rely on mRNA (messenger RNA), which is essentially a molecular "photocopy" of the more familiar genetic material DNA. The mRNA molecules were tweaked using biotech and then shown to be 95% effective at preventing COVID in human volunteers. The AstraZeneca/Oxford vaccine is based on an older technology that genetically modifies a harmless virus to resemble an immunological target, in this case, SARS-CoV-2. Their vaccine is 62% to 90% effective.
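To make a figure like "95% effective" concrete: in a clinical trial, efficacy is conventionally calculated as one minus the ratio of the infection rate among vaccinated volunteers to the rate among those who received a placebo. The short Python sketch below illustrates that arithmetic. The trial numbers are made up for illustration and are not the actual trial data, and the function name `vaccine_efficacy` is a hypothetical helper, not something from the vaccine makers.

```python
def vaccine_efficacy(cases_vaccinated, n_vaccinated, cases_placebo, n_placebo):
    """Return efficacy as 1 minus the ratio of attack rates (the relative risk)."""
    attack_rate_vaccinated = cases_vaccinated / n_vaccinated
    attack_rate_placebo = cases_placebo / n_placebo
    return 1.0 - attack_rate_vaccinated / attack_rate_placebo


# Hypothetical trial (illustrative numbers only): 20,000 volunteers per arm,
# 10 COVID cases among the vaccinated and 200 among the placebo group.
efficacy = vaccine_efficacy(10, 20_000, 200, 20_000)
print(f"Efficacy: {efficacy:.0%}")
```

In this made-up example, vaccinated volunteers were infected at one-twentieth the rate of the placebo group, which is what a 95% efficacy figure means.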

Even better, the pharma/biotech industry showed that it can work hand-in-hand with the government, for instance the FDA, to produce vaccines in record-breaking time. Operation Warp Speed provided some financing to facilitate this process. History will look back at this endeavor and likely conclude that the unprecedented level of cooperation to develop a vaccine in less than 12 months was one of the greatest triumphs in public health history. (The bungled slow rollout is another story.)

The pharma/biotech industry has thus gained tremendous momentum. For the first time it seems, those who are opposed to scientific progress and biotechnology are on the defensive. If the scientific community can use the powerful techniques of biotechnology to cure a previously unknown infectious disease in less than a year, then why shouldn't it be able to cure genetic diseases in humans? Or create genetically modified crops that are resistant to insects and drought? Or use genetically modified mosquitoes to help fight against killer diseases like malaria? The arguments against biotechnology have been made exponentially weaker by the success of the coronavirus vaccine.

Perhaps the most important lesson that society will learn is that the scientific method works. We observed (by collecting samples of an unknown virus and sequencing its genome), hypothesized (by predicting which parts of the virus would trigger an immune response), experimented (by recruiting tens of thousands of volunteers into clinical trials), and concluded (that the vaccines worked). It was a thing of pure beauty.

Thanks to all the players involved – from Big Government to Big Pharma – we are beginning the process of being rescued from a modern-day plague. Let us hope that this scientific success also deals a fatal blow to the forces of ignorance that have held back technological progress for decades.

[Editor's Note: LeapsMag is an editorially independent publication that receives program support from Leaps by Bayer. LeapsMag's founding in 2017 predates Bayer's acquisition of Monsanto in 2018. All content published on LeapsMag is strictly free of influence, censorship, and oversight from its corporate sponsor.]

Eight Big Medical and Science Trends to Watch in 2021

Promising developments underway include advancements in gene and cell therapy, better testing for COVID, and a renewed focus on climate change.

The world as we know it has forever changed. With a greater focus on science and technology than before, experts in the biotech and life sciences spaces are grappling with what comes next as SARS-CoV-2, the coronavirus that causes the COVID-19 illness, has spread and mutated across the world.

Even with vaccines being distributed, so much still remains unknown.

Jared Auclair, Technical Supervisor for Northeastern University's Life Science Testing Center in Burlington, Massachusetts, guides a COVID testing lab that cranks out thousands of coronavirus test results per day. His lab is also focused on monitoring the quality of new cell and gene therapy products coming to the market.

Here are trends Auclair and other experts are watching in 2021.

Better Diagnostic Testing for COVID

Expect improvements in COVID diagnostic testing and the ability to test at home.

There are currently three types of coronavirus tests. The molecular test, also known as the RT-PCR test, detects the virus's genetic material and is highly accurate, but it can take days to receive results. There are also antibody tests, done through a blood draw, designed to test whether you've had COVID in the past. Finally, there's the quick antigen test, which isn't as accurate as the PCR test but can identify whether people are likely to infect others.

Last month, Lucira Health secured the U.S. FDA Emergency Use Authorization for the first prescription molecular diagnostic test for COVID-19 that can be performed at home. On December 15th, the Ellume Covid-19 Home Test received authorization as the first over-the-counter COVID-19 diagnostic antigen test that can be done at home without a prescription. The test uses a nasal swab that is connected to a smartphone app and returns results in 15-20 minutes. Similarly, the BinaxNOW COVID-19 Ag Card Home Test received authorization on Dec. 16 for its 15-minute antigen test that can be used within the first seven days of onset of COVID-19 symptoms.

Home testing could affect the pandemic pretty drastically, Auclair says, but there are other considerations: the type and timing of the test administered, the cost of the test (and whether it is financially feasible for the general public), and the ability of a home test taker to administer it accurately.

Ideally, everyone would get tested frequently, but for that to happen, the cost of a single home test, which is expected to be around $30 or more, would need to fall much lower, closer to the $5 range.

Auclair expects "innovations in the diagnostic space to explode" with the need for more accurate, inexpensive, quicker COVID tests. He foresees innovations focusing first on COVID point-of-care testing, followed by improvements in diagnostic testing for other types of viruses and diseases.

"We still need more testing to get the pandemic under control, likely over the next 12 months," Auclair says. "The vaccine roll-out will not eliminate the need for testing until late 2021 or early 2022."

Rise of mRNA-based Vaccines and Therapies

A year ago, vaccines weren't being talked about like they are today.

"But clearly vaccines are the talk of the town," Auclair says. "The reason we got a vaccine so fast was there was so much money thrown at it."

A vaccine can take more than 10 years to fully develop, according to the World Economic Forum. Prior to the new COVID vaccines, which, remarkably, were developed and tested in under a year, the fastest vaccine ever made was for mumps, and it took four years.

"Normally you have to produce a protein. This is typically done in eggs. It takes forever," says Catherine Dulac, a neuroscientist and developmental biologist at Harvard University who won the 2021 Breakthrough Prize in Life Sciences. "But an mRNA vaccine just enabled [us] to skip all sorts of steps [compared with burdensome conventional manufacturing] and go directly to a product that can be injected into people."

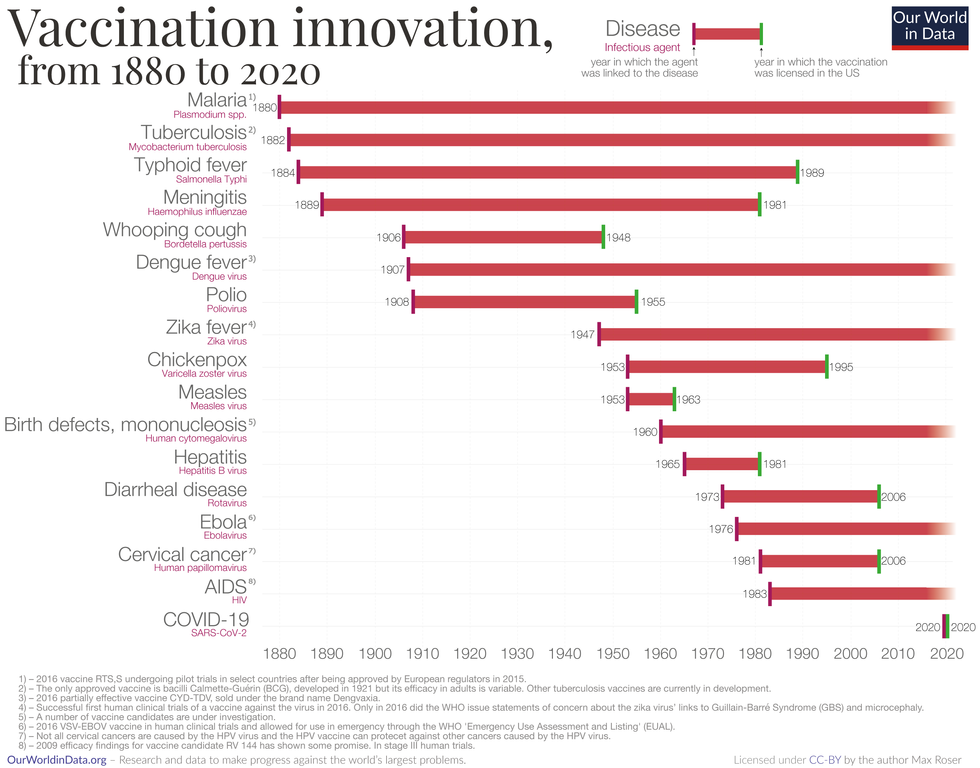

Non-traditional medicines based on genetic research are in their infancy. With mRNA-based vaccines hitting the market for the first time, look for more vaccines to be developed for whatever viruses we don't currently have vaccines for, like dengue virus and Ebola, Auclair says.

"There's a whole bunch of things that could be explored now that haven't been thought about in the past," Auclair says. "It could really be a game changer."

Vaccine Innovation over the last 140 years.

Max Roser/Our World in Data (Creative Commons license)

Advancements in Cell and Gene Therapies

CRISPR, a type of gene editing, is going to be huge in 2021, especially after the Nobel Prize in Chemistry was awarded to Emmanuelle Charpentier and Jennifer Doudna in October for pioneering the technology.

Right now, CRISPR isn't completely precise and can cause deletions or rearrangements of DNA.

"It's definitely not there yet, but over the next year it's going to get a lot closer and you're going to have a lot of momentum in this space," Auclair says. "CRISPR is one of the technologies I'm most excited about and 2021 is the year for it."

Gene therapies are typically used on rare genetic diseases. They work by replacing faulty genes with corrected DNA.

"Cell and gene therapies are really where the field is going," Auclair says. "There is so much opportunity.... For the first time in our life, in our existence as a species, we may actually be able to cure disease by using [techniques] like gene editing, where you cut in and out of pieces of DNA that caused a disease and put in healthy DNA."

For example, spinal muscular atrophy is a rare genetic disorder that leads to muscle weakness, paralysis and death in children by age two. As of last year, afflicted children can take a gene therapy drug called Zolgensma that replaces the missing or nonworking SMN1 gene with a new copy.

Another recent breakthrough uses gene editing for sickle cell disease. Victoria Gray, a mom from Mississippi whose treatment was followed exclusively by NPR, was the first person in the United States to be successfully treated for the genetic disorder with the help of CRISPR. She has continued to improve since her landmark treatment on July 2, 2019, and her once-debilitating pain has greatly eased.

"This is really a life-changer for me," she told NPR. "It's magnificent."

Look out also for improvements in cell therapies, but on a much smaller scale.

Cell therapies remove immune cells from a person or use cells from a donor. The cells are modified or cultured in a lab, multiplied by the millions and then injected back into patients. These include stem cell therapies as well as CAR-T cell therapies, which are typically therapies of last resort and used in cancers like leukemia, Auclair says.

"You are going to see bigger leaps in gene therapies," Auclair says. "It's being heavily researched and we understand more about how to do gene therapies. Cell therapies will lie behind it a bit because they are so much more difficult to work with right now."

More Monoclonal Antibody Therapies

Look for more customized drugs to personalize medicine even more in the biotechnology space.

In 2019, the FDA anticipated receiving more than 200 Investigational New Drug (IND) applications in 2020. But with COVID, the number of INDs skyrocketed to 6,954 applications for the 2020 fiscal year, which ended September 30, 2020, according to the FDA's online tracker. Look for antibody therapies to play a bigger role.

Monoclonal antibodies are lab-grown proteins that mimic or enhance the immune system's response to fight off pathogens, like viruses, and they've been used to treat cancer. Now they are being used to treat patients with COVID-19.

President Donald Trump received a monoclonal antibody cocktail, called REGEN-COV2, which later received FDA emergency use authorization.

A newer type of monoclonal antibody therapy is Antibody-Drug Conjugates, also called ADCs. It's something we're going to be hearing a lot about in 2021, Auclair says.

"Antibody-Drug Conjugates is a monoclonal antibody with a chemical, we consider it a chemical warhead on it," Auclair says. "The monoclonal antibody binds to a specific antigen in your body or protein and delivers a chemical to that location and kills the infected cell."

Moving Beyond Male-Centric Lab Testing

Scientific testing in biology has, until recently, focused on males. Dulac, a Howard Hughes Medical Institute investigator and professor of molecular and cellular biology at Harvard University, challenged that approach in her search for the brain circuitry behind sex-specific behaviors.

"For the longest time, until now, all the model systems in biology, are male," Dulac says. "The idea is if you do testing on males, you don't need to do testing on females."

Clinical models, like fundamental research, rely on male animals. Because biological research is always done on male models, Dulac says, the outcomes and understanding in biology are geared towards male biology.

"All the drugs currently on the market and diagnoses of diseases are biased towards the understanding of male biology," Dulac says. "The diagnostics of diseases is way weaker in women than men."

That means the treatment isn't necessarily as good for women as it is for men, she says, including what is known and understood about pain medication.

"So pain medication doesn't work well in women," Dulac says. "It works way better in men. It's true for almost all diseases that I know. Why? Because you have a science that is dominated by males."

Although some in the scientific community contend that females are not interesting or are too complicated because of their hormonal variations, Dulac says that's simply not true.

"There's absolutely no reason to decide 50% of life forms are interesting and the other 50% are not interesting. What about looking at both?" says Dulac, who was awarded the $3 million Breakthrough Prize in Life Sciences in September for connecting specific neural mechanisms to male and female parenting behaviors.

Disease Research on Single Cells

To better understand how diseases manifest in the body's cells and tissues, many researchers are looking at single-cell biology. Cells are the most fundamental building blocks of life. Much still needs to be learned.

"A remarkable development this year is the massive use of analysis of gene expression and chromosomal regulation at the single-cell level," Dulac says.

Much is focused on the Human Cell Atlas (HCA), a global initiative to map all cells in healthy humans and to better identify which genes associated with diseases are active in a person's body. Most estimates put the number of cells around 30 trillion.

Dulac points to work being conducted by the BRAIN Initiative Cell Census Network (BICCN), a National Institutes of Health effort to come up with an atlas of cell types in mouse, human and non-human primate brains, and the Chan Zuckerberg Initiative's funding of single-cell biology projects, including those focused on single-cell analysis of inflammation.

"Our body and our brain are made of a large number of cell types," Dulac says. "The ability to explore and identify differences in gene expression and regulation in massively multiplex ways by analyzing millions of cells is extraordinarily important."

Converting Plastics into Food

Yep, you heard it right, plastics may eventually be turned into food. The Defense Advanced Research Projects Agency, better known as DARPA, is funding a project—formally titled "Production of Macronutrients from Thermally Oxo-Degraded Wastes"—and asking researchers how to do this.

"When I first heard about this challenge, I thought it was absolutely absurd," says Dr. Robert Brown, director of the Bioeconomy Institute at Iowa State University and the project's principal investigator, who is working with other research partners at the University of Delaware, Sandia National Laboratories, and the American Institute of Chemical Engineers (AIChE)/RAPID Institute.

But then Brown realized plastics will slowly start oxidizing (taking in oxygen), and microorganisms can then consume them. The oxidation process at room temperature is extremely slow, however, which makes plastics essentially not biodegradable, Brown says.

That changes when heat is applied at brick pizza oven-like temperatures of around 900 degrees Fahrenheit. The high temperatures get compounds to oxidize rapidly. Plastics are synthetic polymers made from petroleum: large molecules formed by linking many smaller molecules together in a chain. When heated, these polymers melt and crack into smaller molecules, which vaporize in a process called devolatilization. Air is then used to oxidize them and produce oxygenated compounds (fatty acids and alcohols) that microorganisms will eat, growing into single-cell proteins that can be used as an ingredient or substitute in protein-rich foods.

"The caveat is the microorganisms must be food-safe, something that we can consume," Brown says. "Like supplemental or nutritional yeast, like we use to brew beer and to make bread or is used in Australia to make Vegemite."

What do the microorganisms look like? For any home beer brewers, it's the "gunky looking stuff you'd find at the bottom after the fermentation process," Brown says. "That's cellular biomass. Like corn grown in the field, yeast or other microorganisms like bacteria can be harvested as macro-nutrients."

Brown says DARPA's ReSource program has challenged all the project researchers to find ways for microorganisms to consume any plastics found in the waste stream coming out of a military expeditionary force, including all the packaging of food and supplies. Then the researchers aim to remake the plastic waste into products soldiers can use, including food. The project is in the first of three phases.

"We are talking about polyethylene, polypropylene, like PET plastics used in water bottles and converting that into macronutrients that are food," says Brown.

Renewed Focus on Climate Change

The Union of Concerned Scientists says carbon dioxide levels are higher today than at any point in at least 800,000 years.

Look for technology to help locate large-scale emitters of carbon dioxide, including sensors on satellites and artificial intelligence to optimize energy usage, especially in data centers.

Other technologies focus on alleviating the root cause of climate change: emissions of heat-trapping gasses that mainly come from burning fossil fuels.

Direct air carbon capture, an emerging effort to capture carbon dioxide directly from ambient air, could play a role.

The technology is in the early stages of development and still highly uncertain, says Peter Frumhoff, director of science and policy at the Union of Concerned Scientists. "There are a lot of questions about how to do that at sufficiently low costs...and how to scale it up so you can get carbon dioxide stored in the right way," he says. It can also be very energy intensive.

One of the oldest solutions is planting new forests, or restoring old ones, which can help convert carbon dioxide into oxygen through photosynthesis. Hence the Trillion Trees Initiative launched by the World Economic Forum. But planting trees isn't enough on its own, Frumhoff says. That's especially true given that 2020 was the year human-made materials came to outweigh all life on Earth.

More research is also going into artificial photosynthesis for solar fuels. The U.S. Department of Energy awarded $100 million in 2020 to two entities that are conducting research. Look also for improvements in battery storage capacity to help electric vehicles, as well as back-up power sources for solar and wind power, Frumhoff says.

Another method to combat climate change is solar geoengineering, also called solar radiation management, which reflects sunlight back to space. The idea stems from a volcanic eruption in 1991 that released a tremendous amount of sulfate aerosol particles into the stratosphere, reflecting the sunlight away from Earth. The planet cooled by a half degree for nearly a year, Frumhoff says. However, he acknowledges, "there's a lot of things we don't know about the potential impacts and risks" involved in this controversial approach.

Whatever the approach, scientific solutions to climate change are attracting renewed attention. Under President Trump, the White House Office of Science and Technology Policy didn't have an acting director for almost two years. Expect that to change when President-elect Joe Biden takes office.

"Climate science is so important for all of humankind," Dulac says. "It is critical because the quality of life of humans on the planet depends on it."