This Special Music Helped Preemie Babies’ Brains Develop

Listening to music helped preterm babies' brains develop, according to the results of a new Swiss study.

Move over, Baby Einstein: New research from Switzerland shows that listening to soothing music in the first weeks of life helps encourage brain development in preterm babies.

For the study, the scientists recruited a harpist and new-age musician to compose three pieces of music.

The Lowdown

Children who are born prematurely, between 24 and 32 weeks of pregnancy, are far more likely to survive today than they used to be—but because their brains are less developed at birth, they're still at high risk for learning difficulties and emotional disorders later in life.

Researchers in Geneva thought that the unfamiliar and stressful noises in neonatal intensive care units might be partially responsible. After all, a hospital ward filled with alarms, other infants crying, and adults bustling in and out is far more disruptive than the quiet in-utero environment the babies are used to. They decided to test whether listening to pleasant music could have a positive, counterbalancing effect on the babies' brain development.

Led by Dr. Petra Hüppi at the University of Geneva, the scientists recruited Swiss harpist and new-age musician Andreas Vollenweider (who has collaborated with the likes of Carly Simon, Bryan Adams, and Bobby McFerrin). Vollenweider developed three pieces of music specifically for the NICU babies, which were played for them five times per week. Each track served a specific purpose: to help the baby wake up, to stimulate a baby who was already awake, or to help the baby fall back asleep.

When they reached an age equivalent to that of a full-term baby, the infants underwent an MRI. The researchers focused on connections within the salience network, which determines how relevant information is and then processes and acts on it; these are crucial components of healthy social behavior and emotional regulation. The neural networks of preemies who had listened to Vollenweider's pieces were stronger than those of preterm babies who had not received the intervention, and were much more similar to those of full-term babies.

Next Up

The first infants in the study are now 6 years old—the age when cognitive problems usually become diagnosable. Researchers plan to follow up with more cognitive and socio-emotional assessments, to determine whether the effects of the music intervention have lasted.

The scientists note in their paper that while they saw strong results in the connections of the babies' primary auditory cortex and thalamus (suggesting that the infants had developed an ability to recognize and respond to familiar music), there was less reaction in the regions responsible for socioemotional processing. They hypothesize that more time spent listening to music during a NICU stay could improve those connections as well, but another study would be needed to know for sure.

Open Questions

Because this initial study had a fairly small sample size (only 20 preterm infants underwent the musical intervention, with another 19 studied as a control group), and they all listened to the same music for the same amount of time, it's still undetermined whether variations in the type and frequency of music would make a difference. Are Vollenweider's harps, bells, and punji the runaway favorite, or would other styles of music help, too? (Would "Baby Shark" help … or hurt?) There's also a chance that other types of repetitive sounds, like parents speaking or singing to their children, might have similar effects.

But the biggest question is still the one that the scientists plan to tackle next: whether the effects of the intervention last as the children grow up. If they do, that's great news for any family with a preemie, and for the baby-sized headphone industry.

Can Spare Parts from Pigs Solve Our Organ Shortage?

A decellularized small porcine liver.

Jennifer Cisneros was 18 years old, commuting to college from her family's home outside Annapolis, Maryland, when she came down with what she thought was the flu. Over the following weeks, however, her fatigue and nausea worsened, and her weight began to plummet. Alarmed, her mother took her to see a pediatrician. "When I came back with the urine cup, it was orange," Cisneros recalls. "He was like, 'Oh, my God. I've got to send you for blood work.'"

Further tests showed that her kidneys were failing, and at Johns Hopkins Hospital, a biopsy revealed the cause: Goodpasture syndrome (GPS), a rare autoimmune disease that attacks the kidneys or lungs. Cisneros was put on dialysis to filter out the waste products that her body could no longer process, and given chemotherapy and steroids to suppress her haywire immune system.

The treatment drove her GPS into remission, but her kidneys were beyond saving. At 19, Cisneros received a transplant, with her mother as donor. Soon, she'd recovered enough to return to school; she did some traveling, and even took up skydiving and parasailing. Then, after less than two years, rejection set in, and the kidney had to be removed.

She went back on dialysis until she was 26, when a stranger learned of her plight and volunteered to donate. That kidney lasted four years, but gave out after a viral infection. Since 2015, Cisneros—now 32, and working as an office administrator between thrice-weekly blood-filtering sessions—has been waiting for a replacement.

She's got plenty of company. About 116,000 people in the United States currently need organ transplants, but fewer than 35,000 organs become available every year. On average, 20 people on the waiting list die each day. And despite repeated campaigns to boost organ donation, the gap shows no sign of narrowing.

For decades, doctors and scientists have envisioned a radical solution to the shortage: harvesting other species for spare parts. Xenotransplantation, as the practice is known, could provide an unlimited supply of lifesaving organs for patients like Cisneros. Those organs, moreover, could be altered by genetic engineering or other methods to reduce the danger of rejection—and thus to eliminate the need for immunosuppressive drugs, whose potential side effects include infections, diabetes, and cancer. "Eventually, we'll be better off than with a human organ," says David Cooper, MD, PhD, co-director of the xenotransplant program at the University of Alabama School of Medicine. "This is going to revolutionize medicine, in ways we probably can't yet appreciate."

Recently, progress toward that revolution has accelerated sharply. The cascade of advances began in April 2016, when researchers at the National Heart, Lung, and Blood Institute (NHLBI) reported keeping pig hearts beating in the abdomens of five baboons for a record-breaking mean of 433 days, with one lasting more than two-and-a-half years. Then a team at Emory University announced that a pig kidney sustained a rhesus monkey for 435 days before being rejected, nearly doubling the previous record. At the University of Munich, in Germany, researchers doubled the record for a life-sustaining pig heart transplant in a baboon (replacing the animal's own heart) to 90 days. Investigators at the Salk Institute and the University of California, Davis, declared that they'd grown tissue in pig embryos using human stem cells—a first step toward cultivating personalized replacement organs. The list goes on.

Such breakthroughs, along with a surge of cash from biotech investors, have propelled a wave of bullish media coverage. Yet this isn't the first time that xenotransplantation has been touted as the next big thing. Twenty years ago, the field seemed poised to overcome its final hurdles, only to encounter a setback from which it is just now recovering.

Which raises a question: Is the current excitement justified? Or is the hype again outrunning the science?

A History of Setbacks

The idea behind xenotransplantation dates back at least as far as the 17th century, when French physician Jean-Baptiste Denys tapped the veins of sheep and cows to perform the first documented human blood transfusions. (The practice was banned after two of the four patients died, probably from an immune reaction.) In the 19th century, surgeons began transplanting corneas from pigs and other animals into humans, and using skin xenografts to aid in wound healing; despite claims of miraculous cures, medical historians believe those efforts were mostly futile. In the 1920s and '30s, thousands of men sought renewed vigor through testicular implants from monkeys or goats, but the fad collapsed after studies showed the effects to be imaginary.

After the first successful human organ transplant in 1954 (a kidney passed between identical twin brothers), the limited supply of donor organs brought a resurgence of interest in animal sources. Attention focused on nonhuman primates, our species' closest evolutionary relatives. At Tulane University, surgeon Keith Reemtsma performed the first chimpanzee-to-human kidney transplants in 1963 and '64. Although one of the 13 patients lived for nine months, the rest died within a few weeks due to organ rejection or infections. Other surgeons attempted liver and heart xenotransplants, with similar results. Even the advent of the immunosuppressant cyclosporine in 1983 did little to improve survival rates.

In the 1980s, Cooper, a pioneering heart transplant surgeon who'd embraced the dream of xenotransplantation, began arguing that apes and monkeys might not be the best donor animals after all. "First of all, there's not enough of them," he explains. "They breed in ones and twos, and take years to grow to full size. Even then, their hearts aren't big enough for a 70-kg patient." Pigs, he suggested, would be a more practical alternative. But when he tried transplanting pig organs into nonhuman primates (as surrogates for human recipients), they were rejected within minutes.

In 1992, Cooper's team identified a sugar on the surface of porcine cells, called alpha-1,3-galactose (a-gal), as the main target for the immune system's attack. By then, the first genetically modified pigs had appeared, and biotech companies—led by the Swiss-based pharma giant Novartis—began pouring millions of dollars into developing one whose organs could elude or resist the human body's defenses.

Disaster struck five years later, when scientists reported that a virus whose genetic code was written into pig DNA could infect human cells in lab experiments. Although there was no evidence that the virus, known as PERV (for porcine endogenous retrovirus), could cause disease in people, the discovery stirred fears that xenotransplants might unleash a deadly epidemic. Facing scrutiny from government regulators and protests from anti-GMO and animal-rights activists, Novartis "pulled out completely," Cooper recalls. "They slaughtered all their pigs and closed down their research facility." Competitors soon followed suit.

A New Chapter – With New Questions

Yet xenotransplantation's visionaries labored on, aided by advances in genetic engineering and immunosuppression, as well as in the scientific understanding of rejection. In 2003, a team led by Cooper's longtime colleague David Sachs, at Harvard Medical School, developed a pig lacking the gene for a-gal; over the next few years, other scientists knocked out genes expressing two more problematic sugars. In 2013, Muhammad Mohiuddin, then chief of the transplantation section at the NHLBI, further modified a group of triple-knockout pigs, adding genes that code for two human proteins: one that shields cells from attack by an immune mechanism known as the complement system, and another that prevents harmful coagulation. (It was those pigs whose hearts recently broke survival records when transplanted into baboon bellies. Mohiuddin has since become director of xenoheart transplantation at the University of Maryland's new Center for Cardiac Xenotransplantation Research.) And in August 2017, researchers at Harvard Medical School, led by George Church and Luhan Yang, announced that they'd used CRISPR-Cas9, an ultra-efficient new gene-editing technique, to disable 62 PERV genes in fetal pig cells, from which they then created cloned embryos. Of the 37 piglets born from this experiment, none showed any trace of the virus.

Still, the riddles surrounding animal-to-human transplants are far from fully solved. One open question is what further genetic manipulations will be necessary to eliminate all rejection. "No one is so naïve as to think, 'Oh, we know all the genes; let's put them in and we are done,'" biologist Sean Stevens, another leading researcher, told The New York Times. "It's an iterative process, and no one that I know can say whether we will do two, or five, or 100 iterations." Adding traits can be dangerous as well; pigs engineered to express multiple anticoagulation proteins, for example, often die of bleeding disorders. "We're still finding out how many you can do, and what levels are acceptable," says Cooper.

Another question is whether PERV really needs to be disabled. Cooper and some of his colleagues note that pig tissue has long been used for various purposes, such as artificial heart valves and wound-repair products, without incident; requiring the virus to be eliminated, they argue, will unnecessarily slow progress toward creating viable xenotransplant organs and the animals that can provide them. Others disagree. "You cannot do anything with pig organs if you do not remove them," insists bioethicist Jeantine Lunshof, who works with Church and Yang at Harvard. "The risk is simply too big."

Meanwhile, over the past decade, other approaches to xenotransplantation have emerged. One is interspecies blastocyst complementation, which could produce organs genetically identical to the recipient's tissues. In this method, genes that produce a particular organ are knocked out in the donor animal's embryo. The embryo is then injected with pluripotent stem cells made from the tissue of the intended recipient. The stem cells move in to fill the void, creating a functioning organ. This technique has been used to create mouse pancreases in rats, which were then successfully transplanted into mice. But the human-pig "chimeras" recently created by scientists were destroyed after 28 days, and no one plans to bring such an embryo to term anytime soon. "The problem is that cells don't stay put; they move around," explains Father Kevin FitzGerald, a bioethicist at Georgetown University. "If human cells wind up in a pig's brain, that leads to a really interesting conundrum. What if it's self-aware? Are you going to kill it?"

Much further along, and less ethically fraught, is a technique in which decellularized pig organs act as a scaffold for human cells. A Minnesota-based company called Miromatrix Medical is working with Mayo Clinic researchers to develop this method. First, a mild detergent is pumped through the organ, washing away all cellular material. The remaining structure, composed mainly of collagen, is placed in a bioreactor, where it's seeded with human cells. In theory, each type of cell that normally populates the organ will migrate to its proper place (a process that naturally occurs during fetal development, though it remains poorly understood). One potential advantage of this system is that it doesn't require genetically modified pigs; nor will the animals have to be raised under controlled conditions to avoid exposure to transmissible pathogens. Instead, the organs can be collected from ordinary slaughterhouses.

Recellularized livers in bioreactors

(Courtesy of Miromatrix)

"We've removed the cells, so we don't have to worry about latent viruses," explains CEO Jeff Ross, who describes his future product as a bioengineered human organ rather than a xeno-organ. That makes PERV a nonissue. To shorten the pathway to approval by the Food and Drug Administration, the replacement cells will initially come from human organs not suitable for transplant. But eventually, they'll be taken from the recipient (as in blastocyst complementation), which should eliminate the need for immunosuppression.

Miromatrix plans to offer livers first, followed by kidneys, hearts, and eventually lungs and pancreases. The company recently succeeded in seeding several decellularized pig livers with human and porcine endothelial cells, which flocked obediently to the blood vessels. Transplanted into young pigs, the organs showed unimpaired circulation, with no sign of clotting. The next step is to seed decellularized livers with all four liver cell types and see whether the transplanted organs can keep recipient pigs alive.

Ross hopes to launch clinical trials by 2020, and several other groups (including Cooper's, which plans to start with kidneys) envision a similar timeline. Investors seem to share their confidence. The biggest backer of xenotransplantation efforts is United Therapeutics, whose founder and co-CEO, Martine Rothblatt, has a daughter with a lung condition that may someday require a transplant; since 2011, the biotech firm has poured at least $100 million into companies pursuing such technologies, while supporting research by Cooper, Mohiuddin, and other leaders in the field. Church and Yang, at Harvard, have formed their own company, eGenesis, bringing in a reported $40 million in funding; Miromatrix has raised a comparable amount.

It's impossible to predict who will win the xenotransplantation race, or whether some new obstacle will stop the competition in its tracks. But Jennifer Cisneros is rooting for all the contestants. "These technologies could save my life," she says. If she hasn't found another kidney before trials begin, she has just one request: "Sign me up."

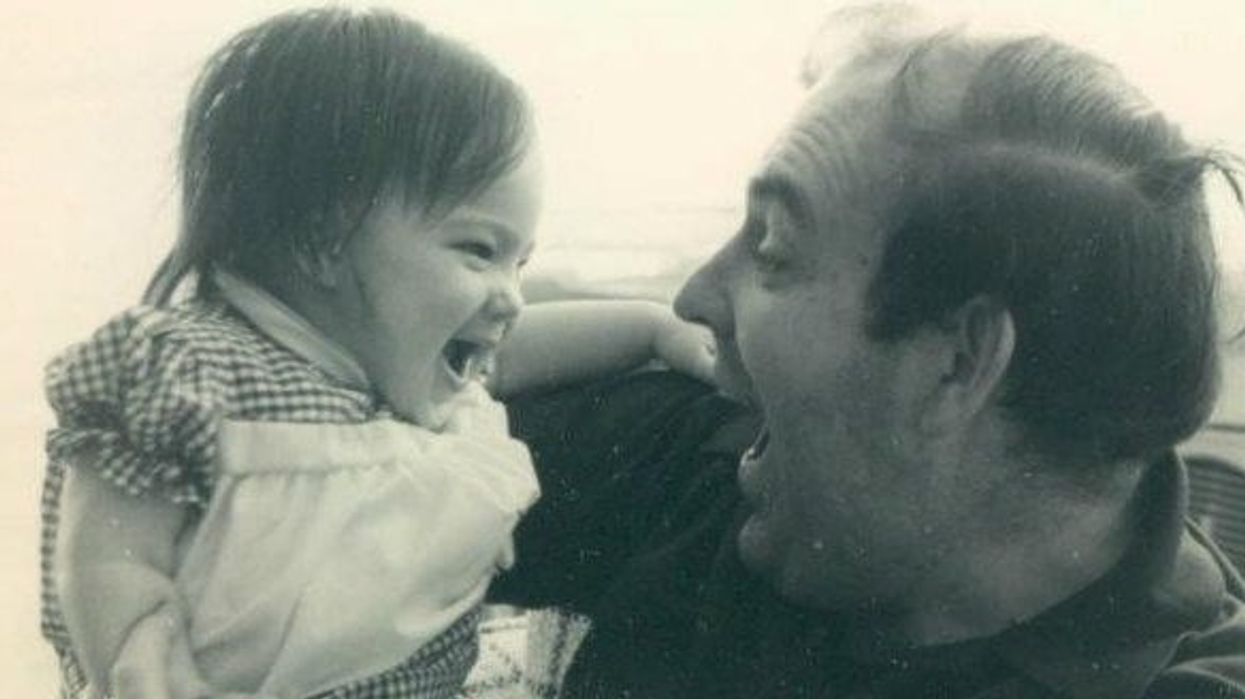

The author as a child with her father in 1981, before he was diagnosed with Alzheimer's.

Editor's Note: A team of researchers in Italy recently used artificial intelligence and machine learning to diagnose Alzheimer's disease on a brain scan an entire decade before symptoms show up in the patient. While some people argue that early detection is critical, others believe the knowledge would do more harm than good. LeapsMag invited contributors with opposite opinions to share their perspectives.

Alzheimer's doesn't run in my family. When my father was diagnosed at the age of 58, we looked at his family history. Both his parents lived into their late 80s. All of their surviving siblings were similarly long-lived, and none had had Alzheimer's or any related dementia. My dad had spent 20 years working for the United Nations in Africa in the '60s and '70s. He was convinced that the Alzheimer's had come from his time spent in dodgy mines, where he was exposed, without proper protection, to all kinds of chemical processes.

Maybe that was true. Maybe it wasn't. The theory that metals, particularly aluminum, are an environmental factor leading to Alzheimer's has been around for a while. It's mostly been debunked, but clearly something is causing this epidemic, as the vast majority of cases in the world today are age-related. But no one knows what the trigger is, nor are we close to knowing.

If my father had had the Alzheimer's gene, I would go get myself checked for it. If some new MRI were commercially available to scan my brain and let me know if I was developing Alzheimer's, I would also take that test. There are four reasons why.

First, studies have shown that lifestyle has a major impact on the disease. I already run three miles a day. I eat relatively healthily. But like anyone, I don't live strictly on boiled chicken and broccoli. And I definitely enjoy a glass of wine or two. If I knew I had a propensity for the disease, or was developing it, I would be more diligent. I would eat my broccoli and cut out my wine. Life would be less fun, but I'd get more life, and that's what's important.

The last picture taken of the author with her father before his death, in 2015.

Second, I would have time to create an end-of-life plan, something my father never did. He told me repeatedly early in his diagnosis that he did not want to live when he no longer knew me, when he became a burden, when he couldn't feed or bathe himself. I did my best in his final years to help him die sooner: I know that was what he wanted. But, given U.S. laws, all that meant was taking him off his heart and stroke medications and letting him eat anything he wanted, no matter how unhealthy. Knowing what's to come, having seen him go through it, I might consider moving to Belgium, which has begun to allow assisted suicide for people living with Alzheimer's and dementia if they can clearly state their intentions early in the disease, while they still have clarity of mind.

Next, I could help. Right now, there are dozens of Alzheimer's and dementia studies in the works. They are short thousands of willing test subjects. One of the top barriers to learning what's triggering the disease, and finding a cure, is populating these studies. So, knowing would make me a stronger candidate and would potentially help others down the road.

Finally, it would change my priorities. My father died the longest death possible: he succumbed last year, more than 15 years after his diagnosis. My mother died the quickest way possible: she had a stress-related brain aneurysm 10 years after my father's diagnosis. Caring for him was too much for her, and aneurysms ran in her family; her mother died of one as well. I already get a scan once every five years to see if I'm developing a brain aneurysm. If I am, the odds are only 66% that they can operate on it; some aneurysms, like my mother's, develop much too deep in the brain to operate on.

Would she have wanted to know? Even though the aneurysm in her case was inoperable? I'm not sure. But I imagine if she had known, she would've lived her final years differently. She might have taken that trip to Alaska that she debated but thought was too expensive. She might have gotten organized earlier to make out a will so I wasn't left with chaos in the wake of her death; we'd planned for my father's death, knowing he was ill, but not my mother's. And she might have finally gotten around to dictating her story to me, as she'd always promised me she would when she found the time.

With my startup MemoryWell, I spend my life now collecting senior stories before they are lost, in part because telling my father's story at the end of his life helped his care. But it's also in part for the story I lost with my mother.

If I knew that my time was limited, I wouldn't worry so much about saving for retirement. I'd make progress on my bucket list: hike Machu Picchu, scuba dive in the Maldives, or raft the Grand Canyon. I'd tell my loved ones, as often as I could in the time remaining, how much they mean to me. And I would spend more time writing my own story to pass down, finally finishing the book I've been working on. Maybe it's the writer in me, or maybe it's that I don't have kids of my own yet to carry on a legacy, but I'd want my story to be known, so that others could learn from my experiences. And that's the biggest gift knowing would give me.

Editor's Note: Consider the other side of the argument here.