How Should Genetic Engineering Shape Our Future?

An artist's rendering of genetic codes.

Terror. Error. Success. These are the three outcomes that ethicists evaluating a new technology should fear. The possibility that a breakthrough might be used maliciously. The possibility that newly empowered scientists might make a catastrophic mistake. And the possibility that a technology will be so successful that it will change how we live in ways that we can only guess—and that we may not want.

It was true for the scientists behind the Manhattan Project, who bequeathed a fear of nuclear terror and nuclear error, even as global security is ultimately defined by these weapons of mass destruction. It was true for the developers of the automobile, whose invention has been weaponized by terrorists and kills 3,400 people by accident each day, even as the more than 1 billion cars on the road today have utterly reshaped where we live and how we move. And it is true for the researchers behind the revolution in gene editing and writing.

Put simply, these tools will allow scientists to practice genetic engineering on a scale that is simultaneously far more precise and far more ambitious than ever before. Editing techniques like CRISPR enable exact genetic repairs through a simple cut and paste of DNA, while synthetic biologists aim to redo entire genomes through the writing and substitution of synthetic genes. The technologies are complementary, and they herald an era when the book of life will be not just readable, but rewritable. Food crops, endangered animals, even the human body itself—all will eventually be programmable.

The benefits are easy to imagine: more sustainable crops; cures for terminal genetic disorders; even an end to infertility. Just as easy to picture are the ethical pitfalls: the negative images of those same benefits.

Terror is the most straightforward. States have sought to use biology as a weapon at least since invading armies flung the corpses of plague victims into besieged castles. The 1975 Biological Weapons Convention banned, with general success, the development and production of offensive bioweapons, though a handful of lone terrorists and groups like the Oregon-based Rajneeshee cult have still carried out limited bioweapon attacks. Those incidents ultimately caused little death and damage, in part because medical science is mostly capable of defending us from the pathogens that are most easily weaponized. But gene editing and writing offer the chance to engineer germs that could be far more effective than anything nature could develop. Imagine a virus that combines the lethality of Ebola with the transmissibility of the common cold. In the new world of biology, if you can imagine something, you will eventually be able to create it.

That's one reason why James Clapper, then the U.S. director of national intelligence, added gene editing to the list of threats posed by "weapons of mass destruction and proliferation" in 2016. But these new tools aren't merely dangerous in the wrong hands; they can also be dangerous in the right hands. The list of lab accidents involving lethal bugs is much longer than you'd want to know, at least if you're the sort of person who likes to sleep at night. The U.S. recently lifted a pause on federal funding for research that works to make existing pathogens, like the H5N1 avian flu virus, more virulent and transmissible, often using new technologies like gene editing. Such work can help medicine better prepare for what nature might throw at us, but it could also make the consequences of a lab error far more catastrophic. There's also the possibility that the use of gene editing and writing in nature (say, by CRISPRing disease-carrying mosquitoes to make them sterile) could backfire in some unforeseen way. Add in the fact that the techniques behind gene editing and writing are becoming simpler and more automated every year, and eventually millions of people will be capable, through terror or error, of unleashing something awful on the world.

The good news is that both the government and the researchers driving these technologies are increasingly aware of the risks of bioterror and error. One government program, the Functional Genomic and Computational Assessment of Threats (Fun GCAT), provides funding for scientists to scan genetic data looking for the "accidental or intentional creation of a biological threat." Those in the biotech industry know to keep an eye out for suspicious orders—say, a new customer who orders part of the sequence of the Ebola or smallpox virus. "With every invention there is a good use and a bad use," Emily Leproust, the CEO of the commercial DNA synthesis startup Twist Bioscience, said in a recent interview. "What we try hard to do is put in place as many systems as we can to maximize the good stuff, and minimize any negative impact."

But the greatest ethical challenges in gene editing and writing will arise not from malevolence or mistakes, but from success. Through a new technology called in vitro gametogenesis (IVG), scientists are learning how to turn adult human cells, such as skin cells, into lab-made sperm and egg cells. That would be a huge breakthrough for the infertile, or for same-sex couples who want to conceive a child biologically related to both partners. It would also open the door to using gene editing to tinker with embryos created from those lab-made cells. At first, interventions would address obvious genetic disorders, but the same tools would likely allow the engineering of a child's intelligence, height and other characteristics. We might be morally repelled today by such an ability, as many scientists and ethicists were repelled by in vitro fertilization (IVF) when it was introduced four decades ago. Yet more than a million babies in the U.S. have been born through IVF in the years since. Ethics can evolve along with technology.

Fertility is just one human institution that stands to be changed utterly by gene editing and writing, and it's a change we can at least imagine. As the new biology grows more ambitious, it will alter society in ways we can't begin to picture. Harvard's George Church and New York University's Jef Boeke are leading an effort called HGP-Write to create a completely synthetic human genome. While gene editing allows scientists to make small changes to the genome, the gene synthesis that Church and his collaborators are developing allows for total genetic rewrites. "It's a difference between editing a book and writing one," Church said in an interview earlier this year.

Church is already working on synthesizing organs that would be resistant to viruses, while other researchers like Harris Wang at Columbia University are experimenting with bioengineering mammalian cells to produce nutrients like amino acids that we currently need to get from food. The horizon is endless—and so are the ethical concerns of success. What if parents feel pressure to engineer their children just so they don't fall behind their IVG peers? What if only the rich are able to access synthetic biology technologies that could make them stronger, smarter and longer lived? Could inequality become encoded in the genome?

These questions differ from the fears of terror and error around biosecurity, because they ask us to think hard about what kind of future we want. To their credit, Church and his collaborators have engaged bioethicists from the start of their work, as have the pioneers behind CRISPR. But the challenges coming from successful gene editing and writing are too large to be outsourced to professional ethicists. These new technologies offer control over the code of life, but only we as a society can seize control over where these tools will take us.

Thanks to safety precautions taken during the COVID-19 pandemic, a strain of influenza has been completely eliminated.

If you were one of the millions who masked up, washed your hands thoroughly and socially distanced, pat yourself on the back—you may have helped change the course of human history.

Scientists say that thanks to these safety precautions, which were introduced in early 2020 to stop transmission of the novel coronavirus behind COVID-19, a strain of influenza has been completely eliminated. This marks the first time in human history that a virus has been wiped out through non-pharmaceutical interventions rather than vaccines.

The flu shot, explained

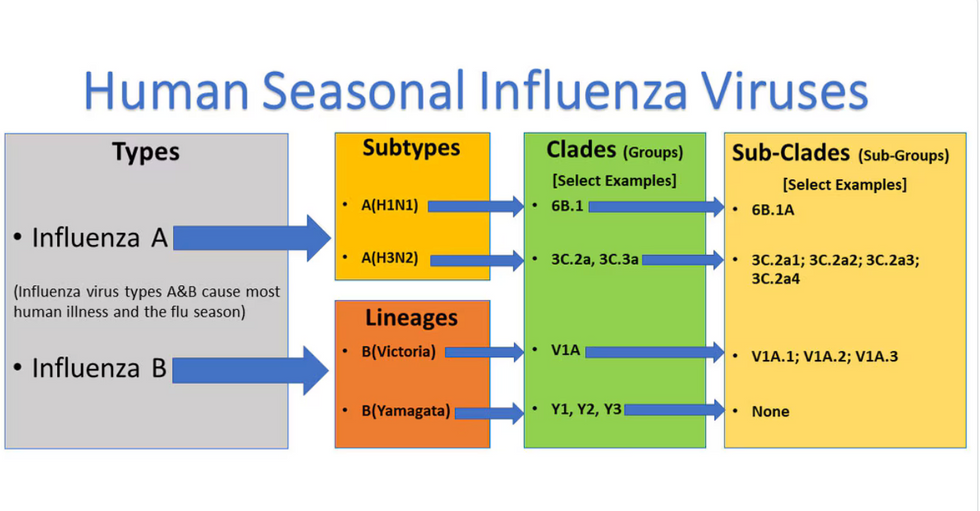

Influenza viruses type A and B are responsible for the majority of human illnesses and the flu season.

Centers for Disease Control and Prevention

For decades, flu shots have protected against two types of the influenza virus: type A and type B. There are four types of influenza in existence (A, B, C, and D), but only types A, B, and C are known to infect humans, and only A and B cause the seasonal epidemics we call flu season. In other words, if you catch the flu during flu season, you’re most likely sick with flu type A or B.

Most flu shots contain inactivated, or killed, influenza virus. These inactivated viruses can’t cause illness in humans, but when administered as part of a vaccine, they teach a person’s immune system to recognize and fight those viruses when it encounters them in the wild.

Each spring, a panel of experts gives a recommendation to the US Food and Drug Administration on which strains of each flu type to include in that year’s flu vaccine, depending on what surveillance data says is circulating and what they believe is likely to cause the most illness during the upcoming flu season. For the past decade, Americans have had access to vaccines that provide protection against two strains of influenza A and two lineages of influenza B, known as the Victoria lineage and the Yamagata lineage. But this year, the seasonal flu shot won’t include the Yamagata lineage, because it no longer appears to be circulating among humans.

How Yamagata Disappeared

Flu surveillance data from the Global Initiative on Sharing All Influenza Data (GISAID) shows that the Yamagata lineage of flu type B has not been sequenced since April 2020.

Nature

Experts believe that the Yamagata lineage was already in decline before the pandemic, likely because it was naturally less capable of infecting large numbers of people than the other strains. When COVID-19 arrived, the resulting safety precautions (social distancing, isolation, hand-washing, and masking) were enough to drive the lineage to extinction.

Because the lineage has not been detected since 2020, the FDA elected to remove it from the seasonal flu vaccine. As a result, the annual flu shot will be trivalent (three-component) rather than quadrivalent (four-component) for the first time since 2012.

Should I still get the flu shot?

The flu shot will protect against fewer strains this year—but that doesn’t mean we should skip it. Influenza places a substantial health burden on the United States every year, responsible for hundreds of thousands of hospitalizations and tens of thousands of deaths. The flu shot has been shown to prevent millions of illnesses each year (more than six million during the 2022-2023 season). And while it’s still possible to catch the flu after getting the flu shot, studies show that people are far less likely to be hospitalized or die when they’re vaccinated.

Another unexpected benefit of dropping the Yamagata lineage from the seasonal vaccine? It could speed up flu vaccine production and let manufacturers make more doses, helping countries that face flu vaccine shortages and potentially saving more lives.

After his grandmother’s dementia diagnosis, one man invented a snack to keep her healthy and hydrated.

Founder Lewis Hornby and his grandmother Pat, sampling Jelly Drops—an edible gummy containing water and life-saving electrolytes.

On a visit to his grandmother’s nursing home in 2016, college student Lewis Hornby made a shocking discovery: Dehydration is a common (and dangerous) problem among seniors, especially those who have been diagnosed with dementia.

Hornby’s grandmother, Pat, had struggled to keep up her water intake as she got older, a common issue among seniors. As we age, our body composition changes and we naturally hold less water than younger adults or children, so it’s easier to become dehydrated quickly if those fluids aren’t replenished. Our thirst signals also weaken with age, meaning our bodies are not as good as they once were at letting us know that we need to rehydrate. Together, these changes create a perfect storm for dehydration. In Pat’s case, her dehydration was so severe she nearly died.

When Hornby visited his grandmother at her nursing home afterward, he learned that dehydration especially affects people with dementia, as they often don’t feel thirst cues at all or may not recognize how to use cups correctly. But while dementia patients often don’t remember to drink water, it seemed to Hornby that they had less trouble remembering to eat, particularly candy.

Hornby wanted to create a solution for elderly people who struggled to keep up their fluid intake. He spent the next eighteen months researching and designing a product and securing funding for his project. In 2019, Hornby won a sizable grant from the Alzheimer’s Society, a UK-based care and research charity for people with dementia and their caregivers. Together, through the charity’s Accelerator Program, they created a bite-sized, sugar-free, edible jelly drop that looked and tasted like candy. The candy, called Jelly Drops, contained 95% water plus electrolytes, important minerals that are often lost during dehydration. The final product launched in 2020 and was an immediate success. The drops provided extra hydration to the elderly and helped keep dementia patients safe, since dehydration commonly leads to confusion, hospitalization, and sometimes even death.

Not only did Jelly Drops quickly become a favorite snack among dementia patients in the UK, but they also provided an extra boost of hydration in hospitals during the pandemic. In NHS coronavirus wards, patients infected with the virus were regularly given Jelly Drops to keep their fluid levels normal, and staff members snacked on them as well, since long shifts and the personal protective equipment (PPE) they were required to wear often left them feeling parched.

In April 2022, Jelly Drops launched in the United States. The company continues to donate 1% of its profits to help fund Alzheimer’s research.