Meet the Scientists on the Frontlines of Protecting Humanity from a Man-Made Pathogen

From left: Jean Peccoud, Randall Murch, and Neeraj Rao.

Jean Peccoud wasn't expecting an email from the FBI. He definitely wasn't expecting the agency to invite him to a meeting. "My reaction was, 'What did I do wrong to be on the FBI watch list?'" he recalls.

He didn't know what the feds could possibly want from him. "I was mostly scared at this point," he says. "I was deeply disturbed by the whole thing."

But he decided to go anyway, and when he traveled to San Francisco for the 2008 gathering, the reason for the e-vite became clear: The FBI was reaching out to researchers like him (scientists interested in synthetic biology) in anticipation of the potential nefarious uses of this technology. "The whole purpose of the meeting was, 'Let's start talking to each other before we actually need to talk to each other,'" says Peccoud, now a professor of chemical and biological engineering at Colorado State University. "'And let's make sure next time you get an email from the FBI, you don't freak out.'"

Synthetic biology—which Peccoud defines as "the application of engineering methods to biological systems"—holds great power, and with that (as always) comes great responsibility. When you can synthesize genetic material in a lab, you can create new ways of diagnosing and treating people, and even new food ingredients. But you can also "print" the genetic sequence of a virus or virulent bacterium.

And while it's not easy, it's also not as hard as it could be, in part because dangerous sequences have publicly available blueprints. You can use those blueprints for white-hat research (which is, indeed, why the open blueprints exist), or you can use them for a black-hat attack. You could synthesize a dangerous pathogen's code on purpose, or you could do so unwittingly because someone tampered with your digital instructions. Ordering synthetic genes for viral sequences, says Peccoud, would likely be more difficult today than it was a decade ago.

"There is more awareness of the industry, and they are taking this more seriously," he says. "There is no specific regulation, though."

Trying to lock down the interconnected machines that enable synthetic biology, secure its lab processes, and keep dangerous pathogens out of the hands of bad actors is part of a relatively new field: cyberbiosecurity, whose name Peccoud and colleagues introduced in a 2018 paper.

Biological threats feel especially acute right now, during the ongoing pandemic. COVID-19 is a natural pathogen, not one engineered in a lab. But future outbreaks could start from a bug nature didn't build, if the wrong people get hold of the right genetic sequences and put them together in the right order. Preventing that, by securing the equipment and processes that make synthetic biology possible, is part of why the field of cyberbiosecurity was born.

The Origin Story

It is perhaps no coincidence that the FBI pinged Peccoud when it did: soon after a journalist ordered a sequence of smallpox DNA and wrote, for The Guardian, about how easy it was. "That was not good press for anybody," says Peccoud. Previously, in 2002, the Pentagon had funded SUNY Stony Brook researchers to try something similar: They ordered bits of polio DNA piecemeal and, over the course of three years, strung them together.

Although many years have passed since those early gotchas, the current patchwork of regulations still wouldn't necessarily prevent someone from pulling similar tricks now, and the technological systems that synthetic biology runs on are more intertwined — and so perhaps more hackable — than ever. Researchers like Peccoud are working to bring awareness to those potential problems, to promote accountability, and to provide early-detection tools that would catch the whiff of a rotten act before it became one.

Peccoud notes that if someone wants to get access to a specific pathogen, it is probably easier to collect it from the environment or take it from a biodefense lab than to whip it up synthetically. "However, people could use genetic databases to design a system that combines different genes in a way that would make them dangerous together without each of the components being dangerous on its own," he says. "This would be much more difficult to detect."

After his meeting with the FBI, Peccoud grew more interested in these sorts of security questions. So he was paying attention when, in 2010, the Department of Health and Human Services — now helping manage the response to COVID-19 — created guidance for how to screen synthetic biology orders, to make sure suppliers didn't accidentally send bad actors the sequences that make up bad genomes.

Guidance is nice, Peccoud thought, but it's just words. He wanted to turn those words into action: into a computer program. "I didn't know if it was something you can run on a desktop or if you need a supercomputer to run it," he says. So, one summer, he tasked a team of student researchers with poring over the sentences and turning them into scripts. "I let the FBI know," he says, having both learned his lesson and wanting to get in on the game.
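The article doesn't say exactly what the students' scripts did beyond encoding the screening guidance. As a hypothetical illustration of the general idea (checking an order against a database of sequences of concern), the sketch below chops each database sequence into overlapping windows of length `k` and counts how many windows of an incoming order match exactly. This is an assumed, simplified technique: real screening relies on curated databases and similarity searches that tolerate mismatches, the toy database string is made up, and the output is a report for a human to review rather than a decision. Because DNA is double-stranded, the sketch also checks the order's reverse complement.

```python
from collections import defaultdict
from typing import Dict, List, Set

_COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Reverse complement of an uppercase DNA string (A<->T, C<->G, then reverse)."""
    return seq.translate(_COMPLEMENT)[::-1]


def build_index(concern_db: Dict[str, str], k: int) -> Dict[str, Set[str]]:
    """Map every length-k window in the database to the entry IDs that contain it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for entry_id, seq in concern_db.items():
        seq = seq.upper()
        for i in range(len(seq) - k + 1):
            index[seq[i:i + k]].add(entry_id)
    return dict(index)


def screen_order(order: str, index: Dict[str, Set[str]], k: int) -> Dict[str, int]:
    """Count window hits per database entry, checking both strands of the order."""
    hits: Dict[str, int] = defaultdict(int)
    forward = order.upper()
    for strand in (forward, reverse_complement(forward)):
        for i in range(len(strand) - k + 1):
            for entry_id in index.get(strand[i:i + k], ()):
                hits[entry_id] += 1
    return dict(hits)


def flag_for_review(hits: Dict[str, int], min_hits: int = 1) -> List[str]:
    """Entries to report; whether to fill the order is left to a person."""
    return sorted(entry for entry, n in hits.items() if n >= min_hits)


# Made-up "sequence of concern" for illustration only.
toy_db = {"concern_1": "GATTACAGATTACACCGGTTAAGGCC"}
index = build_index(toy_db, k=10)

# An order that hides the first 15 bases of concern_1 on the opposite strand.
suspicious = "AAAA" + reverse_complement(toy_db["concern_1"][:15]) + "AAAA"
print(flag_for_review(screen_order(suspicious, index, k=10)))
```

The hidden fragment is caught only because the reverse complement is checked. Counting hits per entry produces something like a similarity report, and the `min_hits` threshold (an arbitrary illustrative value) is where a real system would tune sensitivity against false alarms.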

Peccoud later joined forces with Randall Murch, a former FBI agent and current Virginia Tech professor, and a team of colleagues from both Virginia Tech and the University of Nebraska-Lincoln, on a prototype project for the Department of Defense. They went into a lab at the University of Nebraska-Lincoln and assessed all its cyberbio-vulnerabilities. The lab develops and produces prototype vaccines, therapeutics, and prophylactic components: exactly the kind of place that you always, and especially right now, want to keep secure.

The team found dozens of Achilles' heels, and put them in a private report. Not long after that project, the two and their colleagues wrote the paper that first used the term "cyberbiosecurity." A second paper, led by Murch, came out five months later and provided a proposed definition and more comprehensive perspective on cyberbiosecurity. But although it's now a buzzword, it's the definition, not the jargon, that matters. "Frankly, I don't really care if they call it cyberbiosecurity," says Murch. Call it what you want: Just pay attention to its tenets.

A Database of Scary Sequences

Peccoud and Murch, of course, aren't the only ones working to screen sequences and secure devices. At the nonprofit Battelle Memorial Institute in Columbus, Ohio, for instance, scientists are working on solutions that balance the openness inherent to science and the closure that can stop bad stuff. "There's a challenge there that you want to enable research but you want to make sure that what people are ordering is safe," says the organization's Neeraj Rao.

Rao can't talk about the work Battelle does for IARPA, the Intelligence Advanced Research Projects Activity (the research arm of the U.S. intelligence community), on a project called Fun GCAT, which aims to use computational tools to deep-screen gene-sequence orders to see if they pose a threat. He can, though, talk about a sister internal project: ThreatSEQ (pronounced, of course, "threat seek").

The project started when "a government customer" (as usual, no one will say which) asked Battelle to curate a list of dangerous toxins and pathogens, and their genetic sequences. The researchers even started tagging sequences according to their function — like whether a particular sequence is involved in a germ's virulence or toxicity. That helps if someone is trying to use synthetic biology not to gin up a yawn-inducing old bug but to engineer a totally new one. "How do you essentially predict what the function of a novel sequence is?" says Rao. You look at what other, similar bits of code do.
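The idea Rao describes, inferring what an unfamiliar sequence might do from the annotated sequences it most resembles, can be sketched in a few lines. This is a hypothetical illustration, not Battelle's method: it swaps in a simple k-mer Jaccard similarity where real tools typically use alignment-based searches, and the entry IDs, function tags, and sequences are made-up toy strings rather than real ones.

```python
from typing import Dict, Optional, Set, Tuple


def kmers(seq: str, k: int = 5) -> Set[str]:
    """Return the set of all length-k substrings of a DNA string."""
    seq = seq.upper()
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Shared k-mers divided by total distinct k-mers (0.0 if both sets are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def predict_function(
    query: str,
    annotated: Dict[str, Tuple[str, str]],
    k: int = 5,
    min_similarity: float = 0.3,
) -> Optional[Tuple[str, str, float]]:
    """Borrow the function tag of the most similar annotated entry.

    `annotated` maps a hypothetical entry ID to (sequence, function_tag).
    Returns (entry_id, function_tag, similarity), or None when no entry
    reaches `min_similarity` (an arbitrary illustrative cutoff).
    """
    query_kmers = kmers(query, k)
    best: Optional[Tuple[str, str, float]] = None
    for entry_id, (seq, tag) in annotated.items():
        score = jaccard(query_kmers, kmers(seq, k))
        if best is None or score > best[2]:
            best = (entry_id, tag, score)
    if best is None or best[2] < min_similarity:
        return None
    return best


# Toy, made-up entries; these are not real pathogen sequences.
toy_db = {
    "entry_A": ("ATGGCGTACGTTAGCCTAGGCTAACG", "toxicity"),
    "entry_B": ("TTGACCGGTAAACCCGGGTTTAAACC", "virulence"),
}

# A "novel" query that differs from entry_A at a single base.
print(predict_function("ATGGCGTACGTTAGCCTAGGCTTACG", toy_db))
```

A single-base change still leaves most 5-mers shared with `entry_A`, so the query inherits its tag; a sequence unlike anything in the database returns `None`. A production system would weigh many hits against a far larger curated database.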

"We were creating wiki of all these nasty things," says Rao. As they were working, they realized that DNA manufacturers could potentially scan in sequences that people ordered, run them against the database, and see if anything scary matched up. Kind of like that plagiarism software your college professors used.

Battelle began offering its screening capability as ThreatSEQ. When customers (currently including Twist Bioscience) submit their sequences and get a report back, the manufacturers make the final decision about whether to fulfill a flagged order: whether, in the analogy, to give an F for plagiarism. After all, legitimate researchers do legitimately need to have DNA from legitimately bad organisms.

"Maybe it's the CDC," says Rao. "If things check out, oftentimes [the manufacturers] will fulfill the order." If it's your aggrieved uncle seeking the virulent pathogen, maybe not. But ultimately, no one is stopping the manufacturers from doing so.

Beyond screening orders, though, cyberbiosecurity also includes locking down the machines that make the genetic sequences. "Somebody now doesn't need physical access to infrastructure to tamper with it," says Rao. So that infrastructure needs the same cyber protections as other internet-connected devices.

Scientists are also now using DNA to store data, encoding information in its bases rather than on a hard drive. To retrieve the data, you sequence the DNA and read it back into a computer. But think like an attacker and a risk appears: Someone could, for instance, hide malicious code in the genetic sequence, so that when a researcher went to retrieve her data, her computer would crash or infect others on the network.
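As a rough illustration of encoding information in DNA bases, the sketch below uses the simplest possible scheme, two bits per base (A=00, C=01, G=10, T=11). That mapping is an assumption for illustration; practical DNA-storage systems use more elaborate codes with error correction and constraints on the sequences they produce. The decoder also refuses anything that isn't a clean A/C/G/T string, a small nod to the idea that data read back from a sequencer should be treated as untrusted input.

```python
BASES = "ACGT"  # index = 2-bit value: A=00, C=01, G=10, T=11
VALUE = {base: i for i, base in enumerate(BASES)}


def encode(data: bytes) -> str:
    """Turn each byte into four bases, most significant bit pair first."""
    return "".join(
        BASES[(byte >> shift) & 0b11] for byte in data for shift in (6, 4, 2, 0)
    )


def decode(dna: str) -> bytes:
    """Invert encode(), rejecting malformed input instead of guessing."""
    if len(dna) % 4 != 0:
        raise ValueError("length must be a multiple of 4 bases")
    bad = set(dna) - set(BASES)
    if bad:
        raise ValueError(f"unexpected characters: {sorted(bad)}")
    out = bytearray()
    for i in range(0, len(dna), 4):
        byte = 0
        for base in dna[i:i + 4]:
            byte = (byte << 2) | VALUE[base]
        out.append(byte)
    return bytes(out)


strand = encode(b"Hi")
print(strand)          # CAGACGGC
print(decode(strand))  # b'Hi'
```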

Something like that actually happened in 2017, in research presented at the USENIX Security Symposium, an annual computer-security conference: Researchers from the University of Washington encoded malware into DNA, and when the DNA was sequenced, the resulting data exploited a vulnerability in the software that processed it, giving the researchers control of the computer running that software.

"This vulnerability could be just the opening an adversary needs to compromise an organization's systems," Inspirion Biosciences' J. Craig Reed and Nicolas Dunaway wrote in a paper for Frontiers in Bioengineering and Biotechnology, included in an e-book that Murch edited called Mapping the Cyberbiosecurity Enterprise.

Where We Go From Here

So what to do about all this? That's hard to say, in part because we don't know how big a problem any of it currently poses. As noted in Mapping the Cyberbiosecurity Enterprise, "Information about private sector infrastructure vulnerabilities or data breaches is protected from public release by the Protected Critical Infrastructure Information (PCII) Program," if private companies share the information with the government. "Government sector vulnerabilities or data breaches," meanwhile, "are rarely shared with the public."

The regulations that could rein in problems aren't as robust as many would like them to be, and much good behavior is technically voluntary — although guidelines and best practices do exist from organizations like the International Gene Synthesis Consortium and the National Institute of Standards and Technology.

Rao thinks it would be smart if grant-giving agencies like the National Institutes of Health and the National Science Foundation required any scientists who took their money to work with manufacturing companies that screen sequences. But he also still thinks we're on our way to being ahead of the curve, in terms of preventing print-your-own bioproblems: "What I think is encouraging right now is the fact that we're even having this discussion," says Rao.

Peccoud, for his part, has worked to keep such conversations going, including by doing training for the FBI and planning a workshop for students in which they imagine and work to guard against the malicious use of their research. But actually, Peccoud believes that human error, flawed lab processes, and mislabeled samples might be bigger threats than the outside ones. "Way too often, I think that people think of security as, 'Oh, there is a bad guy going after me,' and the main thing you should be worried about is yourself and errors," he says.

Murch thinks we're only at the beginning of understanding where our weak points are, and how many times they've been bruised. Decreasing those contusions, though, won't just take more secure systems. "The answer won't be technical only," he says. It'll be social, political, policy-related, and economic — a cultural revolution all its own.

Recent advancements in engineering mean that the first preclinical trials for an artificial kidney could happen as soon as 18 months from now

Like all those whose kidneys have failed, Scott Burton relies on dialysis. For nearly two decades, Burton has been hooked up (or, since 2020, has hooked himself up at home) to a dialysis machine that performs the job his kidneys normally would. The process is arduous, time-consuming, and expensive. Except for a brief window before his body rejected a kidney transplant, Burton has depended on machines to take the place of his kidneys since he was 12 years old. His whole life, the 39-year-old says, revolves around dialysis.

“Whenever I try to plan anything, I also have to plan my dialysis,” says Burton, who works as a freelance videographer and editor. “It’s a full-time job in itself.”

Many of those on dialysis are in line for a kidney transplant that would allow them to trade thrice-weekly dialysis and strict dietary limits for a lifetime of immunosuppressants. Burton’s previous transplant means that his body will likely reject another donated kidney unless it matches perfectly—something he’s not counting on. It’s why he’s enthusiastic about the development of artificial kidneys, small wearable or implantable devices that would do the job of a healthy kidney while giving users like Burton more flexibility for traveling, working, and more.

Still, the devices aren’t ready for testing in humans—yet. But recent advancements in engineering mean that the first preclinical trials for an artificial kidney could happen as soon as 18 months from now, according to Jonathan Himmelfarb, a nephrologist at the University of Washington.

“It would liberate people with kidney failure,” Himmelfarb says.

An engineering marvel

Compared to the heart or the brain, the kidney doesn’t get as much respect from the medical profession, but its job is remarkably complex. “It does hundreds of different things,” says UCLA’s Ira Kurtz.

Kurtz would know. He’s worked as a nephrologist for 37 years, devoting his career to helping those with kidney disease. While his colleagues in cardiology and endocrinology have seen major advances in the development of artificial hearts and insulin pumps, little has changed for patients on hemodialysis. The machines remain bulky and require large volumes of a liquid called dialysate to remove toxins from a patient’s blood, along with gallons of purified water. A kidney transplant is the next best thing to someone’s own functioning organ, but with over 600,000 Americans on dialysis and only about 25,000 kidney transplants performed in the U.S. each year, most of those in kidney failure are stuck on dialysis.

Part of the lack of progress in artificial kidney design is the sheer complexity of the kidney’s job. To build an artificial heart, Kurtz says, you basically need to engineer a pump. An artificial pancreas needs to balance blood sugar levels with insulin secretion. While neither of these tasks is simple, they are fairly straightforward. The kidney, on the other hand, does more than get rid of waste products like urea and other toxins. Each of its 45 different cell types does something different, and together they help regulate electrolytes like sodium, potassium, and phosphorus; maintain blood pressure and water balance; guide the body’s hormonal and inflammatory responses; and aid in the formation of red blood cells.

There's been little progress for patients during Ira Kurtz's 37 years as a nephrologist. Artificial kidneys would change that. (Photo: UCLA)

Dialysis primarily filters waste, and does so well enough to keep someone alive, but according to Kurtz it isn’t a true artificial kidney because it doesn’t perform the kidney’s other jobs, such as sensing levels of toxins, wastes, and electrolytes in the blood. Because of the size and water requirements of existing dialysis machines, the equipment isn’t portable. Physicians write a prescription for a certain duration of dialysis and assess how well it’s working with semi-regular blood tests. The process of dialysis itself, however, is conducted blind: Doctors can’t tell how much dialysis a patient needs based on kidney values at the time of treatment, says Meera Harhay, a nephrologist at Drexel University in Philadelphia.

But it’s the impact of dialysis on their day-to-day lives that creates the most problems for patients. Only one-quarter of those on dialysis are able to remain employed (compared to 85% of similar-aged adults), and many report a low quality of life. Having more flexibility would make a major difference for her patients, Harhay says.

“Almost half their week is taken up by the burden of their treatment. It really eats away at their freedom and their ability to do things that add value to their life,” she says.

Art imitates life

The challenge for artificial kidney designers is how to compress the kidney’s natural functions into a portable, wearable, or implantable device that wouldn’t need constant access to gallons of purified and sterilized water. The other universal challenge they face is ensuring that any part of the artificial kidney that comes into contact with blood is kept germ-free to prevent infection.

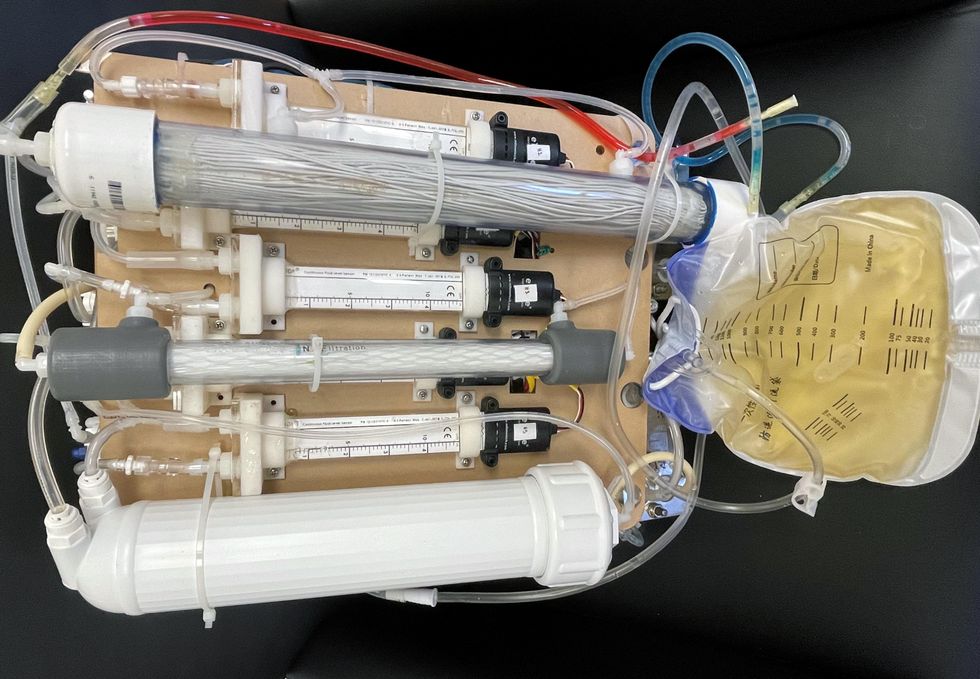

As part of last year’s KidneyX Prize, a partnership between the U.S. Department of Health and Human Services and the American Society of Nephrology, inventors were challenged to create prototypes for artificial kidneys. Himmelfarb’s team at the University of Washington’s Center for Dialysis Innovation won the prize by focusing on miniaturizing existing technologies to create a portable dialysis machine. The backpack-sized AKTIV device (Ambulatory Kidney to Increase Vitality) will recycle dialysate in a closed-loop system that removes urea from blood and uses light-based chemical reactions to convert the urea to nitrogen and carbon dioxide, which allows the dialysate to be recirculated.
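The article doesn't give the AKTIV's reaction chemistry, but the overall bookkeeping of turning urea into nitrogen and carbon dioxide can be checked with a balanced equation. The version below assumes, purely for illustration, that oxygen is the oxidant; the actual light-driven process may involve different reactants and intermediates.

```latex
2\,\mathrm{CO(NH_2)_2} + 3\,\mathrm{O_2} \longrightarrow 2\,\mathrm{N_2} + 2\,\mathrm{CO_2} + 4\,\mathrm{H_2O}
```

Each side carries 2 carbon, 4 nitrogen, 8 hydrogen, and 8 oxygen atoms. Under this assumption, all of urea's nitrogen leaves as nitrogen gas, consistent with the dialysate being cleaned and recirculated rather than discarded.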

Himmelfarb says that the AKTIV can be used at home, at work, or while traveling, which would give users more flexibility and freedom. “If you had a 30-pound device that you could put in the overhead bins when traveling, you could go visit your grandkids,” he says.

Kurtz’s team at UCLA partnered with the U.S. Kidney Research Corporation and Arkansas University to develop a dialysate-free desktop device (about the size of a small printer) as the first phase of a progression that he hopes will lead to something small and implantable. Part of the reason for the artificial kidney’s size, Kurtz says, is the number of functions his team is cramming into it. Not only will it filter urea from blood, but it will also use electricity to help regulate electrolyte levels in a process called electrodeionization. Kurtz emphasizes that these additional functions are what make his design a true artificial kidney instead of just a small dialysis machine.

One version of an artificial kidney. (Photo: UCLA)

“It doesn't have just a static function. It has a bank of sensors that measure chemicals in the blood and feeds that information back to the device,” Kurtz says.
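Kurtz's description, sensors measuring blood chemistry and feeding the readings back to the device, is a closed feedback loop. The sketch below shows the simplest version of that idea, a clamped proportional controller, under loudly hypothetical assumptions: the units, target, gain, limit, and the toy "patient" model in `simulate` are invented for illustration and say nothing about how the UCLA device, or any medical device, actually works.

```python
from dataclasses import dataclass


@dataclass
class ProportionalController:
    target: float      # desired sensor reading (hypothetical units)
    gain: float        # actuator output per unit of error (hypothetical)
    max_output: float  # hard cap on the actuator setting

    def step(self, reading: float) -> float:
        """Return an actuator setting proportional to how far the reading is above target."""
        error = reading - self.target
        # Clamp: never negative (this toy actuator only removes), never above the cap.
        return min(max(self.gain * error, 0.0), self.max_output)


def simulate(controller: ProportionalController, level: float,
             steps: int, removal_per_unit: float = 0.1) -> float:
    """Toy plant: each step, the level drops in proportion to the actuator output."""
    for _ in range(steps):
        level -= removal_per_unit * controller.step(level)
    return level


ctrl = ProportionalController(target=4.0, gain=2.0, max_output=5.0)
print(ctrl.step(5.0))                     # 2.0
print(ctrl.step(3.5))                     # 0.0 (below target: do nothing)
print(round(simulate(ctrl, 6.0, 50), 3))  # settles near the 4.0 target
```

Proportional control is used here only because it is the easiest loop to read; the clamp stands in for the kind of hard safety limits a real device would need.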

Other startups are getting in on the game. Nephria Bio, a spinout from the South Korea-based EOFlow, is working to develop a wearable dialysis device, akin to an insulin pump, that uses miniature cartridges with nanomaterial filters to clean blood (Harhay is a scientific advisor to Nephria). Ian Welsford, Nephria’s co-founder and CTO, says that the device’s design means that it can also be used to treat acute kidney injuries in resource-limited settings. That potential has drawn interest and investment in artificial kidneys from the U.S. Department of Defense.

For his part, Burton is most interested in an implantable device, as that would give him the most freedom. Even having a regular outpatient procedure to change batteries or filters would be a minor inconvenience to him.

“Being plugged into a machine, that’s not mimicking life,” he says.

Today’s more than 20,000 mental health apps have a wide range of functionalities and business models. Many of them can be useful for depression.

Even before the pandemic created a need for more telehealth options, depression was a hot area of research for app developers. Given the high prevalence of depression and its connection to suicidality — especially among today’s teenagers and young adults who grew up with mobile devices, use them often, and experience these conditions with alarming frequency — apps for depression could be not only useful but lifesaving.

“For people who are not depressed, but have been depressed in the past, the apps can be helpful for maintaining positive thinking and behaviors,” said Andrea K. Wittenborn, PhD, director of the Couple and Family Therapy Doctoral Program and a professor in human development and family studies at Michigan State University. “For people who are mildly to severely depressed, apps can be a useful complement to working with a mental health professional.”

Health and fitness apps, in general, number in the hundreds of thousands. These are driving a market expected to reach $102.45 billion by next year. The mobile mental health app market is a small part of this but still sizable at $500 million, with revenues generated through user health insurance, employers, and direct payments from individuals.

Apps can provide data that health professionals cannot gather on their own. People’s constant interaction with smartphones and wearable devices yields data on many health conditions for millions of patients in their natural environments and while they go about their usual activities. Compared with the in-office measurements of weight and blood pressure and the brevity of doctor-patient interactions, the thousands of data points gathered unobtrusively over an extended time period provide a far better and more detailed picture of the person and their health.

Building on three decades of research since early “apps” were used for delivering treatment manuals to health professionals, today’s more than 20,000 mental health apps have a wide range of functionalities and business models. Many of these apps can be useful for depression.

Some apps primarily provide a virtual connection to a group of mental health professionals employed or contracted by the app. Others have options for meditation, sleeping or, in the case of industry leaders Calm and Headspace, overall well-being. On the cutting edge are apps that detect changes in a person’s use of mobile devices and their interactions with them.

Apps such as AbleTo, Happify Health, and Woebot Health focus on cognitive behavioral therapy, a type of counseling with proven potential to change a person’s behaviors and feelings. “CBT has been demonstrated in innumerable studies over the last several decades to be effective in the treatment of behavioral health conditions such as depression and anxiety disorders,” said Dr. Reena Pande, chief medical officer at AbleTo. “CBT is intended to be delivered as a structured intervention incorporating key elements, including behavioral activation and adaptive thinking strategies.”

These CBT skills help break the negative self-talk (rumination) common in patients with depression. They are taught and reinforced by some self-guided apps, using either artificial intelligence or programmed interactions with users. Apps can address loneliness and isolation through connections with others, even when a symptomatic person doesn’t feel like leaving the house.

At their most advanced level, apps for mental health, including depression, passively gather data on how the user touches and interacts with the mobile device, looking for changes in “digital biomarkers” that can be associated with the onset or worsening of depressive symptoms and other cognitive conditions. In one study, Mindstrong Health gathered a year’s worth of data on how people use their smartphones, such as scrolling through articles, typing, and clicking. Mindstrong, whose founders include former leaders of the National Institutes of Health, modeled the timing and order of these actions to make assessments that correlated closely with gold-standard tests of cognitive function.
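To make "modeling the timing and order of these actions" more concrete, here is a hypothetical sketch that turns a stream of timestamped phone interactions into a few summary features. The event format, event names, and features are assumptions for illustration; the article doesn't describe Mindstrong's actual features or model, and features like these would only be inputs to a model validated against clinical measures.

```python
from statistics import mean, median
from typing import Dict, List, Tuple

# Each event: (timestamp in seconds, event type), e.g. (12.4, "tap"). Hypothetical format.
Event = Tuple[float, str]


def interaction_features(events: List[Event]) -> Dict[str, float]:
    """Summarize the timing and ordering of interaction events."""
    if len(events) < 2:
        raise ValueError("need at least two events")
    events = sorted(events)  # order by timestamp
    gaps = [later[0] - earlier[0] for earlier, later in zip(events, events[1:])]
    # Fraction of consecutive events where the action type changes (a crude "order" signal).
    switches = sum(1 for earlier, later in zip(events, events[1:]) if earlier[1] != later[1])
    return {
        "mean_gap_s": mean(gaps),
        "median_gap_s": median(gaps),
        "max_gap_s": max(gaps),
        "switch_rate": switches / len(gaps),
    }


session = [(0.0, "tap"), (0.5, "tap"), (1.5, "scroll"), (3.0, "tap")]
print(interaction_features(session))
```

One plausible design choice is to compute such features per session or per day and compare them against each person's own baseline, since typical interaction speed varies from person to person.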

National organizations of mental health professionals have been following the expanding number of available apps over the years with keen interest. App Advisor is an initiative of the American Psychiatric Association that helps psychiatrists and other mental health professionals navigate the issues raised by mobile health technology. App Advisor does not rate or recommend particular apps but rather provides guidance about why apps should be assessed and how health professionals can do this.

A website that does review mental health apps is One Mind PsyberGuide, an independent nonprofit that partners with several national organizations. Users can select among numerous search terms for the condition and therapeutic approach of interest. Apps are rated on a five-point scale, with reviews written by professionals in the field.

Do mental health apps related to depression have the kind of safety and effectiveness data required for medications and other medical interventions? Not always — and not often. Yet the overall results have shown early promise, Wittenborn noted.

“Studies that have attempted to detect depression from smartphone and wearable sensors [during a single session] have ranged in accuracy from about 86 to 89 percent,” Wittenborn said. “Studies that tried to predict changes in depression over time have been less accurate, with accuracy ranging from 59 to 85 percent.”

The Food and Drug Administration encourages the development of apps and has approved a few of them—mostly ones used by health professionals—but it is generally “hands off,” according to the American Psychiatric Association. The FDA has published a list of examples of software (including programming of apps) that it does not plan to regulate because they pose low risk to the public. First on the list is software that helps patients with diagnosed psychiatric conditions, including depression, maintain their behavioral coping skills by providing a “Skill of the Day” technique or message.

On its App Advisor site, the American Psychiatric Association says mental health apps can be dangerous or cause harm in multiple ways, such as by providing false information, overstating the app’s therapeutic value, selling personal data without clearly notifying users, and collecting data that isn’t relevant to mental health.

Although there is currently reason for caution, patients may eventually come to expect mental health professionals to recommend apps, especially as their rating systems, features and capabilities expand. Through such apps, patients might experience more and higher quality interactions with their mental health professionals. “Apps will continue to be refined and become more effective through future research,” said Wittenborn. “They will become more integrated into practice over time.”