How a Deadly Fire Gave Birth to Modern Medicine

The Cocoanut Grove fire in Boston in 1942 tragically claimed 490 lives, but was the catalyst for several important medical advances.

On the evening of November 28, 1942, more than 1,000 revelers from the Boston College-Holy Cross football game jammed into the Cocoanut Grove, Boston's oldest nightclub. When a spark from faulty wiring accidentally ignited an artificial palm tree, the packed nightspot, which was designed to accommodate only about 500 people, was quickly engulfed in flames. In the ensuing panic, hundreds of people were trapped inside, with most exit doors locked. Bodies piled up by the only open entrance, jamming the exits, and 490 people ultimately died in the worst fire in the country in forty years.

"People couldn't get out," says Dr. Kenneth Marshall, a retired plastic surgeon in Boston and president of the Cocoanut Grove Memorial Committee. "It was a tragedy of mammoth proportions."

Within half an hour of the start of the blaze, the Red Cross mobilized more than five hundred volunteers in what one newspaper called a "Rehearsal for Possible Blitz." The mayor of Boston imposed martial law. More than 300 victims, many of whom subsequently died, were taken to Boston City Hospital in one hour, averaging one victim every eleven seconds, while Massachusetts General Hospital admitted 114 victims in two hours. In the hospitals, 220 victims clung precariously to life, in agonizing pain from massive burns, their bodies ravaged by infection.

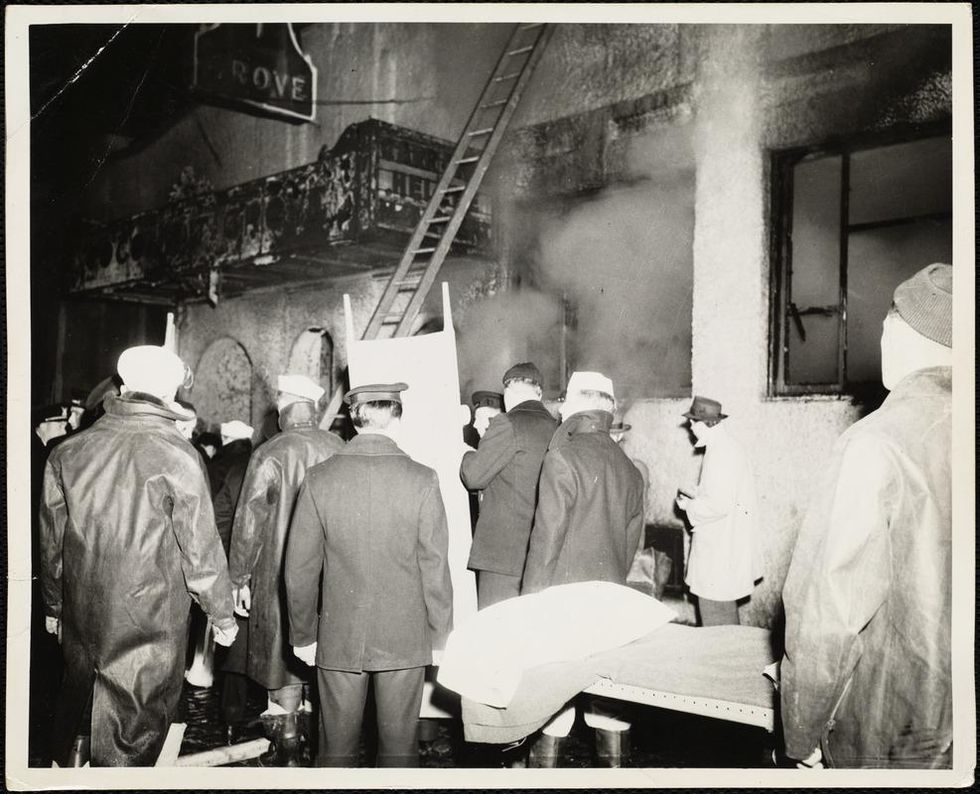

The scene of the fire.

Boston Public Library

Tragic Losses Prompted Revolutionary Leaps

But there is a silver lining: this horrific disaster prompted dramatic changes in safety regulations to prevent another catastrophe of this magnitude and led to the development of medical techniques that eventually saved millions of lives. It transformed burn care treatment and the use of plasma on burn victims, but most importantly, it introduced to the public a new wonder drug that revolutionized medicine, midwifed the birth of the modern pharmaceutical industry, and nearly doubled life expectancy, from 48 years at the turn of the 20th century to 78 years in the post-World War II years.

The devastating grief of the survivors also led to the first published study of post-traumatic stress disorder by pioneering psychiatrist Alexandra Adler, daughter of famed Viennese psychoanalyst Alfred Adler, who was a student of Freud. Dr. Adler studied the anxiety and depression that followed this catastrophe, according to the New York Times, and "later applied her findings to the treatment of World War II veterans."

Dr. Ken Marshall is intimately familiar with the lingering psychological trauma of enduring such a disaster. His mother, an Irish immigrant and a nurse in the surgical wards at Boston City Hospital, was on duty that cold Thanksgiving weekend night, and didn't come home for four days. "For years afterward, she'd wake up screaming in the middle of the night," recalls Dr. Marshall, who was four years old at the time. "Seeing all those bodies lined up in neat rows across the City Hospital's parking lot, still in their evening clothes. It was always on her mind and memories of the horrors plagued her for the rest of her life."

The sheer magnitude of casualties prompted overwhelmed physicians to try experimental new procedures that were later successfully used to treat thousands of battlefield casualties. Instead of cutting off blisters and using dyes and tannic acid to treat burned tissues, which can harden the skin, they applied gauze coated with petroleum jelly. Doctors also refined the formula for using plasma (the fluid portion of blood, and a medical technology that was just four years old) to replenish bodily liquids that evaporated because of the loss of the protective covering of skin.

"Every war has given us a new medical advance. And penicillin was the great scientific advance of World War II."

"The initial insult with burns is a loss of fluids and patients can die of shock," says Dr. Ken Marshall. "The scientific progress that was made by the two institutions revolutionized fluid management and topical management of burn care forever."

Still, they could not halt the staph infections that kill most burn victims—which prompted the first civilian use of a miracle elixir that was being secretly developed in government-sponsored labs and that ultimately ushered in a new age in therapeutics. Military officials quickly realized this disaster could provide an excellent natural laboratory to test the effectiveness of this drug and see if it could be used to treat the acute traumas of combat in this unfortunate civilian approximation of battlefield conditions. At the time, the very existence of this wondrous medicine—penicillin—was a closely guarded military secret.

From Forgotten Lab Experiment to Wonder Drug

In 1928, Alexander Fleming discovered the curative powers of penicillin, which promised to eradicate infectious pathogens that killed millions every year. But the road to mass-producing enough of the highly unstable mold was littered with seemingly insurmountable obstacles, and it remained a forgotten laboratory curiosity for over a decade. Fleming never gave up, however, and penicillin's eventual rescue from obscurity was a landmark in scientific history.

In 1940, a group at Oxford University, funded in part by the Rockefeller Foundation, isolated enough penicillin to test it on twenty-five mice, which had been infected with lethal doses of streptococci. Its therapeutic effects were miraculous—the untreated mice died within hours, while the treated ones played merrily in their cages, undisturbed. Subsequent tests on a handful of patients, who were brought back from the brink of death, confirmed that penicillin was indeed a wonder drug. But Britain was then being ravaged by the German Luftwaffe during the Blitz, and there were simply no resources to devote to penicillin during the Nazi onslaught.

In June of 1941, two of the Oxford researchers, Howard Florey and Ernst Chain, embarked on a clandestine mission to enlist American aid. Samples of the temperamental mold were stored in their coats. By October, the Roosevelt Administration had recruited four companies—Merck, Squibb, Pfizer and Lederle—to team up in a massive, top-secret development program. Merck, which had more experience with fermentation procedures, swiftly pulled away from the pack and every milligram they produced was zealously hoarded.

After the nightclub fire, the government ordered Merck to dispatch to Boston whatever supplies of penicillin it could spare and to refine any crude penicillin broth brewing in its fermentation vats. After workers labored in round-the-clock relays for three days, a refrigerated truck containing thirty-two liters of injectable penicillin left Merck's Rahway, New Jersey, plant on the evening of December 1, 1942. It was accompanied by a convoy of police escorts through four states before arriving in the pre-dawn hours at Massachusetts General Hospital. Dozens of people were rescued from near-certain death in the first public demonstration of the powers of the antibiotic, and the existence of penicillin could no longer be kept secret from inquisitive reporters and an exultant public. The next day, the Boston Globe called it "priceless" and Time magazine dubbed it a "wonder drug."

Within fourteen months, penicillin production had escalated exponentially, yielding enough of the drug to save the lives of thousands of soldiers, including many from the Normandy invasion. And in October 1945, just weeks after the Japanese surrender ended World War II, Alexander Fleming, Howard Florey and Ernst Chain were awarded the Nobel Prize in medicine. But penicillin didn't just save lives; it helped build some of the most innovative medical and scientific companies in history, including Merck, Pfizer, Glaxo and Sandoz.

"Every war has given us a new medical advance," concludes Marshall. "And penicillin was the great scientific advance of World War II."

Dana Lewis, pictured in Mount Vernon in 2017, worked with her engineer husband to design an artificial pancreas system to manage her type 1 diabetes.

For years, a continuous glucose monitor would beep at night if Dana Lewis' blood sugar measured too high or too low. At age 14, she was diagnosed with type 1 diabetes, an autoimmune disease that destroys insulin-producing cells in the pancreas.

But being a sound sleeper, the Seattle-based independent researcher, now 30, feared not waking up. That concerned her most on days when she ran, after which her glucose would drop overnight. Now, she rarely needs a rousing reminder to alert her to out-of-range blood glucose levels.

That's because Lewis and her husband, Scott Leibrand, a network engineer, developed an artificial pancreas system—an algorithm that calculates adjustments to insulin delivery based on data from the continuous glucose monitor and her insulin pump. When the monitor gives a reading, she no longer needs to press a button. The algorithm tells the pump how much insulin to release while she's sleeping.

"Most of the time, it's preventing the frequent occurrences of high or low blood sugars automatically," Lewis explains.

Like other do-it-yourself device innovations, home-designed artificial pancreas systems are not approved by the Food and Drug Administration, so individual users assume any associated risks. Experts recommend that patients consult their doctor before adopting a new self-monitoring approach and keep the clinician apprised of their progress.

DIY closed-loop systems can be uniquely challenging, according to the FDA. Patients may not fully comprehend how the devices are intended to work or they may fail to recognize the limitations. The systems have not been evaluated under quality control measures and pose risks of inappropriate dosing from the automated algorithm or potential incompatibility with a patient's other medications, says Stephanie Caccomo, an FDA spokeswoman.

Earlier this month, in fact, the FDA issued its first warning to the DIY diabetic community, which includes thousands of users, after one patient suffered an accidental insulin overdose.

Patients who built their own systems from scratch may be better versed in the operations, while those who are implementing unapproved designs created by others are less likely to be familiar with their intricacies, she says.

"Malfunctions or misuse of automated-insulin delivery systems can lead to acute complications of hypo- and hyperglycemia that may result in serious injury or death," Caccomo cautions. "FDA provides independent review of complex systems to assess the safety of these nontransparent devices, so that users do not have to be software/hardware designers to get the medical devices they need."

Only one hybrid closed-loop technology—the MiniMed 670G System from Minneapolis-based Medtronic—has been FDA-approved for type 1 use since September 2016. The term "hybrid" indicates that the system is not a fully automatic closed loop; it still requires minimal input from patients, including the need to enter mealtime carbohydrates, manage insulin dosage recommendations, and periodically calibrate the sensor.

Meanwhile, some tech-savvy people with type 1 diabetes have opted to design their own systems. About one-third of the DIY diabetes loopers are children whose parents have built them a closed system, according to Lewis' website.

Lewis began developing her system in 2014, well before Medtronic's device hit the market. "The choice to wait is not a luxury," she says, noting that "diabetes is inherently dangerous," whether an individual relies on a device to inject insulin or administers it with a syringe.

Hybrid closed-loop insulin delivery improves glucose control while decreasing the risk of low blood sugar in patients of various ages with less than optimally controlled type 1 diabetes, according to a study published in The Lancet last October. The multi-center randomized trial, conducted in the United Kingdom and the United States, spanned 12 weeks and included adults, adolescents, and children aged 6 years and older.

"We have compelling data attesting to the benefits of closed-loop systems," says Daniel Finan, research director at JDRF (formerly the Juvenile Diabetes Research Foundation) in New York, a global organization that funded the study.

Medtronic's system costs between $6,000 and $9,000. However, end-user pricing varies based on an individual's health plan. It is covered by most insurers, according to the device manufacturer.

To give users more choice, in 2017 JDRF launched the Open Protocol Automated Insulin Delivery Systems initiative to collaborate with the FDA and experts in the do-it-yourself arena. The organization hopes to "forge a new regulatory paradigm," Finan says.

As diabetes management becomes more user-controlled, there is a need for better coordination. "We've had insulin pumps for a very long time, but having sensors that can detect blood sugars in real time is still a very new phenomenon," says Leslie Lam, interim chief in the division of pediatric endocrinology and diabetes at The Children's Hospital at Montefiore in the Bronx, N.Y.

"There's a lag in the integration of this technology," he adds. Innovators are indeed working to bring new products to market, "but on the consumer side, people want that to be here now instead of a year or two later."

The devices aren't foolproof, and mishaps can occur even with very accurate systems. For this reason, there is some reluctance to advocate for universal use in children with type 1 diabetes. Supervision by a parent, school nurse, and sometimes a coach would be a prudent precaution, Lam says.

Remaining aware of blood sugar levels and having a backup plan are essential. "People still need to know how to give injections the old-school way," he says.

To ensure readings are correct on Medtronic's device, users should check their blood sugar with traditional finger pricking at least five or six times per day—before every meal and whenever directed by the system, notes Elena Toschi, an endocrinologist and director of the Young Adult Clinic at Joslin Diabetes Center, an affiliate of Harvard Medical School.

"There can be pump failure and cross-talking failure," she cautions, urging patients not to stop being vigilant because they are using an automated device. "This is still something that can happen; it doesn't eliminate that."

While do-it-yourself devices help promote autonomy and offer convenience, the lack of clinical trial data makes it difficult for clinicians and patients to assess risks versus benefits, says Lisa Eckenwiler, an associate professor in the departments of philosophy and health administration and policy at George Mason University in Fairfax, Va.

"What are the responsibilities of physicians in that context to advise patients?" she questions. Some clinicians foresee the possibility that "down the road, if things go awry" with disease management, that could place them "in a moral quandary."

Whether it's controlling diabetes, obesity, heart disease or asthma, emerging technologies are having a major influence on individuals' abilities to stay on top of their health, says Camille Nebeker, an assistant professor in the School of Medicine at the University of California, San Diego, and founder and director of its Research Center for Optimal Data Ethics.

People engage in "this work because they are either curious about it themselves or not getting the care they need from the health care system, or both," she says. In "citizen science communities," they may partner in participant-led research while gaining access to scientific and technical expertise. Others "may go it alone in solo self-tracking studies or developing do-it-yourself technologies," which raises concerns about whether they are carefully considering potential risks and weighing them against possible benefits.

Dana Lewis admits that "using do-it-yourself systems might not be for everyone. But the advances made in the do-it-yourself community show what's possible for future commercial developments, and give a lot of hope for improved quality of life for those of us living with type 1 diabetes."

The Grim Reaper Can Now Compost Your Body

An artist's rendering of a future Recompose facility in Washington state, with reusable modular vessels that convert human remains to soil.

Ultra-green Seattle isn't just getting serious about living eco-friendly, but dying that way, too. As of this week, Washington is officially the first state to allow citizens to compost their own dead bodies.

The Lowdown

Keep in mind this doesn't mean dumping your relative in a nearby river. Scientists and organizations have ways to help Mother Nature process the remains. For instance, the late actor Luke Perry reportedly was buried in a mushroom suit. Perry's garment is completely biodegradable, and the attached microorganisms help the body decompose cleanly and efficiently.

A biodegradable burial requires only a fraction of the energy used for cremation and can save a metric ton of CO2. The body decomposes in about a month. Besides a mushroom suit, another option coming down the pike in Washington state is to have your body converted directly into soil in a special facility.

A pilot study last summer by a public benefit corporation called Recompose signed up six terminally ill people who donated their remains for such research. Their bodies, including bones, were converted into clean, odorless soil free of harmful pathogens. That soil, about a cubic yard per person, could then be returned after 30 days to the subjects' families.

Green burials open the door to creative memorials. A tree or garden could be planted with your soil. This method provides a climate-friendly alternative to traditional funerals, circumventing toxic embalming fluid, expensive casket materials and other ecological overhead. The fertile soil could also be given to conservationist organizations.

Next Up

The new legislation in Washington will take effect May 1, 2020. The Pacific Northwest state has one of the highest cremation rates in the nation at 78 percent, second only to Nevada. Rising concern about climate change and increased interest in death management will only speed this discussion to the forefront in other states.

It's also worth noting Perry wasn't buried in Washington state, but in Tennessee. It is unknown where exactly he was laid to rest, or whether it was done under a legal precedent or special exception.

According to the Green Burial Council, rules on how and where you can bury someone vary by state. Home burials are usually legal, but they require establishing an official cemetery area on the property. Legislation on how someone is buried is even more in flux. There will be new discussions about how neighbors contend with nearby decomposing bodies, legal limitations to private burial techniques, and other issues never addressed before in modern mainstream America.

Open Questions

It's unclear whether green burials will become commonplace for those with fewer financial means or less access. Mushroom suits average a couple thousand dollars, making them more expensive than a low-end casket. There are also the less obvious expenses, including designating the place of burial and getting proper burial support and guidance. In short, you likely won't go to the local funeral home and be taken care of properly. It is still experimental.

As for "natural organic reduction" (converting human remains to soil in reusable modular vessels), Recompose is still figuring out its pricing for Washington residents, but expects the service to cost more than cremation and less than a conventional burial.

For now, environmentally sustainable death care may be comparable to vegetarianism in the 1970s or solar paneling in the 1980s: a discussion among urbanites and upwardly mobile financial classes, but not yet an accessible option for the average American. It's not a coincidence that the new Washington law received support in Seattle, one of the top 10 wealthiest cities in America. A similar push may take off in less affluent areas if ecological concerns drive a demand for affordable green burial options.

Until then, your neighborhood mortician still has the death business on lock.