How a Deadly Fire Gave Birth to Modern Medicine

The Cocoanut Grove fire in Boston in 1942 tragically claimed 490 lives, but was the catalyst for several important medical advances.

On the evening of November 28, 1942, more than 1,000 revelers from the Boston College-Holy Cross football game jammed into the Cocoanut Grove, Boston's oldest nightclub. When a spark from faulty wiring accidentally ignited an artificial palm tree, the packed nightspot, which was only designed to accommodate about 500 people, was quickly engulfed in flames. In the ensuing panic, hundreds of people were trapped inside, with most exit doors locked. Bodies piled up by the only open entrance, jamming the exits, and 490 people ultimately died in the worst fire in the country in forty years.

"People couldn't get out," says Dr. Kenneth Marshall, a retired plastic surgeon in Boston and president of the Cocoanut Grove Memorial Committee. "It was a tragedy of mammoth proportions."

Within half an hour of the start of the blaze, the Red Cross mobilized more than five hundred volunteers in what one newspaper called a "Rehearsal for Possible Blitz." The mayor of Boston imposed martial law. More than 300 victims, many of whom subsequently died, were taken to Boston City Hospital in one hour, averaging one victim every eleven seconds, while Massachusetts General Hospital admitted 114 victims in two hours. In the hospitals, 220 victims clung precariously to life, in agonizing pain from massive burns, their bodies ravaged by infection.

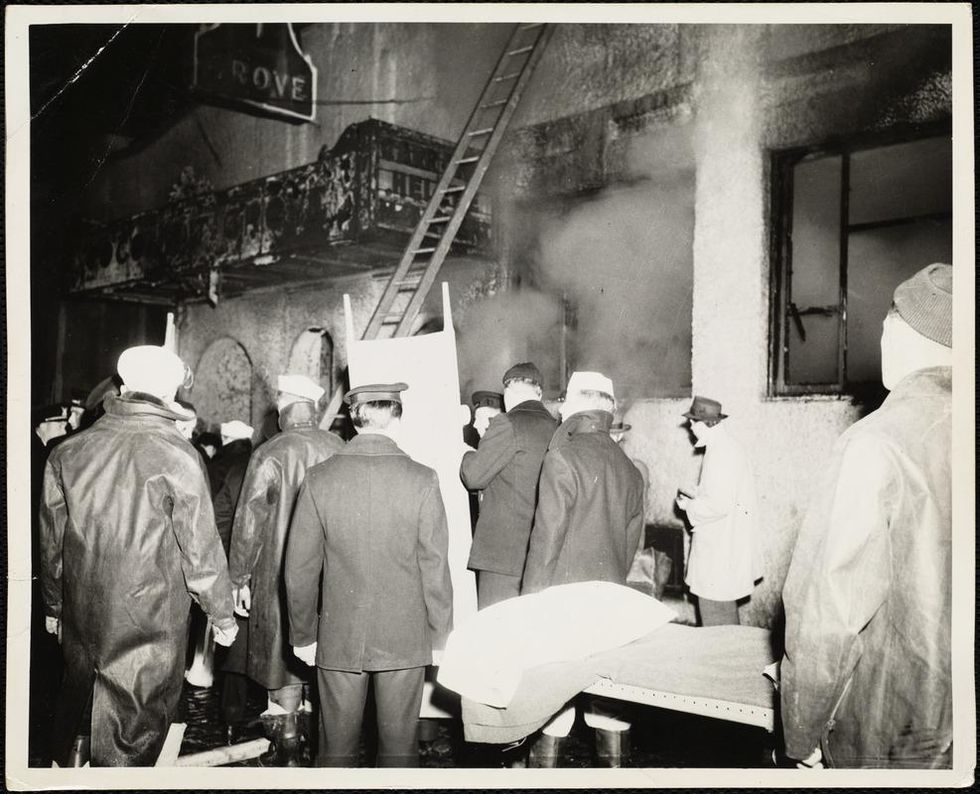

The scene of the fire.

Boston Public Library

Tragic Losses Prompted Revolutionary Leaps

But there is a silver lining: this horrific disaster prompted dramatic changes in safety regulations to prevent another catastrophe of this magnitude and led to the development of medical techniques that eventually saved millions of lives. It transformed burn care treatment and the use of plasma on burn victims, but most importantly, it introduced to the public a new wonder drug that revolutionized medicine, midwifed the birth of the modern pharmaceutical industry, and helped raise life expectancy from 48 years at the turn of the 20th century to 78 years in the post-World War II years.

The devastating grief of the survivors also led to the first published study of post-traumatic stress disorder by pioneering psychiatrist Alexandra Adler, daughter of famed Viennese psychoanalyst Alfred Adler, who was a student of Freud. Dr. Adler studied the anxiety and depression that followed this catastrophe, according to the New York Times, and "later applied her findings to the treatment of World War II veterans."

Dr. Ken Marshall is intimately familiar with the lingering psychological trauma of enduring such a disaster. His mother, an Irish immigrant and a nurse in the surgical wards at Boston City Hospital, was on duty that cold Thanksgiving weekend night, and didn't come home for four days. "For years afterward, she'd wake up screaming in the middle of the night," recalls Dr. Marshall, who was four years old at the time. "Seeing all those bodies lined up in neat rows across the City Hospital's parking lot, still in their evening clothes. It was always on her mind and memories of the horrors plagued her for the rest of her life."

The sheer magnitude of casualties prompted overwhelmed physicians to try experimental new procedures that were later successfully used to treat thousands of battlefield casualties. Instead of cutting off blisters and using dyes and tannic acid to treat burned tissues, which can harden the skin, they applied gauze coated with petroleum jelly. Doctors also refined the formula for using plasma (the fluid portion of blood and a medical technology that was just four years old) to replenish bodily liquids that evaporated because of the loss of the protective covering of skin.

"The initial insult with burns is a loss of fluids and patients can die of shock," says Dr. Ken Marshall. "The scientific progress that was made by the two institutions revolutionized fluid management and topical management of burn care forever."

Still, they could not halt the staph infections that kill most burn victims—which prompted the first civilian use of a miracle elixir that was being secretly developed in government-sponsored labs and that ultimately ushered in a new age in therapeutics. Military officials quickly realized this disaster could provide an excellent natural laboratory to test the effectiveness of this drug and see if it could be used to treat the acute traumas of combat in this unfortunate civilian approximation of battlefield conditions. At the time, the very existence of this wondrous medicine—penicillin—was a closely guarded military secret.

From Forgotten Lab Experiment to Wonder Drug

In 1928, Alexander Fleming discovered the curative powers of penicillin, which promised to eradicate infectious pathogens that killed millions every year. But the road to mass-producing enough of the highly unstable mold was littered with seemingly insurmountable obstacles, and it remained a forgotten laboratory curiosity for over a decade. Still, Fleming never gave up, and penicillin's eventual rescue from obscurity was a landmark in scientific history.

In 1940, a group at Oxford University, funded in part by the Rockefeller Foundation, isolated enough penicillin to test it on twenty-five mice, which had been infected with lethal doses of streptococci. Its therapeutic effects were miraculous—the untreated mice died within hours, while the treated ones played merrily in their cages, undisturbed. Subsequent tests on a handful of patients, who were brought back from the brink of death, confirmed that penicillin was indeed a wonder drug. But Britain was then being ravaged by the German Luftwaffe during the Blitz, and there were simply no resources to devote to penicillin during the Nazi onslaught.

In June of 1941, two of the Oxford researchers, Howard Florey and Ernst Chain, embarked on a clandestine mission to enlist American aid. Samples of the temperamental mold were stored in their coats. By October, the Roosevelt Administration had recruited four companies—Merck, Squibb, Pfizer and Lederle—to team up in a massive, top-secret development program. Merck, which had more experience with fermentation procedures, swiftly pulled away from the pack and every milligram they produced was zealously hoarded.

After the nightclub fire, the government ordered Merck to dispatch to Boston whatever supplies of penicillin it could spare and to refine any crude penicillin broth brewing in its fermentation vats. After working in round-the-clock relays over the course of three days, on the evening of December 1st, 1942, a refrigerated truck containing thirty-two liters of injectable penicillin left Merck's Rahway, New Jersey plant. It was accompanied by a convoy of police escorts through four states before arriving in the pre-dawn hours at Massachusetts General Hospital. Dozens of people were rescued from near-certain death in the first public demonstration of the powers of the antibiotic, and the existence of penicillin could no longer be kept secret from inquisitive reporters and an exultant public. The next day, the Boston Globe called it "priceless" and Time magazine dubbed it a "wonder drug."

Within fourteen months, penicillin production had escalated dramatically, yielding enough to save the lives of thousands of soldiers, including many from the Normandy invasion. And in October 1945, just weeks after the Japanese surrender ended World War II, Alexander Fleming, Howard Florey and Ernst Chain were awarded the Nobel Prize in medicine. But penicillin didn't just save lives; it helped build some of the most innovative medical and scientific companies in history, including Merck, Pfizer, Glaxo and Sandoz.

"Every war has given us a new medical advance," concludes Marshall. "And penicillin was the great scientific advance of World War II."

A simple ten-minute universal cancer test whose result can be read by the human eye or an electronic device, published in Nature Communications (Dec 2018) by the Trau lab at the University of Queensland. Red indicates the presence of cancerous cells and blue doesn't.

Matt Trau, a professor of chemistry at the University of Queensland, stunned the science world back in December when the prestigious journal Nature Communications published his lab's discovery about a unique property of cancer DNA that could lead to a simple, cheap, and accurate test to detect any type of cancer in under 10 minutes.

Trau granted very few interviews in the wake of the news, but he recently opened up to leapsmag about the significance of this promising early research. Here is his story in his own words, as told to Editor-in-Chief Kira Peikoff.

There's been an incredible explosion of knowledge over the past 20 years, particularly since the genome was sequenced. The area of diagnostics has a tremendous amount of promise and has caught our lab's interest. If you catch cancer early, you can improve survival rates to as high as 98 percent, sometimes even now surpassing that.

My lab is interested in devices to improve the trajectory of cancer patients. So, once people get diagnosed, can we get really sophisticated information about the molecular origins of the disease, and can we measure it in real time? And then can we match that with the best treatment and monitor it in real time, too?

I think those approaches, also coupled with immunotherapy, where one dreams of monitoring the immune system simultaneously with the disease progress, will be the future.

But currently, the methodologies for cancer are still pretty old. So, for example, let's talk about biopsies in general. Liquid biopsy just means using a blood test or a urine test, rather than extracting out a piece of solid tissue. Now consider breast cancer. Still, the cutting-edge screening method is mammography or the physical interrogation for lumps. This has had a big impact in terms of early detection and awareness, but it's still primitive compared to interrogating, forensically, blood samples to look at traces of DNA.

Large machines like CAT scans, PET scans, MRIs, are very expensive and very subjective in terms of the operator. They don't look at the root causes of the cancer. Cancer is caused by changes in DNA. These can be changes in the hard drive of the DNA (the genomic changes) or changes in the apps that the DNA is running (the epigenetics and the transcriptomics).

We don't look at that now, even though we have, emerging, all of these technologies to do it, and those technologies are getting so much cheaper. I saw some statistics at a conference just a few months ago that, in the United States, less than 1 percent of cancer patients have their DNA interrogated. That's the current state-of-the-art in the modern medical system.

Professor Matt Trau, a cancer researcher at the University of Queensland in Australia.

(Courtesy)

Blood, as the highway of the body, is carrying all of this information. Cancer cells, if they are present in the body, are constantly getting turned over. When they die, they release their contents into the blood. Many of these cells end up in the urine and saliva. Having technologies that can forensically scan the highways looking for evidence of cancer is a little bit like looking for explosives at the airport. That's very valuable as a security tool.

The trouble is that there are thousands of different types of cancer. Going back to breast cancer, there's at least a dozen different types, probably more, and each of them changes the DNA (the hard drive of the disease) and the epigenetics (or the RAM memory). So one of the problems for diagnostics in cancer is to find something that is a signature of all cancers. That's been a really, really, really difficult problem.

Ours was a completely serendipitous discovery. What we found in the lab was this one marker that just kept coming up in all of the types of breast cancers we were studying.

No one believed it. I didn't believe it. I thought, "Gosh, okay, maybe it's a fluke, maybe it works just for breast cancer." So we went on to test it in prostate cancer, which is also many different types of diseases, and it seemed to be working in all of those. We then tested it further in lymphoma. Again, many different types of lymphoma. It worked across all of those. We tested it in gastrointestinal cancer. Again, many different types, and still, it worked, but we were skeptical.

Then we looked at cell lines, which are cells that have come from previous cancer patients, that we grow in the lab, but are used as model experimental systems. We have many of those cell lines, both ones that are cancerous, and ones that are healthy. It was quite remarkable that the marker worked in all of the cancer cell lines and didn't work in the healthy cell lines.

What could possibly be going on?

Well, imagine DNA as a piece of string, that's your hard drive. Epigenetics is like the beads that you put on that string. Those beads you can take on and off as you wish and they control which apps are run, meaning which genetic programs the cell runs. We hypothesized that for cancer, those beads cluster together, rather than being randomly distributed across the string.

The implications of this are profound. It means that DNA from cancer folds in water into three-dimensional structures that are very different from healthy cells' DNA. It's quite literally the needle in a haystack. Because when you do a liquid biopsy for early detection of cancer, most of the DNA from blood contains a vast abundance of healthy DNA. And that's not of interest. What's of interest is to find the cancerous DNA. That's there only in trace amounts.

Once we figured out what was going on, we could easily set up a system to detect the trace cancerous DNA. It binds to gold nanoparticles in water and changes color. The test takes 10 minutes, and you can detect it by eye. Red indicates cancer and blue doesn't.

We're very, very excited about where we go from here. We're starting to test the test on a greater number of cancers, in thousands of patient samples. We're looking to the scientific community to engage with us, and we're getting a really good response from groups around the world who are supplying more samples to us so we can test this more broadly.

We also are very interested in testing how early we can go with this test. Can we detect cancer through a simple blood test even before there are any symptoms whatsoever? If so, we might be able to convert a cancer diagnosis to something almost as good as a vaccine.

Of course, we have to watch what are called false positives. We don't want to be detecting people as positives when they don't have cancer, and so the technology needs to improve there. We see this version as the iPhone 1. We're interested in the iPhone 2, 3, 4, getting better and better.

Ultimately, I see this as something that would be like a pregnancy test you could take at your doctor's office. If it came back positive, your doctor could say, "Look, there's some news here, but actually, it's not bad news, it's good news. We've caught this so early that we will be able to manage this, and this won't be a problem for you."

If this were to be in routine use in the medical system, countless lives could be saved. Cancer is now becoming one of the biggest killers in the world. We're talking millions upon millions upon millions of people who are affected. This really motivates our work. We might make a difference there.

Kira Peikoff was the editor-in-chief of Leaps.org from 2017 to 2021. As a journalist, her work has appeared in The New York Times, Newsweek, Nautilus, Popular Mechanics, The New York Academy of Sciences, and other outlets. She is also the author of four suspense novels that explore controversial issues arising from scientific innovation: Living Proof, No Time to Die, Die Again Tomorrow, and Mother Knows Best. Peikoff holds a B.A. in Journalism from New York University and an M.S. in Bioethics from Columbia University. She lives in New Jersey with her husband and two young sons. Follow her on Twitter @KiraPeikoff.

A doctor cradles a newborn who is sick with measles.

Ethan Lindenberger, the Ohio teenager who sought out vaccinations after he was denied them as a child, recently testified before Congress about why his parents became anti-vaxxers. The trouble, he believes, stems from the pervasiveness of misinformation online.

"For my mother, her love and affection and care as a parent was used to push an agenda to create a false distress," he told the Senate Committee. His mother read posts on social media saying vaccines are dangerous, and that was enough to persuade her against them.

His story is an example of how widespread and harmful the current discourse on vaccinations is—and more importantly—how traditional strategies to convince people about the merits of vaccination have largely failed.

As responsible members of society, all of us have implicitly signed on to what ethicists call the "Social Contract": we agree to abide by certain moral and political rules of behavior. This is what our societal values, norms, and often governments are based upon. However, with the unprecedented rise of social media, alternative facts, and fake news, it is evident that our understanding and application of the social contract must also evolve.

Nowhere is this breakdown of societal norms more visible than in the failure to contain the spread of vaccine-preventable diseases like measles. What started off as unexplained episodes in New York City last October, mostly in communities that are under-vaccinated, has exploded into a national epidemic: 880 cases of measles across 24 states in 2019, according to the CDC (as of May 17, 2019). In fact, the United States is only eight months away from losing its "measles free" status, which would make it the second country in North and South America, after Venezuela, to lose that status.

The U.S. is not the only country facing this growing problem. Such constant and perilous reemergence of measles and other vaccine-preventable diseases in various parts of the world raises doubts about the efficacy of current vaccination policies. In addition to the loss of valuable life, these outbreaks lead to loss of millions of dollars in unnecessary expenditure of scarce healthcare resources. While we may be living through an age of information, we are also navigating an era whose hallmark is a massive onslaught on truth.

There is ample evidence on how these outbreaks start: low vaccination rates. At the same time, there is evidence that 'educating' people with facts about the benefits of vaccination may not be effective. Indeed, human reasoning has a limit, and facts alone rarely change a person's opinion. In a fascinating report by researchers from the University of Pennsylvania, a small experiment revealed how "behavioral nudges" could inform policy decisions around vaccination.

In the reported experiment, the vaccination rate for employees of a company increased by 1.5 percent when they were prompted to name the date when they planned to get their flu shot. In the same experiment, when employees were prompted to name both a date and a time for their planned flu shot, the vaccination rate increased by 4 percent.

This experiment is a part of an emerging field of behavioral economics—a scientific undertaking that uses insights from psychology to understand human decision-making. The field was born from a humbling realization that humans probably do not possess an unlimited capacity for processing information. Work in this field could inform how we can formulate vaccination policy that is effective, conserves healthcare resources, and is applicable to current societal norms.

Take, for instance, the case of human papillomavirus (HPV), which can cause several types of cancers in both men and women. Research into the quality of physician communication has repeatedly revealed how lukewarm recommendations for HPV vaccination by primary care physicians likely contribute to under-immunization of eligible adolescents and can cause confusion for parents.

A randomized trial revealed the subtle power of "announcements" – direct, brief, assertive statements by physicians that assumed parents were ready to vaccinate their children. These announcements increased vaccination rates by 5.4 percent. Lengthy, open-ended dialogues demonstrated no benefit in vaccination rates. It seems that uncertainty from the physician translates to unwillingness from a parent.

Choice architecture is another compelling concept. The premise is simple: We hardly make any of our decisions in a vacuum; the environment in which these decisions are made has an influence. If health systems were designed with these insights in mind, people would be more likely to make better choices, without being forced.

This theory, proposed by Richard Thaler, who won the 2017 Nobel Prize in Economics, was put to the test by physicians at the University of Pennsylvania. In their study, flu vaccination rates at primary care practices increased by 9.5 percent all because the staff implemented "active choice intervention" in their electronic health records—a prompt that nudged doctors and nurses to ask patients if they'd gotten the vaccine yet. This study illustrated how an intervention as simple as a reminder can save lives.

To be sure, some bioethicists do worry about implementing these policies. Are behavioral nudges akin to increased scrutiny or a burden for the disadvantaged? For example, would incentives to quit smoking unfairly target the poor, who are more likely to receive criticism for bad choices?

While this is a valid concern, behavioral economics offers one of the only ethical solutions to increasing vaccination rates by addressing the most critical—and often legal—challenge to universal vaccinations: mandates. Choice architecture and other interventions encourage and inform a choice, allowing an individual to retain his or her right to refuse unwanted treatment. This distinction is especially important, as evidence suggests that people who refuse vaccinations often do so as a result of cognitive biases – systematic errors in thinking resulting from emotional attachment or a lack of information.

For instance, people are prone to "confirmation bias," or a tendency to selectively believe in information that confirms their preexisting theories, rather than the available evidence. At the same time, people do not like mandates. In such situations, choice architecture provides a useful option: people are nudged to make the right choice via the design of health delivery systems, without needing policies that rely on force.

The measles outbreak is a sober reminder of how devastating it can be when the social contract breaks down and people fall prey to misinformation. But all is not lost. As we fight a larger societal battle against alternative facts, we now have another option in the trenches to subtly encourage people to make better choices.

Using insights from research in decision-making, we can all contribute meaningfully in controversial conversations with family, friends, neighbors, colleagues, and our representatives, and push for policies that protect those we care about. A little more than a hundred years ago, thousands of lives were routinely lost to preventable illnesses. We've come too far to let ignorance destroy us now.