How a Deadly Fire Gave Birth to Modern Medicine

The Cocoanut Grove fire in Boston in 1942 tragically claimed 490 lives, but was the catalyst for several important medical advances.

On the evening of November 28, 1942, more than 1,000 revelers from the Boston College-Holy Cross football game jammed into the Cocoanut Grove, Boston's oldest nightclub. When a spark from faulty wiring accidentally ignited an artificial palm tree, the packed nightspot, which was designed to accommodate only about 500 people, was quickly engulfed in flames. In the ensuing panic, hundreds of people were trapped inside, with most exit doors locked. Bodies piled up by the only open entrance, jamming the exits, and 490 people ultimately died in the worst fire in the country in forty years.

"People couldn't get out," says Dr. Kenneth Marshall, a retired plastic surgeon in Boston and president of the Cocoanut Grove Memorial Committee. "It was a tragedy of mammoth proportions."

Within half an hour of the start of the blaze, the Red Cross mobilized more than five hundred volunteers in what one newspaper called a "Rehearsal for Possible Blitz." The mayor of Boston imposed martial law. More than 300 victims, many of whom subsequently died, were taken to Boston City Hospital in one hour, averaging one victim every eleven seconds, while Massachusetts General Hospital admitted 114 victims in two hours. In the hospitals, 220 victims clung precariously to life, in agonizing pain from massive burns, their bodies ravaged by infection.

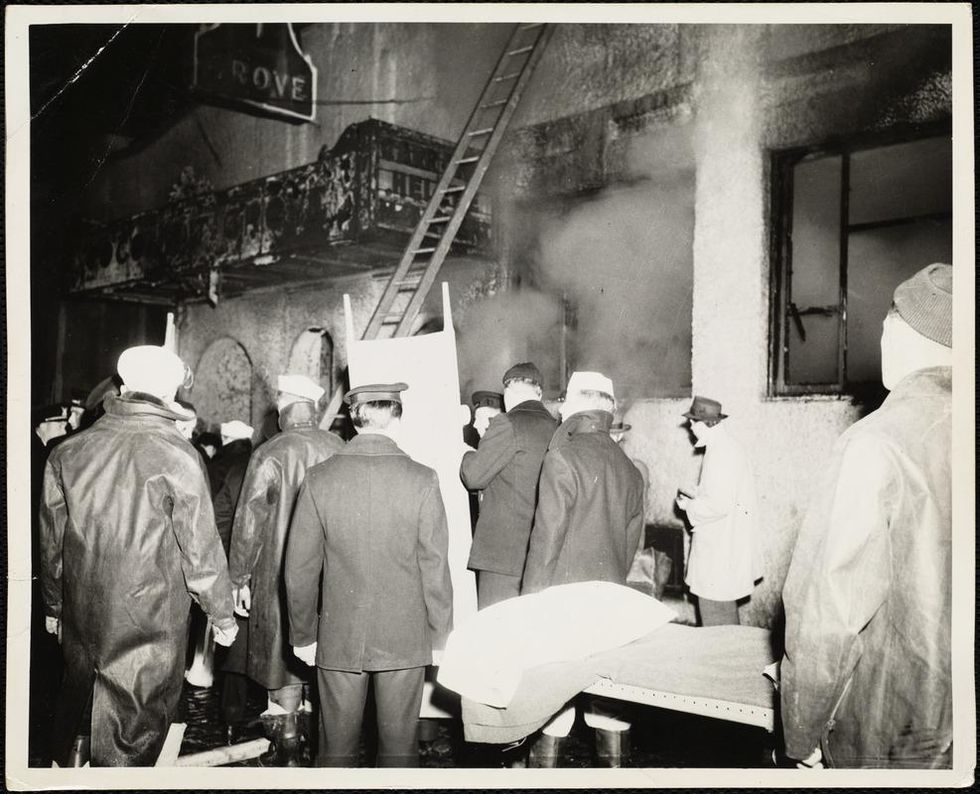

The scene of the fire.

Boston Public Library

Tragic Losses Prompted Revolutionary Leaps

But there is a silver lining: this horrific disaster prompted dramatic changes in safety regulations to prevent another catastrophe of this magnitude and led to the development of medical techniques that eventually saved millions of lives. It transformed burn care treatment and the use of plasma on burn victims, but most importantly, it introduced to the public a new wonder drug that revolutionized medicine, midwifed the birth of the modern pharmaceutical industry, and nearly doubled life expectancy, from 48 years at the turn of the 20th century to 78 years in the post-World War II years.

The devastating grief of the survivors also led to the first published study of post-traumatic stress disorder by pioneering psychiatrist Alexandra Adler, daughter of famed Viennese psychoanalyst Alfred Adler, who was a student of Freud. Dr. Adler studied the anxiety and depression that followed this catastrophe, according to the New York Times, and "later applied her findings to the treatment [of] World War II veterans."

Dr. Ken Marshall is intimately familiar with the lingering psychological trauma of enduring such a disaster. His mother, an Irish immigrant and a nurse in the surgical wards at Boston City Hospital, was on duty that cold Thanksgiving weekend night, and didn't come home for four days. "For years afterward, she'd wake up screaming in the middle of the night," recalls Dr. Marshall, who was four years old at the time. "Seeing all those bodies lined up in neat rows across the City Hospital's parking lot, still in their evening clothes. It was always on her mind and memories of the horrors plagued her for the rest of her life."

The sheer magnitude of casualties prompted overwhelmed physicians to try experimental new procedures that were later successfully used to treat thousands of battlefield casualties. Instead of cutting off blisters and treating burned tissues with dyes and tannic acid, which can harden the skin, they applied gauze coated with petroleum jelly. Doctors also refined the formula for using plasma (the fluid portion of blood, and a medical technology that was just four years old) to replenish bodily fluids that evaporated once the protective covering of skin was lost.

"The initial insult with burns is a loss of fluids and patients can die of shock," says Dr. Ken Marshall. "The scientific progress that was made by the two institutions revolutionized fluid management and topical management of burn care forever."

Still, they could not halt the staph infections that kill most burn victims—which prompted the first civilian use of a miracle elixir that was being secretly developed in government-sponsored labs and that ultimately ushered in a new age in therapeutics. Military officials quickly realized this disaster could provide an excellent natural laboratory to test the effectiveness of this drug and see if it could be used to treat the acute traumas of combat in this unfortunate civilian approximation of battlefield conditions. At the time, the very existence of this wondrous medicine—penicillin—was a closely guarded military secret.

From Forgotten Lab Experiment to Wonder Drug

In 1928, Alexander Fleming discovered the curative powers of penicillin, which promised to eradicate infectious pathogens that killed millions every year. But the road to mass-producing enough of the highly unstable mold was littered with seemingly insurmountable obstacles, and it remained a forgotten laboratory curiosity for over a decade. Fleming never gave up, however, and penicillin's eventual rescue from obscurity was a landmark in scientific history.

In 1940, a group at Oxford University, funded in part by the Rockefeller Foundation, isolated enough penicillin to test it on twenty-five mice, which had been infected with lethal doses of streptococci. Its therapeutic effects were miraculous—the untreated mice died within hours, while the treated ones played merrily in their cages, undisturbed. Subsequent tests on a handful of patients, who were brought back from the brink of death, confirmed that penicillin was indeed a wonder drug. But Britain was then being ravaged by the German Luftwaffe during the Blitz, and there were simply no resources to devote to penicillin during the Nazi onslaught.

In June of 1941, two of the Oxford researchers, Howard Florey and Ernst Chain, embarked on a clandestine mission to enlist American aid. Samples of the temperamental mold were stored in their coats. By October, the Roosevelt Administration had recruited four companies—Merck, Squibb, Pfizer and Lederle—to team up in a massive, top-secret development program. Merck, which had more experience with fermentation procedures, swiftly pulled away from the pack and every milligram they produced was zealously hoarded.

After the nightclub fire, the government ordered Merck to dispatch to Boston whatever supplies of penicillin it could spare and to refine any crude penicillin broth brewing in its fermentation vats. After three days of work in round-the-clock relays, on the evening of December 1st, 1942, a refrigerated truck containing thirty-two liters of injectable penicillin left Merck's Rahway, New Jersey plant. It was accompanied by a convoy of police escorts through four states before arriving in the pre-dawn hours at Massachusetts General Hospital. Dozens of people were rescued from near-certain death in the first public demonstration of the powers of the antibiotic, and the existence of penicillin could no longer be kept secret from inquisitive reporters and an exultant public. The next day, the Boston Globe called it "priceless" and Time magazine dubbed it a "wonder drug."

Within fourteen months, penicillin production had escalated exponentially, yielding enough to save the lives of thousands of soldiers, including many wounded in the Normandy invasion. And in October 1945, just weeks after the Japanese surrender ended World War II, Alexander Fleming, Howard Florey and Ernst Chain were awarded the Nobel Prize in medicine. But penicillin didn't just save lives; it helped build some of the most innovative medical and scientific companies in history, including Merck, Pfizer, Glaxo and Sandoz.

"Every war has given us a new medical advance," concludes Marshall. "And penicillin was the great scientific advance of World War II."

Your Future Smartphone May Detect Problems in Your Water

Biosensors on a touchscreen are showing promise for detecting arsenic and lead in water.

In 2014, the city of Flint, Michigan, switched its residents' water supply to the Flint River, citing lower costs. However, due to improper filtering, lead contaminated this water, and according to the Associated Press, many of the city's residents soon reported health issues like hair loss and rashes. In 2015, a report found that children there had high levels of lead in their blood. The Natural Resources Defense Council recently discovered there could still be as many as twelve million lead pipes carrying water to homes across the U.S.

What if Flint residents and others in afflicted areas could simply flick water onto their phone screens and an app would tell them if they were about to drink contaminated water? This is what researchers at the University of Cambridge are working on to prevent catastrophes like what occurred in Flint, and to prepare for an uncertain future of scarcer resources.

Underneath the tough glass of our phone screen lies a transparent layer of electrodes. Because our bodies hold an electric charge, when our finger touches the screen, it disrupts the electric field created among the electrodes. This is how the screen can sense where a touch occurs. Cambridge scientists used this same idea to explore whether the screen could detect charges in water, too. Metals like arsenic and lead can appear in water in the form of ions, which are charged particles. When the ionic solution is placed on the screen's surface, the electrodes sense that charge much as they sense our finger.
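For readers who think in code, the sensing idea can be sketched in a few lines of Python. This is a simplified illustration, not the Cambridge team's method: the grid size, the baseline and reading values, the threshold, and the function name `locate_disturbance` are all hypothetical. It treats the screen as a grid of electrode crossings and reports the crossing whose reading departs most from its undisturbed baseline.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]


def locate_disturbance(baseline: Grid, reading: Grid,
                       threshold: float = 0.5) -> Optional[Tuple[int, int]]:
    """Return (row, col) of the largest change from baseline, or None.

    Values are in arbitrary units; the threshold is an assumed number
    chosen only so that small fluctuations are ignored.
    """
    best_pos = None
    best_delta = threshold
    for r, (base_row, read_row) in enumerate(zip(baseline, reading)):
        for c, (base, read) in enumerate(zip(base_row, read_row)):
            delta = abs(read - base)
            if delta > best_delta:
                best_delta = delta
                best_pos = (r, c)
    return best_pos


# Hypothetical 3x3 screen: one crossing is clearly disturbed.
baseline = [[10.0, 10.0, 10.0],
            [10.0, 10.0, 10.0],
            [10.0, 10.0, 10.0]]
reading = [[10.0, 10.1, 10.0],
           [10.0, 10.0, 8.7],
           [10.0, 10.2, 10.0]]
print(locate_disturbance(baseline, reading))
```

In this toy version, a finger and a drop of ionic solution look the same to the code: each is simply a local departure from the baseline field.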

The experiment measured charges in various electrolyte solutions on a touchscreen. The researchers found that a thin polymer layer between the electrodes and the sample solution helped pick up the charges.

"How can we get really close to the touch electrodes, and be better than a phone screen?" Horstmann, the lead scientist on the study, asked himself while designing the protective coating. "We found that when we put electrolytes directly on the electrodes, they were too close, even short-circuiting," he said. When they placed the polymer layer on top of the electrodes, however, this short-circuiting did not occur. Horstmann speaks of the polymer layer as one of the key findings of the paper, as it allowed for optimum conductivity. The coating they designed was much thinner than what you'd see with a typical smartphone touchscreen, but because it's already so similar, he feels optimistic about the technology's practical applications in the real world.

While the Cambridge scientists were using touchscreens to measure water contamination, Dr. Baojun Wang, a synthetic biologist at the University of Edinburgh, along with his team, created a way to measure arsenic contamination in Bangladesh groundwater samples using what is called a cell-based biosensor. These biosensors use cornerstones of cellular activity like transcription and promoter sequences to detect the presence of metal ions in water. A promoter can be thought of as a "flag" that tells certain molecules where to begin copying genetic code. By hijacking this aspect of the cell's machinery and increasing the cell's sensing and signal processing ability, they were able to amplify the signal to detect tiny amounts of arsenic in the groundwater samples. All this was conducted in a 384-well plate, each well smaller than a pencil eraser.

They placed arsenic sensors with different sensitivities across part of the plate so it resembled a volume bar of increasing levels of arsenic, similar to diagnostics on a Fitbit or glucose monitor. The whole device is about the size of an iPhone, and can be scaled down to a much smaller size.
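One way to picture the volume-bar readout is as a row of sensors that each switch on at a different arsenic concentration. The Python sketch below is illustrative only and is not Dr. Wang's design: the threshold values, the arbitrary units, and the function names `bar_level` and `render_bar` are hypothetical.

```python
from typing import List

# Hypothetical switch-on concentrations for each sensor, lowest first.
# These numbers are placeholders in arbitrary units, not values from the study.
SENSOR_THRESHOLDS: List[float] = [5.0, 10.0, 50.0, 100.0, 500.0]


def bar_level(concentration: float,
              thresholds: List[float] = SENSOR_THRESHOLDS) -> int:
    """Count how many sensors would switch on at this concentration."""
    return sum(1 for t in thresholds if concentration >= t)


def render_bar(level: int, total: int = len(SENSOR_THRESHOLDS)) -> str:
    """Draw the bar as filled ('#') and empty ('.') cells."""
    return "#" * level + "." * (total - level)


for sample in (2.0, 12.0, 120.0):
    print(sample, render_bar(bar_level(sample)))
```

The more sensors a sample triggers, the longer the bar, which is what lets a reader judge the arsenic level at a glance without reading a number.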

Dr. Wang says cell-based biosensors are bringing sensing technology closer to field applications, because their machinery uses inherent cellular activity. This makes them ideal for low-resource communities, and he expects his device to be affordable, portable, and easily stored for widespread use in households.

"It hasn't worked on actual phones yet, but I don't see any reason why it can't be an app," says Horstmann of their technology. Imagine a new generation of smartphones with a designated area of the screen responsible for detecting contamination—this is one of the possible futures the researchers propose. But industry collaborations will be crucial to making their advancements practical. The scientists anticipate that without collaborative efforts from the business sector, the public might have to wait ten years until this becomes something all our smartphones are capable of—but with the right partners, "it could go really quickly," says Dr. Elizabeth Hall, one of the authors on the touchscreen water contamination study.

"That's where the science ends and the business begins," Dr. Hall says. "There is a lot of interest coming through as a result of this paper. I think the people who make the investments and decisions are seeing that there might be something useful here."

As for Flint, according to The Detroit News, the city has entered the final stages in removing lead pipe infrastructure. It's difficult to imagine how many residents might fare better today if they'd had the technology that scientists are now creating.

For all its tragedy, COVID-19 has increased demand for at-home testing methods, and that demand has carried over to non-COVID-19-related devices. Various testing efforts are now in the public eye.

"I like that the public is watching these directions," says Horstmann. "I think there's a long way to go still, but it's exciting."

Fungus is the ‘New Black’ in Eco-Friendly Fashion

On the left, a Hermès bag made using fine mycelium as a leather alternative, in partnership with the biotech company MycoWorks; on the right, a sheet of mycelium "leather."

A natural material that looks and feels like real leather is taking the fashion world by storm. Scientists view mycelium—the vegetative part of a mushroom-producing fungus—as a planet-friendly alternative to animal hides and plastics.

Products crafted from this vegan leather are emerging, with others poised to hit the market soon. Among them are the Hermès Victoria bag, Lululemon's yoga accessories, Adidas' Stan Smith Mylo sneaker, and a Stella McCartney apparel collection.

The Adidas Stan Smith Mylo concept sneaker, made in partnership with Bolt Threads, uses an alternative leather grown from mycelium; a commercial version is expected in the near future.

Adidas

Hermès has held presales on the new bag, says Philip Ross, co-founder and chief technology officer of MycoWorks, a San Francisco Bay Area firm whose material is used in the design. By year-end, Ross expects several more clients to debut mycelium-based merchandise. With "comparable qualities to luxury leather," mycelium can be molded to engineer "all the different verticals within fashion," he says, particularly footwear and accessories.

More than a half-dozen trailblazers are fine-tuning mycelium to create next-generation leather materials, according to the Material Innovation Initiative, a nonprofit advocating for animal-free materials in the fashion, automotive, and home-goods industries. These high-performance products can supersede items derived from leather, silk, down, fur, wool, and exotic skins, says A. Sydney Gladman, the initiative's chief scientific officer.

That's only the beginning of mycelium's untapped prowess. "We expect to see an uptick in commercial leather alternative applications for mycelium-based materials as companies refine their R&D [research and development] and scale up," Gladman says, adding that "technological innovation and untapped natural materials have the potential to transform the materials industry and solve the enormous environmental challenges it faces."

Reducing our carbon footprint becomes possible because mycelium can flourish in indoor farms, using agricultural waste as feedstock and producing inherently low greenhouse gas emissions. Carbon dioxide is the primary greenhouse gas. "We often think that when plant tissues like wood rot, that they go from something to nothing," says Jonathan Schilling, professor of plant and microbial biology at the University of Minnesota and a member of MycoWorks' Scientific Advisory Board.

But that assumption doesn't hold true for all carbon in plant tissues. When the fungi dominating the decomposition of plants fulfill their function, they transform a large portion of carbon into fungal biomass, Schilling says. That, in turn, ends up in the soil, with mycelium forming a network underneath that traps the carbon.

Producing styrofoam, leather and plastic requires large amounts of fossil fuels; creating similar materials with a fungal organism involves less fuel-intensive processing. While some fungi consist of a single cell, others are multicellular and develop as very fine threadlike structures. A mass of them collectively forms a "mycelium" that can be either loose and low density or tightly packed and high density. "When these fungi grow at extremely high density," Schilling explains, "they can take on the feel of a solid material such as styrofoam, leather or even plastic."

Tunable and supple in the cultivation process, mycelium is also reliably sturdy in composition. "We believe that mycelium has some unique attributes that differentiate it from plastic-based and animal-derived products," says Gavin McIntyre, who co-founded Ecovative Design, an upstate New York-based biomaterials company, in 2007 with the goal of displacing some environmentally burdensome materials and making "a meaningful impact on our planet."

After inventing a type of mushroom-based packaging for all sorts of goods, in 2013 the firm ventured into manufacturing mycelium that can be adapted for textiles, he says, because mushrooms are "nature's recycling system."

The company aims for its material—which is "so tough and tenacious" that it doesn't require any plastic add-on as reinforcement—to be generally accessible from a pricing standpoint and not confined to a luxury space. The cost, McIntyre says, would approach that of bovine leather, not the more upscale varieties of lamb and goat skins.

Already, production has taken off by leaps and bounds. In fewer than 10 days in indoor agricultural farms, "we grow large slabs of mycelium that are many feet wide and long," he says. "We are not confined to the shape or geometry of an animal," so there's a much lower scrap rate.

Decreasing the scrap rate is a major selling point. "Our customers can order the pieces to the way that they want them, and there is almost no waste in the processing," explains Ross of MycoWorks. "We can make ours thinner or thicker," depending on a client's specific needs. Growing materials locally also results in a reduction in transportation, shipping, and other supply chain costs, he says.

Yet another advantage to making things out of mycelium is its biodegradability at the end of an item's lifecycle. When a pair of old sneakers lands in a compost pile or landfill, it decomposes thanks to microbial processes that, once again, involve fungi. "It is cool to think that the same organism used to create a product can also be what recycles it, perhaps building something else useful in the same act," says biologist Schilling. That amounts to "more than a nice business model—it is a window into how sustainability works in nature."

A product can be called "sustainable" if it's biodegradable, leaves a minimal carbon footprint during production, and is also profitable, says Preeti Arya, an assistant professor at the Fashion Institute of Technology in New York City and faculty adviser to a student club of the American Association of Textile Chemists and Colorists.

On the opposite end of the spectrum, products composed of petroleum-based polymers don't biodegrade—they break down into smaller pieces or even particles. These remnants pollute landfills, oceans, and rivers, contaminating edible fish and eventually contributing to the growth of benign and cancerous tumors in humans, Arya says.

Commending the steps a few designers have taken toward bringing more environmentally conscious merchandise to consumers, she says, "I'm glad that they took the initiative because others also will try to be part of this competition toward sustainability." And consumers will take notice. "The more people become aware, the more these brands will start acting on it."

A further shift toward mycelium-based products has the capability to reap tremendous environmental dividends, says Drew Endy, associate chair of bioengineering at Stanford University and president of the BioBricks Foundation, which focuses on biotechnology in the public interest.

The continued development of "leather surrogates on a scaled and sustainable basis will provide the greatest benefit to the greatest number of people, in perpetuity," Endy says. "Transitioning the production of leather goods from a process that involves the industrial-scale slaughter of vertebrate mammals to a process that instead uses renewable fungal-based manufacturing will be more just."